Vision Algorithms for Embedded Vision


Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. As embedded vision implementations broaden, existing high-level algorithms may not fit within a system's constraints, and new approaches are needed to achieve the desired results.
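The following is a minimal sketch of a 3x3 spatial filter in C, showing what such a pixel-processing operation involves. It makes several assumptions:

- The input is an 8-bit grayscale image stored row-major.
- The kernel uses integer weights with a caller-chosen divisor.
- Border pixels are copied unchanged.
- The function name filter3x3 is hypothetical.

Strictly speaking, the function computes a correlation, which is the same as a convolution for the symmetric kernels (blur, sharpen) most often used.

```c
#include <stdint.h>

/* Hypothetical helper for illustration; not taken from any library named
 * in the text. Applies a 3x3 integer kernel to an 8-bit grayscale image
 * (row-major). Each interior output pixel is the weighted sum of its 3x3
 * neighborhood divided by `divisor`, clamped to 0..255. Border pixels,
 * where the window would fall off the image, are copied unchanged. */
void filter3x3(const uint8_t *src, uint8_t *dst, int width, int height,
               const int kernel[3][3], int divisor)
{
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            int i = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[i] = src[i];
                continue;
            }
            /* Nine multiply-accumulates per output pixel. */
            int sum = 0;
            for (int ky = -1; ky <= 1; ky++)
                for (int kx = -1; kx <= 1; kx++)
                    sum += kernel[ky + 1][kx + 1] * src[(y + ky) * width + (x + kx)];
            sum /= divisor;
            /* Clamp to the valid 8-bit range. */
            if (sum < 0) sum = 0;
            if (sum > 255) sum = 255;
            dst[i] = (uint8_t)sum;
        }
    }
}
```

Here are two example kernels:

- An all-ones kernel with divisor 9 gives a box blur.
- {{0,-1,0},{-1,5,-1},{0,-1,0}} with divisor 1 sharpens.

Either way, every output pixel costs nine multiply-accumulates plus a divide. That is the kind of per-pixel load that can strain an embedded system's budget.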

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
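The sketch below illustrates one kind of hardware-oriented rewrite. It is a generic technique, not one attributed to any vendor mentioned here. It computes a 3x3 Gaussian blur, kernel (1/16)·[1 2 1; 2 4 2; 1 2 1], as two separable [1 2 1] passes using only additions and bit shifts, with no multiplies or divides. The function name gauss3x3_shift and the caller-supplied scratch buffer are assumptions made for this sketch. Shift-and-add arithmetic and row-then-column passes of this kind tend to map well onto DSPs and FPGA pipelines.

```c
#include <stdint.h>

/* Hypothetical example of a hardware-friendly rewrite (illustrative only).
 * 3x3 Gaussian blur computed as a horizontal [1 2 1] pass followed by a
 * vertical [1 2 1] pass, using only adds and shifts. `tmp` is caller-supplied
 * scratch space with width*height entries. Border pixels are copied. */
void gauss3x3_shift(const uint8_t *src, uint8_t *dst, uint16_t *tmp,
                    int width, int height)
{
    /* Horizontal pass: left + 2*center + right (max 1020, fits 16 bits). */
    for (int y = 0; y < height; y++)
        for (int x = 1; x < width - 1; x++) {
            const uint8_t *p = &src[y * width + x];
            tmp[y * width + x] = (uint16_t)(p[-1] + (p[0] << 1) + p[1]);
        }

    for (int y = 0; y < height; y++)
        for (int x = 0; x < width; x++) {
            int i = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[i] = src[i]; /* copy border pixels unchanged */
                continue;
            }
            /* Vertical pass: up + 2*center + down (max 4080), then divide
             * by 16 with rounding via (+8) >> 4; result never exceeds 255. */
            unsigned sum = tmp[i - width] + (tmp[i] << 1) + tmp[i + width];
            dst[i] = (uint8_t)((sum + 8) >> 4);
        }
}
```

Splitting the kernel this way replaces nine multiply-accumulates and a divide per pixel with a few adds and shifts. The cost is one intermediate buffer. In a streaming FPGA design, that buffer is typically just a few line buffers.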

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, because Alliance Members share this information directly with the vision community.
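The text does not name a specific edge detector, so this sketch assumes the Sobel operator as a common general-purpose choice. The C function (the name sobel_edges is hypothetical) approximates the gradient magnitude as |Gx| + |Gy| rather than sqrt(Gx² + Gy²). This avoids a square root, a simplification often used on fixed-point hardware. It also sets border pixels to zero.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical Sobel edge detector for illustration (not from any library
 * named in the text). Input and output are 8-bit grayscale, row-major.
 * Output is |Gx| + |Gy|, clamped to 255; border pixels are set to 0. */
void sobel_edges(const uint8_t *src, uint8_t *dst, int width, int height)
{
    for (int y = 0; y < height; y++)
        for (int x = 0; x < width; x++) {
            int i = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                dst[i] = 0;
                continue;
            }
            const uint8_t *up = &src[i - width], *mid = &src[i], *dn = &src[i + width];
            /* Horizontal gradient: right column minus left column, weights 1 2 1. */
            int gx = (up[1] + 2 * mid[1] + dn[1]) - (up[-1] + 2 * mid[-1] + dn[-1]);
            /* Vertical gradient: bottom row minus top row, weights 1 2 1. */
            int gy = (dn[-1] + 2 * dn[0] + dn[1]) - (up[-1] + 2 * up[0] + up[1]);
            int mag = abs(gx) + abs(gy);
            dst[i] = (uint8_t)(mag > 255 ? 255 : mag);
        }
}
```

Each output pixel depends only on its own 3x3 neighborhood. This independence is what lets hardware-optimized versions compute many pixels in parallel.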

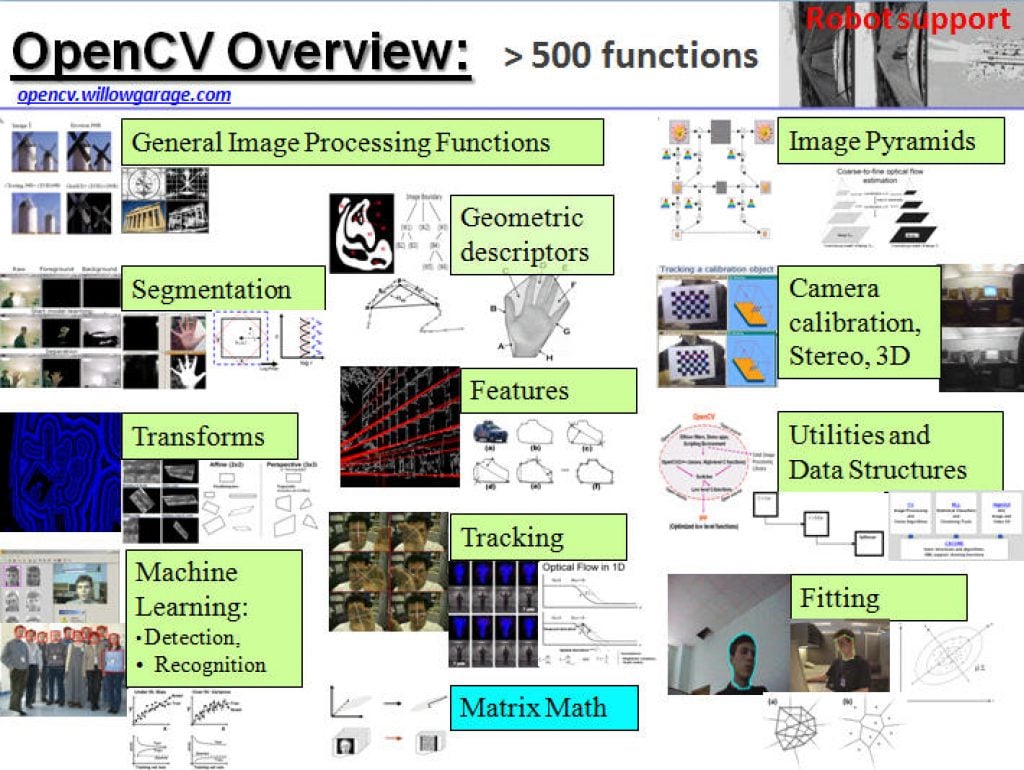

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open source. It was originally written in C, but its primary interface is now C++, with bindings for Python and other languages. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated on GPUs through general-purpose GPU (GPGPU) computing. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms in its Computer Vision System Toolbox. It also allows vendors to create their own libraries of functions optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx provides customers with an optimized computer vision library in the form of plug-and-play IP cores for building hardware-accelerated vision algorithms in an FPGA.

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters
