Yesterday morning, Embedded Vision Alliance founder Jeff Bier and I had the honor and pleasure of spending around an hour with Jitendra Malik, Arthur J. Chick Professor of EECS at the University of California at Berkeley for the past 25 years and one of the world's foremost computer vision researchers. I hope to have the video of the ~45 minute interview posted on the EVA website within a week; for now, I'll share a brief excerpt from the transcript of Malik's thoughts:

It's worth keeping in mind how difficult the problem is. Something like 30-50% of the human brain is devoted to visual processing. The human brain is a remarkable instrument. It's the result of billions of years of evolution. The computing power associated with this 30% of the brain is quite significant. We have on the order of a hundred billion neurons in the brain, and each of these neurons may be connected to 10,000 other neurons. And if we think that the computing power resides in the synapses, then we are talking of 10^15 units.

Now, let's think about where computers are today. Now we can have devices which are 10^9 units quite readily. And these devices' transistors are faster than neurons. So essentially we get a little gain from that, when designing artificial systems. And now, say, we put a thousand of today's machines together, or maybe five years from now, we are approaching the kind of computing power we need.
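To make Malik's back-of-envelope estimate concrete, here is a minimal Python sketch of the arithmetic. The input figures come from the remarks above; the variable names are my own, and reading the leftover factor as something transistor speed has to help cover is my interpretation, not a claim from the interview.

```python
# Back-of-envelope arithmetic from the quoted remarks.
# Variable names are illustrative; only the input figures come from the interview.

neurons = 100 * 10**9            # "on the order of a hundred billion neurons"
connections_per_neuron = 10_000  # "connected to 10,000 other neurons"

# If the computing power resides in the synapses, count one unit per connection.
brain_units = neurons * connections_per_neuron

device_units = 10**9             # units readily available in one of today's devices
machines = 1_000                 # "a thousand of today's machines together"
cluster_units = device_units * machines

# Remaining gap in raw unit count (assumption: this is the part that faster
# transistors, or a few more years of scaling, would need to help close).
remaining_factor = brain_units // cluster_units

print(f"brain units:      {brain_units:.0e}")
print(f"cluster units:    {cluster_units:.0e}")
print(f"remaining factor: {remaining_factor}")
```

In raw unit count alone, a thousand such machines still falls short of the brain estimate, which is presumably why the transistor speed advantage and the "five years from now" qualifier both matter to the argument.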

A processing innovation unveiled by IBM earlier today may bring that aspiration to reality more quickly than previously forecast. It was announced on the same day as the 23rd IEEE Hot Chips Conference, where IBM presented on 'The IBM Blue Gene/Q Compute chip+SIMD floating-point unit', and it comes out of IBM's SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics) project, which is partially funded by DARPA (the U.S. Defense Advanced Research Projects Agency). Here are some choice excerpts from the press release:

Today, IBM researchers unveiled a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition. The technology could yield many orders of magnitude less power consumption and space than used in today’s computers.

In a sharp departure from traditional concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry. Its first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember – and learn from – the outcomes, mimicking the brain’s structural and synaptic plasticity.

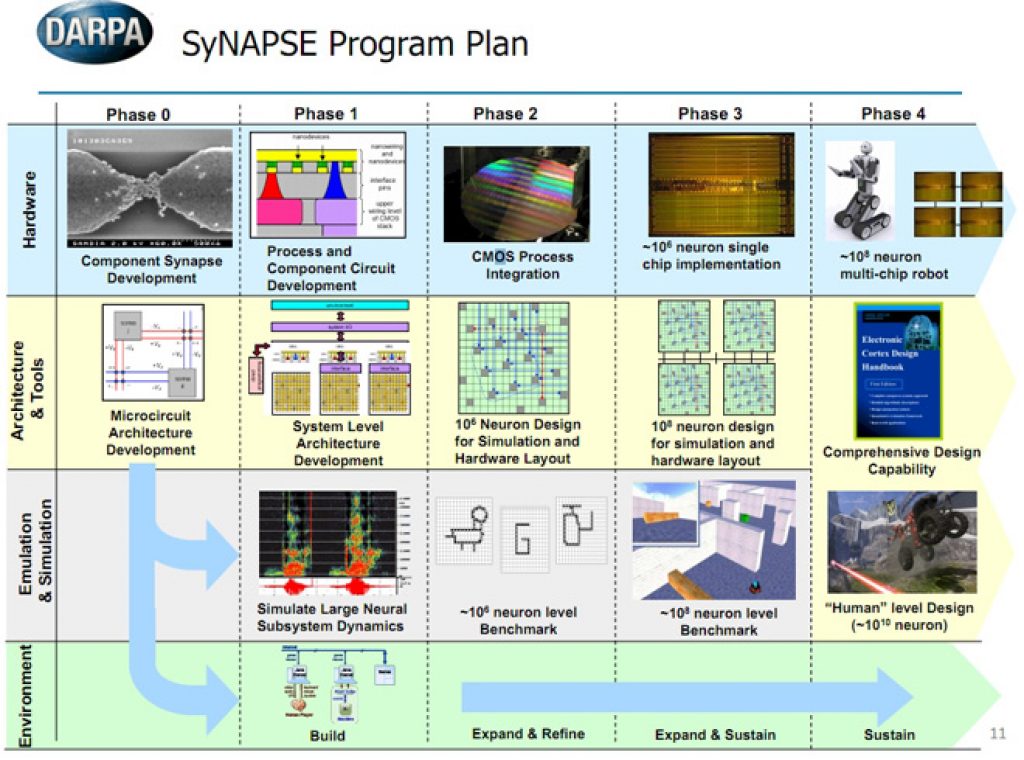

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment – all while rivaling the brain’s compact size and low power usage. The IBM team has already successfully completed Phases 0 and 1.

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up what is referred to as a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and contain 256 neurons. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.

IBM’s overarching cognitive computing architecture is an on-chip network of light-weight cores, creating a single integrated system of hardware and software. This architecture represents a critical shift away from traditional von Neumann computing to a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.

IBM’s long-term goal is to build a chip system with ten billion neurons and hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume. Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world's water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making. Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha [editor note: Dharmendra Modha, project leader for IBM Research].

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

The press release also notes that IBM's artificial intelligence research extends back to 1956, when the company performed the world's first large-scale (512 neuron) cortical simulation. I also have 'ancient history' personal experience with the technology. My first job out of college was with Intel Corporation, where I spent eight years working in the nonvolatile memory group. For the first several years, my focus was on UV-erasable EPROMs and OTP (one-time-programmable) ROMs; meanwhile, my peers in the electrically reprogrammable NVM group were evaluating 'pure' EEPROMs, flash memories, and neural networking derived from nonvolatile, in-system rewriteable memory.

The desired commercialization of neural network ICs and their associated software didn't come to pass quickly enough, in sufficiently high volumes, to warrant Intel's long-term investment. However, the folks focused on neural networking at Intel were not only technically competent but also deeply passionate about their work. As such, it's good to see IBM pick up the torch and move the technology forward.

For more on IBM's SyNAPSE project, be sure to check out Wired Magazine's interview with Dr. Modha.