I suspect that at least some of you are also subscribers to BDTI's InsideDSP monthly email newsletter and, as such, may have already seen the editorial in yesterday's edition from company president (and Embedded Vision Alliance founder) Jeff Bier. The title, 'I Know How You Feel,' is a clever teaser for the subject matter inside. Jeff explains how, courtesy of burgeoning embedded vision technology, devices will soon be able to add emotional state to the list of other characteristics they can already discern about a person whose face is exposed to an image sensor at a particular point in time:

- Human-ness (versus, say, canine-ness)

- Gender

- Age

- Ethnicity

- Individuality (e.g., "John A. Doe, 222 Main Street, Anytown, California")

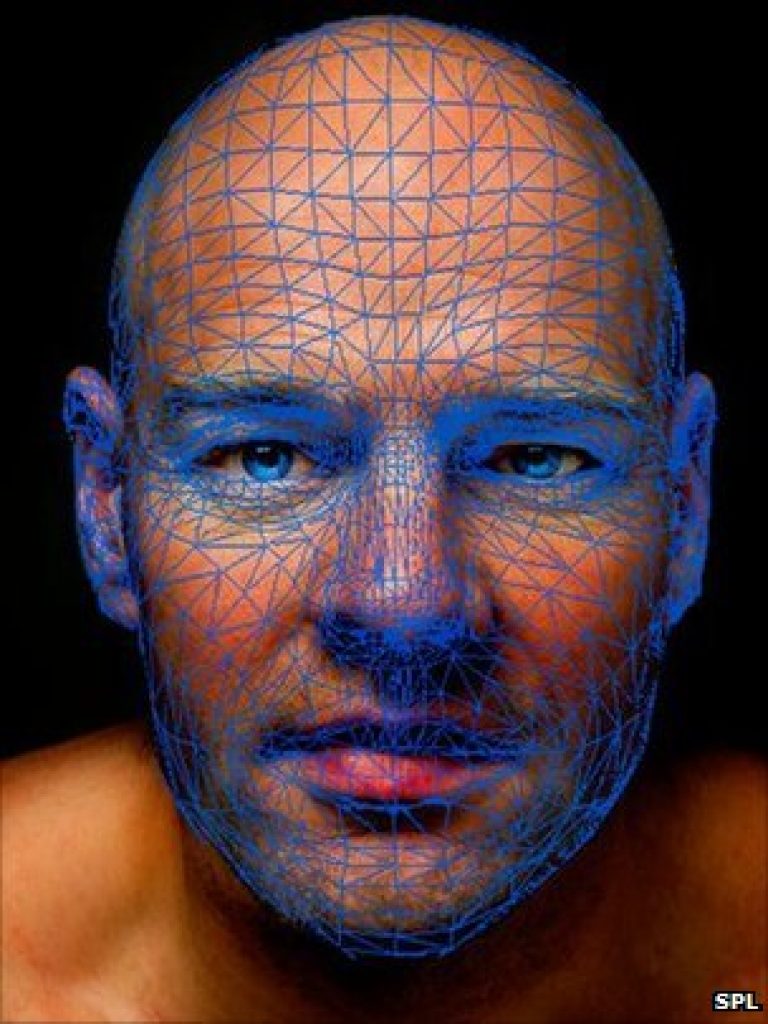

There's a "back story" to Jeff's editorial, actually. In mid-July, during my first week on the job, he and I (along with another BDTI employee) met with Tim Llewellynn, the founder of a small start-up company called nViso. The demonstration Tim provided us, running on an Nvidia GPU-based laptop (therefore leveraging the CUDA API) with a bezel-embedded webcam, was quite compelling. Simplistically speaking, the company's software maps a person's face to a set of data points, and then algorithmically ascertains the person's emotion from the relative orientations and frame-to-frame movement of those data points. Facial reflections of primary emotions are largely generic, irrespective of age, gender or cultural background, and the software auto-calibrates for various users' face sizes and feature locations.
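To make the "data points plus frame-to-frame movement" idea a bit more concrete, here is a minimal sketch of the geometric groundwork such a system might do. It is emphatically not nViso's method, which the company hasn't described in detail. It assumes a hypothetical landmark format (a list of (x, y) pixel coordinates, with the first two points being the eye centers), normalizes each face by its centroid and inter-ocular distance so that faces of different sizes and positions become comparable (one plausible form of "auto-calibration"), and then measures per-point motion between consecutive frames. Classifying those measurements into emotions is left out entirely.

```python
import math

# Hypothetical landmark layout, for illustration only: each face is a list of
# (x, y) pixel coordinates, and indices 0 and 1 are the left and right eye centers.
LEFT_EYE, RIGHT_EYE = 0, 1


def normalize(landmarks):
    """Translate landmarks to their centroid and scale by inter-ocular distance,
    so the same expression yields similar numbers regardless of face size or position."""
    n = len(landmarks)
    cx = sum(x for x, _ in landmarks) / n
    cy = sum(y for _, y in landmarks) / n
    (lx, ly), (rx, ry) = landmarks[LEFT_EYE], landmarks[RIGHT_EYE]
    scale = math.hypot(rx - lx, ry - ly)
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    return [((x - cx) / scale, (y - cy) / scale) for x, y in landmarks]


def frame_motion(prev, curr):
    """Per-point displacement between two consecutive normalized frames."""
    return [math.hypot(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in zip(prev, curr)]


if __name__ == "__main__":
    small = [(0, 0), (2, 0), (1, 2)]                     # toy three-point "face"
    large = [(10 + 2 * x, 20 + 2 * y) for x, y in small]  # same face, twice as big, shifted
    print(normalize(small))
    print(normalize(large))  # matches the line above, up to floating-point rounding
```

Because both faces normalize to the same coordinates, any downstream logic only has to reason about shape and motion, not about how close the user sits to the webcam.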

Although the nViso demo was, strictly speaking, a PC-based computer vision application, the company definitely plans to broaden its API support beyond Nvidia-proprietary CUDA to industry-standard OpenCL, as well as to target tablets, smartphones, and other embedded platforms. The potential applications for a technology like nViso's are quite staggering, once you ponder the possibilities for even a few minutes. One could imagine, for example, how interested a retailer might be in assessing a shopper's visceral response to an advertisement or other promotional setup, along with recognizing that shopper ("Hello! I see you're back! Still deciding whether to buy this product? Would a 10%-off coupon seal the deal?").

Consider, too, the intriguing possibilities inherent in weaving the player's emotional state into the plot line of a computer or console game, or other piece of on-the-fly-rendered entertainment content. Two Sony executives, as showcased on Slashdot late last month, recently predicted "that in merely ten years' time, video games will have the ability to read more than just movement on the part of the player. Reading player emotions will be a key feature that is possible now and might be implemented into games in the future." Perhaps someone at nViso should request a meeting with Sony, as a means of informing them that "ten years hence" is already here?

Finally, and ironically, considering that I'm writing this piece four days past the 10-year anniversary of the September 11, 2001 terrorist attacks: even if specific-individual-identifying facial recognition technology (at least at reasonable recognition speeds, and with sufficiently high accuracy) remains an in-progress aspiration, ascertaining a particular individual's degree of nervousness or other agitation is also a notably valuable goal. To wit, I encourage you to check out a more recent Slashdot showcase, which discusses an under-development vision-based lie detector setup that doesn't rely on traditional pulse rate, respiration, perspiration and other close-proximity techniques. From the summary:

> A sophisticated new camera system can detect lies just by watching our faces as we talk, experts say. The computerized system uses a simple video camera, a high-resolution thermal imaging sensor and a suite of algorithms. … It successfully discriminates between truth and lies in about two-thirds of cases, said lead researcher Professor Hassan Ugail from Bradford University. … We give our emotions away in our eye movements, dilated pupils, biting or pressing together our lips, wrinkling our noses, breathing heavily, swallowing, blinking and facial asymmetry. And these are just the visible signs seen by the camera. Even swelling blood vessels around our eyes betray us, and the thermal sensor spots them too.

P.S. Extra credit points to those who recognized the musical origins of this post's title!