Sometimes, I confess, I get a little jaded about technology…when it seems that all of the fundamental problems have been solved, and that we're all just waiting on Moore's Law semiconductor trends to enable the algorithm-implementation ICs to be sufficiently cost-effective, low-energy-consumptive, etc to enable broad market adoption. Sometimes…but then a news item drops into my inbox and resurrects my confidence in continued tech breakthroughs. From the Slashdot summary:

At the Association for Computing Machinery's Symposium on Discrete Algorithms (SODA) this week, a group of MIT researchers will present a new algorithm that, in a large range of practically important cases, improves on the fast Fourier transform. Under some circumstances, the improvement can be dramatic — a tenfold increase in speed. The new algorithm could be particularly useful for image compression, enabling, say, smartphones to wirelessly transmit large video files without draining their batteries or consuming their monthly bandwidth allotments.

Coverage at The Verge further elaborates on both the theory and implementation:

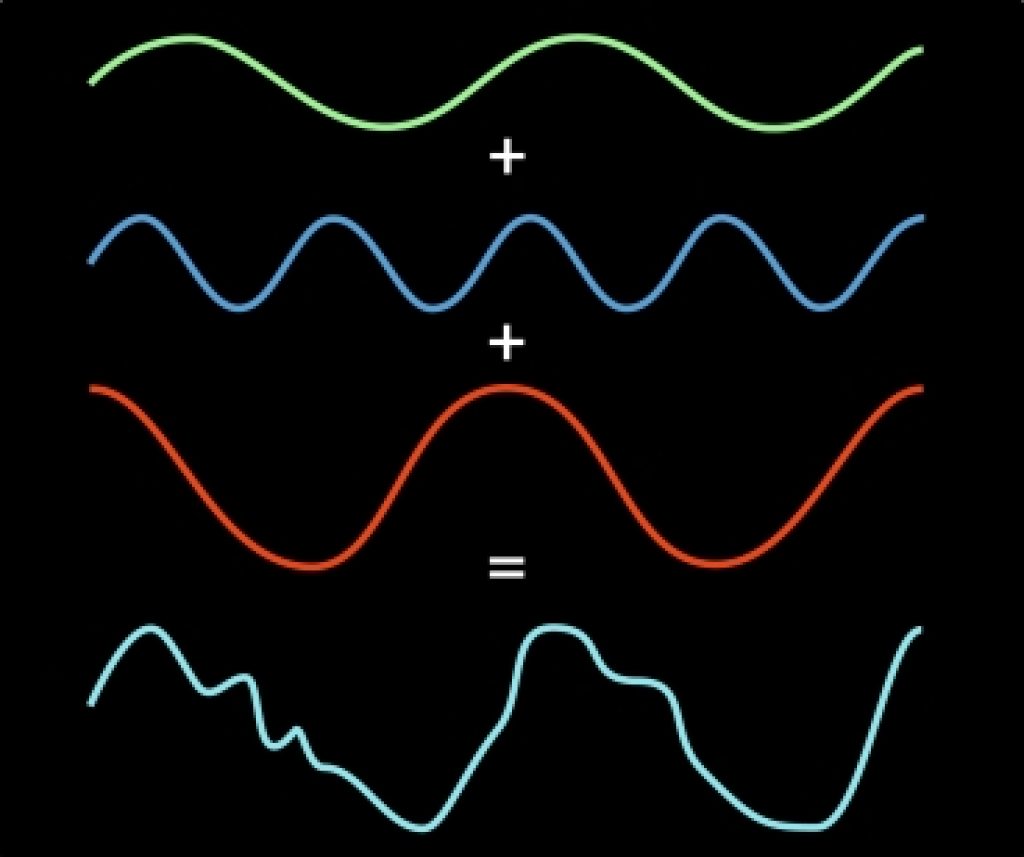

FFT has been used in compression and other fields already — once you split up a transmission into its composite parts, you can forget the less important frequencies and just send along the major players, reducing file sizes. This works for compression because most media is "sparse." For example, the researchers found that in a block of 64 pixels from a larger image, 57 could be discarded on average with little to no effect on the overall picture. With the researchers' new findings, they say there's now an even faster method to split signals and determine what's negligible.

The researchers claim to have improved on the FFT by more efficiently using filters to isolate smaller individual slivers of the signal which typically only have one predominate frequency. Once they've singled out a bit of the signal, there are two different methods (explained in two different papers) for determining what can be discarded. One keeps cutting down that sliver of data and retains the parts with the most powerful signal, and the other borrows a technique used in 4G data networks, called OFDMA [editor note: Orthogonal frequency-division multiple access]. The researchers claim that most kinds of data that you'd care about (including audio, images, and video) are "sparse," and therefore can be compressed with the new algorithm up to ten times faster.

Presumably (at least I would hope), the compression speed boost would come about with no (or at least no notable) corresponding decrease in image quality, a factor that's important both in photography and in various vision processing applications. For more information, check out the MIT News writeup or the even more detailed paper.