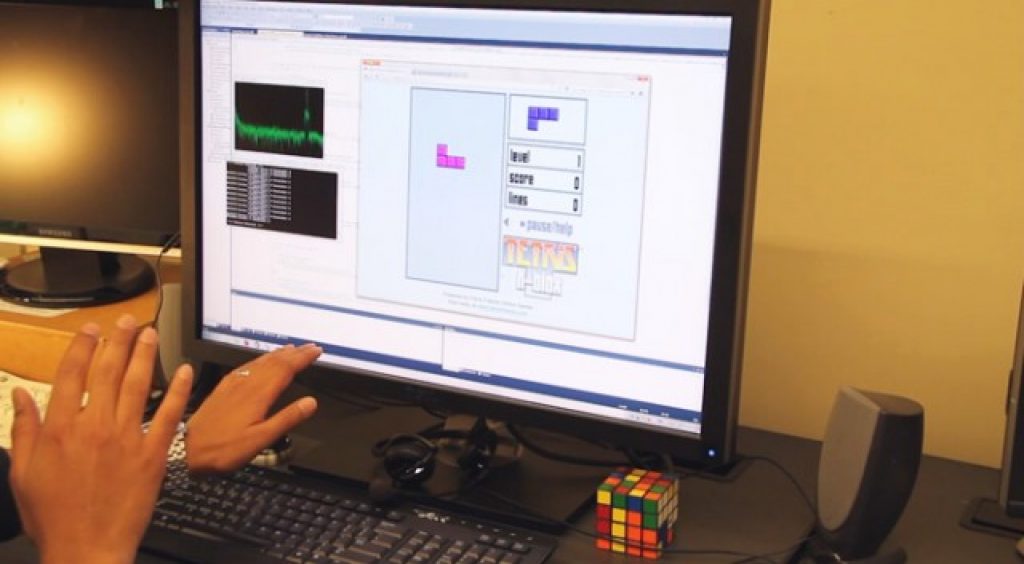

File this one under "cool concept; unclear implementation fit." Microsoft Research and the University of Washington have partnered to develop SoundWave, a proof-of-concept system that leverages a computer's microphone and speaker combo to implement rudimentary gesture control. Doppler shifts, akin to those exploited in sonar and astronomy, are at the root of the scheme. The speaker emits tones in the 18-22 kHz frequency range, and a hand moving nearby shifts the frequency of the sound it reflects. The microphone picks up the reflected audio, and software compares it against the emitted tone, thereby discerning the hand's distance and location along with the speed and direction of the gesture.
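To get a feel for the size of the effect, here is a minimal sketch of the underlying physics only, not SoundWave's actual algorithm. It assumes the speaker and microphone sit side by side, that the hand moves straight toward or away from them, and that sound travels at roughly 343 m/s in room-temperature air. The function names and the 5 Hz "still" threshold are hypothetical, chosen purely for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C


def reflected_doppler_shift_hz(tone_hz, hand_speed_m_s, c=SPEED_OF_SOUND_M_S):
    """Frequency shift of a tone reflected off a moving hand.

    Positive hand_speed_m_s means the hand approaches the (co-located)
    speaker and microphone; negative means it moves away.
    """
    if abs(hand_speed_m_s) >= c:
        raise ValueError("hand speed must be below the speed of sound")
    # The hand first acts as a moving receiver, then as a moving source,
    # so the reflected tone is scaled by (c + v) / (c - v).
    received_hz = tone_hz * (c + hand_speed_m_s) / (c - hand_speed_m_s)
    return received_hz - tone_hz


def classify_motion(shift_hz, threshold_hz=5.0):
    """Map a measured shift to a coarse gesture direction.

    threshold_hz is an arbitrary illustrative dead band, not a tuned value.
    """
    if shift_hz > threshold_hz:
        return "toward"
    if shift_hz < -threshold_hz:
        return "away"
    return "still"


if __name__ == "__main__":
    for speed in (0.5, 0.0, -0.5):
        shift = reflected_doppler_shift_hz(20_000.0, speed)
        print(f"{speed:+.1f} m/s -> {shift:+.1f} Hz ({classify_motion(shift)})")
```

Under these assumptions, a hand moving at half a meter per second nudges a 20 kHz tone by only several tens of hertz, so the software has to resolve fairly fine detail in the spectrum around the emitted tone.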

One issue I see upfront with the scheme is that it seemingly requires a fairly high-quality transducer set to both generate and capture such high-frequency sounds; the cheap speakers and microphones used with most computers probably aren't up to the task. Also, given the prevalence of webcams built not only into laptop bezels but also into standalone computer displays, an embedded vision-based gesture scheme would seem to be a more robust alternative, even more so if a depth-discerning image sensor were included in the mix.

Nonetheless, SoundWave is a nifty hack and might be valuable as a supplement to an embedded vision gesture interface algorithm, even if it's insufficiently robust in its own right. For more, check out the video below:

If the video player doesn't appear (it works for me in Safari but not Firefox, for example), download the QuickTime MP4 source.