Semiconductor and software advances are enabling medical devices to derive meaning from digital still and video images.

By Brian Dipert

Editor-in-Chief, Embedded Vision Alliance

Senior Analyst, BDTI

and

Kamran Khan

Technical Marketing Engineer, Xilinx

This article was originally published in the May 2013 edition of MD+DI (Medical Device and Diagnostic Industry) Magazine. It is reprinted here with the permission of the original publisher.

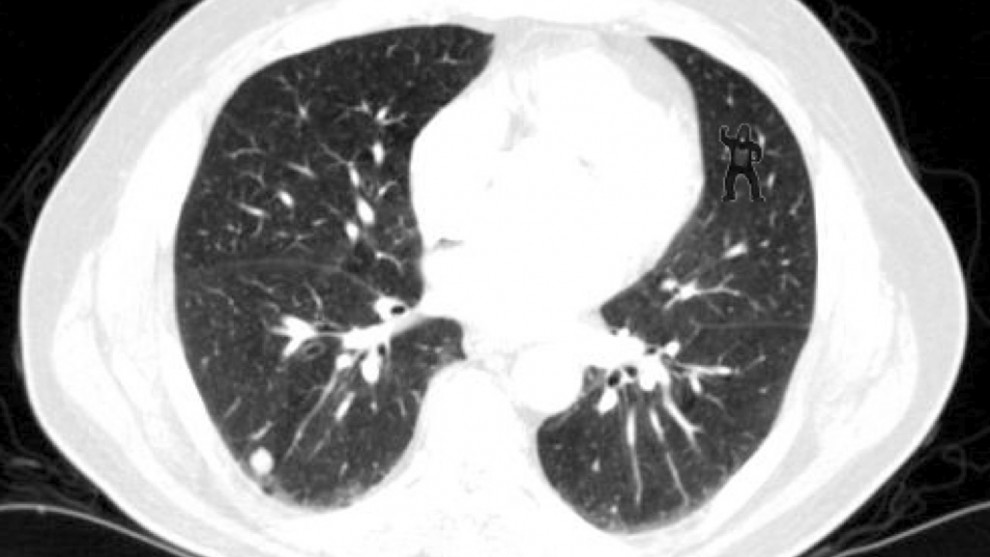

Imaging technologies such as x-rays and MRI have long been critical diagnostic tools for healthcare professionals. But it's ultimately up to a human operator to analyze and interpret the images these technologies produce, and that leaves open the possibility that critical information will be overlooked. In a recent study, for example, 20 out of 24 radiologists missed the image of a gorilla, around 48 times the size of a typical cancer nodule, that had been artificially inserted into CT scans of patients' lungs (Figure 1). The radiologists overlooked it even though, on average, they were presented with the highly anomalous and seemingly easily detectable primate more than four times in separate images.

Figure 1. See the gorilla in the upper right corner of this CT scan? 20 of 24 radiologists in a recent study didn't notice it.

Advanced, intelligent x-ray and CT systems, which unlike humans are not subject to fatigue, distraction, and other degrading factors, could help radiologists rapidly and accurately identify image irregularities. Such applications are among many examples of the emerging technology known as embedded vision: the incorporation of automated image analysis, or computer vision, capabilities into a variety of electronic devices. These systems extract meaning from visual inputs. And in the same way that wireless communication has become pervasive over the past 10 years, embedded vision technology is poised for wide deployment in medical electronics.

Just as high-speed wireless connectivity began as an exotic, costly technology, embedded vision has until recently been found only in complex, expensive systems, such as quality-control inspection systems for manufacturing. Advances in digital integrated circuits were critical in enabling high-speed wireless technology to evolve from fringe to mainstream. Similarly, advances in digital chips such as processors, image sensors, and memory devices are paving the way for the proliferation of embedded vision into a variety of applications, including high-volume consumer devices such as smartphones and tablets (see sidebar "Mobile Electronics Applications Showcase Embedded Vision Opportunities").

How It Works

Vision algorithms typically require high compute performance, and, of course, embedded systems of all kinds are usually required to fit into tight cost and power consumption envelopes. In other digital signal processing application domains, such as wireless communications and compression-centric consumer video equipment, chip designers achieve this challenging combination of high performance, low cost, and low power by using specialized coprocessors and accelerators to implement the most demanding processing tasks in the application. These coprocessors and accelerators are typically not programmable by the chip user, however.

This tradeoff is often acceptable in applications where software algorithms are standardized. In vision applications, however, no standards constrain the choice of algorithms. On the contrary, there are often many approaches to choose from to solve a particular vision problem. Vision algorithms are therefore very diverse and change rapidly over time, which makes nonprogrammable accelerators and coprocessors less attractive.

Achieving the combination of high performance, low cost, low power and programmability is challenging. Although there are few chips dedicated to embedded vision applications today, these applications are adopting high-performance, cost-effective processing chips developed for other applications, including DSPs, CPUs, FPGAs and GPUs. Particularly demanding embedded vision applications often use a combination of processing elements. As these chips and cores continue to deliver more programmable performance per dollar and per watt, they will enable the creation of more high-volume embedded vision products. Those high-volume applications, in turn, will attract more attention from silicon providers, who will deliver even better performance, efficiency and programmability.

A Diversity of Opportunities

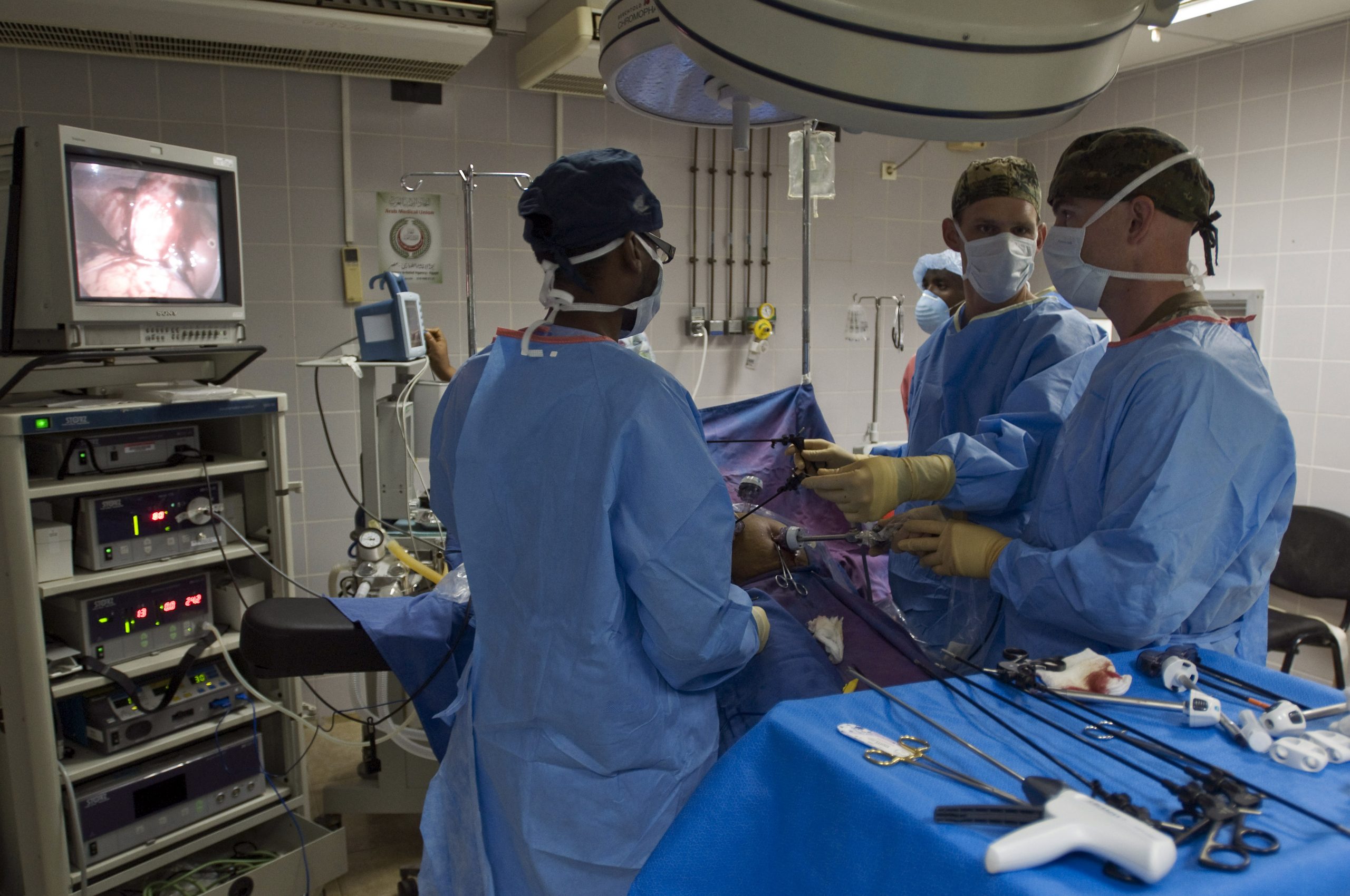

Medical imaging is not the only opportunity for embedded vision to supplement, if not supplant, human medical analysis and diagnosis. Consider endoscopy (Figure 2), for example. Historically, endoscope systems displayed only unenhanced, low-resolution images. Physicians had to interpret the images they saw based solely on knowledge and experience (see sidebar "Codifying Intuition"). The low-quality images and the subjective nature of the diagnoses inevitably resulted in overlooked abnormalities and incorrect treatments. Today, endoscope systems are armed with high-resolution optics, image sensors, and embedded vision processing capabilities. They can distinguish among tissues; enhance edges and other image attributes; perform basic dimensional analytics (e.g., length, angle); and overlay this data on top of the video image in real time. Advanced designs can even identify and highlight unusual image features, so physicians are less likely to overlook them.

Figure 2. Leading-edge endoscope systems not only output high-resolution images but also enhance them and augment them with additional information to assist physicians in analysis and diagnosis.
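
The basic dimensional analytics described for endoscope systems (length, angle) reduce to simple geometry once the relevant image points have been located. The Python sketch below is illustrative only: it assumes a single, known millimeters-per-pixel scale (the hypothetical `mm_per_pixel` parameter), which a real endoscope would have to derive from calibration and depth information, and the function names are likewise hypothetical.

```python
import math


def length_mm(p1, p2, mm_per_pixel):
    """Distance between two (x, y) pixel coordinates, scaled to millimeters.

    Assumes a hypothetical, single mm-per-pixel scale (a calibrated, roughly
    flat view); real endoscopic measurement must also handle depth and
    lens distortion.
    """
    dx = p2[0] - p1[0]
    dy = p2[1] - p1[1]
    return math.hypot(dx, dy) * mm_per_pixel


def angle_deg(vertex, a, b):
    """Angle at `vertex` between the rays toward points `a` and `b`, in degrees."""
    ax, ay = a[0] - vertex[0], a[1] - vertex[1]
    bx, by = b[0] - vertex[0], b[1] - vertex[1]
    # atan2 of |cross| and dot products stays numerically robust at all angles
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    return math.degrees(math.atan2(abs(cross), dot))
```

For example, two points 5 pixels apart at a scale of 0.5 mm per pixel measure 2.5 mm, and the resulting values could then be drawn as an overlay on the live video.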

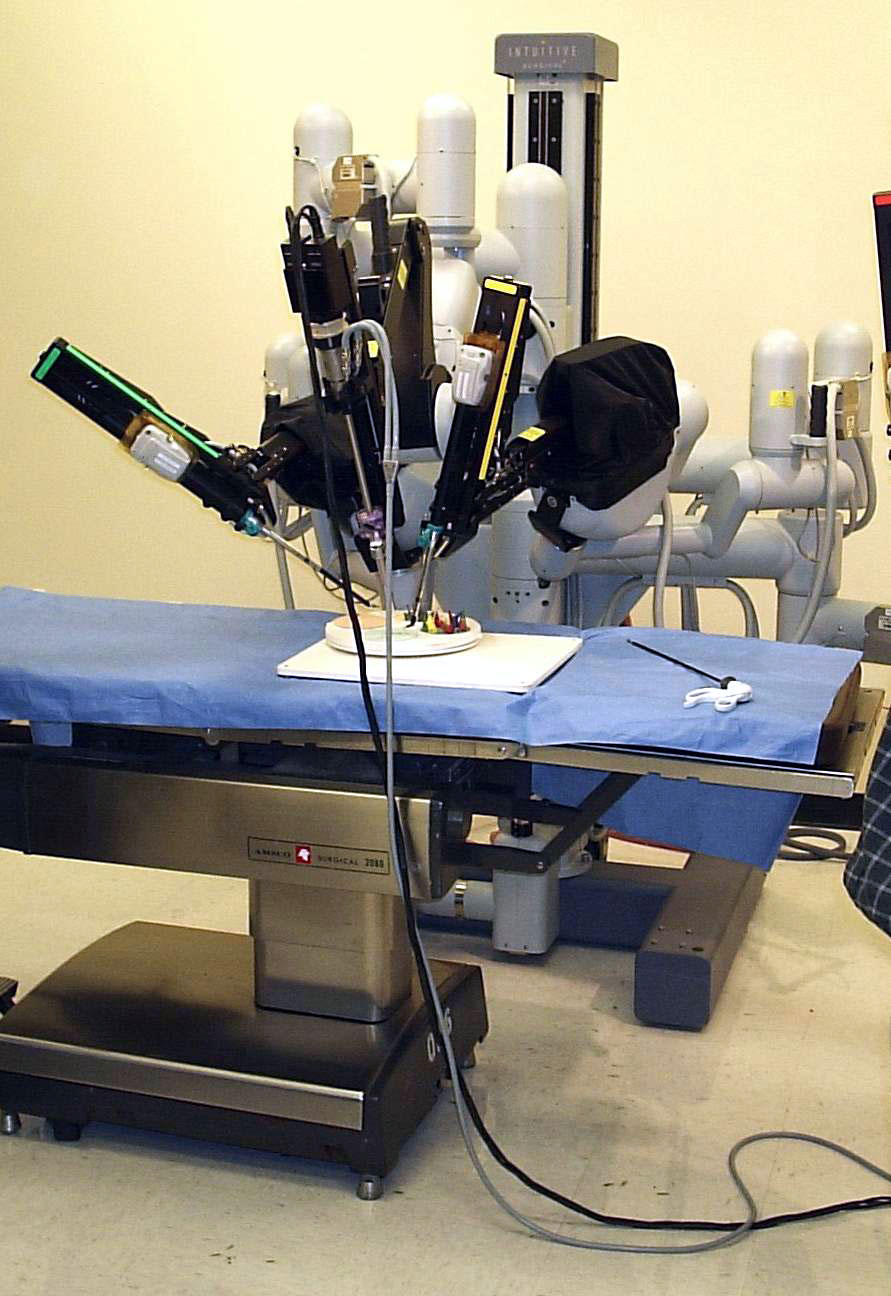

In ophthalmology, doctors historically relied on elementary cameras that only took pictures of the inner portions of the eye. Subsequent analysis was left to the healthcare professional. Today, ophthalmologists use medical devices armed with embedded vision capabilities to create detailed 2-D and 3-D models of the eye in real time as well as overlay analytics such as volume metrics and the dimensions of critical ocular components. With ophthalmological devices used for cataract or lens correction surgery preparation, for example, embedded vision processing helps differentiate the cornea from the rest of the eye. It then calculates an overlay, complete with surgical cut lines, based on various dimensions it has ascertained. Physicians now have a customized operation blueprint, which dramatically reduces the likelihood of mistakes. Such data can even be used to guide human-assisted or fully automated surgical robots with high precision (Figure 3).

Figure 3. Robust vision capabilities are essential to robotic surgery systems, whether they be human-assisted (either local or remote) or fully automated.

Another example of how embedded vision can enhance medical devices involves advancements in clinical testing. In the past, it took days, if not weeks, to receive the results of blood and genetic tests. Today, more accurate results are often delivered in a matter of hours. DNA sequencers use embedded vision to accelerate analysis by focusing on particular areas of a sample. After DNA molecules and primers are added to a slide, the sample begins to group into clusters. A high-resolution camera then scans the sample, creating numerous magnified images. After stitching these images together, the embedded vision–enhanced system identifies the clusters, along with their density parameters. These regions are then subjected to additional chemical analysis to unlock their DNA attributes. Visually identifying clusters before continuing the process drastically reduces procedure times and allows for more precise results. Faster blood or genetic test results enable quicker treatment and improve healthcare.
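
One generic way to identify clusters in a stitched image is brightness thresholding followed by connected-component labeling. The sketch below assumes the image is a list of rows of intensity values and that a single threshold separates clusters from background; real sequencers use their own, more sophisticated pipelines, and the function names, the threshold, and the simple "clusters per pixel" density measure are hypothetical.

```python
from collections import deque


def find_clusters(image, threshold):
    """Label 4-connected groups of pixels brighter than `threshold`.

    `image` is a list of rows of intensity values (e.g., a stitched scan).
    Returns a list of clusters, each a list of (row, col) pixel coordinates.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill starting from this unvisited bright pixel
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            clusters.append(pixels)
    return clusters


def cluster_density(clusters, rows, cols):
    """Hypothetical density measure: number of clusters per pixel of scanned area."""
    return len(clusters) / (rows * cols)
```

The cluster list gives both the regions to pass on for further analysis and per-cluster statistics such as size; flood fill is used here only because it is short and easy to verify.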

Magnifying Minute Variations

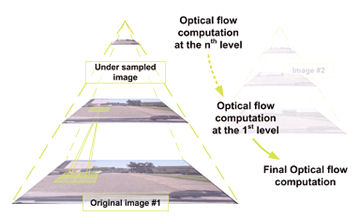

Electronic systems are also adept at detecting and accentuating minute image-to-image variations that the human visual system cannot perceive, whether due to insufficient sensitivity or inadequate attention. As research at MIT and elsewhere has shown, it's possible to accurately measure pulse rate simply by placing a camera in front of a person and tracking the minute, cyclic changes in facial color that reflect capillary blood flow (Figure 4). Similarly, embedded vision systems can precisely assess respiration rate by measuring the periodic rise and fall of the subject's chest.

Figure 4. Embedded vision systems are capable of discerning (and amplifying) the scant frame-to-frame color changes in a subject's face, suggestive of blood flow.
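
A simplified version of camera-based pulse or respiration measurement can be sketched as a frequency analysis of a per-frame signal. This sketch assumes that the per-frame mean green-channel value of a face region (for pulse), or the vertical position of the chest (for respiration), has already been extracted, and that the frame rate is known. It simply picks the strongest frequency within a plausible band; it is not the MIT group's video magnification technique, and the band limits mentioned below are illustrative assumptions.

```python
import math


def dominant_rate_per_minute(samples, fps, f_lo, f_hi):
    """Return the strongest periodic rate within [f_lo, f_hi] Hz, in cycles/minute.

    `samples` holds one value per video frame, e.g. the mean green-channel
    value of a face region (pulse) or the vertical chest position
    (respiration). A direct DFT is used, which is adequate for short windows.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC (average) component
    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k, in Hz
        if not (f_lo <= freq <= f_hi):
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None:
        raise ValueError("no DFT bin falls inside the requested band")
    return best_freq * 60.0
```

For example, searching a 30 frame/s face trace in a band of roughly 0.7 to 4 Hz covers about 42 to 240 beats per minute, while a lower band such as 0.1 to 0.7 Hz applied to a chest-position trace would estimate breaths per minute. The frequency resolution is `fps / len(samples)` Hz, so longer observation windows give finer rate estimates.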

The same embedded vision techniques can provide early indication of neurological disorders such as amyotrophic lateral sclerosis (also known as Lou Gehrig's disease) and Parkinson's disease. Early warning signs such as minute trembling or aberrations in gait, so slight that they may not yet be perceptible even to the patient, are less likely to escape the perceptive gaze of an embedded vision–enabled medical device.
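
As one illustration of how gait aberrations might be quantified, the sketch below compares left and right step durations using a simple percent-difference symmetry index. It assumes a hypothetical upstream pose tracker has already produced time-ordered heel-strike events labeled by foot; the function names are hypothetical, and any threshold for flagging an asymmetry would be a clinical decision this code does not make.

```python
def step_durations(heel_strikes):
    """Split alternating heel-strike events into left and right step durations.

    `heel_strikes` is a time-ordered list of (time_s, foot) tuples, with foot
    "L" or "R", as a hypothetical pose tracker might report. Each step's
    duration runs from the previous heel strike to this one and is credited
    to the foot that just landed.
    """
    left, right = [], []
    for (t_prev, _), (t, foot) in zip(heel_strikes, heel_strikes[1:]):
        (left if foot == "L" else right).append(t - t_prev)
    return left, right


def symmetry_index(left, right):
    """Percent difference between mean left and right step durations (0 = symmetric).

    Both lists must be non-empty.
    """
    mean_l = sum(left) / len(left)
    mean_r = sum(right) / len(right)
    return 100.0 * abs(mean_l - mean_r) / (0.5 * (mean_l + mean_r))
```

A perfectly regular gait yields an index of 0, and tracking the index over weeks or months, rather than judging a single walk, is where an automated system's consistency would matter most.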

Microsoft's Kinect game console peripheral (initially harnessed for image-processing functions such as a gesture interface and facial detection and recognition) is perhaps the best-known embedded vision product. More recently, Microsoft officially extended Kinect support beyond the Xbox 360 to Windows 7 and Windows 8 PCs. In March 2013, Microsoft began shipping an upgraded Kinect for Windows SDK that includes Fusion, a feature that transforms Kinect-generated images into 3-D models. Microsoft demonstrated the Fusion algorithm in early March by transforming brain scans into 3-D replicas of subjects' brains, which were superimposed onto a mannequin's head and displayed on a tablet computer's LCD screen.
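
Building a 3-D model from depth images starts with converting each depth pixel into a 3-D point. The sketch below shows only that first step, using a standard pinhole camera model with hypothetical intrinsic parameters (`fx`, `fy`, `cx`, `cy`). Kinect Fusion additionally aligns successive frames and fuses them into a volumetric model, which this code does not attempt, and nothing here represents the Kinect for Windows SDK's actual API.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3-D camera-space points (pinhole model).

    `depth` is a list of rows of depth values in meters (0 means no reading).
    fx, fy are hypothetical focal lengths and cx, cy a hypothetical principal
    point, all expressed in pixels.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels where the sensor returned no depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Each depth frame yields one such point cloud; registering and merging many of them from different viewpoints is what turns individual frames into a complete 3-D model.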

Gesture and Security Enhancements

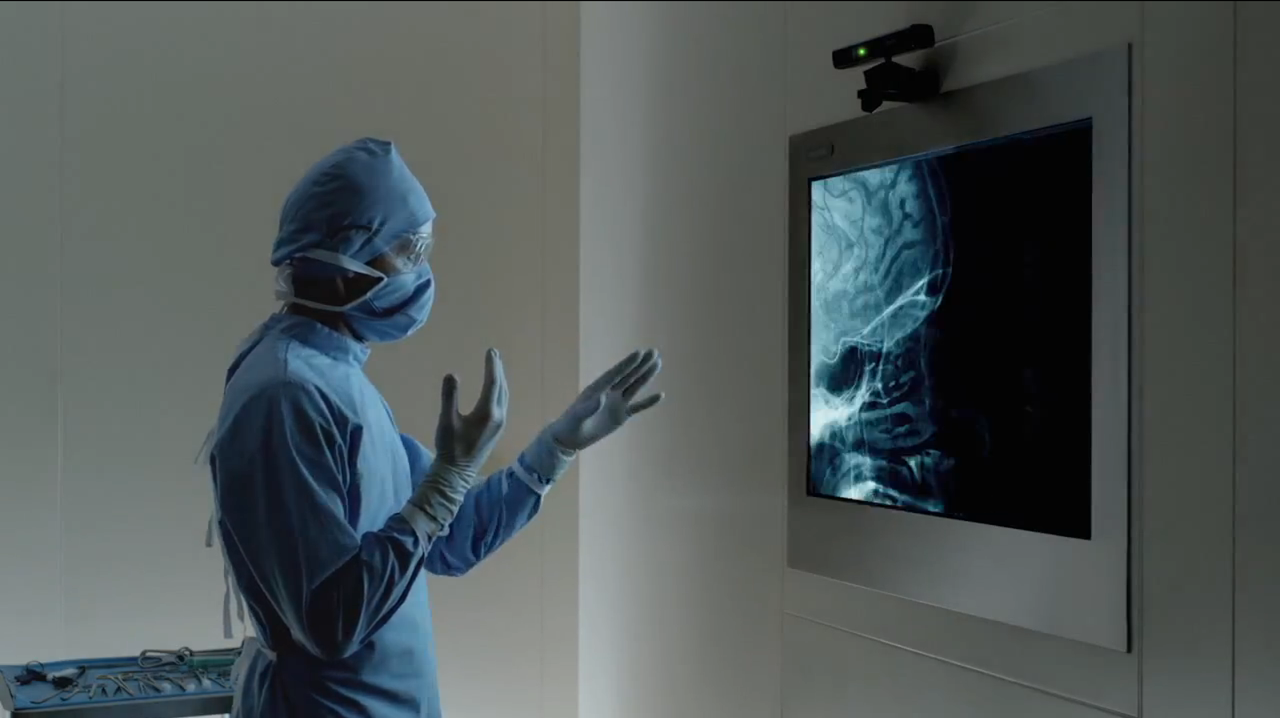

The Kinect and its 2-D and 3-D sensor counterparts have other notable medical uses. At the end of October 2011, Microsoft released "The Kinect Effect," a video showcasing a number of Kinect for Windows applications then under development. One showed a surgeon in the operating room flipping through LCD-displayed x-ray images simply by gesturing with his hand in the air (Figure 5). A gesture interface is desirable in at least two scenarios: when the equipment to be manipulated is out of arm's reach (thereby making conventional buttons, switches, and touchscreens infeasible) and when sanitary concerns prevent tactile control of the gear.

Figure 5. Gesture interfaces are useful in situations where the equipment to be controlled is out of arm's reach and when sanitary or other concerns preclude tactile manipulation of it.
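
A minimal sketch of how a hand-swipe gesture, such as the one used to flip through x-ray images, might be recognized from a tracked hand position follows. It assumes a hypothetical upstream tracker reports the hand's horizontal position normalized to the image width; the thresholds, and the mapping of a rightward swipe to "next", are illustrative choices rather than anything taken from Kinect's software.

```python
def classify_swipe(track, min_dx=0.25, max_duration=0.6):
    """Classify a hand trajectory as "next", "previous", or None.

    `track` is a time-ordered list of (time_s, x) samples of the hand's
    horizontal position, normalized so the image width is 1.0. A swipe is a
    horizontal move of at least `min_dx` completed within `max_duration`
    seconds; both thresholds are hypothetical.
    """
    if len(track) < 2:
        return None
    (t0, x0), (t1, x1) = track[0], track[-1]
    if t1 - t0 > max_duration:
        return None  # too slow to count as a deliberate swipe
    dx = x1 - x0
    if dx >= min_dx:
        return "next"  # rightward swipe (illustrative mapping)
    if dx <= -min_dx:
        return "previous"  # leftward swipe
    return None
```

Requiring both a minimum distance and a maximum duration helps separate deliberate commands from the incidental hand movements a surgeon makes while working.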

Embedded vision intelligence can also help ensure that medical facility employees follow adequate sanitary practices. Conventional video-capture equipment, such as that found in consumer and commercial settings, records constantly while it is operational, creating an abundance of wasted content that must be viewed in its entirety to pick out footage of note. An intelligent video monitoring system is a preferable alternative: it can employ motion detection to discern when an object has entered the frame, use facial detection to confirm that the object is a person, and then record only until the person has exited the scene. Subsequent review, either by a human operator or, increasingly commonly, by the computer itself, will assess whether adequate sanitary practices have been followed in each case.
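
The motion-gated recording logic of such an intelligent monitor can be sketched as a small state machine. This sketch assumes grayscale frames given as lists of rows and uses simple frame differencing for motion detection; `is_person` is a hypothetical stand-in for a face detector, and the threshold and exit-frame count are illustrative values.

```python
def frame_difference(prev, curr):
    """Mean absolute per-pixel difference between two equal-size grayscale frames."""
    total, count = 0, 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    return total / count


def select_frames_to_record(frames, is_person, motion_threshold=10.0, exit_frames=3):
    """Return the indices of frames an intelligent monitor would keep.

    `is_person(frame)` is a hypothetical face-detector stand-in; it is only
    consulted after cheap motion detection fires, or while recording.
    Recording stops after `exit_frames` consecutive frames with no person.
    """
    recording = False
    missing = 0  # consecutive recorded frames in which no person was found
    kept = []
    for i in range(1, len(frames)):
        if not recording:
            moved = frame_difference(frames[i - 1], frames[i]) > motion_threshold
            if moved and is_person(frames[i]):
                recording, missing = True, 0
        elif is_person(frames[i]):
            missing = 0
        else:
            missing += 1
            if missing >= exit_frames:
                recording = False  # person has left the scene; stop recording
        if recording:
            kept.append(i)
    return kept
```

Running the inexpensive motion test first means the costlier person check runs only on frames that might matter, which suits the tight compute budgets discussed earlier in the article.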

Future Trends

In the future, medical devices will use increasing degrees of embedded vision technology to better diagnose, analyze, and treat patients. The technology has the potential to make healthcare safer, more effective, and more reliable than ever before. Subjective methods of identifying ailments and guess-and-check treatment methods are fading. Smarter medical devices with embedded vision are the future. Indicative of this trend, a recent Indiana University study found that analyzing patient data with simulation-modeling machine-learning algorithms can drastically reduce healthcare costs and improve quality. The artificial intelligence models used in the study for diagnosing and treating patients resulted in a 30–35% increase in positive patient outcomes.

Vision-enhanced medical devices will be able to capture high-resolution images, analyze them, and provide recommendations and guidance for treatment. Next-generation devices will also be increasingly automated, performing preoperative analysis, creating surgical plans from visual and other inputs, and in some cases even performing surgeries with safety and reliability that human hands cannot replicate. As embedded vision in medical devices evolves, more uses for it will emerge. The goal is to improve safety, remove human subjectivity and irregularity, and administer the correct treatment the first (and only) time.

Embedded vision has the potential to enable a range of medical and other electronic products that will be more intelligent and responsive than before, and thus more valuable to users. The technology can both add helpful features to existing products and open up brand-new markets for equipment manufacturers. The examples discussed in this article and its accompanying sidebars have hopefully sparked your creativity. A worldwide industry alliance of semiconductor, software, and services suppliers is also poised to help you rapidly and robustly transform your next-generation ideas into shipping products (see sidebar "The Embedded Vision Alliance"). The era of cost-effective, power-efficient, and full-featured embedded vision capabilities has arrived. What you do with the technology's potential is limited only by your imagination.

Sidebar: Mobile Electronics Applications Showcase Embedded Vision Opportunities

Thanks to service provider subsidies coupled with high shipment volumes, relatively inexpensive smartphones and tablets supply formidable processing capabilities: multi-core GHz-plus CPUs and graphics processors, on-chip DSPs and imaging coprocessors, and multiple gigabytes of memory. Plus, they integrate front- and rear-viewing cameras capable of capturing high-resolution still images and HD video clips. Harnessing this hardware potential, developers have created medical applications for mobile electronics devices.

Sidebar: Codifying Intuition

Medicine, like any science, relies heavily on data to diagnose and treat patient ailments. However, as any healthcare professional will tell you, physicians and nurses also commonly rely on intuition. Simply by glancing at a patient, and relying in no small part on past experience, a medical professional can often deliver an accurate diagnosis, even in the presence of nebulous or contradictory information.

While we typically don't understand the processes by which such snap judgments are made, this does not necessarily preclude harnessing them to create insightful automated systems. The field of machine learning, which is closely allied with embedded vision, involves creating machines that can learn by example. If a computer is fed data sets from various patient cases, complete with healthcare professionals' assessments, the system's artificial intelligence algorithms may subsequently be able to intuit diagnoses with human-like success rates.
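
One of the simplest forms of learning by example is k-nearest-neighbor classification, sketched below. It is shown only to make the idea concrete: the feature vectors and labels are hypothetical stand-ins for measurements extracted from patient cases and the clinicians' recorded assessments, and practical diagnostic systems generally use more sophisticated models.

```python
import math
from collections import Counter


def knn_predict(training, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest examples.

    `training` is a list of (feature_vector, label) pairs, where each label is
    a (hypothetical) clinician assessment recorded for that case.
    """
    # Sort past cases by Euclidean distance to the new case, keep the k closest
    nearest = sorted(training, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

The system never receives an explicit rule; it simply assigns a new case the assessment most common among the most similar past cases, which loosely mirrors how experience informs a clinician's snap judgment.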

Sidebar: The Embedded Vision Alliance

The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform the potential of embedded vision technology into reality. The alliance’s mission is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into products. To execute this mission, the alliance has developed a Web site with tutorial articles, videos, code downloads, and a discussion forum staffed by a diversity of technology experts. For more information on the Embedded Vision Alliance, including membership details, please email [email protected] or call 925/954-1411.

Brian Dipert is editor-in-chief of the Embedded Vision Alliance (Walnut Creek, CA) and a senior analyst at Berkeley Design Technology Inc. (BDTI; Walnut Creek), a firm that provides analysis, advice, and engineering for embedded processing technology and applications. Dipert holds a bachelor’s degree in electrical engineering from Purdue University. Reach him at [email protected].

Kamran Khan is a technical marketing engineer at Xilinx Corp. (San Jose, CA). He has more than six years of technical marketing and application experience in the semiconductor industry, focusing on FPGAs. Prior to joining Xilinx, he worked on developing FPGA-based applications and solutions for customers in an array of end markets, including medical. Contact him at [email protected].

Xilinx is a platinum member of the Embedded Vision Alliance. Xilinx enables smarter devices by pioneering new technologies and tools to make embedded vision more powerful. Xilinx’s All Programmable SoCs provide embedded applications with the productivity of a dual-core processor and FPGA fabric, all in one device. Building smaller, smarter and otherwise better medical equipment begins with the right devices and tools to accelerate applications. You can, for example, off-load real-time analytics to hardware accelerator blocks in the FPGA fabric, while the integrated microprocessor handles secure operating systems and communications protocols via software. Xilinx’s commitment to innovating All Programmable SoCs will usher in new medical devices that will be smarter, safer and more accurate. For more information, please visit http://www.xilinx.com.