by Tom Wilson

CogniVue

Brian Dipert

Embedded Vision Alliance

This article was originally published at Electronic Engineering Journal. It is reprinted here with the permission of TechFocus Media.

Courtesy of service provider subsidies coupled with high shipment volumes, relatively inexpensive smartphones and tablets supply formidable processing capabilities: multi-core GHz-plus CPUs and graphics processors, on-chip DSPs and imaging coprocessors, and multiple gigabytes of memory. Plus, they integrate front- and rear-viewing cameras capable of capturing high-resolution still images and HD video clips. To harness this hardware potential, developers are using these same cameras, primarily intended for still photography, video capture, and videoconferencing, to also create diverse embedded vision applications. For this potential to become a compelling reality, however, developers must sufficiently understand the implementation issues involved.

Introduction

Wikipedia defines the term "computer vision" as (Reference 1):

A field that includes methods for acquiring, processing, analyzing, and understanding images…from the real world in order to produce numerical or symbolic information…A theme in the development of this field has been to duplicate the abilities of human vision by electronically perceiving and understanding an image.

As the name implies, this image perception, understanding, and decision-making process has historically been achievable only with large, heavy, expensive, and power-hungry computers, restricting its use to a short list of applications such as factory automation and military systems. Beyond these few success stories, computer vision has mainly been a field of academic research over the past several decades.

Today, however, a major transformation is underway. With the emergence of increasingly capable (i.e., powerful, low-cost, and energy-efficient) processors, image sensors, memories, and other semiconductor devices, along with robust algorithms, it's becoming practical to incorporate computer vision capabilities into a wide range of embedded systems. By "embedded system," we're referring to any microprocessor-based system that isn’t a general-purpose computer. Embedded vision, more broadly, refers to the implementation of computer vision technology in embedded systems, mobile devices, special-purpose PCs, and the cloud.

Similar to the way that wireless communication technology has become pervasive over the past 10 years, embedded vision technology is poised to be widely deployed in the next 10 years. High-speed wireless connectivity began as a costly niche technology; advances in digital integrated circuits were critical in enabling it to evolve from exotic to mainstream. When chips got fast enough, inexpensive enough, and energy efficient enough, high-speed wireless became a mass-market technology.

Embedded Vision Goes Mobile

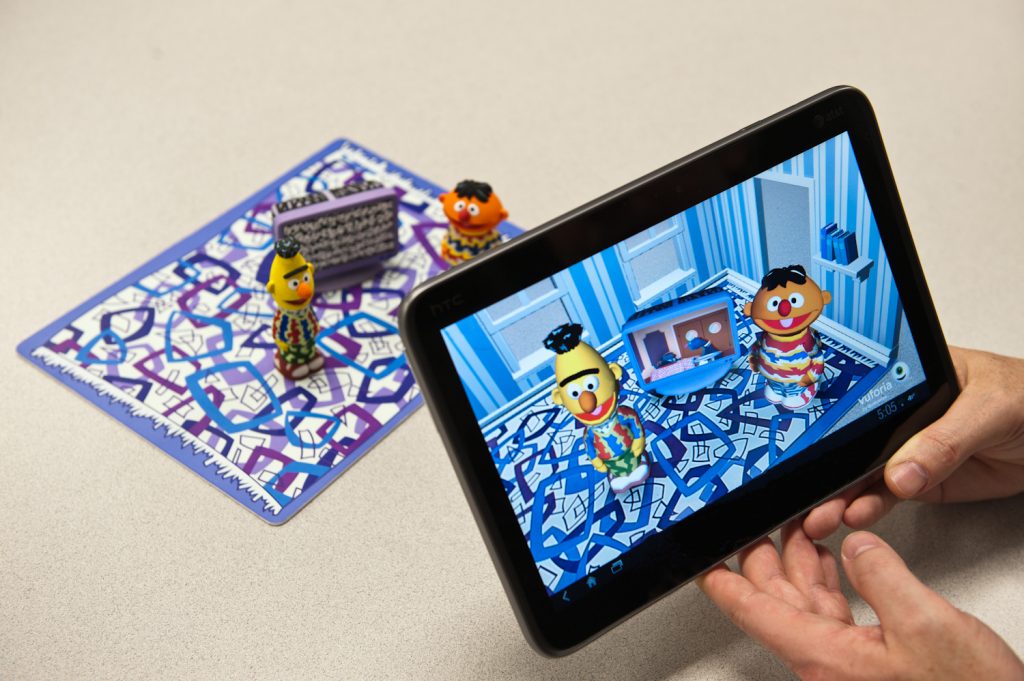

Advances in digital chips are now likewise paving the way for the proliferation of embedded vision into high-volume applications. Odds are high, for example, that the cellular handset in your pocket and the tablet computer in your satchel contain at least one rear-mounted image sensor for photography (perhaps two for 3D image capture capabilities) and/or a front-mounted camera for video chat (Figure 1). Embedded vision opportunities in mobile electronics devices include functions such as gesture recognition, face detection and recognition, video tagging, and natural feature tracking for augmented reality. These and other capabilities can be grouped under the broad term "mobile vision."

Figure 1. Google's Nexus 4 smartphone and Nexus 10 tablet, along with their Apple-developed counterparts, the iPhone 5 and iPad 4, are mainstream examples of the robust hardware potential that exists in modern mobile electronics devices (a and b courtesy of Google; c and d courtesy of Apple).

ABI Research forecasts, for example, that approximately 600 million smartphones will implement vision-based gesture recognition by 2017 (Reference 2). This estimate represents roughly 40 percent of the 1.5 billion smartphones that some researchers expect will ship that year (Figure 2). And the gesture recognition opportunity extends beyond smartphones to portable media players, portable game players, and in particular media tablets. According to ABI Research, “It is projected that a higher percentage of media tablets will have the technology than smartphones,” and IDC Research estimates that 350 million tablets will ship in 2017 (Reference 3). Together, these forecasts point to a compelling market opportunity for mobile vision, even if only gesture recognition is considered.

Figure 2. Gesture interfaces can notably enhance the utility of mobile technology…and not just when the phone rings while you're busy in the kitchen (courtesy of eyeSight).

Face recognition also promises to be a commercially important vision processing function for smartphones and tablets (Figure 3). Some applications are fairly obvious, such as device security (acting as an adjunct to or replacement for traditional unlock codes). But plenty of other uses for face recognition, more subtle but equally or more lucrative, also exist, such as discerning emotional responses to advertising. Consider, for example, the $12 million in funding that Affectiva, a developer of facial response software, recently received (Reference 4). This investment comes on the heels of a deal between Affectiva and Ebuzzing, the leading global platform for social video advertising (Reference 5). A consumer who encounters an Affectiva-enabled ad is given the opportunity to activate the device's webcam to measure his or her response to the ad and assess how it compares against the reactions of others. Face response analysis currently takes place predominantly on a "cloud" server, but vision processing may increasingly occur directly on the mobile platform as advertising becomes more pervasive on smartphones and tablets. Processing on the device would also enable faster responses, along with offline use.

Figure 3. Robust face recognition algorithms ensure that you (and only you) can operate your device, in spite of your child's attempts to mimic your mustache with his finger (courtesy of Google).

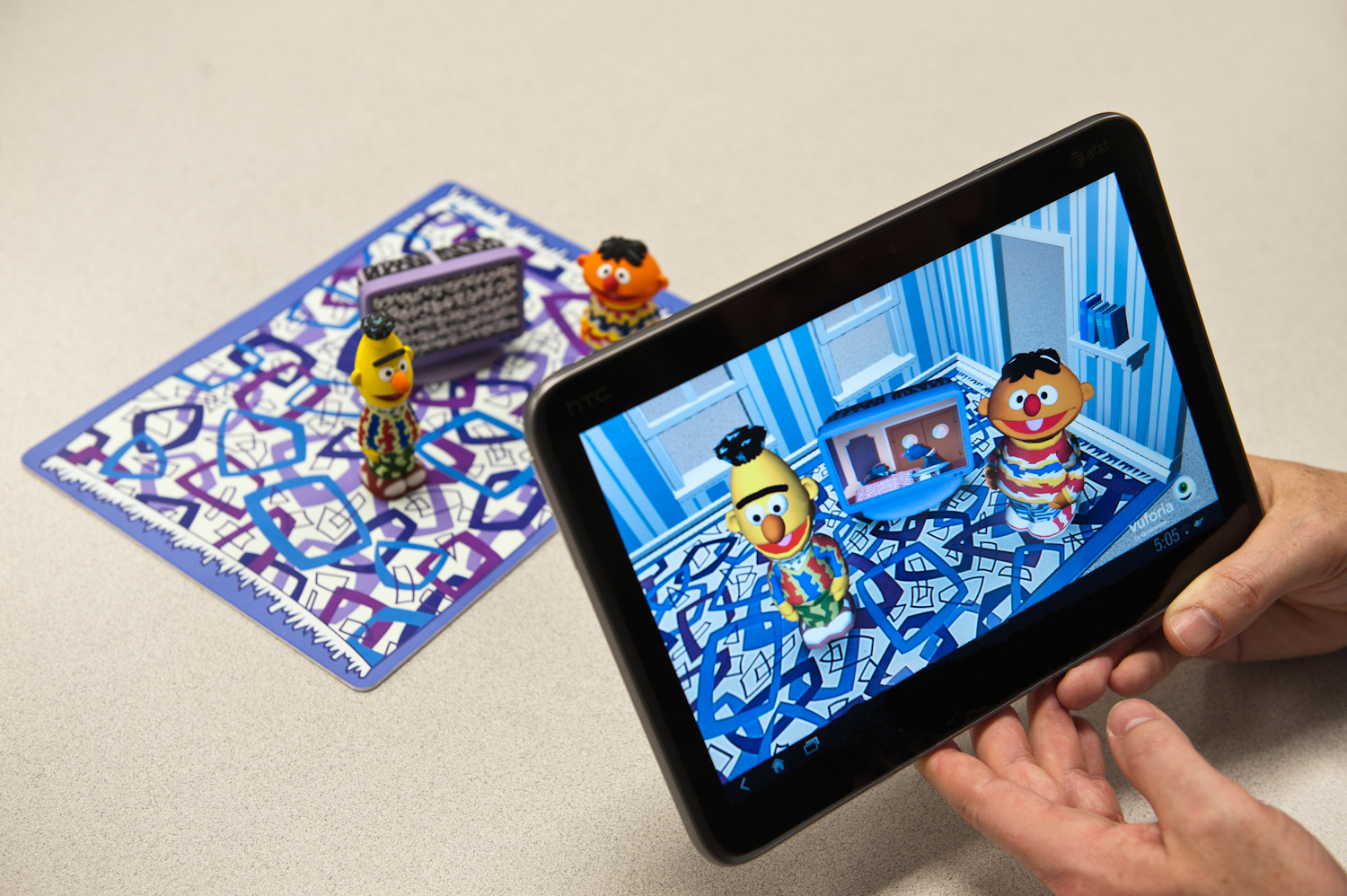

Mobile advertising spending is potentially a big "driver" for building embedded vision applications into mobile platforms. Such spending is expected to soar from about $9.6 billion in 2012 to more than $24 billion in 2016, according to Gartner Research (Reference 6). Augmented reality will likely play a role in delivering location-based advertising, along with enabling other applications (Figure 4). Juniper Research recently found that brands and retailers are already deploying augmented reality applications and marketing materials, which are expected to generate close to $300 million in global revenue in 2013 (Reference 7). The high degree of retailer enthusiasm for augmented reality suggested to Juniper Research that advertising spending had increased significantly in 2012 and was positioned for continued growth. Juniper found that augmented reality is an important method for increasing consumer engagement because, among other reasons, it provides additional product information. However, the Juniper report also highlighted significant technical challenges for robust augmented reality implementations, most notably problems linked to the vision-processing performance of the mobile platform.

Figure 4. Augmented reality can tangibly enhance the likelihood of purchasing a product, the experience of using the product, the impact of an advertisement, or the general information-richness of the world around us (courtesy of Qualcomm).

Embedded Vision is Compute-Intensive (and Increasingly So)

Embedded vision processing is notably demanding, for a variety of reasons. First, simply put, there are massive amounts of data to consider. Frames with resolutions higher than 1 Mpixel must be streamed uncompressed at 30 to 60 frames per second, and every pixel of each frame requires processing attention. Typical vision processing algorithms for classification or tracking, for example, also combine a mix of vector and scalar processing steps, which on conventional processing architectures translate into intensive external memory access patterns. Shuttling intermediate processing results back and forth between the CPU and external memory drives up power consumption and increases overall processing latency.
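To put the data volume in perspective, the short Python sketch below works through the arithmetic for a 1080p (1920 × 1080, about 2.07 Mpixel) stream. The traffic model is a deliberately simplified assumption, not a measurement: it supposes 8-bit luma pixels and a hypothetical pipeline in which each of `stages` processing steps reads its input frame from external memory and writes its intermediate result back.

```python
# Back-of-the-envelope data rates for a vision pipeline (illustrative model only).

def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second that must each receive processing attention."""
    return width * height * fps


def external_traffic_bytes_per_s(width: int, height: int, fps: int,
                                 bytes_per_pixel: int, stages: int) -> int:
    """Assumed model: each stage reads one full frame from external memory
    and writes one full intermediate frame back (one read plus one write)."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes * 2 * stages * fps


if __name__ == "__main__":
    for fps in (30, 60):
        rate = pixel_rate(1920, 1080, fps)
        traffic = external_traffic_bytes_per_s(1920, 1080, fps,
                                               bytes_per_pixel=1, stages=4)
        print(f"1080p at {fps} fps: {rate / 1e6:.1f} Mpixel/s, "
              f"about {traffic / 1e9:.2f} GB/s of intermediate traffic")
```

Under these assumptions, a four-stage pipeline at 1080p and 60 frames per second moves roughly 1 GB of intermediate data per second through external memory, before any algorithm-specific accesses are counted.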

In addition, a robust vision-processing algorithm for object classification, for example, must achieve high levels of performance and reliability. The algorithm design must carefully balance sensitivity (reducing the miss rate) against specificity (reducing "false positives"). In a classification algorithm, this requirement often necessitates adding more classification stages to reduce errors. It may also require more image frame pre-processing to reduce noise, or a normalization step to remove irregularities in lighting or object orientation. As these vision-based functions gain acceptance and adoption, user tolerance for unreliable implementations will decrease. Gesture recognition, face recognition, and other vision-based functions will also need to become more sophisticated and robust to operate reliably in a broad range of environments. These demands translate into more pixels, more complex processing, and therefore an even tougher challenge for conventional processing architectures.
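The stage-count tradeoff can be made concrete with the arithmetic of an attentional cascade, the structure popularized by the Viola-Jones face detector, in which a candidate window is accepted only if every stage accepts it. The article does not name a specific classifier, so this is an illustrative model: it treats each stage's detection and false-positive rates as measured on the windows that reach that stage, so the overall rates are the products of the per-stage rates. The per-stage figures used below are hypothetical.

```python
# Illustrative cascade-classifier arithmetic (hypothetical per-stage rates).
from math import prod


def cascade_rates(stage_detect, stage_fp):
    """Overall (detection rate, false-positive rate) of a cascade in which a
    window must pass every stage; each per-stage rate is assumed to be
    measured on the windows that reach that stage."""
    return prod(stage_detect), prod(stage_fp)


def stages_needed(target_fp, per_stage_fp):
    """Smallest number of identical stages whose combined false-positive
    rate is at or below target_fp."""
    if not (0.0 < per_stage_fp < 1.0) or target_fp <= 0.0:
        raise ValueError("need 0 < per_stage_fp < 1 and target_fp > 0")
    stages, fp = 0, 1.0
    while fp > target_fp:
        fp *= per_stage_fp
        stages += 1
    return stages


if __name__ == "__main__":
    k = stages_needed(target_fp=1e-3, per_stage_fp=0.5)
    detect, fp = cascade_rates([0.995] * k, [0.5] * k)
    print(f"{k} stages: detection rate {detect:.3f}, "
          f"false-positive rate {fp:.6f}")
```

With these hypothetical numbers, ten stages push false positives below 0.1 percent, but the overall detection rate falls to about 95 percent: each added stage trims false positives while also compounding the miss rate, which is the balance between sensitivity and false positives that the paragraph above describes.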

On the processing side, it might seem at first glance that plenty of processing capacity exists in modern mobile application processor SoCs, since they contain dual- or quad-core CPUs running at 1 GHz and above. But CPUs are not optimized for data-intensive, parallelizable tasks, and, critically for mobile devices, CPUs are not the most energy-efficient processors for executing vision tasks. Today’s mobile application processors incorporate a range of specialized coprocessors for tasks such as video, 3D graphics, audio, and image enhancement. These coprocessors enable smartphones and tablets to deliver impressive multimedia capabilities with long battery life. While some of these same coprocessors can be pressed into service for vision tasks, none of them was explicitly designed for vision. As the demand for vision processing on mobile processors increases, specialized vision coprocessors will most likely join the ranks of the existing coprocessors, enabling high performance with improved energy efficiency.

Embedded Vision will be Multi-Function

Functions such as gesture recognition, face recognition, and augmented reality are just some of the new vision-based methods of interfacing mobile users with their devices, digital worlds, and real-time environments. As previously discussed, individual vision processing tasks can challenge today’s mobile application processors. Imagine, therefore, the incremental burden of multiple vision functions running concurrently. For example, the gesture interface employed for simple smartphone control functions may be entirely different from the gestures used for a mobile game. Next, consider that this mobile game might also employ augmented reality. And finally, it's not too much of a "stretch" to imagine that such a game might also use face recognition to distinguish between your "friends" and "enemies." The processing burden clearly builds as vision functions are combined and used concurrently in gaming, natural feature tracking, user response tracking, and other application scenarios.
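One way to see how the burden builds is to compare the combined per-frame cost of concurrent vision functions against the frame period. The Python sketch below assumes, for simplicity, that the functions run one after another on a single processor and that their costs simply add; the function names and millisecond figures are hypothetical, not measurements from any device.

```python
# Frame-budget check for concurrently running vision functions (illustrative).

def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps


def check_budget(costs_ms: dict, fps: float) -> tuple:
    """Return (total cost in ms, budget in ms, whether the total fits),
    assuming the per-frame costs of all functions simply add up."""
    total = sum(costs_ms.values())
    budget = frame_budget_ms(fps)
    return total, budget, total <= budget


if __name__ == "__main__":
    # Hypothetical per-frame costs, chosen only to illustrate the arithmetic.
    workload = {"gesture": 12.0, "augmented_reality": 15.0, "face_recognition": 10.0}
    for name, cost in workload.items():
        print(f"{name} alone fits: {check_budget({name: cost}, 30)[2]}")
    total, budget, fits = check_budget(workload, 30)
    print(f"combined: {total:.1f} ms vs {budget:.1f} ms budget, fits: {fits}")
```

Each hypothetical function fits within the roughly 33.3 ms available at 30 frames per second on its own, but together they overrun it, which is the kind of scenario that motivates offloading vision work to dedicated cores.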

For these reasons, and expanding on points made earlier, it's even more likely that future application processors will supplement conventional CPU, GPU, and DSP cores with specialized cores specifically intended for vision processing. Several notable examples of this trend already exist: CogniVue's APEX ICP (Image Cognition Processor); CEVA's MM3101 imaging and vision core; Tensilica's IVP (Imaging and Video Processing) core; and the PVP (Pipeline Vision Processor) built into several of Analog Devices' latest Blackfin SoCs. Just as today's application processors include CPU cores for applications, GPU cores for graphics, and DSP cores for baseband and general multimedia processing, you should expect future processors to integrate "image cognition processing" cores for mobile vision functions.

Embedded Vision Must Consume Scant Power

Battery capacity in mobile devices has not improved significantly in recent years, as progress in the materials used to construct batteries has stalled. Image sensors consume significant current when running at the high frame rates that vision applications require, compared with their originally intended uses for still images and short videos. Add to this the computationally intense processing of vision algorithms, and batteries may drain quickly. For this reason, many vision applications are currently intended for use over short durations rather than as always-on features. To enable extended application operation, the mobile electronics industry will need to rely on continued hardware and software evolution, potentially aided by more fundamental architectural revolutions.

An Industry Alliance Accelerates Mobile Vision Understanding, Implementation, Adoption, and Evolution

Embedded vision technology has the potential to enable a wide range of electronic products (such as the mobile devices discussed in this article) that are more intelligent and responsive than before, and thus more valuable to users. It can add helpful features to existing products. And it can provide significant new markets for hardware, software and semiconductor manufacturers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform this potential into reality.

CogniVue, whose Tom Wilson co-authored this article, is a member of the Embedded Vision Alliance, as are Analog Devices, BDTI, CEVA, eyeSight, Qualcomm, and Tensilica, also mentioned in the article. First and foremost, the Alliance's mission is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into new and existing products. To execute this mission, the Alliance has developed a website (www.Embedded-Vision.com) providing tutorial articles, videos, code downloads, and a discussion forum staffed by a diverse group of technology experts. Registered website users can also receive the Alliance’s twice-monthly email newsletter (www.embeddedvisioninsights.com), among other benefits.

Transforming a mobile vision experience into a product ready for shipping entails the compromises touched on in this article: in cost, performance, and accuracy, to name a few. The Embedded Vision Alliance catalyzes conversations on these issues in a forum where such tradeoffs can be rapidly understood and resolved, accelerating the effort to productize mobile vision and enabling system developers to effectively harness various mobile vision technologies.

For more information on the Embedded Vision Alliance, including membership details, please visit www.Embedded-Vision.com or call 925-954-1411. Please also consider attending the Alliance's upcoming Embedded Vision Summit, a free day-long technical educational forum to be held on October 2nd in the Boston, Massachusetts area and intended for engineers interested in incorporating visual intelligence into electronic systems and software. The event agenda includes how-to presentations, seminars, demonstrations, and opportunities to interact with Alliance member companies. For more information on the Embedded Vision Summit, including an online registration form, please visit www.embeddedvisionsummit.com.

References:

1. Wikipedia, "Computer vision." http://en.wikipedia.org/wiki/Computer_vision

2. Flood, Joshua. "Gesture Recognition Enabled Mobile Devices." ABI Research, 4 December 2012.

3. IDC, Worldwide Quarterly Tablet Tracker, March 2013.

4. http://www.affectiva.com/news-article/affectiva-raises-12-million-to-extend-emotion-insight-technology-to-online-video-and-consumer-devices/

5. http://www.techcrunch.com/2013/02/18/affectiva-inks-deal-with-ebuzzing-social-to-integrate-face-tracking-and-emotional-response-into-online-video-ad-analytics

6. http://www.gartner.com/newsroom/id/2306215

7. https://www.juniperresearch.com/viewpressrelease.php?pr=348

About the Authors:

Tom Wilson is Vice President of Business Development at CogniVue Corporation, with more than 20 years of experience in application areas such as consumer, automotive, and telecommunications. He has held leadership roles in engineering, sales, and product management, and holds a Bachelor of Science and a PhD in Science from Carleton University, Ottawa, Canada.

Brian Dipert is Editor-In-Chief of the Embedded Vision Alliance. He is also a Senior Analyst at BDTI (Berkeley Design Technology, Inc.), and Editor-In-Chief of InsideDSP, the company's online newsletter dedicated to digital signal processing technology. He has a B.S. degree in Electrical Engineering from Purdue University in West Lafayette, IN. His professional career began at Magnavox Electronics Systems in Fort Wayne, IN; Brian subsequently spent eight years at Intel Corporation in Folsom, CA. He then spent 14 years (and five months) at EDN Magazine.