Computational photography is, as a recently published contributed article points out, one of the most visible current examples of embedded vision processing for the masses. And panorama "stitching", the ability to combine multiple horizontally- and vertically-captured frames into one higher-resolution image, is one of the most common computational photography features. Most of today's panorama stitching implementations, done either in a smartphone or tablet application or within a standalone camera, focus on still image processing of multiple sequentially captured frames and are therefore relatively insensitive to subsequent stitching delays.

What, however, if you were interested in stitching together the outputs of four simultaneously captured camera images…at 30 fps or 60 fps processing rates…and at 720p or 1080p per-camera resolutions? What if you wanted to do the processing not in an offline computer workstation or server, but directly within a device that fit in the palm of your hand? And what if you wanted that device to deliver multi-hour battery life? These are only some of the challenges faced by Bill Banta (CEO) and Paul Alioshin (CTO) of CENTR, with whom Jeff Bier and I spoke yesterday. The company's in-progress Kickstarter program indicates that they've successfully surmounted these and other challenges and are gearing up for production.

And the means by which they've solved the various problems they encountered along the way makes for a fascinating embedded vision case study.

Banta and Alioshin both come from Apple, where they were respectively the business and technical leads on the company's efforts to build iSight cameras directly into computers and (later) iPhones, iPod touches and iPads. The CENTR camera, intended to be a 360-degree panorama successor to portable video capture devices such as the GoPro and Contour product lines, targets applications such as wearable photography in extreme sports situations, and quadrotor mounting configurations. As such, it must be compact and lightweight, but high-definition and otherwise high-quality video capture is also essential. As is long battery life. And cost-effectiveness.

The number of cameras to include in the device was one fundamental decision that CENTR's development team needed to make up front, and in fact the first several years of the company's life were spent coding and optimizing camera calibration routines, as well as frame combination and other image processing algorithms, before a production-feasible hardware prototype was ever tackled.

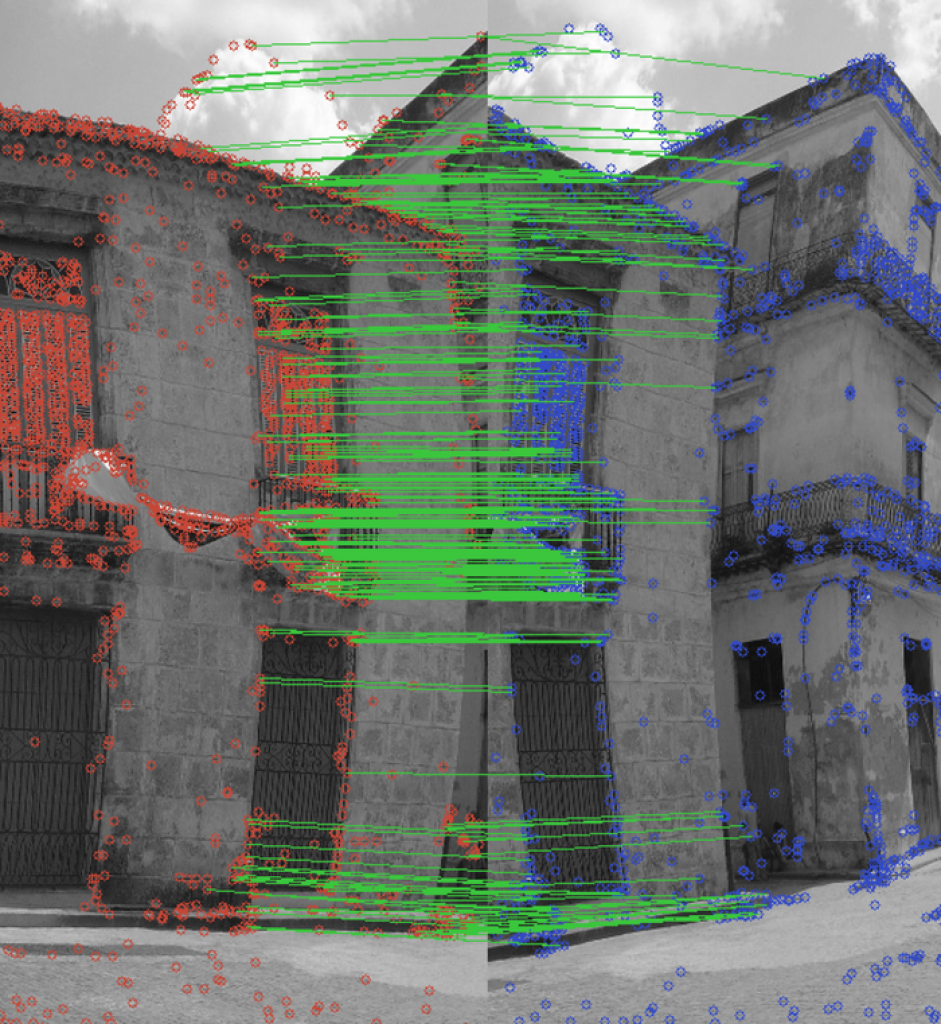

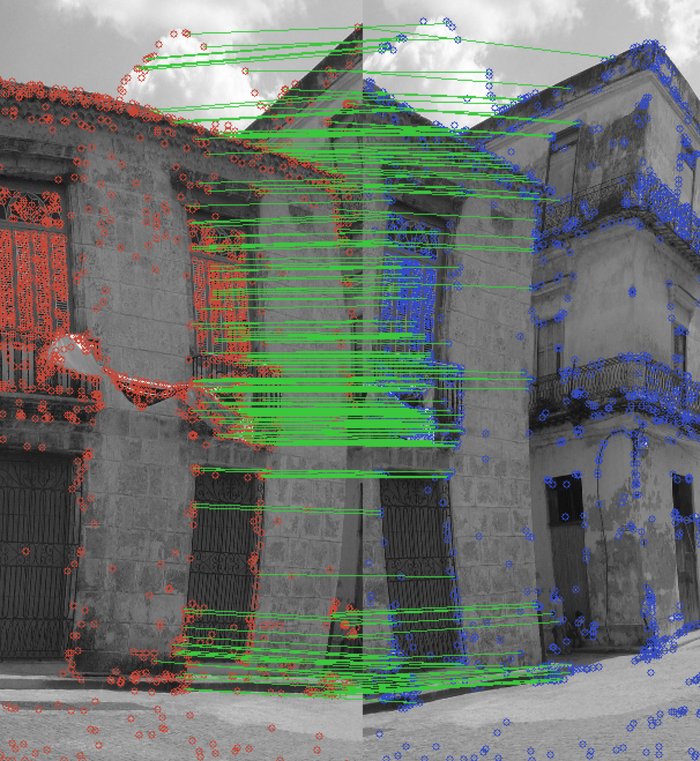

Robust calibration prior to use is a key aspect of CENTR's cameras' success

More cameras would allow for reduced per-camera distortion, thereby minimizing the processing overhead needed to stitch their respective images together. But an increase in cumulative camera count would negatively impact the system's form factor and bill of materials cost.

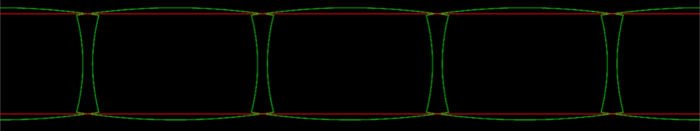

Fewer per-system cameras might seemingly optimize for size, weight and total cost, but at the tradeoff of increased image distortion due to the ultra-wide-angle optics necessary to still encompass a full 360-degree aggregate field of view. These optics would of necessity be non-standard (e.g. "fisheye") compared to those used in high-volume (translation: low-cost) smartphone and tablet designs. And the higher processing overhead required to minimize per-image distortion would have cost and battery life impacts of its own. So a fewer-camera configuration might not end up being as form factor- and price-optimized as you might initially expect.

CENTR's final hardware design includes four cameras, with overlapping 90+ degree fields of view.

CENTR's four cameras overlap their respective frames' perspectives, in order to simplify the panorama stitching process
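The camera-count tradeoff described above comes down to simple geometry, which the following minimal sketch illustrates. It assumes the cameras are evenly spaced around a ring and that each seam between neighbors shares the same angular overlap; the function name and the 10-degree overlap figure are hypothetical and are not taken from CENTR's design.

```python
def min_horizontal_fov(num_cameras: int, overlap_deg: float = 0.0) -> float:
    """Horizontal field of view each camera needs to close a 360-degree ring.

    overlap_deg is the (hypothetical) angle shared at each seam between two
    neighboring cameras. A ring of N cameras has N seams, so the cameras
    must together cover 360 + N * overlap_deg degrees.
    """
    if num_cameras < 1:
        raise ValueError("need at least one camera")
    if overlap_deg < 0:
        raise ValueError("overlap cannot be negative")
    return 360.0 / num_cameras + overlap_deg


# Fewer cameras push each lens toward fisheye territory.
for n in (2, 3, 4, 6):
    bare = min_horizontal_fov(n)
    padded = min_horizontal_fov(n, overlap_deg=10.0)  # illustrative overlap only
    print(f"{n} cameras: {bare:.0f} degrees minimum, {padded:.0f} with overlap")
```

With four cameras the bare minimum is 90 degrees per camera, which is why any stitching overlap at all requires the "90+ degree" optics mentioned above.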

The current system prototype leverages OmniVision image sensors with paired per-pixel small and large photodiodes to deliver single-frame HDR (high dynamic range) capture results. Alioshin claims that next-generation OmniVision sensors, to be included in the final production design, will deliver even better light sensitivity.

CENTR uses a Movidius vision processor (as does Google in Project Tango, with two Myriad 1s employed in that particular case) to handle the stitching algorithm, which identifies common reference points in adjacent images and leverages them to accomplish the panorama-combining task. Alioshin was careful to point out that CENTR isn't using the Movidius processor as a "black box"; the algorithms are CENTR-developed and proprietary, not off-the-shelf. He also noted that the reference point-searching and -matching functions inherently ascertain rudimentary object depth data, by treating the multiple cameras as a stereo array of sorts, although this 3-D information is not yet being directly exploited.
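CENTR's algorithms are proprietary, so the following is only a generic illustration of the "find common reference points, then align" idea, not the company's method. It assumes each reference point has already been detected and reduced to a small numeric descriptor, pairs points by nearest descriptor with a ratio test, and takes the median displacement as the frame-to-frame offset. All function names and sample values are hypothetical; production stitchers typically fit a full geometric model (for example a homography) rather than a plain translation.

```python
from statistics import median


def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def match_points(left, right, ratio=0.75):
    """Pair each left point with its nearest right point by descriptor.

    Each point is (x, y, descriptor). A match is kept only when the best
    candidate is clearly closer than the runner-up (a ratio test).
    """
    matches = []
    for lx, ly, ldesc in left:
        ranked = sorted(right, key=lambda r: squared_distance(ldesc, r[2]))
        if not ranked:
            continue
        best = ranked[0]
        if len(ranked) > 1:
            d_best = squared_distance(ldesc, best[2])
            d_next = squared_distance(ldesc, ranked[1][2])
            if d_best >= (ratio ** 2) * d_next:
                continue  # ambiguous match, skip it
        matches.append(((lx, ly), (best[0], best[1])))
    return matches


def estimate_offset(matches):
    """Median (dx, dy) that maps right-image coordinates onto the left image."""
    if not matches:
        raise ValueError("no matches to align")
    dx = median(l[0] - r[0] for l, r in matches)
    dy = median(l[1] - r[1] for l, r in matches)
    return dx, dy


# Made-up reference points near the shared edge of two adjacent frames.
left_points = [
    (1200, 300, (0.90, 0.10, 0.30)),
    (1250, 500, (0.20, 0.80, 0.50)),
    (1180, 100, (0.40, 0.40, 0.90)),
]
right_points = [
    (50, 301, (0.88, 0.12, 0.31)),
    (100, 499, (0.21, 0.79, 0.50)),
    (30, 100, (0.41, 0.40, 0.88)),
    (600, 400, (0.00, 0.00, 0.00)),  # distractor with no counterpart
]

matches = match_points(left_points, right_points)
print(estimate_offset(matches))
```

The median is used here because it tolerates a few bad matches; the horizontal offset it returns tells you where the right-hand frame should be placed relative to the left-hand one.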

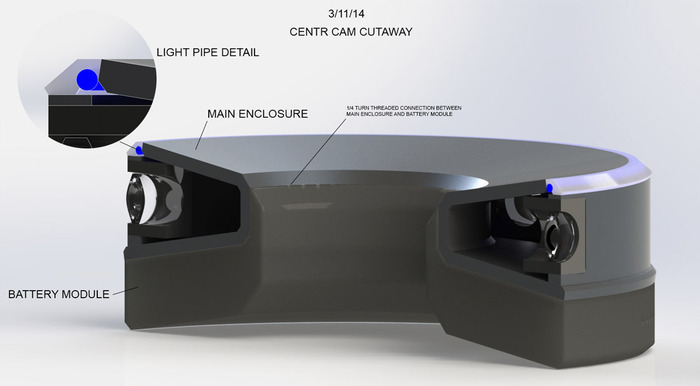

The CENTR system combines the cameras, vision processor and three microphones in a ring-shaped and water-resistant main structure, roughly 70 mm in diameter, onto which you thread a removable battery module.

The CENTR hardware design is compact, lightweight, and rugged

You can mount the camera, or hold it in your hand, without blocking any particular camera's view. You can also control the device over Bluetooth or Wi-Fi via an application running on a wirelessly tethered mobile device. Each battery's roughly 2.5-hour operating life translates to approximately 16 GBytes' worth of captured panorama video, spread among the 8 GBytes' worth of integrated flash memory and additional mass storage available via the unit's microSD expansion connector.

High-definition video frames 720 pixels tall, recorded at up to 60 fps, are 4,600 pixels wide, accounting for camera-to-camera overlap of each 1,280-pixel-wide camera image (optional single-camera operation is also supported). Similarly, the alternative 1,080-pixel-tall panorama images, captured at up to 30 fps, are 6,900 pixels wide, due to overlap of each 1,920-pixel-wide source image. CENTR's use of the industry-standard H.264 video format means that the "raw" panorama video footage can be easily imported into an editing program such as Final Cut Pro or Premiere, for example, or uploaded to YouTube.
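The frame widths quoted above imply how much of each camera's image is shared with its neighbors. The short calculation below is a rough reading of those numbers, assuming that overlap alone accounts for the difference between the summed source widths and the panorama width, and that it is split evenly across the four seams of the ring. The function name is hypothetical, and any cropping or warping done by the real pipeline is ignored.

```python
def overlap_summary(source_width, panorama_width, num_cameras=4):
    """Return (summed source width, total shared pixels, shared pixels per seam)."""
    total = source_width * num_cameras
    shared = total - panorama_width
    # A closed 360-degree ring has as many seams as it has cameras.
    per_seam = shared / num_cameras
    return total, shared, per_seam


for label, src, pano in (("720p", 1280, 4600), ("1080p", 1920, 6900)):
    total, shared, per_seam = overlap_summary(src, pano)
    share = 100.0 * per_seam / src
    print(f"{label}: {total} px captured, {shared} px shared, "
          f"{per_seam:.0f} px per seam ({share:.1f}% of each frame)")
```

Under those assumptions both modes share roughly a tenth of each source frame per seam (130 of 1,280 pixels and 195 of 1,920 pixels), so the two sets of figures are consistent with each other.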

More elaborate interactive playback scenarios, such as zooming in to a portion of the panorama clip or panning from side to side across it, require a dedicated player. A Flash-based version is currently available in public beta; you can see examples of footage captured with prototype units on CENTR's website. In viewing them, you'll notice that the device supports only horizontal stitching, not vertical (i.e. spherical), which was a conscious design decision. As the FAQ notes:

CENTR has investigated spherical and hemispherical camera arrays. For the initial product, we felt that the additional field of view reduced image quality to an unacceptable level. The additional amount of HD image data, and the increased number of pixels that need to be stitched together created very high image processing requirements that would not have been possible on such a small, light device.

Our beta testers have used CENTR prototypes in a wide variety of applications to create some incredible footage. In the vast majority of cases, CENTR's panoramic video allowed testers to create incredible video experiences and said an additional camera would not have added much value. We acknowledge there are some applications where spherical video may have value, but CENTR is not able to provide that functionality in this product.

An HTML5 player for non-Flash playback scenarios (such as Apple iOS- and, more recently, Google Android-based devices) is under development.

The Kickstarter offering, according to Banta and Alioshin, is primarily intended for developers and production houses (such as National Geographic and Red Bull, both of which are already working with the company), and will be available in November in limited quantities (~500). General production is currently scheduled for next February, priced at $399.

As impressive as CENTR's product offering is when viewed standalone, it becomes even more so when you consider it in the context of Banta and Alioshin's prior project, done with the U.S. Army, part of the Department of Defense. That product also consisted of a 360-degree camera array, combined with a head-mounted display to view the resultant panorama image. But in that particular case, a standalone Mac Pro workstation handled the stitching function, with data transfer from the cameras to it (and from it back to the display) taking place over a proprietary wireless network.

In contrast, only a short time later, that same stitching function is now taking place within the camera, and on a fingernail-sized sliver of silicon, at low cost and with long battery life. Truly CENTR is a case study showcase of the Embedded Vision Alliance's overview observations:

Computer vision is the use of digital processing and intelligent algorithms to interpret meaning from images or video. Computer vision has mainly been a field of academic research over the past several decades. Today, however, a major transformation is underway. Due to the emergence of very powerful, low-cost, and energy-efficient processors, it has become possible to incorporate practical computer vision capabilities into a wide range of embedded systems, mobile devices, PCs and the cloud.

If you're a hardware or software product creator interested, like CENTR, in incorporating practical computer vision intelligence into your next-generation electronic systems, I encourage you to attend the next Embedded Vision Summit, a day-long technical education forum taking place May 29 at the Santa Clara (California) Convention Center. The Summit's comprehensive program encompasses hour-long keynotes from technology experts at Facebook and Google, two tracks' worth of sixteen total technical presentations, and technology demonstrations from nearly two dozen Alliance member companies, along with two prior-day in-depth technical workshops. For more information, including online registration, please see the event area of the Alliance website. I'll see you there!