Computer vision and computational photography are intrinsic aspects of the Internet of Things (IoT), where cameras and sensors reign supreme. This article provides an overview of how computer vision technology is transforming the most critical markets in 2016: mobile, automotive, security and surveillance, and drones.

Mobile

The digital cameras in smartphones are among their most highlighted and differentiating features. For many consumers, the smartphone’s rear camera is their only camera, which creates an expectation of image quality comparable to (if not better than) that of a point-and-shoot camera. The front-facing camera used for “selfies” has also become central to a significant social media trend.

2014 marked the unveiling of several smartphones with dual front and/or rear cameras. The HTC One M8, for example, introduced in February 2014, featured a rear camera containing two sensors with resolutions of 4 Mpixels and 2 Mpixels, along with a 5 Mpixel front-facing camera. Why use dual sensors in the primary (rear) camera? The secondary sensor acts as a kind of rangefinder. Its job is to create a depth map of the scene, which allows the user to (for example) add high-quality rendered effects such as background blur (the bokeh effect), or to refocus images after the shot is taken. It can even enable 3D-like photos containing an extra depth layer, visible when the phone is tilted.

HTC One M8 bokeh effect using dual-sensor rear camera
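Depth maps like the one described above are commonly computed by stereo triangulation. The sketch below assumes the standard rectified-stereo model, in which depth is Z = f·B/d for focal length f (in pixels), baseline B between the two sensors (in metres) and disparity d (in pixels). The function names, camera parameters and blur-threshold rule are hypothetical; the source does not describe HTC's actual pipeline.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Rectified-stereo depth: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        return float("inf")
    return focal_px * baseline_m / disparity_px


def bokeh_mask(disparity_map, focal_px, baseline_m, focus_depth_m, tolerance_m):
    """Hypothetical helper: True where a pixel lies outside the in-focus depth band
    and should therefore receive synthetic background blur."""
    return [
        [abs(depth_from_disparity(d, focal_px, baseline_m) - focus_depth_m) > tolerance_m
         for d in row]
        for row in disparity_map
    ]


if __name__ == "__main__":
    # Assumed camera parameters, for illustration only.
    focal_px, baseline_m = 1000.0, 0.02
    disparities = [[20.0, 20.0, 4.0],
                   [20.0, 0.0, 4.0]]
    # 20 px -> 1.0 m (in focus), 4 px -> 5.0 m (blur), 0 px -> infinity (blur)
    print(bokeh_mask(disparities, focal_px, baseline_m, focus_depth_m=1.0, tolerance_m=0.25))
    # prints [[False, False, True], [False, True, True]]
```

Because Z is inversely proportional to d, a one-pixel disparity error matters far more for distant objects than for near ones, which is one reason a short-baseline phone camera produces only coarse depth for the background.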

Since then, many other smartphones have followed the dual-sensor rear camera trend, with even higher resolutions.

Dual-sensor rear camera lineup launched since 2014

In September 2015, LG introduced the V10 smartphone, equipped with dual 5 Mpixel sensors in its front-facing camera. This two-lens setup allows the phone, for example, to combine two images with a smart algorithm to create a wide-angle “selfie” shot without the need for a “selfie stick”. A single sensor delivers standard 80-degree pictures, while using both sensors results in 120-degree panoramic snapshots.

The LG V10’s dual 5 Mpixel front-facing sensors enable group “selfie” capabilities
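As a rough illustration, assuming the two 80-degree fields of view simply sit side by side with some overlap (the source does not describe the actual lens geometry), the 120-degree result implies about 40 degrees of shared coverage. That shared region is what a stitching algorithm can use to align the two images:

$$\theta_{\text{combined}} = \theta_1 + \theta_2 - \theta_{\text{overlap}} \;\Rightarrow\; \theta_{\text{overlap}} = 80^\circ + 80^\circ - 120^\circ = 40^\circ$$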

Industry observers speculate that the upcoming Samsung Galaxy S7 and iPhone 7 might also include dual-sensor front camera hardware capable of both still image and video capture. However, wide-angle video poses significant real-time challenges: the two image streams must be registered and combined, and lens distortion must be corrected, for every frame.

More general computational photography challenges include video stabilization, hybrid (optical plus digital) zoom, low-light performance, and crisp resolution. Mobile consumers expect DSLR-equivalent visual quality from their camera phones, including fast autofocus, full HD video capture, and multi-frame processing at 30 fps or even 60 fps. These features will dramatically increase the required processing power, which must be balanced against the need to keep power consumption low.
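To put these frame rates in perspective, a back-of-the-envelope calculation for full HD (1920 × 1080) video gives the time budget per frame and the pixel throughput each processing stage must sustain. The figures ignore overheads such as multi-frame buffering and are purely illustrative:

$$t_{\text{frame}} = \frac{1}{30\ \text{fps}} \approx 33.3\ \text{ms}, \qquad t_{\text{frame}} = \frac{1}{60\ \text{fps}} \approx 16.7\ \text{ms}$$

$$1920 \times 1080 = 2\,073\,600\ \text{pixels/frame}, \qquad 2\,073\,600 \times 60 \approx 124.4 \times 10^{6}\ \text{pixels/s}$$

Multi-frame processing that fuses several input frames per output frame multiplies this input load accordingly.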

Automotive

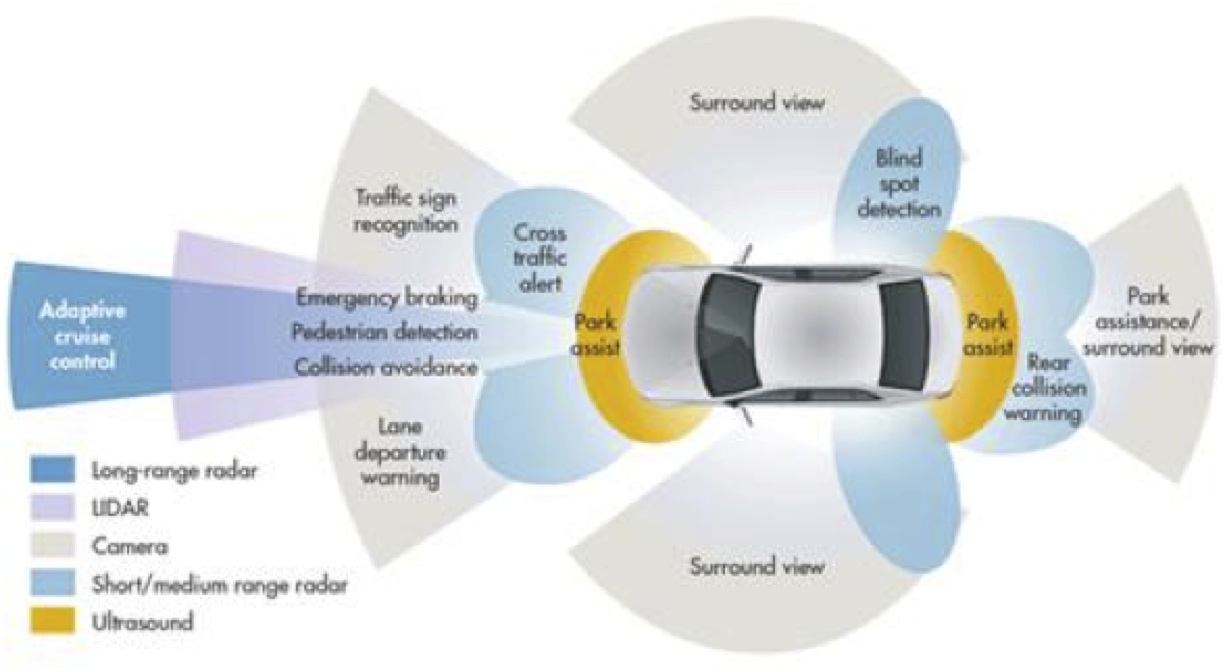

Automotive electronics is the second-largest growth market for computer vision chips after mobile. A dramatic increase in safety requirements is a driving factor in the adoption of advanced driver assistance systems (ADAS). Governments around the world are also introducing regulations that require additional safety hardware in vehicles.

Cameras mounted outside the car, for example, could help prevent collisions with pedestrians. In-cabin cameras can monitor driver behavior (detecting whether he or she is tired or drowsy, for example) or children’s activities in the back seat. Car insurance companies are promoting a camera-centric vehicle ecosystem so that they can base coverage decisions on objective, recorded evidence.

Cars in the near future will contain numerous camera sensors

Unsurprisingly, therefore, technologies like ADAS could lead to as many as ten always-on cameras in cars in the near future. These cameras and their associated local processors will need to be smart enough to make efficient decisions in real time; they can’t afford to send the data to the “cloud” and wait for a response. And these always-on systems must also be highly energy-efficient.
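A quick calculation shows why a cloud round trip is a problem. Assuming, purely for illustration, a vehicle speed of 100 km/h and a 100 ms round-trip delay to a remote server (neither figure comes from this article), the car travels close to three metres before any response arrives:

$$v = \frac{100\,000\ \text{m}}{3600\ \text{s}} \approx 27.8\ \text{m/s}, \qquad d = v \cdot t \approx 27.8\ \text{m/s} \times 0.1\ \text{s} \approx 2.8\ \text{m}$$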

Cameras inside the vehicle can also perform face recognition: drivers will not be able to start the engine unless they pass face recognition authentication. Then there is autonomous driving, which will require many smart cameras. Self-driving vehicles are still some way off, but the technology behind them is already enhancing safety and convenience in today’s driving experience, largely through the introduction of smart cameras.

Security and Surveillance

Security and surveillance devices are time- and mission-critical. They often can’t wait for a response from the costly “cloud”, so there is an increasing need to make cameras smarter and reduce reliance on bulky, expensive monitoring rooms. Furthermore, computer vision tasks such as motion detection must be performed intelligently, which makes it imperative to move video analytics from the “cloud” to the camera itself. Fortunately, camera processing is becoming cheaper as well as more capable, improving the ability to respond in real time with high accuracy.
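On-camera motion detection can start from something as simple as frame differencing: compare each new frame with the previous one and flag motion when enough pixels have changed. The sketch below is a minimal, hypothetical illustration in pure Python; the function names and both threshold values are assumptions, and production analytics typically add background modelling, noise filtering and object classification on top.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of 0-255 intensities


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Fraction of pixels whose absolute intensity change exceeds pixel_threshold."""
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev: Frame, curr: Frame,
                    pixel_threshold: int = 25, area_threshold: float = 0.01) -> bool:
    """Flag motion when more than area_threshold of the frame has changed.
    Both thresholds are illustrative defaults, not tuned values."""
    return changed_fraction(prev, curr, pixel_threshold) > area_threshold
```

The per-pixel threshold suppresses sensor noise, while the area threshold ignores isolated changed pixels; only when both are exceeded does the camera need to do anything more expensive.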

It’s worth noting that security cameras are moving toward 4K resolution, so it will become increasingly impractical to store all of the data they capture while operating 24 hours a day. Storage and analysis are both expensive “cloud” propositions. Hence, the smart camera will record only when triggered by irregular behavior, further reducing data storage costs.
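The storage pressure is easy to quantify. Assuming, for illustration only, a compressed 4K stream of 15 Mbit/s (real bitrates vary widely with codec and scene content) and motion present 10% of the time, a single camera produces:

$$15 \times 10^{6}\ \text{bit/s} \times 86\,400\ \text{s/day} = 1.296 \times 10^{12}\ \text{bit/day} \approx 162\ \text{GB/day (continuous recording)}$$

$$162\ \text{GB/day} \times 0.10 \approx 16.2\ \text{GB/day (event-triggered recording)}$$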

Drones

Drones are becoming smarter by the day. However, they must stabilize their video against the vibration caused by the drone’s motors. Drones currently use gimbals to compensate for vibration, and these accessories cost more than $200. Alternatively, a robust computer vision solution with additional processing can implement video stabilization in real time, eliminating the cost of the gimbal. Moreover, computer vision enables drones to recognize objects in software, giving them the situational awareness needed to avoid collisions.
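Software stabilization generally works in two stages: estimate how far each frame has moved relative to the previous one, then smooth the accumulated camera path and shift each frame toward the smoothed path. The sketch below assumes purely translational shake and uses exhaustive block matching plus a centred moving average; real stabilizers typically also model rotation and rolling-shutter effects. All function names are hypothetical and do not describe any particular product's implementation.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale frame as rows of pixel intensities


def estimate_shift(prev: Frame, curr: Frame, max_shift: int = 3) -> Tuple[int, int]:
    """Whole-frame block matching: return (dy, dx) such that curr[y][x] best matches
    prev[y - dy][x - dx], searching |dy|, |dx| <= max_shift (max_shift < frame size)."""
    h, w = len(prev), len(prev[0])
    best: Optional[Tuple[float, int, int]] = None  # (mean absolute difference, dy, dx)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total = count = 0
            # Compare only the pixels where both frames overlap under this shift.
            for y in range(max(0, dy), min(h, h + dy)):
                for x in range(max(0, dx), min(w, w + dx)):
                    total += abs(curr[y][x] - prev[y - dy][x - dx])
                    count += 1
            if count == 0:
                continue
            cost = total / count
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best[1], best[2]


def stabilizing_corrections(shifts: List[Tuple[int, int]],
                            window: int = 5) -> List[Tuple[float, float]]:
    """Accumulate per-frame shifts into a camera path, smooth it with a centred
    moving average, and return the (dy, dx) offset to apply to each frame so that
    it follows the smoothed path instead of the jittery one."""
    path = []
    y = x = 0
    for dy, dx in shifts:
        y += dy
        x += dx
        path.append((y, x))
    half = window // 2
    corrections = []
    for i, (py, px) in enumerate(path):
        lo, hi = max(0, i - half), min(len(path), i + half + 1)
        sy = sum(p[0] for p in path[lo:hi]) / (hi - lo)
        sx = sum(p[1] for p in path[lo:hi]) / (hi - lo)
        corrections.append((sy - py, sx - px))
    return corrections
```

For a steady pan, the interior corrections are zero, so deliberate camera motion is preserved while high-frequency jitter is damped. The window length trades smoothness against latency, since a centred average needs half a window of future frames before it can correct the current one.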

Consider, too, the “follow me” and “point of interest” features offered in advanced drones such as the DJI Phantom 3, which boasts 4K video capture and image enhancement capabilities. According to a recent study from BCC Research, the global drone market is expected to grow from $639.9 million in 2014 to $725.5 million in 2015, and further to $1.2 billion by 2020.
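The BCC Research figures imply the following growth rates, using the standard compound annual growth rate (CAGR) formula. These are derived calculations, not figures quoted in the study:

$$\frac{725.5}{639.9} - 1 \approx 13.4\%\quad\text{(2014 to 2015)}$$

$$\text{CAGR}_{2015 \to 2020} = \left(\frac{1200}{725.5}\right)^{1/5} - 1 \approx 10.6\%\ \text{per year}$$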

Drone features such as “follow me” leverage sophisticated computer vision algorithms

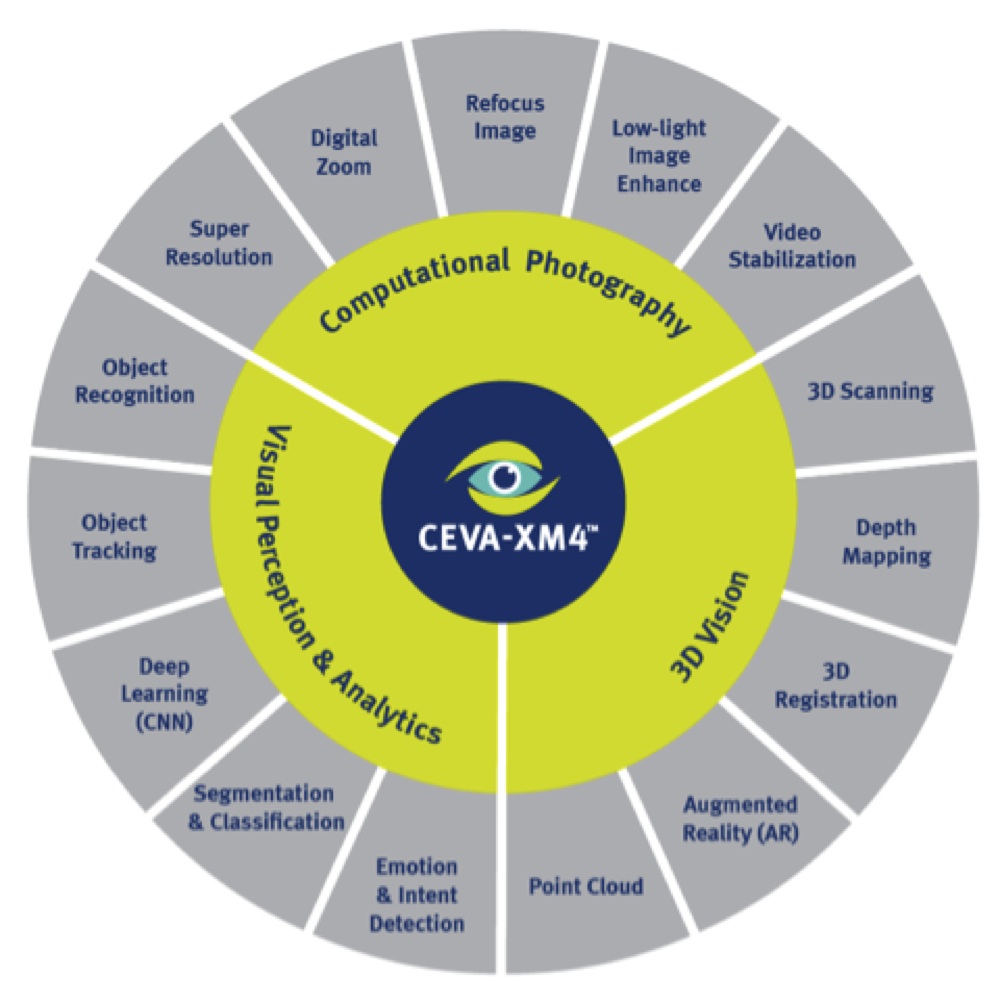

The CEVA-XM4: Profile of a Vision Processor

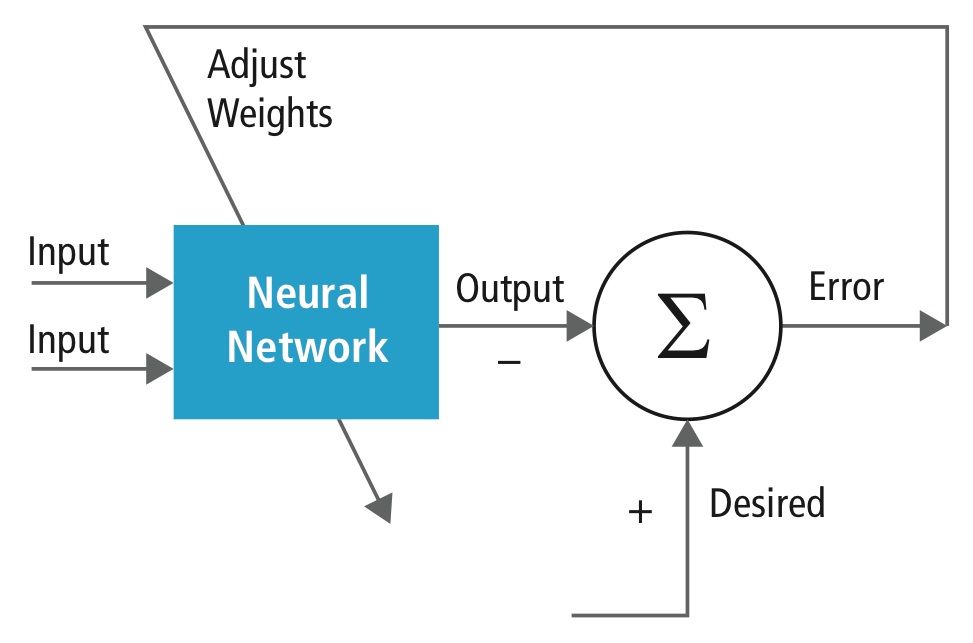

The growth markets outlined above clearly show the need for a dedicated vision DSP that can carry out computer vision tasks rapidly and power-efficiently. The CEVA-XM4 intelligent vision processor was developed from the ground up for computer vision applications such as video stabilization, digital zoom, super resolution and post-capture image refocus. It takes advantage of pixel overlap in image processing by reusing the same data to produce multiple outputs, which enhances processing performance, reduces power consumption, saves external memory bandwidth, and frees system buses for other tasks.
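The benefit of exploiting pixel overlap can be illustrated with a 3×3 box filter: horizontally adjacent output pixels share six of their nine input pixels, so a naive implementation fetches most inputs repeatedly. The sketch below counts input reads for a naive version and for one that computes each 3-pixel column sum once per output row and reuses it across three neighbouring outputs. It is a generic, hypothetical illustration of data reuse, not a description of how the CEVA-XM4 hardware implements it.

```python
from typing import List, Tuple

Image = List[List[int]]


def box3_naive(img: Image) -> Tuple[Image, int]:
    """3x3 box sum over the valid region, reading all 9 inputs for every output."""
    h, w = len(img), len(img[0])
    reads = 0
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            s = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    s += img[y + dy][x + dx]
                    reads += 1
            out[y - 1][x - 1] = s
    return out, reads


def box3_reuse(img: Image) -> Tuple[Image, int]:
    """Same result, but each vertical 3-pixel column sum is computed once per
    output row and shared by the three outputs whose windows overlap it."""
    h, w = len(img), len(img[0])
    reads = 0
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for y in range(1, h - 1):
        cols = []
        for x in range(w):
            cols.append(img[y - 1][x] + img[y][x] + img[y + 1][x])
            reads += 3
        for x in range(1, w - 1):
            out[y - 1][x - 1] = cols[x - 1] + cols[x] + cols[x + 1]
    return out, reads
```

For a 1920-pixel-wide row, that is 9 × 1918 = 17,262 reads per output row for the naive version versus 3 × 1920 = 5,760 with reuse, roughly a 3x reduction in memory traffic. With wider kernels the ratio grows (about 5x for a 5×5 box filter).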

CEVA-XM4 enables multi-app processing for gesture and face detection, emotion detection and eye tracking

XM4 is, as its name suggests, CEVA’s fourth-generation imaging and vision processor IP core, and brings embedded systems closer than ever to human vision and visual perception. The CEVA-XM4 vision processor offers up to an 8x performance gain and 2.5x power savings, compared to the firm’s previous vision core.

For more information on CEVA’s computer vision technology and products, please see:

- CEVA-XM4 product page

- CEVA-XM4 white paper

- CEVA-XM4 blog post “Computer Vision One Step Closer to Human Vision”

By Liran Bar

Director of Product Marketing, CEVA