Computer vision technology is complementing GPS sensors in supporting autonomous flying features such as object tracking, environment sensing, and collision avoidance. However, 4K video (now a must-have feature for image capture) and other functions such as 3D depth map creation pose significant computational challenges for the high-quality image- and video-processing pipelines in the electronics of visually intelligent drones.

While computer vision is clearly a dominant aspect of the design evolution of such flying robots, it remains algorithmically demanding, requiring significant intelligence and analytical capabilities in order for drones to understand what they are filming. Software algorithms are continuously improving to address vision applications' increasing computational complexity, which in turn calls for flexible hardware platforms that can optimally leverage these improvements.

Computer vision can make up for the lack of operator training or even understanding of the rules of airspace, thereby ensuring that drones don't, for example, knock out power for hundreds of West Hollywood residents or collide with helicopters. The twisted portrayal of delivery drones in a recent Audi commercial further underlines the hidden dangers in the brave new world of these flying machines in the sky.

This dark side of drone technology's market takeoff clearly highlights the imperative to further expand the devices' autonomous capabilities so that they don't become a danger to society. Also, an increase in drones' functionality will open up new opportunities in the commercial arena. According to a study from BCC Research, released in September 2015, the global drone market is expected to grow from $639.9 million in 2014 to $725.5 million in 2015. The drone market is further expected to grow to $1.2 billion by 2020 with a compound annual growth rate (CAGR) of 11.4 percent during the forecast period of 2015-2020.

Collision Avoidance Systems

A comprehensive collision avoidance system is now essential to prevent drones from flying into everything from power lines to trees and windmills. And in the near future, there may be as many as 10,000 drones flying over a city on a given day. Therefore, drones not only need to avoid flying into buildings, trees and commercial aircraft, they also need to avoid hitting other drones. These requirements all entail the recognition of objects through complex software algorithms, subsequently achieving situational awareness in order to avoid collisions with other objects.
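As a rough illustration of the situational-awareness step, once two objects have been detected and tracked, a drone could estimate when and how closely they will pass each other. The sketch below is a minimal closest-point-of-approach check that assumes both objects keep a constant velocity; the `Track` type, the function names, and the 5 m safety radius and 10 s look-ahead horizon are hypothetical choices for illustration, not part of any particular drone's software.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Position (m) and velocity (m/s) of a tracked object in a shared 3D frame."""
    position: tuple
    velocity: tuple


def closest_approach(own, other):
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    # Position and velocity of the other object relative to our drone.
    rel_p = [o - s for s, o in zip(own.position, other.position)]
    rel_v = [o - s for s, o in zip(own.velocity, other.velocity)]
    speed_sq = sum(v * v for v in rel_v)
    if speed_sq == 0.0:
        # No relative motion: the separation never changes.
        t = 0.0
    else:
        # Minimise |rel_p + rel_v * t|; clamp to t >= 0 because only the future matters.
        t = max(0.0, -sum(p * v for p, v in zip(rel_p, rel_v)) / speed_sq)
    distance = math.sqrt(sum((p + v * t) ** 2 for p, v in zip(rel_p, rel_v)))
    return t, distance


def needs_avoidance(own, other, safety_radius_m=5.0, horizon_s=10.0):
    """Flag a conflict if the objects pass within the safety radius soon enough."""
    t, d = closest_approach(own, other)
    return t <= horizon_s and d < safety_radius_m


# Example: two drones flying head-on at the same altitude.
me = Track(position=(0.0, 0.0, 10.0), velocity=(5.0, 0.0, 0.0))
intruder = Track(position=(50.0, 0.0, 10.0), velocity=(-5.0, 0.0, 0.0))
print(needs_avoidance(me, intruder))
```

A real system would also have to handle uncertainty in the tracked positions and velocities, which this constant-velocity sketch ignores.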

Computer vision enables drones to sense and avoid obstacles

The software can detect objects of interest, track these objects frame by frame, and carry out the necessary analysis to recognize and appropriately respond to their behavior. Here, depth information based on the data captured from two stereo cameras can be used to construct 3D depth maps in order to avoid collisions more precisely.
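The core arithmetic behind a stereo depth map can be sketched with the standard pinhole-camera relation Z = f · B / d, where d is the disparity (the horizontal pixel shift of the same point between the two images), f is the focal length in pixels, and B is the distance between the cameras. The example below assumes a rectified stereo pair and a disparity map that has already been computed by a matching algorithm; the function names and the 700-pixel focal length and 0.1 m baseline are illustrative assumptions.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for one pixel, or None where no valid match was found."""
    if disparity_px <= 0:
        # Zero or negative disparity means the point is at infinity or unmatched.
        return None
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparity_map, focal_length_px, baseline_m):
    """Convert a 2D list of disparities (pixels) into a 2D list of depths (metres)."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparity_map
    ]


# Example with assumed camera parameters: nearby objects have large disparities.
disparities = [
    [35.0, 35.0, 7.0],
    [35.0, 0.0, 7.0],
]
for row in depth_map(disparities, focal_length_px=700.0, baseline_m=0.1):
    print(row)
```

Because depth is inversely proportional to disparity, depth resolution degrades quickly with distance, which is one reason a wider camera baseline helps for long-range obstacle detection.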

Moreover, since flying objects such as remote-control drones move around in open 3D space, the video they capture and analyze requires stabilization across all six degrees of freedom: translation along the three axes (up-down, right-left, and forward-backward) plus rotation about them (pitch, roll, and yaw, that is, tilting forward or backward, tilting sideways, and turning about the vertical axis).
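To make the rotational part concrete, the sketch below builds a 3×3 rotation matrix from roll, pitch, and yaw angles and shows that applying its transpose undoes the rotation, which is the basic operation a stabilizer needs in order to counter-rotate a frame. It assumes one common convention (roll about x, pitch about y, yaw about z, composed as yaw · pitch · roll); other conventions exist, and the function names are illustrative.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transpose(m):
    """Transpose of a 3x3 matrix; for a rotation matrix this is also its inverse."""
    return [list(col) for col in zip(*m)]


def rotate(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


# Example: the camera is disturbed by a small unwanted rotation, then corrected.
shake = rotation_matrix(roll=0.02, pitch=-0.01, yaw=0.03)
point = (1.0, 2.0, 3.0)
disturbed = rotate(shake, point)
restored = rotate(transpose(shake), disturbed)
print(disturbed)
print(restored)
```

The three translational degrees of freedom would be handled separately, by subtracting the unwanted displacement after the rotation has been corrected.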

Dual-Camera Setups Enable New Markets

Drones are now capable of serving myriad new markets, from mining to agriculture to construction. For example, drones can be used to track oil deliveries, measure the speed of vehicles, and locate leaks in oil and gas pipelines. To do so, however, drones need to sense the surrounding environment, identify objects, and respond to situations in an instant.

Computer vision-equipped drones can evaluate crop-growth progress

Inevitably, drones will require a number of improvements in order to fulfill their potential in these new markets. For a start, drones have to be made easier to operate, which requires features like depth map support in order to ensure a safe takeoff, flight, and landing. Some drone companies already offer object tracking for "Follow Me" and "Point of Interest" features in various outdoor applications.

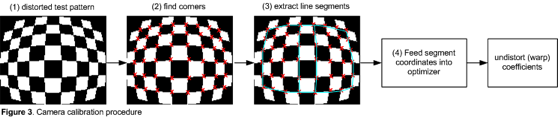

It's also worth remembering that most drones use CMOS sensors to record video, leading to "rolling shutter" effects when capturing fast-moving objects. Wide-angle lenses also require real-time lens distortion correction. Consider, too, the inevitable "shake" in the video due to factors such as the drone motor and wind; solutions such as gimbals for vibration damping, along with various isolation approaches, come at a not-insignificant cost. A viable alternative engineering solution to these issues involves real-time digital video stabilization and other enhanced image-processing features, leveraging dedicated hardware accelerators.
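One common way to approach digital video stabilization is to estimate the camera's motion from frame to frame, smooth the resulting camera path, and shift each frame by the difference between the smoothed and the measured path. The sketch below shows only the path-smoothing step for a single axis, using a simple moving average; it assumes the per-frame motion estimates are already available, and the function names and window radius are illustrative. It is not a description of any specific product's stabilizer.

```python
def moving_average(values, radius):
    """Smooth a sequence with a centred window, shrinking the window at the edges."""
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - radius)
        hi = min(len(values), i + radius + 1)
        window = values[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


def stabilizing_offsets(frame_motions, radius=2):
    """Per-frame correction (same units as the input) that removes jitter.

    frame_motions[i] is the estimated camera displacement between frame i-1
    and frame i along one axis, for example in pixels.
    """
    # Accumulate frame-to-frame motion into an absolute camera path.
    path = []
    total = 0.0
    for motion in frame_motions:
        total += motion
        path.append(total)
    # The smoothed path keeps intentional movement and drops high-frequency shake.
    smooth = moving_average(path, radius)
    return [s - p for s, p in zip(smooth, path)]


# Example: a steady pan of 2 px per frame with +/-1 px of alternating shake.
motions = [3.0, 1.0, 3.0, 1.0, 3.0, 1.0]
print(stabilizing_offsets(motions, radius=1))
```

A full pipeline would repeat this for each axis and for rotation, then warp every frame by its offsets, which is the kind of per-pixel workload that benefits from a dedicated accelerator.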

Moreover, a two-camera setup might allow drones to switch between daylight and thermal video streams. Such a drone can, for example, fly over a parking lot and find cars that still have hot engines. It can then scan their license plates for payment or security purposes.
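As a simplified illustration of the parking-lot example, the thermal stream can be treated as a 2D grid of temperatures, and hot spots can be found by thresholding and grouping neighbouring hot pixels. The sketch below does this with a plain flood fill; the function name, the 60 °C threshold, and the tiny sample grid are hypothetical, and a real system would work on calibrated sensor data at much higher resolution.

```python
def find_hot_regions(thermal, threshold_c):
    """Return centroid and size of each 4-connected region at or above threshold_c."""
    if not thermal:
        return []
    rows, cols = len(thermal), len(thermal[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or thermal[r][c] < threshold_c:
                continue
            # Flood-fill outward from this hot pixel to collect the whole region.
            stack = [(r, c)]
            seen[r][c] = True
            pixels = []
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and thermal[ny][nx] >= threshold_c):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            centroid = (
                sum(p[0] for p in pixels) / len(pixels),
                sum(p[1] for p in pixels) / len(pixels),
            )
            regions.append({"centroid": centroid, "size": len(pixels)})
    return regions


# Example: a small grid (degrees Celsius) with two warm engines on cool asphalt.
frame = [
    [20, 20, 20, 20, 20],
    [20, 70, 72, 20, 20],
    [20, 20, 20, 20, 65],
    [20, 20, 20, 20, 20],
]
for region in find_hot_regions(frame, threshold_c=60):
    print(region)
```

The centroids could then be mapped to the matching location in the daylight stream, where the license plate would be read.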

The Battery Conundrum

These new features will inevitably require more battery power, which is already a "hanging sword" over the future of drones. The battery life of a drone is generally limited to 15 to 30 minutes of flying time; this figure needs to improve significantly if drones are to reach a wider spectrum of applications effectively.

The greater emphasis on image capture and vision processing will lead to more advanced camera subsystems, thereby placing greater demands on drone batteries. A DSP (digital signal processor) solution can handle significant compute workloads for image and video processing while consuming much less power and die area on a chip, because it supports an ISA (instruction set architecture) tailored for specific applications, such as aerial photography or 4K video post-processing.
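The effect of processing power on flight time can be estimated with simple arithmetic: flight time is roughly the usable battery energy divided by the total power draw. The sketch below compares two hypothetical vision subsystems; every number in it (a 60 Wh battery, 150 W for propulsion, 10 W versus 1 W for vision processing) is an assumption chosen for illustration rather than a measurement of any real drone or processor.

```python
def flight_time_minutes(battery_wh, propulsion_w, vision_w):
    """Approximate flight time, ignoring battery ageing, reserve, and wind."""
    total_w = propulsion_w + vision_w
    if total_w <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh / total_w * 60.0


# Hypothetical figures, for illustration only.
BATTERY_WH = 60.0
PROPULSION_W = 150.0

baseline = flight_time_minutes(BATTERY_WH, PROPULSION_W, vision_w=10.0)
efficient = flight_time_minutes(BATTERY_WH, PROPULSION_W, vision_w=1.0)
print(f"10 W vision subsystem: {baseline:.1f} min")
print(f" 1 W vision subsystem: {efficient:.1f} min")
print(f"difference: {efficient - baseline:.1f} min")
```

Under these assumptions propulsion dominates the budget, so the gain per flight is modest; the saving matters more as additional cameras and heavier algorithms are added, or on smaller drones where the motors draw less.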

CEVA's XM4 outperforms GPUs in vision processing applications, consuming 10% of the power and 5% of the die area

Take the CEVA-XM4 vision processor, for example, which has been designed from the ground up to run complex imaging and vision algorithms in a battery-efficient manner. The XM4 is a DSP-plus-memory subsystem IP core that boasts a vision-oriented low-power instruction set along with a programmable wide vector architecture, multiple simultaneous scalar units, and support for both fixed- and floating-point math.

The CEVA-XM4 imaging and vision DSP, which is fully optimized for convolutional neural network (CNN) layers and comes with software libraries and APIs, can facilitate the design of autonomous drones by enabling object detection and recognition features, thus helping drones to avoid collisions and ensure safe landings. CEVA's Deep Neural Network (CDNN) software framework complements the XM4 processor core by easing the migration of pre-trained deep learning networks from frameworks such as Caffe.

Find out more about computer vision system design and applications in CEVA's white paper on the XM4 intelligent vision processor. CEVA's webinar about implementing machine vision in embedded systems, which includes a "deep dive" into CDNN, is also available.

By Liran Bar

Director of Product Marketing, Imaging & Vision DSP Core Product Line, CEVA.

Liran has more than fifteen years of experience in the imaging semiconductor industry. He holds a B.Sc. in Electrical Engineering from Ben-Gurion University.