This news post was originally published at NVIDIA's website. It is reprinted here with the permission of NVIDIA.

It just got a whole lot easier to add complex AI and deep learning abilities to intelligent machines.

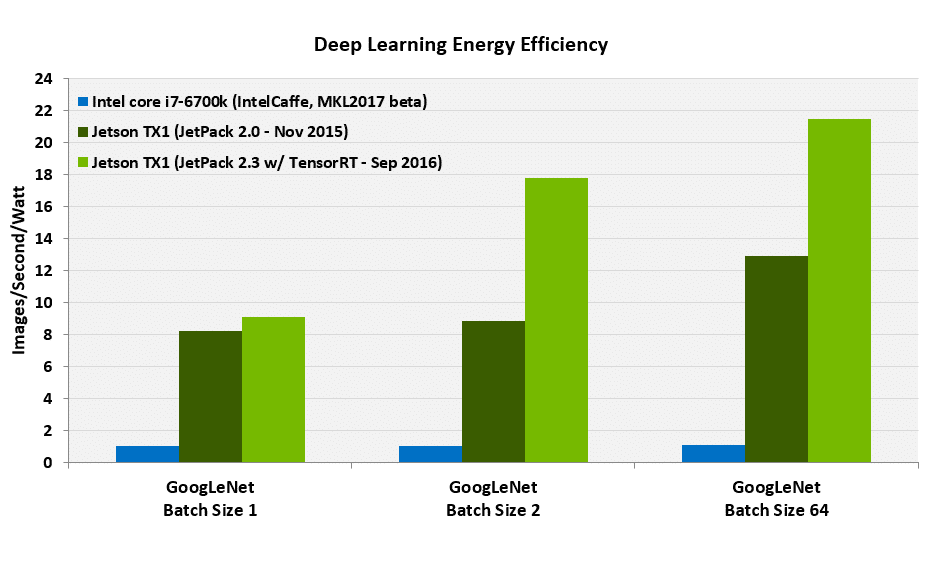

All it takes is NVIDIA JetPack 2.3, available for free download today. Our latest software suite of developer tools and libraries for the Jetson TX1 takes the world’s highest-performance platform for deep learning on embedded systems and makes it twice as fast and efficient.

The ability to run complex deep neural networks is key for intelligent machines to solve real-world problems in important areas such as public safety, smart cities, manufacturing, disaster relief, agriculture, transportation and infrastructure inspection.

All-in-One Package

The JetPack 2.3 all-in-one package bundles and installs all system software, tools, optimized libraries and APIs, and provides examples so developers can quickly get up and running with their innovative designs.

Key features in this release include:

- TensorRT: Formerly known as GIE, TensorRT is a deep learning inference engine that maximizes runtime performance for applications such as image classification, segmentation and object detection. This enables developers to deploy real-time neural networks on Jetson. It offers double the deep learning performance of previous implementations based on cuDNN.

- cuDNN 5.1: A CUDA-accelerated library for deep learning that provides highly tuned implementations for standard routines such as convolutions, activation functions and tensor transformations. Support for advanced network models such as LSTM and RNN has also been included in this release.

- Multimedia API: A package of low-level APIs ideal for flexible application development, including:

  - Camera API: Per-frame control over camera parameters and EGL stream outputs that allow efficient interoperation with GStreamer and V4L2 pipelines. This camera API gives developers lower-level access to connect camera sensors over MIPI CSI.

  - V4L2 API: Video decode, encode, format conversion and scaling functionality. V4L2 for encode opens up low-level access for features such as bit rate control, quality presets, low-latency encode, temporal tradeoff and motion vector maps. The GStreamer implementation from previous releases is also supported.

- CUDA 8: The latest release adds host compiler support for GCC 5.x, and the NVCC CUDA compiler has been optimized for up to 2x faster compilation. CUDA 8 also includes nvGRAPH, an accelerated library for graph analytics. New APIs for half-precision floating point computation have also been added to CUDA kernels and to the cuBLAS and cuFFT libraries.
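
For readers unfamiliar with half precision, the short Python sketch below shows what storing a value as a 16-bit float means for size and rounding. It is not CUDA code and does not use any NVIDIA API. The helper `to_fp16` and the sample value are assumptions made purely for illustration, using Python's standard `struct` module.

```python
import struct

def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # The 'e' format code packs a float into 2 bytes (binary16),
    # so a pack/unpack round trip applies half-precision rounding.
    return struct.unpack("<e", struct.pack("<e", value))[0]

# Storage cost per value in bytes.
fp16_bytes = struct.calcsize("<e")
fp32_bytes = struct.calcsize("<f")

# Hypothetical sample value, chosen only for illustration.
sample = 0.1
rounded = to_fp16(sample)
relative_error = abs(rounded - sample) / sample

print(f"FP16 uses {fp16_bytes} bytes per value; FP32 uses {fp32_bytes}")
print(f"{sample} stored as FP16 becomes {rounded!r}")
print(f"relative rounding error: {relative_error:.1e}")
```

Half precision halves the memory per value compared with FP32, at the cost of a small rounding error on each stored number.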

New Partnership

Additionally, NVIDIA is working with Leopard Imaging Inc., a Jetson Preferred Partner that specializes in the creation of camera solutions such as stereo depth mapping for embedded machine vision applications, cameras to enable intelligent machines, and consumer products.

Developers can work with Leopard Imaging to easily integrate multiple RAW image sensors while using NVIDIA’s internal on-chip ISPs or external ISPs via CSI or USB interfaces, in addition to the ISP bypass imaging modes available today for YUV sensors. The new camera API that’s part of JetPack 2.3 offers enhanced functionality to ease this integration.

Download JetPack 2.3 today. Take a deeper technical dive on JetPack with TensorRT on our developer blog.

Chart footnotes:

- The efficiency was measured using the methodology outlined in the whitepaper.

- Jetson TX1 efficiency was measured at a GPU frequency of 691 MHz.

- Intel Core i7-6700k efficiency was measured at a 4 GHz CPU clock.

- GoogLeNet batch size was limited to 64, as that is the maximum that could run with JetPack 2.0. With JetPack 2.3 and TensorRT, GoogLeNet batch size 128 is also supported for higher performance.

- FP16 results for Jetson TX1 are comparable to FP32 results for Intel Core i7-6700k as FP16 incurs no classification accuracy loss over FP32.

- Latest publicly available software versions of IntelCaffe and MKL2017 beta were used.

- For JetPack 2.0 and Intel Core i7, non-zero data was used for both weights and input images. For JetPack 2.3 (TensorRT), real images and weights were used.
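
The FP16 footnote above can be made more concrete with a toy Python sketch. It rounds a set of class scores to half precision and checks that the top-ranked class stays the same. The scores and the helpers `to_fp16` and `top1` are made up for illustration. This does not reproduce NVIDIA's measurement. It only shows why rounding errors that are small relative to the gaps between scores would not be expected to change a prediction.

```python
import struct

def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

def top1(scores):
    """Return the index of the highest score (the predicted class)."""
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical classifier outputs for four classes (not measured data).
fp32_scores = [0.0213, 0.7421, 0.1987, 0.0379]
fp16_scores = [to_fp16(s) for s in fp32_scores]

# Largest change introduced by rounding any single score to FP16.
max_shift = max(abs(a - b) for a, b in zip(fp32_scores, fp16_scores))

print("FP32 top-1 class:", top1(fp32_scores))
print("FP16 top-1 class:", top1(fp16_scores))
print(f"largest score shift from rounding: {max_shift:.1e}")
```

In this toy case the rounding shift is far smaller than the gap between the best and second-best scores, so the predicted class does not change.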