
Dear Colleague,

TensorFlow has become a popular framework for creating machine learning-based computer vision applications, especially for the development of deep neural networks (DNNs). If you’re planning to develop computer vision applications using deep learning and want to understand how to use TensorFlow to do it, then don’t miss an upcoming full-day, hands-on training class organized by the Embedded Vision Alliance: Deep Learning for Computer Vision with TensorFlow. It takes place in Hamburg, Germany next week, on September 7. Learn more and register at https://tensorflow.embedded-vision.com.

Also consider attending the Alliance’s upcoming free webinar “Efficient Processing for Deep Learning: Challenges and Opportunities,” on September 28 at 10 am Pacific Time. DNN algorithms are very computationally demanding. To enable DNNs to be used in practical applications, it’s therefore critical to find efficient ways to implement them. This webinar explores how DNNs are being mapped onto today’s processor architectures, and how both DNN algorithms and specialized processors are evolving to enable improved efficiency. It is presented by Dr. Vivienne Sze, Associate Professor of Electrical Engineering and Computer Science at MIT (www.rle.mit.edu/eems). Sze concludes with suggestions on how to evaluate competing processor solutions in order to address your application and design requirements.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


How to Choose a 3D Vision Technology

Designers of autonomous vehicles, robots, and many other systems are faced with a critical challenge: which 3D perception technology to use? There is a wide variety of sensors on the market, employing modalities including passive stereo, active stereo, time of flight, 2D and 3D lasers, and monocular approaches. There is no perfect sensor technology and no perfect sensor, but there is always a sensor that best aligns with the requirements of your application; you just need to find it. This talk from Chris Osterwood, Chief Technical Officer at Carnegie Robotics, provides an overview of 3D sensor technologies and their capabilities and limitations, based on his company’s experience selecting the right 3D technology and sensor for a diverse range of autonomous robot designs. Osterwood describes a quantitative and qualitative evaluation process for 3D sensors, including testing processes using both controlled environments and field testing, and some surprising characteristics and limitations that Carnegie Robotics has uncovered through that testing.

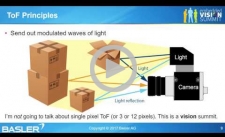

Time of Flight Sensors: How Do I Choose Them and How Do I Integrate Them?

3D digitalization of the world is becoming more important. This additional dimension of information allows more real-world perception challenges to be solved in a wide range of applications. Time-of-flight (ToF) sensors are one way to obtain depth information, and several time-of-flight sensors are available on the market. In this talk, Mark Hebbel, Head of New Business Development at Basler, examines the strengths and weaknesses of ToF sensors. He explains how to choose them based on your specifications, and where to get them. He also briefly discusses things you should watch out for when incorporating ToF sensors into your systems, along with the future of ToF technology.


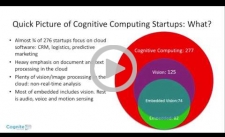

The Vision AI Start-ups That Matter Most

In this presentation, Chris Rowen of Cognite Ventures shares his unique perspective on innovation in embedded vision and artificial intelligence (AI). Included in Rowen’s talk is a discussion of the relationship between cognitive computing and embedded vision, along with his insights into overall trends and case study examples of new hardware for embedded vision and AI.

Vision Tank Competition Finalist Presentations

Adam Rowell, CTO of Lucid VR, Nitsa Einan, VP of Business Development at Imagry, Anthony Ashbrook, Founder and CEO of Machines with Vision, Grace Tsai, Founding Engineer at PerceptIn, and Grégoire Gentil, Founder of Always Innovating, deliver their Vision Tank finalist presentations at the May 2017 Embedded Vision Summit. The Vision Tank introduces companies that incorporate visual intelligence in their products in an innovative way and that are looking for investment, partnerships, technology, and customers. In a lively, engaging, and interactive format, these companies compete for awards and prizes and benefit from the feedback of an expert panel of judges: Nik Gagvani, President of CheckVideo; Vin Ratford, Executive Director of the Embedded Vision Alliance; Dave Rosenberg, Managing Director at GE Ventures; and John Feland, CEO of Argus Insights.


TensorFlow Training Class: September 7, 2017, Hamburg, Germany

AutoSens Conference Brussels: September 19-21, 2017, Autoworld, Brussels, Belgium

Synopsys ARC Processor Summit: September 26, 2017, Santa Clara, California

Embedded Vision Alliance Webinar – Efficient Processing for Deep Learning: Challenges and Opportunities: September 28, 2017, 10:00 am PT

