This blog post was originally published at videantis' website. It is reprinted here with the permission of videantis.

“This was the first AutoSens show ever, but it sure didn’t show,” I wrote last year in my AutoSens show report. A second successful AutoSens show was held in Detroit in May, and now the who’s who in the world of self-driving cars gathered again at the stunning Autoworld car museum in Brussels — what better place to talk about technology that will transform the automotive industry than among 350 unique cars that represent the past 100 years of automobiles?

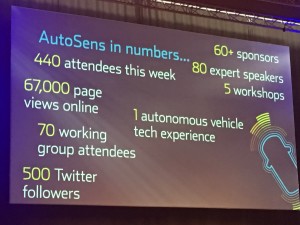

To give you a good feel for its size, here’s the conference in numbers:

- 440 attendees (330 last year)

- 80 speakers

- 45 exhibitors (20 last year)

- 70 work group attendees (IEEE P2020 automotive image quality)

- 3 parallel tracks of talks

Good energy attracts good people, and the show grew again. The show’s organizer, Robert Stead, told me that he would rather not grow it much further, though. It’s about quality, not quantity, and again the conference didn’t disappoint there.

Self-driving cars need to know what’s going on around and inside them, which means the cars have many high-quality, fast and powerful intelligent sensors. The show primarily focuses on those key sensors, whether they are image sensors, radar, ultrasonic, or lidar, and the intensive processing of the data gathered by them. More generic topics in the field of developing intelligent sensing solutions for self-driving cars were also tackled. But before the 2-day conference began, there were 2 days of well-attended workshops.

Day 1 was consumed by meetings of the IEEE P2020 working group, which specifies ways to measure and test automotive image quality. This ensures we all speak the same language, ensures consistency, and creates cross-industry reference points, benefiting us all. The workgroup was formed a bit over a year ago and although the topic is complex, there’s been great progress.

Day 2 saw 5 half-day workshops on diverse topics such as understanding the image colour pipeline by industry luminary Prof Albert Theuwissen, the role of AI for autonomous vehicles, developing software for heterogeneous systems, vehicle cybersecurity, and human factors in designing self-driving vehicles.

The following two days were filled with 3 parallel tracks of talks, so it was hard to see all the presentations. A healthy mix of analysts, OEMs, Tier 1s, semiconductor companies, standardization organizations and software vendors gave talks. New this year was the AutoSens Awards evening, in which prizes were awarded to recognize those that made extraordinary contributions to automotive high-tech.

The presentations were filled with many interesting bits of information. EuroNCAP said that from next year on no car can achieve a 5-star safety rating without having both radar and camera on board. They also mentioned that one in four cars sold today has AEB. Woodside Capital showed that cameras and radar are by far the largest automotive sensor markets, and that cameras are growing fastest. Lyft said that 95% of our transportation needs can be served with autonomous car rides, saving 90% of cost.

We presented a talk titled “Intelligent sensing requires a flexible vision processing architecture” where we highlighted how you can design a vision processor that is able to run the computer vision and imaging tasks that extract meaningful information from the stream of pixels coming from a camera. We’ll post a link to the slides and video once they’re available.

Last year we wrote in our report that we learned five things from the show: that self-driving cars are hard, deep learning is hard, image quality is hard, we need more sensors, and that surround view systems are replacing rear view. All those things are still true, but the industry’s focus has shifted somewhat. One year later, here are this year’s “5 lessons learned at AutoSens”.

- The devil is in the detail

Mighty AI spoke about performance labeling services to generate training data for segmentation tasks. But how to draw the bounding polygon in case vehicles are partially occluded? And should we include the vehicle’s antennas or can we ignore them since they’re not “structural features”?

In previous years, speakers were talking about keeping the car between the lanes. Now we’re not simply talking about staying in lanes, but anticipating little potholes in the road. And recognizing the difference between a red jerry can and a plastic bag, since in each case the vehicle’s response needs to be very different.

Both Nissan and the SWOV Institute for Road Safety spoke about how our autonomous vehicles need to understand the intent of other road users. When a passenger gets out on the sidewalk side, maybe the driver is also likely to get out? When there’s a ball in the road, can the car anticipate a child will likely come running after it?

And these are just a few examples where it’s all about the nitty-gritty.

- No one sensor to rule them all

The industry seems to be clearly heading in the direction of using 5 key sensors: ultrasonic, image sensors, time-of-flight, radar and lidar. And within those categories there are many variants. For radar there are short, mid, and long-range systems; for lidar there are also short-range and long-range systems. And even a simple rear-view camera is quite a complex system that includes a lens, image sensor, ISP, computer vision subsystem, and compression for storage or transmission. Cameras can have different focal lengths, resolutions, frame rates, etc. There are so many options, each with its own benefits and trade-offs. There seems to be no trend to standardize on specific sensor configurations. There’s no single sensor that’ll cover all use cases. On the contrary, there are more sensors coming, each more specialized for its task. Fusion then combines the information from the different sensors. This is the way forward for now, as nobody is talking about doing it the way we humans do it, using only two cameras.

- No bold predictions

The only bold prediction I heard at the conference came from Lyft in response to a question from the audience. Alex Starns said he expects that Lyft will make a self-driving car service available to the public in 3-4 years. The service should give the same user experience as today, just without a driver in the car. But that was the only one. Yole Développement, a market research & strategy company that focuses on sensors, said that by 2045, 70% of all vehicles sold will integrate autonomous capabilities. By 2050, 5% of all vehicles sold should be Level-5-ready. Quite a different message.

Otherwise, nobody dared to make predictions, and even posing the trillion-dollar question of “when” was frowned upon. Everyone seems clearly convinced it’s hard to make a Level 5 car.

- Besides driving itself, what will an autonomous car really be like?

While the conference has a clear focus on the sensor side of things, quite a few talks showed how our self-driving cars will become quite different from our current vehicles. Motion sickness may become a bigger issue for instance. Anything we can do to alleviate that problem? And what if we want the car to go faster than the speed limit, or temporarily need to break some traffic rules? And will we order different vehicles in case we want to sleep, work, eat, or party? Once the car drives itself, everything will change: business models, insurance, the vehicle’s interior, exterior, and the way we use them.

- Deep learning a must-have tool for everyone

Of course deep learning algorithms are often used for detection, classification and segmentation of image data. But deep learning is also rapidly becoming a tool that’s used for many other tasks. There were examples of neural nets being used for lens corrections, processing high-resolution map data, implementing driving policies, helping with the labeling task itself, and even studying ethical aspects of self-driving cars.

Conclusion

The automotive and transportation industries are very large markets that are transforming rapidly. It’s been another year, and the industry has kept moving forward quickly. Progress is being made in all areas: the sensors, the algorithms, and the embedded processors that implement them at low power and low cost. We’re excited to be part of this transformation and to be one of the leaders that are delivering a crucial visual processing component to this market.

The next AutoSens show is scheduled to take place in May 2018 in the Detroit area. We’re looking forward to meeting everyone there!

Please don’t hesitate to contact us to learn more about our unified vision/video processor and software solutions.

Picture courtesy of Sven Fleck, Smartsurv.

By Marco Jacobs

Vice President of Marketing, videantis