| LETTER FROM THE EDITOR |

|

Dear Colleague,

The Embedded Vision Alliance is performing research to better understand what types of technologies are needed by product developers who are incorporating computer vision in new systems and applications. To help guide suppliers in creating the technologies that will be most useful to you, please take a few minutes to fill out this brief survey. As our way of saying thanks for completing it, you’ll receive $50 off an Embedded Vision Summit 2018 2-Day Pass. Plus, you'll be entered into a drawing for one of several cool prizes. The deadline for entries is November 20, 2017. Please fill out the survey here.

The Embedded Vision Summit is the preeminent conference on practical computer vision, covering applications from the edge to the cloud. It attracts a global audience of over one thousand product creators, entrepreneurs and business decision-makers who are creating and using computer vision technology. The Embedded Vision Summit has experienced exciting growth over the last few years, with 98% of 2017 Summit attendees reporting that they’d recommend the event to a colleague. The next Summit will take place May 22-24, 2018 in Santa Clara, California. The deadline to submit presentation proposals is November 10, 2017. For detailed proposal requirements and to submit proposals, please visit https://www.embedded-vision.com/summit/2018/call-proposals. For questions or more information, please email [email protected].

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance

|

| IMAGE SENSOR FUNDAMENTALS |

|

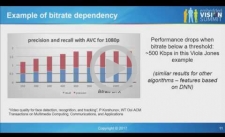

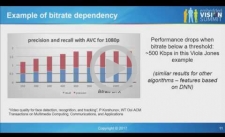

How Image Sensor and Video Compression Parameters Impact Vision Algorithms

Recent advances in deep learning algorithms have brought automated object detection and recognition to human accuracy levels on various datasets. But algorithms that work well on an engineer’s PC often fail when deployed as part of a complete embedded system. In this talk, Ilya Brailovskiy, Principal Engineer at Amazon Lab126, examines some of the key embedded vision system elements that can degrade the performance of vision algorithms. For example, in many systems video is compressed, transmitted, and then decompressed before being presented to vision algorithms. Not surprisingly, video encoding parameters, such as bit rate, can have a significant impact on vision algorithm accuracy. Similarly, image sensor parameters can have a profound effect on the nature of the images captured, and therefore on the performance of vision algorithms. Brailovskiy explores how image sensor and video compression parameters impact vision algorithm performance, and discusses methods for selecting the best parameters to aid vision algorithm accuracy.
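As a rough illustration of the kind of effect described above, the following toy sketch coarsens pixel values and checks how often a trivial detector still agrees with its result on the original data. It is not taken from the talk: floor quantization is only a crude stand-in for the loss a real video codec introduces at lower bit rates, and the names `quantize`, `detect_bright`, and `agreement`, the threshold, and the sample pixel values are all assumptions chosen for illustration.

```python
def quantize(pixels, step):
    """Coarsen 8-bit pixel values down to multiples of `step`.

    Assumption: this only mimics the information loss of lossy coding;
    it is not how any real video encoder works.
    """
    return [(p // step) * step for p in pixels]


def detect_bright(pixels, threshold=100):
    """Toy 'vision algorithm': flag pixels at or above a brightness threshold."""
    return [p >= threshold for p in pixels]


def agreement(reference, degraded):
    """Fraction of positions where two detection results match."""
    matches = sum(r == d for r, d in zip(reference, degraded))
    return matches / len(reference)


# Illustrative sample values, not measured data.
pixels = [10, 60, 120, 125, 130, 135, 200, 250]
reference = detect_bright(pixels)

# Sweep the quantization step and record agreement with the reference result.
scores = {
    step: agreement(reference, detect_bright(quantize(pixels, step)))
    for step in (1, 16, 64)
}
for step, score in scores.items():
    print(f"step={step:3d} agreement={score:.2f}")
```

In this toy case, detections near the threshold are the first to flip as quantization gets coarser. A real evaluation would instead sweep actual encoder settings and measure accuracy on a labeled dataset.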

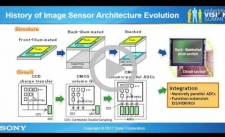

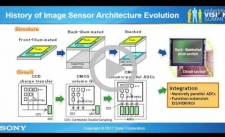

Image Sensor Formats and Interfaces for IoT Applications

Image sensors provide the essential input for embedded vision. Hence, the choice of image sensor format and interface is critical for embedded vision system developers. In this talk, Tatsuya Sugioka, Imaging System Architect at Sony Corporation, explains the capabilities, advantages and disadvantages of common image sensor formats and interfaces (for example, sub-LVDS and MIPI) through quantitative and qualitative comparisons. Sugioka also explores how different image sensor formats and interfaces support advanced features such as high dynamic range imaging.

|

| AUTOMOTIVE VISION SYSTEM DESIGN |

|

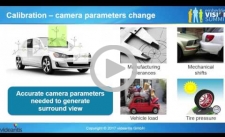

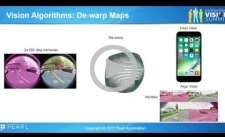

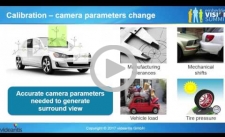

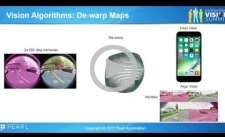

Computer-vision-based 360-degree Video Systems: Architectures, Algorithms and Trade-offs

360-degree video systems use multiple cameras to capture a complete view of their surroundings. These systems are being adopted in cars, drones, virtual reality, and online streaming systems. At first glance, these systems wouldn’t seem to require computer vision, since they’re simply presenting images that the cameras capture. But even relatively simple 360-degree video systems require computer vision techniques to geometrically align the cameras – both in the factory and while in use. Additionally, differences in illumination between the cameras cause color and brightness mismatches, which must be addressed when combining images from different cameras. Computer vision also comes into play when rendering the captured 360-degree video. For example, some basic automotive systems provide only a top-down view, but more sophisticated systems enable the driver to select the desired viewpoint. In this talk, Marco Jacobs, VP of Marketing at videantis, explores the challenges, trade-offs and lessons learned while developing 360-degree video systems, with a focus on the crucial role that computer vision plays in these systems.
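One simple way to picture the brightness-mismatch problem is gain compensation over the region where two cameras overlap. The sketch below is a minimal illustration, not the method presented in the talk: it assumes grayscale 8-bit samples from the shared region of two hypothetical cameras A and B, and the function names and sample values are made up for the example.

```python
from statistics import mean


def brightness_gain(overlap_a, overlap_b):
    """Gain to apply to camera B so its overlap mean matches camera A's."""
    mean_b = mean(overlap_b)
    if mean_b == 0:
        raise ValueError("camera B overlap region is completely black")
    return mean(overlap_a) / mean_b


def apply_gain(pixels, gain, max_value=255):
    """Scale pixel values by `gain`, clamping to the valid 8-bit range."""
    return [min(max_value, round(p * gain)) for p in pixels]


# Illustrative samples of the same scene region as seen by each camera.
overlap_a = [100, 120, 140, 160]
overlap_b = [80, 96, 112, 128]  # camera B renders the region darker

gain = brightness_gain(overlap_a, overlap_b)
corrected_b = apply_gain(overlap_b, gain)
print(gain, corrected_b)
```

A single global gain is the simplest possible model. Production systems typically also need per-channel color correction and blending across the seam, which this sketch does not attempt.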

Designing a Vision-based, Solar-powered Rear Collision Warning System

Bringing vision algorithms into mass production requires carefully balancing trade-offs between accuracy, performance, usability, and system resources. In this talk, Aman Sikka, Vision System Architect at Pearl Automation, describes the vision algorithms along with the system design challenges and trade-offs that went into developing a wireless, solar-powered, stereo-vision backup camera product.

|

| UPCOMING INDUSTRY EVENTS |

|

Vision Systems Design Webinar – Embedded and Mobile Vision Systems – Developments and Benefits: October 24, 2017, 8:00 am PT

Thundersoft Embedded AI Technology Forum: November 3, 2017, Beijing, China

Consumer Electronics Show: January 9-12, 2018, Las Vegas, Nevada

Embedded Vision Summit: May 22-24, 2018, Santa Clara, California

More Events

|

| FEATURED NEWS |

|

8K Camera from XIMEA with 48 Mpix and Up to 30 fps Now Released

CEVA and Brodmann17 Partner to Deliver 20 Times More AI Performance for Edge Devices

Intel and Mobileye Offer Formula to Prove Safety of Autonomous Vehicles

FotoNation Announces Collaboration with DENSO on Image Recognition Technology

New Basler Video Recording Software Available for the Basler PowerPack for Microscopy

More News

|