| LETTER FROM THE EDITOR |


Dear Colleague,

Keeping up with the rapid pace of vision technology advances can be overwhelming. Accelerate your learning curve and uncover practical techniques in computer vision by attending some of our 80+ "how-to" sessions at the 2018 Embedded Vision Summit, taking place May 21-24 in Santa Clara, California. You'll learn about the latest applications, techniques, technologies and opportunities in computer vision and deep learning. Register now to save 15% on your pass with our Early Bird Discount. Just use promo code NLEVI0327 when you register online. Also note that entries for the premier Vision Product of the Year awards, to be presented at the Summit, are now being accepted.

On March 28 at 11 am ET (8 am PT), Embedded Vision Alliance Founder Jeff Bier will conduct a free webinar, "Embedded Vision at the Edge and in the Cloud: Architectures, Algorithms, Processors, and Tools," in partnership with Vision Systems Design. Bier will discuss the benefits and trade-offs of edge, cloud, and hybrid vision processing models, and when you should consider each option. He will also provide an update on important recent developments in the technologies enabling vision, including processors, sensors, algorithms, development tools, services and standards, and will highlight some of the most interesting and promising end products and applications incorporating vision capabilities. For more information and to register, see the event page. Bier will also deliver this presentation in person on April 11 at 7 pm PT at the monthly meeting of the IEEE Signal Processing Society in Santa Clara, California; for more information and to register, see that event's page.

Brian Dipert

Editor-in-Chief, Embedded Vision Alliance


| CAREER OPPORTUNITIES |


Positions Available for Computer Vision Engineers at DEKA Research

Inventor Dean Kamen founded DEKA to focus on innovations aimed at improving lives around the world. DEKA has deep roots in mobility: Kamen invented the Segway out of his work on the iBot wheelchair. Now DEKA is adding autonomous navigation to its robotic mobility platform. The company is leveraging advances in computer vision to address the challenges of autonomous navigation in urban environments, at speeds ranging from pedestrian to bicycle pace, on streets, bike paths, and sidewalks. DEKA is seeking engineers with expertise in all facets of autonomous navigation and practical computer vision – from algorithm development to technology selection to system integration and testing – who are passionate about designing, building, and shipping projects that have a positive, enduring impact on millions of people worldwide. Interested candidates should send a resume and cover letter to [email protected].

Also see the recent job posting from videantis on the Alliance website's discussion forums.


| DEEP LEARNING FOR VISION |


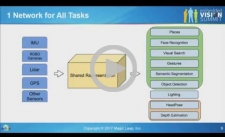

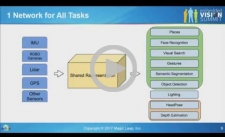

Performing Multiple Perceptual Tasks With a Single Deep Neural Network

As more system developers consider incorporating visual perception into smart devices such as self-driving cars, drones and wearable computers, attention is shifting toward the practical formulation and implementation of these algorithms. Here, the key challenge is deploying computationally demanding algorithms that achieve state-of-the-art results on platforms with limited computational capability and small power budgets. Visual perception tasks such as face recognition, place recognition and tracking are traditionally solved using multiple single-purpose algorithms. With this approach, power consumption increases as more tasks are performed. In this talk, Andrew Rabinovich, Director of Deep Learning at Magic Leap, introduces techniques for performing multiple visual perception tasks within a single learning-based algorithm. He also explores general-purpose model optimization to enable such algorithms to run efficiently on embedded platforms.
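Why a single network can save power is easiest to see with a generic pattern often called hard parameter sharing. One feature extractor (the backbone) runs once per input, and each perception task adds only a small task-specific head. This is a minimal sketch under stated assumptions, not Magic Leap's architecture, which the talk summary does not describe. The layer sizes and task names (`face_id`, `place_id`, `tracking`) are hypothetical, and fully connected layers stand in for convolutional ones so the example runs with the standard library only. Multiply-accumulate operations (MACs) serve as a rough proxy for compute and power.

```python
"""Minimal sketch of hard parameter sharing: one backbone, several task heads.

Layer sizes and task names are hypothetical; dense layers stand in for
convolutional layers so the example runs with the standard library only.
"""
import random
from typing import Dict, List


def relu(xs: List[float]) -> List[float]:
    return [max(0.0, x) for x in xs]


class Dense:
    """Fully connected layer y = Wx + b that also reports its MAC count."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out
        self.macs = n_in * n_out  # one multiply-accumulate per weight

    def __call__(self, x: List[float]) -> List[float]:
        return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(self.w, self.b)]


class MultiTaskNet:
    """Shared backbone evaluated once per input; each task adds only a small head."""

    def __init__(self, n_in: int, n_feat: int, heads: Dict[str, int], seed: int = 0) -> None:
        rng = random.Random(seed)
        self.backbone = Dense(n_in, n_feat, rng)
        self.heads = {name: Dense(n_feat, n_out, rng) for name, n_out in heads.items()}

    def __call__(self, x: List[float]) -> Dict[str, List[float]]:
        features = relu(self.backbone(x))  # computed once, reused by every task head
        return {name: head(features) for name, head in self.heads.items()}

    def macs(self) -> int:
        return self.backbone.macs + sum(h.macs for h in self.heads.values())


def separate_nets_macs(n_in: int, n_feat: int, heads: Dict[str, int]) -> int:
    """MACs if each task runs its own single-purpose backbone plus head."""
    return sum(n_in * n_feat + n_feat * n_out for n_out in heads.values())


if __name__ == "__main__":
    tasks = {"face_id": 128, "place_id": 64, "tracking": 4}  # hypothetical output sizes
    net = MultiTaskNet(n_in=1024, n_feat=256, heads=tasks)
    outputs = net([0.5] * 1024)
    print({name: len(out) for name, out in outputs.items()})
    print("shared:", net.macs(), "separate:", separate_nets_macs(1024, 256, tasks))
```

With these hypothetical sizes, the shared model needs 312,320 MACs per input, compared with 836,608 for three separate networks. The gap widens as tasks are added, because the backbone cost is paid only once.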

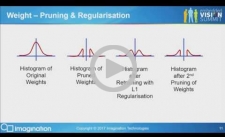

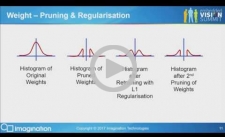

Training CNNs for Efficient Inference

The key challenges to successfully deploying CNNs in embedded markets are meeting their compute, bandwidth and power requirements. For mobile devices, the problem typically lies in inference, since training is currently handled offline. One approach to reducing inference cost is to take a trained network and use a tool to map it to a lower-cost representation, for example by reducing the precision of the weights. Better inference performance can be obtained if this cost reduction is integrated into the network training process. In this talk, Paul Brasnett, Principal Research Engineer at Imagination Technologies, explores some of the techniques and processes that can be used during training to optimize CNN inference performance, along with a case study illustrating the advantages of such an approach.
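The two approaches differ in one place: whether weights are quantized after training or inside the training loop. A toy linear model can illustrate this. The following is a sketch under assumptions and not the method from the talk. It assumes symmetric uniform weight quantization and a straight-through estimator, a common quantization-aware training technique in which the forward pass uses quantized weights while gradient updates are applied to full-precision copies. The 3-bit width, learning rate, and target weights are hypothetical illustration values, and the linear model stands in for a CNN layer.

```python
"""Sketch: post-training quantization vs. quantization-aware training (QAT).

A toy linear model y = w . x stands in for a CNN layer. Bit width, learning
rate, and target weights are hypothetical illustration values.
"""
import random
from typing import List, Tuple


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def fake_quantize(weights: List[float], bits: int) -> Tuple[List[float], float]:
    """Symmetric uniform quantization to integers in [-qmax, qmax], mapped back to floats."""
    if bits < 2:
        raise ValueError("symmetric quantization here needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights), 0.0  # all-zero weights are already exactly representable
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]
    return q, scale


def make_data(true_w: List[float], n_samples: int, seed: int):
    rng = random.Random(seed)
    xs = [[rng.uniform(-1.0, 1.0) for _ in true_w] for _ in range(n_samples)]
    ys = [dot(true_w, x) for x in xs]
    return xs, ys


def mse(w: List[float], xs, ys) -> float:
    return sum((dot(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, bits: int, epochs: int, lr: float, quantize_in_training: bool) -> List[float]:
    """Plain SGD on 0.5 * (pred - y) ** 2.

    With quantize_in_training=True, the forward pass uses quantized weights and the
    gradient is applied unchanged to the float weights (straight-through estimator).
    """
    w = [0.0] * len(xs[0])
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            w_fwd = fake_quantize(w, bits)[0] if quantize_in_training else w
            err = dot(w_fwd, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


if __name__ == "__main__":
    true_w = [0.9, -0.35, 0.12, 0.05]
    xs, ys = make_data(true_w, n_samples=200, seed=1)
    bits = 3
    w_float = train(xs, ys, bits, epochs=20, lr=0.05, quantize_in_training=False)
    w_qat = train(xs, ys, bits, epochs=20, lr=0.05, quantize_in_training=True)
    # Both models are deployed with quantized weights; compare their loss at inference.
    print("post-training quantization MSE:", mse(fake_quantize(w_float, bits)[0], xs, ys))
    print("quantization-aware training MSE:", mse(fake_quantize(w_qat, bits)[0], xs, ys))
```

Which variant ends up with the lower loss on this toy problem depends on the data and settings. The sketch only shows where quantization enters the training loop, so the optimizer can adapt the full-precision weights to the rounding they will encounter at inference time.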


| EXECUTIVE PERSPECTIVES |


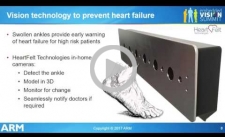

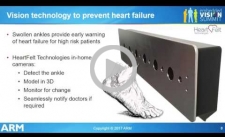

This Changes Everything — Why Computer Vision Will Be Everywhere

Computer vision, or teaching machines to see, will be revolutionary – it will change everything. Beyond the obvious markets where it is having an immediate impact, such as automotive and security, computer vision provides opportunities to improve the functionality of many classes of devices in numerous other markets such as smart buildings and smart cities – and to create completely new applications, products and services. For example, today there are awkward communication barriers between the digital world and the real world where we humans live. Along with speech recognition, speech synthesis and new imaging technologies, computer vision will knock down those barriers completely. Removing these barriers will make powerful devices unprecedentedly easy to use and open up new ways of using technology. This talk from Tim Ramsdale, former General Manager of the Imaging and Vision Group at Arm, gives a personal perspective on the impact of computer vision in diverse markets – from IoT and wearables, through mobile and automotive, right up to data centers – revealing some of the new business models being enabled along the way.

Vision for All?

So, you’ve decided to incorporate visual intelligence into your device or application. Will you need a team of computer vision PhDs working for years? Or will it simply be a matter of choosing the right off-the-shelf frameworks, modules and tools? The palette of available off-the-shelf computer vision software and algorithm resources is advancing fast. As a result, for some types of vision functionality, it’s increasingly practical for software developers who aren’t vision specialists to add it to their products. At the same time, there remain many use cases where development “from scratch” is required. In this talk, Jeff McVeigh, Vice President in the Software and Services Group and General Manager of visual computing products at Intel, shares his perspective on the state of vision software development today, where it’s heading, and the key opportunities and challenges in this realm for both novices and experts.


| UPCOMING INDUSTRY EVENTS |


Vision Systems Design Webinar, "Embedded Vision at the Edge and in the Cloud: Architectures, Algorithms, Processors, and Tools": March 28, 2018, 8 am PT (11 am ET)

IEEE Signal Processing Society Presentation, "Computer Vision at the Edge and in the Cloud: Architectures, Algorithms, Processors, and Tools": April 11, 2018, 7 pm PT, Santa Clara, California

Embedded Vision Summit: May 21-24, 2018, Santa Clara, California

More Events


| DEEP LEARNING FOR COMPUTER VISION WITH TENSORFLOW TRAINING CLASSES |