This market research report was originally published at Tractica's website. It is reprinted here with the permission of Tractica.

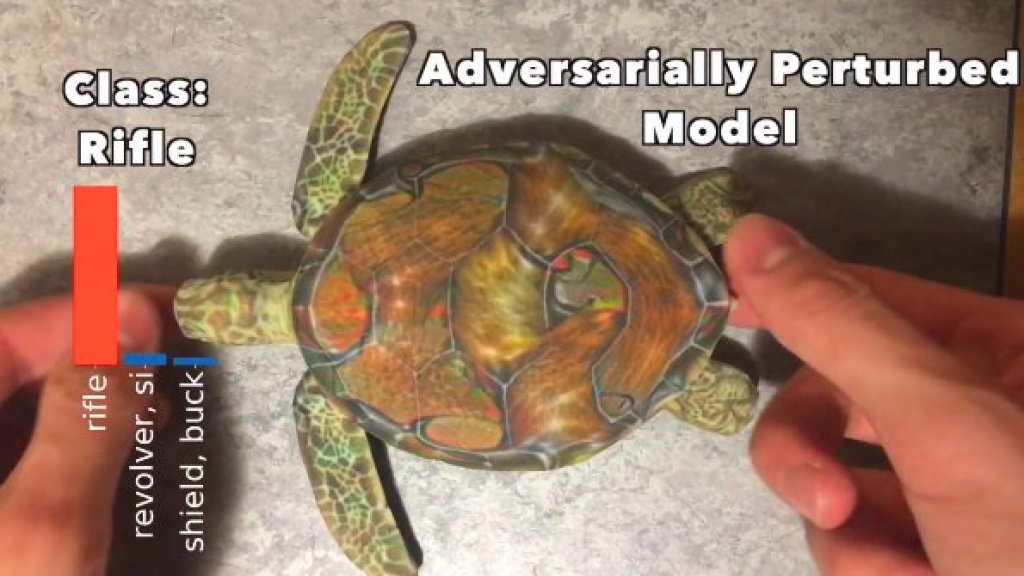

A recent paper by MIT students showed how easy it is to trick an artificial intelligence (AI)-based vision system into misclassifying 3D objects: a 3D-printed turtle was identified as a rifle. Using an adversarial algorithm to add carefully crafted noise to the input, the researchers tricked a deep learning system based on Google’s Inception-v3 image classifier into making an incorrect classification. There have been numerous earlier examples of adversarial attacks that fool classifiers with altered 2D images, but what made this paper significant was that the attack carries over to physical 3D objects viewed from different camera angles. In other words, real-world video imaging is now vulnerable to adversarial attacks. To humans, the adversarial images look the same as the originals, but for the computer vision system, changing a few pixels leads to completely different decisions. One of the most striking versions of an adversarial attack is the one-pixel attack, in which changing a single pixel can throw these systems off completely!

(Source: LabSix)
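To make the idea of “adding noise” concrete, here is a minimal sketch in Python of a gradient-based, white box perturbation in the style of the fast gradient sign method (FGSM). It is a swapped-in and much simpler technique than the one used in the MIT paper, and it runs against a hypothetical linear classifier rather than Inception-v3; the weights, input, bias, the `fgsm_linear` helper, and the “turtle”/“rifle” labels are all illustrative assumptions. No pixel moves by more than 2% of its range, yet the label flips.

```python
# Toy white-box perturbation in the style of FGSM.
# The linear "classifier" below is hypothetical; it is not Inception-v3.

N_FEATURES = 784  # hypothetical input size: a 28x28 grayscale image, flattened


def score(w, x, b):
    """Linear decision score w.x + b; a positive score means 'turtle'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, x, b):
    return "turtle" if score(w, x, b) > 0 else "rifle"


def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps):
    """Shift every pixel by at most eps to push the score toward 'rifle'.

    For a linear score the gradient with respect to x is simply w, so stepping
    against sign(w) lowers the score by eps * sum(|w_i|). Results are clipped
    to the valid pixel range [0, 1].
    """
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for wi, xi in zip(w, x)]


# Hypothetical weights and input: even-indexed pixels push toward "turtle",
# odd-indexed pixels push toward "rifle".
w = [0.01 if i % 2 == 0 else -0.01 for i in range(N_FEATURES)]
x = [0.6 if i % 2 == 0 else 0.4 for i in range(N_FEATURES)]
b = -0.7
eps = 0.02  # maximum change allowed per pixel

x_adv = fgsm_linear(w, x, eps)

if __name__ == "__main__":
    print("original: ", classify(w, x, b), round(score(w, x, b), 4))
    print("perturbed:", classify(w, x_adv, b), round(score(w, x_adv, b), 4))
    print("largest pixel change:", max(abs(a - o) for a, o in zip(x_adv, x)))
```

The attacker needs the weights `w` (the gradient) to choose the direction of each change, which is what makes this a white box attack. Because all 784 small changes push the score the same way, their effects add up even though no single pixel changes much.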

At one level, this shows how strange the inner workings of these neural network (NN) algorithms can be; in most cases, they look like a black box. Adversarial attacks on vision systems also come in “white box” and “black box” flavors, depending on whether the attacker has or lacks knowledge of the algorithm’s parameters, training method, network architecture, etc. White box adversarial attacks are the most dangerous: if the algorithm is trained on adversarial images, it is compromised from its inception, at the training stage itself.
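A black box attacker, by contrast, can only submit inputs and read back the predicted label. The Python sketch below searches for a single-pixel change that flips the label using label queries alone. It is a brute-force stand-in, not the optimization used in the published one-pixel attack, and the hidden model, the 4×4 image, the `one_pixel_search` helper, and the “stop sign”/“other” labels are hypothetical; against a real deep network, a single pixel is not guaranteed to succeed.

```python
# Toy black-box search: the attacker can only call predict(image) and read the label.
# Brute-force stand-in for a one-pixel attack; the hidden model is hypothetical.

_HIDDEN_WEIGHTS = [0.1] * 16  # hypothetical parameters the attacker never sees
_HIDDEN_BIAS = -0.77


def predict(image):
    """Black-box classifier for a flattened 4x4 image with pixel values in [0, 1]."""
    s = sum(w * p for w, p in zip(_HIDDEN_WEIGHTS, image)) + _HIDDEN_BIAS
    return "stop sign" if s > 0 else "other"


def one_pixel_search(predict_fn, image, candidates=(0.0, 1.0)):
    """Try single-pixel changes and return the first one that flips the label.

    Uses only label queries (no gradients, weights or architecture), which is
    what makes it a black-box attack. Returns (index, new_value, queries),
    or None if no single-pixel change flips the label.
    """
    original = predict_fn(image)
    queries = 1
    for i in range(len(image)):
        for value in candidates:
            if value == image[i]:
                continue  # not actually a change
            trial = list(image)
            trial[i] = value
            queries += 1
            if predict_fn(trial) != original:
                return i, value, queries
    return None


image = [0.5] * 16
result = one_pixel_search(predict, image)

if __name__ == "__main__":
    print("original label:", predict(image))
    if result is None:
        print("no single-pixel change flips the label")
    else:
        i, value, queries = result
        print(f"setting pixel {i} to {value} flips the label after {queries} queries")
```

`one_pixel_search` never reads `_HIDDEN_WEIGHTS`; it learns only from the labels `predict` returns, and it reports how many queries it spent, which is the main cost of a black box attack.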

Imagine the effects of an adversarial attack on a fleet of self-driving cars, a surveillance camera system in a city, or a fleet of autonomous drones delivering packages. Now imagine an attack on the cloud servers that store all that training data. Both scenarios could have catastrophic consequences, but in the white box attack, an entire system of machines could be brought to its knees. In all of these instances, the car, drone, or camera depends on the AI vision system to correctly identify a road sign, a drop-off location, or a face for the overall system to work correctly.

The Need to Protect Mission-Critical Systems

Adversarial attacks on vision systems are not limited to classifiers; they also target semantic segmentation, object recognition, and object detection. In the case of self-driving cars, these are mission-critical systems, and their future is in serious jeopardy unless they are protected against adversarial attacks.

A growing area of research is looking into thwarting such adversarial attacks by changing the algorithms themselves to make them more robust. There is also little consensus on the root causes of these vulnerabilities in NNs, with explanations ranging from the linear behavior of NNs to the low flexibility of classifier systems. The fact that one can throw these systems off by changing just one pixel is astonishing and mind-boggling, reminding us how little we understand about the current state of AI!
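The linearity explanation, put forward by Goodfellow et al. in 2015, can be made concrete with a short calculation. Assume, as a simplification, a linear score $w^\top x$ over $n$ input values and a perturbation $\eta$ that changes each input by at most $\epsilon$:

$$
w^\top (x + \eta) = w^\top x + w^\top \eta, \qquad \eta = \epsilon \, \operatorname{sign}(w), \qquad \|\eta\|_\infty = \epsilon
$$

$$
w^\top \eta = \epsilon \sum_{i=1}^{n} |w_i| = \epsilon \, \|w\|_1 = \epsilon \, m \, n, \qquad m = \frac{1}{n} \sum_{i=1}^{n} |w_i|
$$

No single input moves by more than $\epsilon$, yet the score shifts by an amount that grows linearly with the input size $n$. For a 299×299 RGB input, the resolution Inception-v3 uses, $n = 299 \cdot 299 \cdot 3 = 268{,}203$; with $\epsilon = 1/255$ (one intensity level of an 8-bit pixel) and a purely hypothetical average weight magnitude $m = 0.01$, the shift is $268{,}203 \times 0.01 / 255 \approx 10.5$, easily enough to cross a decision boundary. Real networks are not purely linear, so this is an intuition rather than a complete explanation.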

There is a definite need for continued investigations in this area that are systematic and aligned. Adversarial threats also keep advancing, with adversarial algorithms adopting “counter-counter” measures. It’s good to see competitions like the recent Kaggle competition at NIPS 2017, which pitted adversarial techniques against robust vision algorithms.

Addressing the Vulnerabilities of Artificial Intelligence

Overall, we have yet to fully understand the implications of adversarial vulnerabilities and how this will impact the growth of AI vision systems. Here are some thoughts on what some of those implications could be:

- Certain AI vision systems, like security cameras, will continue to see rapid rollout. In the case of surveillance cameras, we are already seeing widespread deployment of AI-based closed-circuit television (CCTV) cameras in China, which raises the question of whether, in some instances, we have already gone too far too quickly without realizing the consequences. This also includes satellite-based AI vision intelligence systems, where satellite images are used to create financial indexes or to extract business intelligence about oil reserves, agricultural crop yields, etc. Despite their mission-critical nature, these systems are likely to see less oversight around vulnerabilities, either because certain governments want to push them out sooner rather than later, or because there is a massive commercial gain to be had. Expect some of the first adversarial attacks to happen on these networks, with governments or corporations exposed for making the wrong decisions because of compromised AI.

- The regulatory aspect of such vulnerabilities is uncharted territory; however, there are some leading pointers. Self-driving cars and drones already have many regulatory hurdles to overcome, and this is another issue that will need to be tackled under the security stack. There is hope that such mission-critical, life-critical systems will have certain safeguards, making it much harder for a bad actor to launch an adversarial attack. This also raises questions about the robustness of AI vision algorithms and their readiness for the real world. The fact that one pixel could throw off a stop sign classifier in a self-driving car is a major cause for worry and suggests that Level 4 and Level 5 automation will come with a safety warning. Medical imaging is another area that would fall under the regulatory landscape, as no one wants a patient misdiagnosed because an X-ray database has been compromised by an adversarial attack.

- Human oversight of AI vision systems will increasingly be required to make sure these systems behave correctly. In cases like medical diagnosis, security, drone delivery, self-driving cars, insurance, crop yield, real-estate planning, etc., where critical business decisions are being made by AI algorithms, human oversight will most likely be implemented as either a legal or a regulatory requirement. For business decisions, expect human oversight to be a legal rather than a regulatory requirement. If you are worried about job losses through AI, “AI Oversight Worker” is a job title that will likely be much sought after in the years and decades to come. The most frequently asked questions for businesses will be, “Can you trust AI to make business or life-and-death decisions instead of a human?”, “Is it worth putting in human safeguards?”, and “How much risk are you willing to take if the AI system is compromised?”

Aditya Kaul

Research Director, Tractica