This blog post was originally published at NVIDIA's website. It is reprinted here with the permission of NVIDIA.

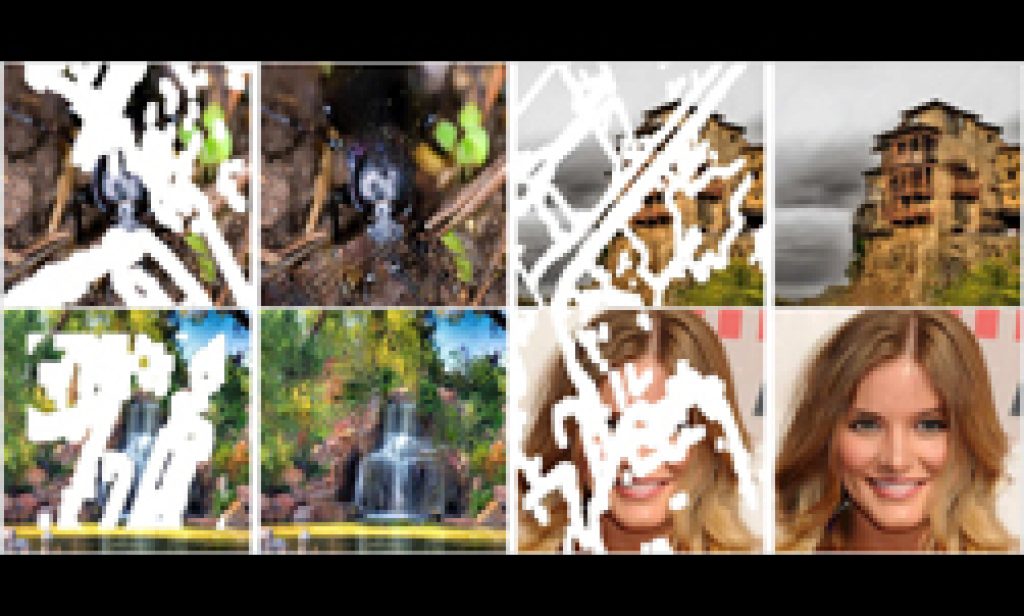

Researchers from NVIDIA, led by Guilin Liu, introduced a state-of-the-art deep learning method that can edit images or reconstruct a corrupted image, one that has holes or is missing pixels.

The method can also be used to edit images by removing content and filling in the resulting holes.

The method, which performs a process called “image inpainting”, could be implemented in photo editing software to remove unwanted content, while filling it with a realistic computer-generated alternative.

“Our model can robustly handle holes of any shape, size location, or distance from the image borders. Previous deep learning approaches have focused on rectangular regions located around the center of the image, and often rely on expensive post-processing,” the NVIDIA researchers stated in their research paper. “Further, our model gracefully handles holes of increasing size.”

To prepare to train their neural network, the team first generated 55,116 masks of random streaks and holes of arbitrary shapes and sizes for training. They also generated nearly 25,000 for testing. These were further categorized into six categories based on sizes relative to the input image, in order to improve reconstruction accuracy.

An example of the masks generated for training.

Using NVIDIA Tesla V100 GPUs and the cuDNN-accelerated PyTorch deep learning framework, the team trained their neural network by applying the generated masks to images from the ImageNet, Places2 and CelebA-HQ datasets.

During the training phase, holes or missing parts are introduced into complete training images from the above datasets, to enable the network to learn to reconstruct the missing pixels.

During the testing phase, different holes or missing parts, not applied during training, are introduced into the test images in the dataset, to perform unbiased validation of reconstruction accuracy.

The researchers said existing deep learning based image inpainting methods suffer because the outputs for missing pixels necessarily depend on the value of the input that must be supplied to the neural network for those missing pixels. This leads to artifacts such as color discrepancy and blurriness in the images. To fix this problem, the NVIDIA team developed a method that guarantees the output for missing pixels does not depend on the input value supplied for those pixels. This method uses a “partial convolution” layer that renormalizes each output depending on the validity of its corresponding receptive field. This renormalization ensures that the value of the output is independent of the values of the missing pixels in each receptive field. The model is built from a UNet architecture implemented with these partial convolutions. A set of loss functions, matching feature losses with a VGG model, as well as style losses, were used to train the model to produce realistic outputs.

Because of this, the model outperforms previous methods, the team said.

“To the best of our knowledge, we are the first to demonstrate the efficacy of deep learning image inpainting models on irregularly shaped holes,” the NVIDIA researchers mentioned.

The researchers also referenced in the paper that they can apply the same framework to handle image super-resolution tasks.

Join NVIDIA at ICLR 2018 at the Vancouver Convention Center, April 30 – May 3, 2018, to learn more about research at NVIDIA and how the NVIDIA deep learning ecosystem is equipping researchers and developers to advance deep learning and artificial intelligence.