This blog post was originally published at videantis' website. It is reprinted here with the permission of videantis.

Since AlexNet in 2012, deep learning (DL) has taken the world of image processing by storm. Working on vision applications for automotive, smartphones, data centers, augmented reality, or using image processing in any shape or form? Then you’re probably either already using deep learning techniques or looking to adopt them. Since deep learning consumes very high compute resources, typically several TOPS, many SOC architects are adding specific deep learning accelerators to their designs to provide the required computational power. But when you’re looking to add smart camera sensing capabilities to your device, just adding a deep learning accelerator isn’t really enough. You also need a vision processor that efficiently runs image processing and classical computer vision (CV) algorithms. Let’s look at some reasons why.

1: Feed the beast

Deep learning algorithms are often trained on still images at specific resolutions and in particular formats, like RGB or monochrome. But where do the images come from? Usually these images come from a camera, over a network, or from storage, in any one of several different formats, at different resolutions, possibly compressed, and often distorted. So first you need to preprocess these images before you feed them to the DL accelerator. Some concrete examples: Automotive cameras often have wide angle lenses, which means you need to undistort or dewarp into a rectangular image first before handing it to the accelerator. In data centers, images and video are compressed to save storage space. Before analyzing the image with the DL engine, you’ll need to decompress it. Today’s cameras capture multi-megapixel images, but most DL nets run at a much lower resolution. The popular YOLO v2 network runs at a maximum configuration of 608×608 pixels, for instance, so you’ll have to crop and scale down the image first. In short, there’s always some preprocessing work to be done before your data is ready for the DL engine.

2: Many algorithms use both DL & CV

We’ve seen many smart image sensing implementations that use both deep learning and classical computer vision techniques. Consider a traffic sign detection system in a vehicle. The system’s first task is to detect the traffic sign; the second step is to recognize the sign. Some DL nets can run both of these tasks in a single pass, but because the required resolutions are too high for them, people first run the image through simple detector programs and then feed only those portions of the captured image that contain a sign into the DL-based classifier. Then there’s the fact that many signs also include text. In that case, you need to run a text detection and character recognition algorithm in addition to the sign recognition task. These character recognition algorithms are often still based on non-DL techniques. This is just one example of the many we’ve seen in real world vision applications where DL is combined with CV. Of course, DL engines can’t run CV algorithms, which means you need a vision processor to do the job.

We’ve seen many smart image sensing implementations that use both deep learning and classical computer vision techniques. Consider a traffic sign detection system in a vehicle. The system’s first task is to detect the traffic sign; the second step is to recognize the sign. Some DL nets can run both of these tasks in a single pass, but because the required resolutions are too high for them, people first run the image through simple detector programs and then feed only those portions of the captured image that contain a sign into the DL-based classifier. Then there’s the fact that many signs also include text. In that case, you need to run a text detection and character recognition algorithm in addition to the sign recognition task. These character recognition algorithms are often still based on non-DL techniques. This is just one example of the many we’ve seen in real world vision applications where DL is combined with CV. Of course, DL engines can’t run CV algorithms, which means you need a vision processor to do the job.

3: Power and cost constraints

Deep learning requires a lot of compute power and bandwidth to pull all the coefficient and intermediate data through. For some object detection tasks, more classical computer vision approaches work fine. Many smartphones use Haar/Adaboost for face detection, for instance. And many automotive camera applications use HOG/SVM for pedestrian detection. These methods still require quite a bit of compute power, but not nearly as much as DL, and nowhere near DL’s bandwidth or coefficient storage space. So when classical CV works well enough for your needs, it’s a much better idea to run these detection tasks on a vision processor that’s much smaller and lower power than a typical DL accelerator.

4: Video versus still images

Another example where DL and CV techniques are used together is in realtime applications where images from a camera are coming in at 60 fps. Most DL nets take single-frame data as input. That means you need some kind of CV-based object tracking, such as optical flow, to understand how objects in the scene are moving. New research is creating nets that take multiple frames of image data as input, but these nets require at least an order of magnitude more compute power than nets that run on a single image at a time. In short, most realtime sensing applications use DL to detect and recognize the objects, then use CV to understand how they move and where they are.

5: No need to use AI when math works

Many smart sensing algorithms rely on math instead of neural nets that need to be trained. For instance, in many cases you need to know the exact values of the camera’s extrinsic parameters, which define its location and orientation in 3D space. Before you can understand where the objects around you are, you have to know where the camera is pointing. One technique for this is SLAM, which stands for simultaneous localization and mapping; a second is Structure from Motion, which is very similar. Techniques like these use a mathematical approach that detects and tracks points in the field of view and uses their motion in the 2D image to compute the camera’s location and orientation, then reconstruct a 3D point cloud of the environment. This is one example of a problem that can be efficiently solved using predictable, well-understood mathematical techniques that consume fewer compute resources than neural nets.

Many smart sensing algorithms rely on math instead of neural nets that need to be trained. For instance, in many cases you need to know the exact values of the camera’s extrinsic parameters, which define its location and orientation in 3D space. Before you can understand where the objects around you are, you have to know where the camera is pointing. One technique for this is SLAM, which stands for simultaneous localization and mapping; a second is Structure from Motion, which is very similar. Techniques like these use a mathematical approach that detects and tracks points in the field of view and uses their motion in the 2D image to compute the camera’s location and orientation, then reconstruct a 3D point cloud of the environment. This is one example of a problem that can be efficiently solved using predictable, well-understood mathematical techniques that consume fewer compute resources than neural nets.

6: Flexibility

Though most DL engines have some ability to support different DL network architectures, their implementation is primarily hard-wired logic. When a new neural net comes along that uses slightly different building blocks inside, a hard-wired engine likely can’t support it. And progress on neural net designs is rapid—since AlexNet kicked off deep learning in 2012, dozens of new architectures have been developed. A programmable approach is the key to being future proof: run your DL nets in software on a vision processor with special architectural features to accelerate the nets, and your SOC will be able to run these next-generation nets for the foreseeable future.

In summary

For all of the designs and applications we’ve seen that require deep learning, there’s always several computer vision and imaging tasks that need to run on another processor. A host CPU can take on some of this responsibility, but since it’s built for very different workloads, it’ll quickly get overloaded and eat too much power. CPUs are soon brought to their knees when they need to run compression, imaging, or computer vision tasks.

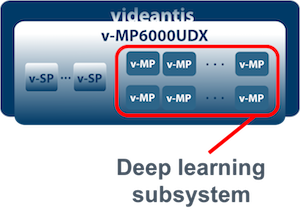

The videantis v-MP6000UDX architecture runs all of the above tasks on a single software programmable and scalable processor architecture that provides excellent performance and power efficiency that rivals hard-wired implementations. There are several advantages to running all the deep learning, imaging, compression, and computer vision tasks on a single unified architecture. Hardware integration is simpler since there’s only a single vendor’s subsystem that needs to be integrated. Software development is easier since there’s a single software development tool flow coming from a single vendor. Running all the tasks on a single architecture also reduces data moves between different processors, a key advantage since bandwidth is always limited and you don’t want your engines to be starved for data.

The videantis v-MP6000UDX architecture runs all of the above tasks on a single software programmable and scalable processor architecture that provides excellent performance and power efficiency that rivals hard-wired implementations. There are several advantages to running all the deep learning, imaging, compression, and computer vision tasks on a single unified architecture. Hardware integration is simpler since there’s only a single vendor’s subsystem that needs to be integrated. Software development is easier since there’s a single software development tool flow coming from a single vendor. Running all the tasks on a single architecture also reduces data moves between different processors, a key advantage since bandwidth is always limited and you don’t want your engines to be starved for data.

So if you’re looking to add deep learning capabilities to your next design, don’t forget that there are many additional vision and imaging tasks that need to run on another processor. Even better, consider running them all on the same unified platform. Feel free to contact us to discuss further.

Marco Jacobs

Vice President of Marketing, videantis