
Dear Colleague,

Are you at an early-stage startup company developing a new product or service that incorporates or enables computer vision or visual AI? Do you want to raise awareness of your company and its products among industry experts, investors and entrepreneurs? The 4th annual Vision Tank competition offers startup companies the opportunity to present their new products and product ideas to attendees at the 2019 Embedded Vision Summit, the preeminent conference on practical computer vision, covering applications at the edge and in the cloud. The Vision Tank is a unique startup competition, judged by accomplished vision industry investors and entrepreneurs. The deadline to enter is January 31, 2019. Finalists will receive a free two-day Embedded Vision Summit registration package, and the final round of competition takes place during the Summit. In addition to other prizes, the Judge's Choice and Audience Choice winners will each receive a free one-year membership in the Embedded Vision Alliance, providing unique access to the embedded vision industry ecosystem. For more information, including detailed instructions and an online submission form, please see the event page on the Alliance website. Good luck!

The Embedded Vision Summit attracts a global audience of more than one thousand product creators, entrepreneurs and business decision-makers who are creating and using computer vision technology. The Summit has grown rapidly over the last few years, and 97% of 2018 Summit attendees reported that they’d recommend the event to a colleague. The next Summit will take place May 20-23, 2019 in Santa Clara, California, and registration is now open. The Summit is the place to learn about the latest applications, techniques, technologies, and opportunities in computer vision and deep learning. In 2019, the event will feature new, deeper and more technical sessions, with more than 90 expert presenters in 4 conference tracks and more than 100 demonstrations in the Technology Showcase. Register today using promotion code SUPEREBNL19 to save 25% at our limited-time Super Early Bird Discount rate. Alliance Member companies should also note that entries for this year's Vision Product of the Year awards, to be presented at the Summit, are now being accepted. If you're interested in having your company become a Member of the Embedded Vision Alliance, see the Alliance website for more information!

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


Approaches for Energy Efficient Implementation of Deep Neural Networks

DNNs (deep neural networks) are proving very effective for a variety of challenging machine perception tasks, but they are also very computationally demanding. To use DNNs in practical applications, it’s critical to find efficient ways to implement them. In this talk, Vivienne Sze, Associate Professor at MIT, explores how DNNs are being mapped onto today’s processor architectures, and how these algorithms are evolving to enable improved efficiency. Professor Sze compares the energy consumption of commonly used CNNs (convolutional neural networks) with their accuracy, and provides insights on "energy-aware" pruning of these networks. For more information on this topic, see the on-demand archive of Professor Sze's webinar, "Efficient Processing for Deep Learning: Challenges and Opportunities," delivered in partnership with the Embedded Vision Alliance.
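The idea of weighing energy, not just operation count, when deciding where to prune can be made concrete with a rough per-layer energy model. The sketch below is a simplified illustration and not the methodology from the talk: the energy constants `E_MAC` and `E_DRAM_WORD`, the `Layer` type, and the layer sizes are all hypothetical, and the model assumes every weight is fetched once from off-chip memory per inference. Ranking layers by estimated energy, rather than by MAC count alone, suggests which layers a pruning pass might target first.

```python
from dataclasses import dataclass

# Hypothetical relative energy costs (arbitrary units). Real values depend on
# the process node and memory hierarchy; these are assumptions for illustration.
E_MAC = 1.0          # energy per multiply-accumulate
E_DRAM_WORD = 200.0  # energy per weight word fetched from off-chip memory


@dataclass
class Layer:
    name: str
    macs: int     # multiply-accumulates per inference
    weights: int  # number of nonzero weights (each assumed fetched once)


def layer_energy(layer: Layer) -> float:
    """Crude estimate: arithmetic cost plus one off-chip fetch per weight."""
    return layer.macs * E_MAC + layer.weights * E_DRAM_WORD


def pruning_order(layers: list[Layer]) -> list[str]:
    """Layer names sorted from most to least estimated energy."""
    return [l.name for l in sorted(layers, key=layer_energy, reverse=True)]


if __name__ == "__main__":
    # Made-up layer sizes, loosely shaped like a small image classifier.
    net = [
        Layer("conv1", macs=105_000_000, weights=35_000),
        Layer("conv2", macs=448_000_000, weights=307_000),
        Layer("fc6", macs=38_000_000, weights=38_000_000),
    ]
    for l in net:
        print(f"{l.name}: {layer_energy(l):.3e}")
    print(pruning_order(net))
```

With these made-up numbers, the fully connected layer `fc6` ranks first despite having the fewest MACs, because its weight fetches dominate the estimate.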

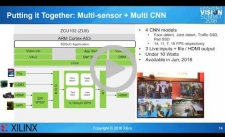

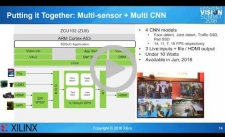

Achieving 15 TOPS/s Equivalent Performance in Less Than 10 W Using Neural Network Pruning on Xilinx Zynq

Machine learning algorithms such as convolutional neural networks are fast becoming a critical part of image perception in embedded vision applications in the automotive, drone, surveillance and industrial vision markets. Applications include multi-object detection, semantic segmentation and image classification. However, when these networks are scaled to modern image resolutions such as HD and 4K, the computational requirements for real-time systems can easily exceed 10 TOPS, consuming hundreds of watts of power, which is unacceptable for most edge applications. In this talk, Nick Ni, Director of Product Marketing for AI and Edge Computing at Xilinx, describes a network/weight pruning methodology that achieves a performance gain of more than 10x on Zynq UltraScale+ SoCs with very little accuracy loss. Network inference running on Zynq UltraScale+ achieved performance equivalent to 20 TOPS on the original SSD (Single Shot MultiBox Detector) network, while consuming less than 10 W.
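Magnitude-based weight pruning, which zeroes the weights with the smallest absolute values, is a common baseline for the kind of pruning described above. The Xilinx methodology in the talk may differ, for example by removing whole channels so that the savings map onto dense hardware. The sketch below assumes a flat list of weights and a hypothetical target `sparsity`. It also shows the arithmetic behind an "equivalent TOPS" figure: if pruning cuts the operations per frame by a factor k, hardware delivering X TOPS processes frames as fast as a device delivering kX TOPS would on the unpruned network.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def equivalent_tops(actual_tops: float, ops_original: float, ops_pruned: float) -> float:
    """Throughput the unpruned network would need to match the pruned one's frame rate."""
    return actual_tops * ops_original / ops_pruned


if __name__ == "__main__":
    w = [0.5, -0.05, 0.2, -0.8]
    print(magnitude_prune(w, 0.5))
    # Hypothetical numbers: 2 TOPS device, network pruned to 1/10 of its ops.
    print(equivalent_tops(2.0, 10.0, 1.0))
```

For example, `equivalent_tops(2.0, 10.0, 1.0)` returns `20.0`: a hypothetical device sustaining 2 TOPS on a network pruned to one tenth of its original operations matches the frame rate of a 20 TOPS device running the original network. Unstructured zeros like these only save time on hardware or kernels that actually skip them.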


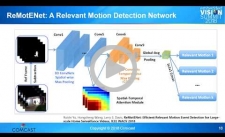

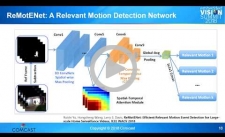

Architecting a Smart Home Monitoring System with Millions of Cameras

Video monitoring is a critical capability for the smart home. With millions of cameras streaming to the cloud, efficient and scalable video analytics becomes essential. To create a smart home monitoring system that appeals to consumers, Comcast has architected and implemented a cost-effective, scalable video analytics system that detects events of interest and delivers them to consumers. To enable this, the company developed a hybrid edge-cloud computing solution, along with efficient deep learning-based algorithms for detecting events of interest. In this talk, Hongcheng Wang, Senior Manager of Technical R&D at Comcast, explores the trade-offs that informed Comcast's decisions in designing, implementing and deploying this system for millions of Xfinity Home customers.
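One common way to keep cloud costs down in a hybrid edge-cloud design is to run a cheap filter on the camera or gateway and forward only frames likely to contain activity to heavier deep learning models in the cloud. The sketch below uses simple frame differencing as that edge-side filter; it is an illustrative assumption, not a description of Comcast's system, and the thresholds `pixel_thresh` and `area_thresh` are hypothetical values that would need tuning.

```python
Frame = list[list[int]]  # grayscale pixel intensities, 0-255


def changed_fraction(prev: Frame, cur: Frame, pixel_thresh: int = 25) -> float:
    """Fraction of pixels whose intensity changed by more than pixel_thresh."""
    total = changed = 0
    for row_p, row_c in zip(prev, cur):
        for p, c in zip(row_p, row_c):
            total += 1
            if abs(c - p) > pixel_thresh:
                changed += 1
    return changed / total if total else 0.0


def select_for_cloud(frames: list[Frame], area_thresh: float = 0.02) -> list[int]:
    """Indices of frames the edge device would forward for cloud-side analysis."""
    return [
        i
        for i in range(1, len(frames))
        if changed_fraction(frames[i - 1], frames[i]) > area_thresh
    ]


if __name__ == "__main__":
    still = [[0] * 4 for _ in range(4)]
    moved = [[255] * 4] + [row[:] for row in still[1:]]
    print(select_for_cloud([still, still, moved]))
```

In a deployment, the selected indices would drive clip uploads. The design choice is to spend a few integer comparisons per pixel at the edge so that the cloud runs expensive inference on only a small fraction of the video.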

Developing Computer Vision Algorithms for Networked Cameras

Video analytics is a key capability of networked cameras. Computer vision capabilities such as pedestrian detection, face detection and recognition, and object detection and tracking are necessary for effective video analysis. With recent advances in deep learning, many developers now use it to implement these capabilities. However, developing a deep learning algorithm requires more than just training models in Caffe or TensorFlow. Development should start with an understanding of the use cases, which shape the required training dataset, and should be tightly coupled with the hardware platform to get the best performance. In this presentation, Dukhwan Kim, computer vision software architect at Intel, explains how his company developed and optimized production-quality video analytics algorithms for computer vision applications.


SPIE Photonics West: February 5-7, 2019, San Francisco, California

Embedded World: February 26-28, 2019, Nuremberg, Germany

Embedded Vision Summit: May 20-23, 2019, Santa Clara, California

