This blog post was originally published at NVIDIA's website. It is reprinted here with the permission of NVIDIA.

Detailing the building blocks of autonomous driving, the new NVIDIA DRIVE Labs video series provides an inside look at DRIVE software.

Editor’s note: No one developer or company has yet succeeded in creating a fully autonomous vehicle. But we’re getting closer. With this new DRIVE Labs blog series, we’ll take an engineering-focused look at each individual open challenge — from perceiving paths to handling intersections — and how the NVIDIA DRIVE AV Software team is mastering it to create safe and robust self-driving software.

MISSION: Building Path Perception Confidence via Diversity and Redundancy

APPROACH: Path Perception Ensemble

It is critical to have confidence in a self-driving car’s ability to use data to perceive and choose the correct drivable path while it is driving. We call this path perception confidence.

For Level 2+ systems, such as the NVIDIA DRIVE AP2X platform, evaluating path perception confidence in real time translates to knowing when autonomous operation is safe and when control should be transitioned to the human driver.

To put our path perception confidence to the test, we set out to complete a fully autonomous drive around a 50-mile-long highway loop in Silicon Valley with zero disengagements. This meant handling highway interchanges and lane changes, avoiding unintended exits, and staying in lanes even under high road curvature or with limited lane markings. All these maneuvers and more had to be performed in a way that was smooth and comfortable for the car’s human occupants.

The key challenge was in the real-time nature of the test. In offline testing — such as analysis of pre-recorded footage — a path perception signal can always be compared to a perfect reference. However, when the signal runs live in the car, we don’t have the benefit of live ground-truth data.

Consequently, in a live test, if the car drives on just one path perception signal, there’s no way to judge in real time whether that signal is correct, and so no basis for confidence in it. Moreover, if the sole path perception input fails, the autonomous driving functionality might disengage. Even if it doesn’t disengage, the result could be reduced comfort and smoothness of the executed maneuver.

From Individual Networks to an Ensemble

To build real-time confidence, we introduced diversity and redundancy into our path perception software.

We did this by combining several different path perception signals, including the outputs of three different deep neural networks and, as an option, a high-definition map. The fact that the signals are all different brings diversity; the fact that they all do the same thing — perceive drivable paths — creates redundancy.

The path perception signals produced by the various DNNs are largely independent. That’s because the DNNs are all different in terms of training data, encoding, model architecture and training outputs.

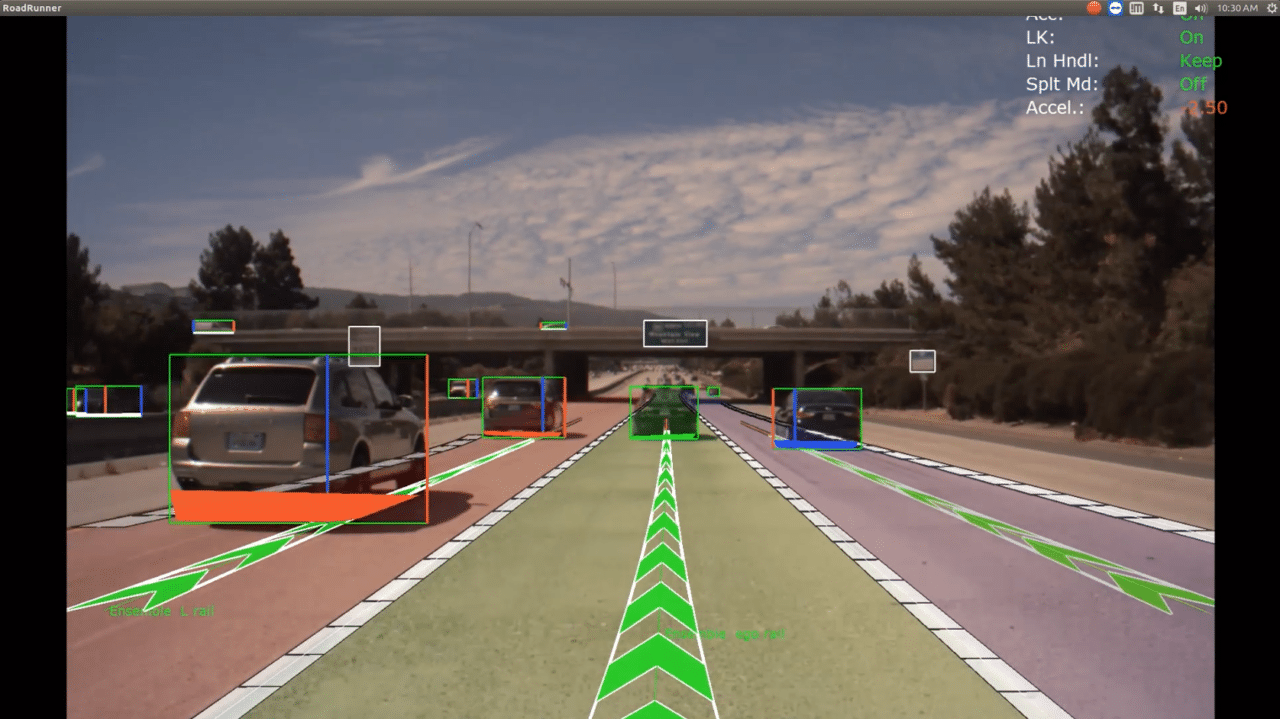

Example of a high-confidence path perception ensemble result for left, ego vehicle, and right lane center paths. High-confidence result visualized by thick green center path lines. The solid white lines denote lane line predictions and are also computed by the ensemble.

For example, our LaneNet DNN is trained to predict lane lines, while our PathNet DNN is trained to predict edges that define drivable paths regardless of the presence or absence of lane lines. And our PilotNet DNN is trained to predict driving center paths based on trajectories driven by human drivers.

We combined the different path perception outputs using an ensemble technique, a machine learning method that combines several base models into a single predictive model that is typically more robust than any one of them alone.
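As a rough illustration of the ensemble idea, the sketch below fuses several center path estimates by taking a pointwise median. The path representation (lateral offsets in meters sampled at the same distances ahead of the car), the function name `fuse_paths`, the source labels, and the choice of a median are all assumptions made for this example; the post does not describe how the DRIVE software actually combines its signals.

```python
from statistics import median

def fuse_paths(paths):
    """Fuse several center path estimates into one by a pointwise median.

    paths: dict mapping a (hypothetical) source label to a list of lateral
    offsets in meters, all sampled at the same distances ahead of the car.
    """
    # zip(*...) groups the offsets that belong to the same sample point.
    samples = zip(*paths.values())
    # A median tolerates one outlying source better than a mean would.
    return [median(s) for s in samples]

# Made-up example values, not measurements.
paths = {
    "lane_lines": [0.00, 0.10, 0.20, 0.30],
    "path_edges": [0.00, 0.12, 0.22, 0.28],
    "human_trajectory": [0.00, 0.50, 1.00, 1.50],  # deliberately off
}
fused = fuse_paths(paths)
```

In this toy case the outlying third estimate does not drag the fused path away from the two estimates that agree, which is the kind of robustness an ensemble is meant to provide.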

Through agreement/disagreement analysis of the different path perception signals, we built and measured path perception confidence live in the car, and obtained a higher quality overall result.

This analysis is demonstrated in our visualization. When the signal components strongly agree, the thick line denoting center path prediction for a given lane is green; when they disagree, it turns red.
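One simple way to picture an agreement/disagreement check is to measure how far apart the individual path estimates are and map that spread to the green/red display state. The sketch below does this under stated assumptions: paths are lists of lateral offsets in meters at shared sample points, and the function name `path_agreement` and the 0.3 m tolerance are hypothetical choices for illustration, not values or logic taken from the DRIVE software.

```python
def path_agreement(paths, tolerance_m=0.3):
    """Return (max_spread_m, color) for a set of center path estimates.

    paths: list of equal-length lists of lateral offsets in meters.
    tolerance_m: hypothetical agreement threshold, not a value from the post.
    """
    # Spread at each sample point: widest gap between any two estimates.
    spreads = [max(s) - min(s) for s in zip(*paths)]
    max_spread = max(spreads)
    # Strong agreement is shown in green, disagreement in red.
    color = "green" if max_spread <= tolerance_m else "red"
    return max_spread, color

# Made-up example values, not measurements.
agree = path_agreement([[0.0, 0.10, 0.20], [0.0, 0.12, 0.22], [0.0, 0.08, 0.25]])
disagree = path_agreement([[0.0, 0.10, 0.20], [0.0, 0.12, 0.22], [0.0, 0.60, 1.20]])
```

The key property is that the check needs no ground truth: it relies only on comparing the live signals with one another, which is what makes it usable in the car.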

Because our approach is based on diversity, a system-level failure is statistically less likely, which is tremendously beneficial from a safety perspective.
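The statistical intuition can be made concrete with a simplified calculation. Assume, as an idealization, that the $n$ path perception signals fail fully independently and that signal $i$ fails with probability $p_i$ at a given moment. The post describes the signals only as largely independent, so real-world figures would be less favorable than this bound, and the numbers below are purely illustrative rather than measured failure rates.

$$
P(\text{all } n \text{ signals fail together}) = \prod_{i=1}^{n} p_i
$$

For example, with $n = 3$ and a hypothetical $p_i = 10^{-2}$ for each signal, the probability that all three fail at once is $10^{-2} \times 10^{-2} \times 10^{-2} = 10^{-6}$, far smaller than the failure probability of any single signal.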

DRIVE with Confidence

The path perception confidence we built using diversity and redundancy enabled us to evaluate all potential paths, including center path and lane line predictions for ego/left/right lanes, lane changes/splits/merges, and obstacle-to-lane assignment.

During the drive, multiple path perception DNNs were running in-car alongside obstacle perception and tracking functionality. The need to run these tasks simultaneously underscores the practical importance of high-performance computing in autonomous vehicle safety.

This software functionality — termed the path perception ensemble — will be shipping in the NVIDIA DRIVE Software 9.0 release. Learn more at https://developer.nvidia.com/drive/drive-perception.

Neda Cvijetic

Senior Manager, Autonomous Vehicles, NVIDIA