
Dear Colleague,

On Tuesday, July 9, Embedded Vision Alliance founder (and BDTI co-founder and President) Jeff Bier will be a speaker at the 2019 AI Design Forum, taking place in San Francisco, California. This year’s theme is “The Future of Computing – from Materials to Systems,” and the event brings together leaders from throughout the industry ecosystem – from materials to systems – who will share their vision of the innovations that will propel the industry into the next wave of growth. Other speakers (nearly all from Alliance member companies) include:

- Aart de Geus, PhD, Chairman and co-Chief Executive Officer, Synopsys
- Lisa Su, PhD, President and CEO, AMD
- Victor Peng, President and CEO, Xilinx
- Gary Dickerson, President and CEO, Applied Materials (event sponsor)
- Cliff Young, PhD, Engineer, Google
- Renée St. Amant, PhD, Research Engineer in Emerging Technologies and US Innovator of the Year, Arm
- PR (Chidi) Chidambaram, PhD, VP of Process Technology and Foundry Engineering, Qualcomm

Registration for the AI Design Forum also includes a SEMICON West and ES Design West Expo Pass. For more information and to register, please see the event page.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


AI Is Moving to the Edge—What’s the Impact on the Semiconductor Industry?

Artificial intelligence is proliferating into numerous edge applications and disrupting many industries. Clearly, this represents a huge opportunity for technology suppliers, but it can be difficult to discern exactly what form this opportunity will take. For example, will edge devices perform AI computation locally, or in the cloud? Will edge devices use separate chips for AI, or will AI processing engines be incorporated into the main processor SoCs already used in these devices? In this talk, Yohann Tschudi, Technology and Market Analyst at Yole Développement, answers these questions by presenting and explaining his firm’s market data and forecasts for AI processors in mobile phones, drones, smart home devices and personal robots. He explains why there is a strong trend towards executing AI computation at the edge, and quantifies the opportunity for separate processor chips and on-chip accelerators that address visual and audio AI tasks.

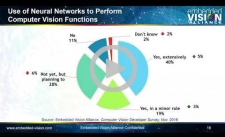

Computer Vision Developer Survey

In this presentation from the Embedded Vision Alliance’s March 2019 Silicon Valley Meetup, Alliance founder Jeff Bier shares findings from the Alliance’s most recent survey of developers about the software, processors and tools they use to build systems and applications using computer vision and visual AI.


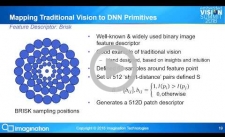

Improving and Implementing Traditional Computer Vision Algorithms Using Deep Neural Network Techniques

The computer vision industry has shifted significantly over the past few years, from traditional vision algorithms to deep neural network (DNN) algorithms. Many companies with experience and investment in classical vision algorithms want to use DNNs without discarding their existing investments. For these companies, can classical vision algorithms provide insights and techniques to assist in the development of DNN-based approaches? In this talk from the 2018 Embedded Vision Summit, Paul Brasnett, Senior Research Manager for Vision and AI in the PowerVR Division at Imagination Technologies, looks at the similarities between classical and deep vision. He also looks at how a classical vision algorithm can be expressed and adapted to become a trainable DNN. This strategy can provide a low-risk path for developers transitioning from traditional vision algorithms to DNN-based approaches.

Hybrid Semi-Parallel Deep Neural Networks – Example Methodologies & Use Cases

Deep neural networks (DNNs) are typically trained on specific datasets and optimized for particular discriminating capabilities. Often, several different DNN topologies are developed to solve closely related aspects of a computer vision problem. But using these topologies together, to leverage their individual discriminating capabilities, requires implementing each DNN separately, which increases the cost of practical solutions. In this talk from the 2018 Embedded Vision Summit, Peter Corcoran, co-founder of FotoNation (now a business unit of Xperi) and lead principal investigator and director of C3Imaging (a research partnership between Xperi and the National University of Ireland, Galway), develops a methodology to merge multiple deep networks using graph contraction. The resultant single network topology achieves a significant reduction in size over the combined individual networks. More significantly, this merged semi-parallel DNN (SPDNN) can be re-trained across the combined datasets used to train the original networks, improving its accuracy over the original networks. The result is a single network that is more generic, but with equivalent – or often enhanced – performance over a wider range of input data. The talk shows several contemporary computer vision problems solved using SPDNNs. These include significantly improving segmentation accuracy of eye-iris regions (a key component of iris biometric authentication) and mapping depth from monocular images, demonstrating equivalent performance to stereo depth mapping.
