This article was originally published by Xnor.ai. It is reprinted here with the permission of Xnor.ai.

Wyze, the provider of affordable smart home technologies, came to us with a problem: can you help reduce the endless, unnecessary notifications for our camera users? At the time, Wyze was sending its users notifications based on motion, which it detected with a pixel-by-pixel comparison across frames. When the camera detected a change between two sequential images, it inferred the occurrence of “motion,” a proxy for something interesting happening in the environment. Admittedly, motion sometimes did indicate an interesting event. But the camera was also picking up every bug, shadow, or leaf blowing across the driveway, leading to an endless stream of unnecessary notifications.
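
To make the limitation concrete, here is a minimal sketch of motion detection by frame differencing. It is an illustration only, not Wyze's actual implementation: the function names, the grayscale list-of-rows frame format, and both threshold values are assumptions chosen for the example.

```python
from typing import List

# Assumed frame format: a grayscale image as rows of 0-255 pixel intensities.
Frame = List[List[int]]


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Return the fraction of pixels whose intensity changed by more than pixel_threshold."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have identical dimensions")
    total = sum(len(row) for row in curr)
    if total == 0:
        return 0.0
    changed = sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for p, c in zip(row_prev, row_curr)
        if abs(p - c) > pixel_threshold
    )
    return changed / total


def motion_detected(
    prev: Frame,
    curr: Frame,
    pixel_threshold: int = 25,     # hypothetical per-pixel sensitivity
    area_threshold: float = 0.01,  # hypothetical: 1% of the image must change
) -> bool:
    """Flag motion when enough pixels differ between two sequential frames."""
    return changed_fraction(prev, curr, pixel_threshold) >= area_threshold


# An 8x8 static background, then the same scene with one bright "leaf" pixel.
background = [[10] * 8 for _ in range(8)]
leaf = [row[:] for row in background]
leaf[3][4] = 200

print(motion_detected(background, background))  # False: nothing changed
print(motion_detected(background, leaf))        # True: 1 of 64 pixels changed
```

The detector has no notion of what moved, so a leaf and a person look the same to it as long as enough pixels change.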

Person Detection notifications enabled by Xnor.ai

The obvious answer to this problem is to use AI to build a more sophisticated understanding of the visual environment that the camera sees, and then to send notifications only when something really relevant is happening, for example, when a person is detected. This approach exists today on many smart home cameras, but almost all of these solutions run model inference on a GPU in the cloud. These cloud-based solutions are slower, more exposed to privacy compromises, and typically require consumers to cover the cost via a cloud subscription fee.

Wyze was clear from the beginning that they wanted an on-device solution on their $20 camera that did not require them to charge customers an additional cloud-service fee.

Solving this problem was a perfect fit for Xnor. We work with a variety of edge IoT hardware, and our inference engine is designed, optimized, and built exclusively for IoT-class processors. As an engineering team, we work diligently to ensure inference is fast and memory efficient, even on the most resource-constrained devices. Here is an overview of the process we undertook with Wyze.

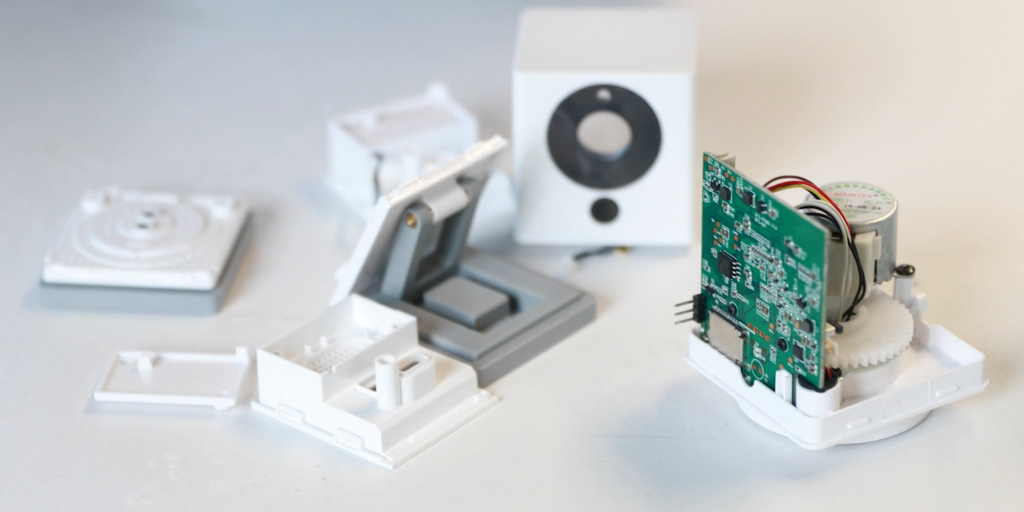

A hardware teardown of the Ingenic T20, the platform that powers the Wyze Cam

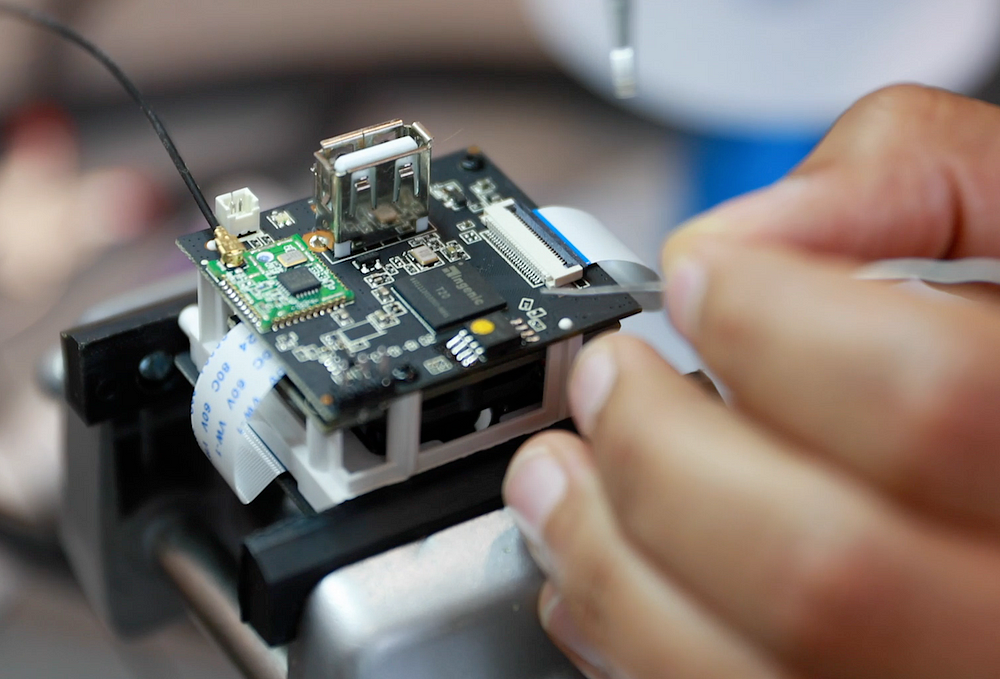

Step 1: Understanding the hardware powering the Wyze Cam

We were delighted to discover that the Wyze Cam v2 is built on a MIPS-based processor, and we quickly found a way to simulate the Wyze Cam v2 system in a Linux environment, which enabled us to easily port our Xnorized models to the Wyze platform.

Step 2: On-device performance optimization

Getting DNNs to run on CPUs is especially difficult considering that other processes, such as image capture, image drawing, and video encoding, are also competing for precious CPU cycles. Xnor engineers who specialize in high-performance computing for edge devices hand-tuned and optimized several computational building blocks using our Xnorized Performance Optimization Toolkit. This resulted in a solution that exceeded our internal performance targets and plays a significant role in the responsiveness of the solution.

Step 3: Training data

Wyze requested a person detector as a starting model. To begin, we needed to augment our core library of Xnor person detection data with Wyze user video data in order to customize the model for Wyze’s use case. Fortunately, Wyze has a highly engaged group of beta testers who were so motivated to get this feature that they opted in to share their videos. It was important that we got a diverse set of videos: indoor and outdoor scenes, day and night, different viewpoints and lighting conditions, and people of different ages, genders, and races, so that we could train the model to robustly recognize a person in a variety of situations.

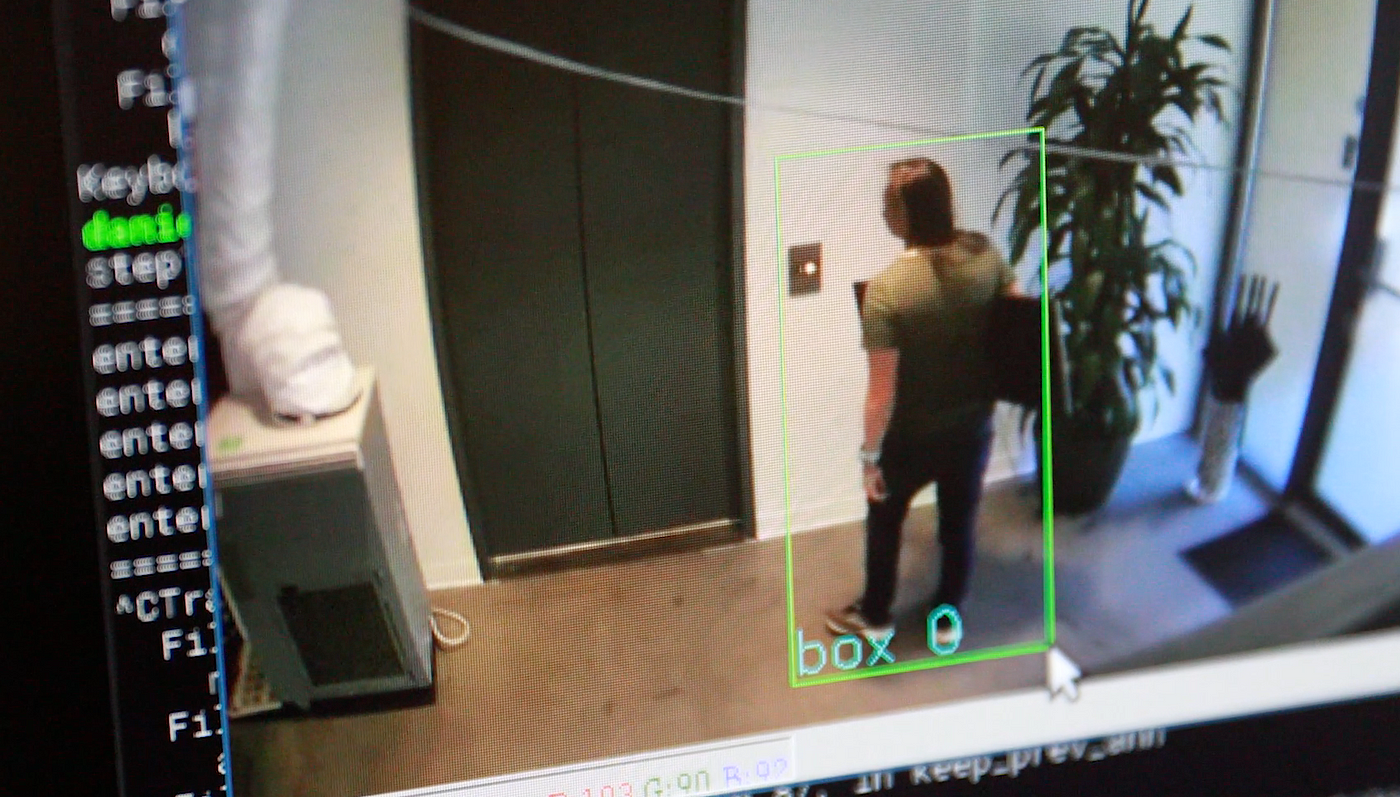

Step 4: Annotating the training data

In machine learning, annotation is where you label the video or image with the meaningful components or features that you want the model to learn. In this case, since we were training a person classification model, we annotated videos that contained a person and also marked videos that did not, so the model could learn from both positive and negative examples. Our team applied the label “person” even if we saw only a hand, a foot, part of a face, or any other body part (complete or incomplete) that suggested a person was in the frame. Xnor has a highly robust data privacy and security policy, which requires all annotation of user videos to be handled in-house on encrypted machines.

Example of annotation data taken from our office Wyze Cam

Step 5: Training the model

Model training is the process of teaching the model by example, using both positive examples (images of people) and negative examples (images of other objects). Once we had a significant amount of labeled user video data, we were able to train a model using our Xnor Proprietary Training Workflow, which is designed to automatically train models optimized to run on resource-constrained devices.

Step 6: Testing and Quality Assurance

To understand the accuracy of the model, we validate performance on a subset of the data that has been purposefully excluded from training. Accuracy on the validation set is a good proxy for how the solution will behave in production. Great validation sets capture the diversity and variability of the sample population, e.g., across various scenarios, viewpoints, and lighting conditions.
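
As an illustration of holding data out of training, the sketch below assigns whole video clips to either the training or the validation set. Splitting by clip (so that near-identical frames from one clip never land on both sides) and the hash-based assignment are assumptions made for this example, not a description of Xnor's workflow. The names `is_validation_clip` and `split_frames` are hypothetical.

```python
import hashlib
from typing import List, Tuple

# Assumed data format: (clip_id, frame_name) pairs.
LabeledFrame = Tuple[str, str]


def is_validation_clip(clip_id: str, validation_percent: int = 20) -> bool:
    """Deterministically assign a clip to validation based on a hash of its ID."""
    digest = hashlib.sha256(clip_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < validation_percent


def split_frames(
    frames: List[LabeledFrame], validation_percent: int = 20
) -> Tuple[List[LabeledFrame], List[LabeledFrame]]:
    """Split frames into (train, validation), keeping each clip on one side only."""
    train: List[LabeledFrame] = []
    validation: List[LabeledFrame] = []
    for clip_id, frame_name in frames:
        target = validation if is_validation_clip(clip_id, validation_percent) else train
        target.append((clip_id, frame_name))
    return train, validation


# Fifty hypothetical clips with three frames each.
frames = [(f"clip{i}", f"frame{j}") for i in range(50) for j in range(3)]
train, validation = split_frames(frames)
print(len(train), len(validation))
```

Because the assignment depends only on the clip ID, a clip keeps its side of the split as new clips are added.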

For Wyze’s model, we created a small validation set drawn from the video clips provided voluntarily by Wyze beta users. For every model that we trained, we evaluated its performance by generating precision-recall (PR) curves. As we learned more about the use case and the content of beta user videos, we improved the performance over time.
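
For readers unfamiliar with PR curves, here is a minimal sketch of how one can be computed from model confidence scores and ground-truth labels. The scores and labels are made-up illustrative values, not Wyze or Xnor data, and the function name is hypothetical.

```python
from typing import List, Tuple


def precision_recall_curve(
    scores: List[float], labels: List[int]
) -> List[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) points, highest threshold first.

    labels use 1 for "person" and 0 for "no person". At each threshold, an
    example counts as a predicted person when its score >= threshold.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    total_positives = sum(labels)
    if total_positives == 0:
        raise ValueError("need at least one positive example")

    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    curve = []
    true_pos = false_pos = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            true_pos += 1
        else:
            false_pos += 1
        # Emit a point only after consuming every example tied at this score.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            precision = true_pos / (true_pos + false_pos)
            recall = true_pos / total_positives
            curve.append((score, precision, recall))
    return curve


# Made-up validation results: model confidence and whether a person was present.
scores = [0.9, 0.8, 0.6, 0.4]
labels = [1, 0, 1, 0]
curve = precision_recall_curve(scores, labels)
for threshold, precision, recall in curve:
    print(f"threshold={threshold:.1f} precision={precision:.2f} recall={recall:.2f}")
```

Lowering the threshold catches more of the people in the data (higher recall) at the cost of more false alarms (lower precision). Plotting these points for each trained model makes it possible to compare models across all thresholds rather than at a single operating point.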

Step 7: What Xnor shipped to Wyze

Once we had a trained model, shipping it to Wyze was very simple. Xnor creates modules, known as Xnor Bundles or XBs, that contain both the model and the inference engine in a single library. This no-fuss, no-hassle approach simplifies the workflow for integrating edge AI algorithms onto arbitrary edge devices.

Working with an XB is very easy. A developer selects a language binding (e.g., C, Python, or Java) and links a prebuilt, optimized library for their edge platform. The developer can then run inference by feeding image data to a stable, well-defined API. Depending on the model, the API can provide a variety of outputs, such as string labels for image classification, bounding boxes for object detection, and segmentation masks for semantic segmentation.

For Wyze, we provided two XBs: one built for the Wyze Cam platform and another built for a traditional x86_64 platform, for rapid prototyping and testing. For every new model we ship to Wyze, all they have to do is overwrite the old XB with the new one. No code changes are necessary.
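
The idea of swapping bundles without code changes can be sketched as application code written against a fixed interface. The example below is purely hypothetical and is not the Xnor SDK: `DetectorBundle`, `Detection`, `StubPersonBundle`, and `should_notify` are names invented for illustration, and the stub stands in for a real bundle so the example is self-contained.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection result: a label, a confidence, and a bounding box."""
    label: str
    score: float
    x: float       # box coordinates normalized to 0..1 (an assumption)
    y: float
    width: float
    height: float


class DetectorBundle(Protocol):
    """Hypothetical stable interface that every bundle is assumed to expose."""

    def detect(self, rgb_bytes: bytes, width: int, height: int) -> List[Detection]:
        ...


class StubPersonBundle:
    """Stand-in for a real bundle; always reports one person for any valid frame."""

    def detect(self, rgb_bytes: bytes, width: int, height: int) -> List[Detection]:
        if len(rgb_bytes) != width * height * 3:
            raise ValueError("expected 3 bytes (R, G, B) per pixel")
        return [Detection("person", 0.92, 0.25, 0.10, 0.30, 0.80)]


def should_notify(
    bundle: DetectorBundle,
    rgb_bytes: bytes,
    width: int,
    height: int,
    min_score: float = 0.5,  # hypothetical confidence cutoff
) -> bool:
    """Application logic depends only on the interface, not on a specific model."""
    detections = bundle.detect(rgb_bytes, width, height)
    return any(d.label == "person" and d.score >= min_score for d in detections)


frame = bytes(4 * 4 * 3)  # a blank 4x4 RGB frame
print(should_notify(StubPersonBundle(), frame, 4, 4))  # True
```

Because `should_notify` only calls `detect`, a newer model that honors the same interface could replace the old one without touching the application code.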

To see the simplicity of our XB approach, you can review the tutorial on AI2GO.

Step 8: Using user feedback to understand where the model fails

While we have robust ways to understand the statistical accuracy of the model (discussed in step 6), for the commercial deployment of any machine learning solution it is critical to see how the model performs in the real world and the extent to which users believe that it is actually solving their problem. Fortunately, Wyze has a highly active beta community whose members were willing not only to share feedback but also to share example videos of where the model failed or succeeded.

Step 9: More training and model refinement

Based on the feedback and additional training videos that we received from Wyze beta users, we were able to repeat steps 4–6 to further refine the performance of the model.

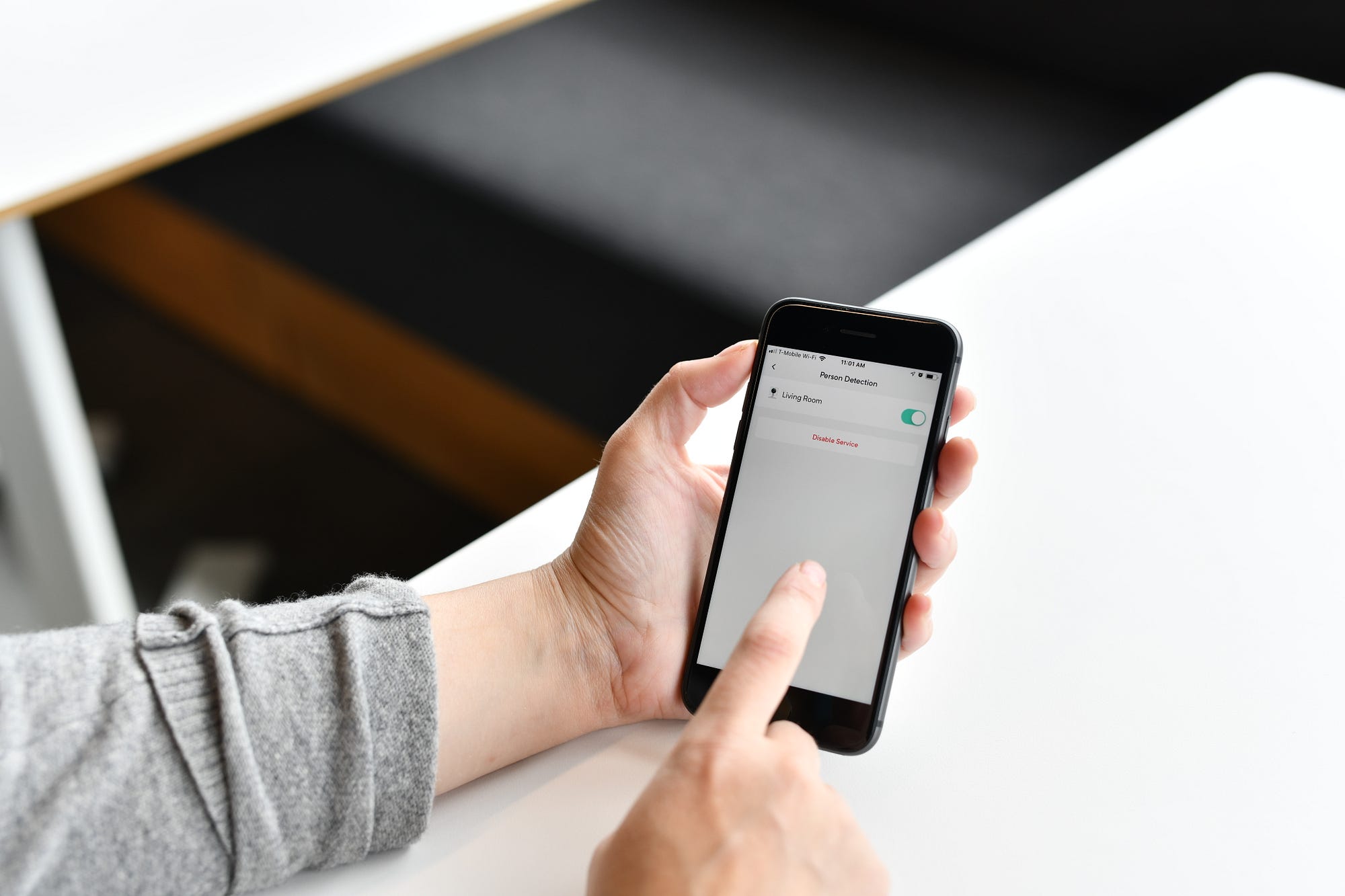

Step 10: Integration of model into an intuitive User Experience

Introducing AI capabilities into a product requires careful thought and consideration. AI is a powerful technology, but it needs to be focused on solving user problems in order to be effective. To deliver a simple implementation that improves user notifications and allows users to organize video clips that contain people, Xnor’s product and design team collaborated closely with Wyze’s team. Together we ensured that a complex technology did not create a complex user experience.

Screen shot from the Person Detection feature on the Wyze App

Big thank you to the Wyze beta community!

As you can see from this post, this solution would not have been possible without the open and highly engaged community of beta users that Wyze has created. As we launch this solution for millions of Wyze users, we will continue to actively monitor the feedback from the beta community and strive to make the model as accurate and useful as possible for you.

Read announcement: here

Learn more at www.xnor.ai

About Xnor.ai

Xnor.ai (Xnor) is helping reshape the way we live, work and play by enabling AI everywhere, on every device. As the company that first proved it was possible to run state-of-the-art AI on resource-constrained compute platforms, today Xnor helps organizations add AI functionality to their products — powering a new generation of smart solutions for existing and new markets. Xnor’s patented edge AI technology has upended traditional AI, resulting in billions of devices and products being AI-optimized — from battery operated cameras, to complex manufacturing machinery, and automobiles. Xnor’s mission is to make AI accessible by freeing it from the cloud, data centers and the limits of internet connectivity and enabling a world where AI is available in billions of products, everywhere for everyone.