This blog post was originally published at Intel's website. It is reprinted here with the permission of Intel.

Interactions with computer systems frequently benefit from the ability to detect information about the user or use environment. Image processing and analysis techniques, including deep neural networks (DNNs), are often used for this purpose. However, many promising visual-processing applications, such as non-contact vital signs estimation and smart home monitoring, can involve private and/or sensitive data, such as biometric information about a person’s health. Thermal imaging, which can provide useful data while also concealing individual identities, is therefore used for many applications.

Several aspects of applying DNNs to thermal images deserve more study. In particular, in research conducted with Dr. Jacek Ruminski of the Gdansk University of Technology and presented in June at the IEEE 12th International Conference on Human System Interaction (HSI 2019), we investigate:

- Can a DNN model trained on RGB image data (in other words, “visible light” image data) be applied to thermal images, without retraining, while maintaining classification accuracy?

- How does resolution degradation of the thermal images via downscaling affect the model’s recognition accuracy?

- Does the application of a Super Resolution (SR) model to enhance the degraded thermal images improve the model’s classification accuracy?

Our paper on this topic, “Influence of Thermal Imagery Resolution on Accuracy of Deep Learning based Face Recognition,” was awarded the honor of best paper in the area of health care and assisted devices at the conference. Our preliminary results indicate that an RGB model can be effective for thermal facial image classification without retraining. We also find that SR can in some cases improve classification accuracy for lower-resolution images.

DNNs for Thermal Images: Why?

The utility of context information about user and use environment has led to various studies [1] applying computer vision algorithms to detect and recognize people, objects, and actions. Issues found with such algorithms have typically pertained to poor lighting conditions or security or privacy concerns.

Issues with lighting have been addressed in a variety of ways, generally at the expense of greater computational overhead. These approaches also work only in certain environments. However, privacy and security issues remain when using visible light images for applications in fields such as medicine or person monitoring for smart environments. Thermal imagery is often used in such systems to increase privacy by obscuring personally identifying details.

At the same time, DNNs have seen increasing use in recent years, gaining popularity due to their human-like competence for tasks like person recognition. Earlier research has applied facial recognition methods known to work on visible light images to thermal images acquired via a variety of methods. Researchers have typically used traditional pipelines that go from feature extraction to classification or recognition.

It is less understood whether DNNs trained on visible light images will generalize well to thermal data without retraining, as thermal data depict smooth changes between facial regions, are lower contrast, and lack high-frequency components. Further, while researchers have studied the influence of image resolution on facial recognition accuracy for visible light images, to the best of our knowledge no such studies exist for thermal data.

Methodology

Datasets. Our experiments used two datasets of thermal images of faces. The first, referred to as SC3000-DB in our study, was created by our research team using a FLIR ThermaCAM* SC3000 camera. It contains 766 images in 40 categories with each category depicting a different volunteer from our cohort of 19 men and 21 women. To capture these images, volunteers were asked to sit and look at the camera for a span of two minutes. The second set used was the IRIS dataset from the Visual Computing and Image Processing Lab (VCIPL) at Oklahoma State University. IRIS consists of 4190 images collected with the assistance of 30 volunteers. IRIS differs from SC3000-DB primarily in that individuals were not focused on the thermal camera, may move their heads, and may use different facial expressions in different images.

Face Detection. As images in our datasets frequently included more features than just the face of the volunteer, our first step was to crop the images to only the region containing the individual’s face. To do so, we retrained an SSD model with an Inception V2 backbone using transfer learning, applying a random search over the hyperparameters to find the best training configuration.

Resolution Degradation and Enhancement. Resolution degradation was simulated by generating downscaled images from both datasets following face detection and cropping. Images were scaled down 2x, 4x, and 8x, resulting in images as small as 13.14 ± 1.47 pixels by 15.57 ± 1.96 pixels. Image enhancement used a Super Resolution convolutional neural network (CNN) of our own design, which was modified to widen its receptive field by introducing residual blocks with shared weights into the feature extraction subnetwork of the CNN SR model. This modification addresses the problem of feature blurring in thermal imagery and the challenge of larger distances between components of interest due to the heat flow in objects.

Part of our research was to verify whether Super Resolution can improve the accuracy of person recognition. Super Resolution was used to create a higher-resolution version of the image without causing the blur effect of enlarged pixels. To simulate a poor-quality image that needs to be super-resolved, we took the original image, downscaled it by a factor X (yielding the image from a simulated low-quality camera), and then upscaled it by the same factor X while applying Super Resolution.
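The downscale-then-upscale simulation described above can be sketched in a few lines. This is a minimal illustration, not the code used in the study: the block-averaging downscaler, the nearest-neighbor upscaler, and the function names are all assumptions, and the nearest-neighbor step merely stands in for the place where the Super Resolution model would run.

```python
def downscale(image, factor):
    """Shrink a 2D grayscale image (list of rows) by averaging factor x factor blocks.

    Block averaging is an assumed choice; the source does not name the
    interpolation method used for downscaling.
    """
    height = len(image) // factor
    width = len(image[0]) // factor
    small = []
    for row in range(height):
        out_row = []
        for col in range(width):
            block = [
                image[row * factor + dy][col * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        small.append(out_row)
    return small


def upscale_nearest(image, factor):
    """Enlarge an image by repeating each pixel factor times in both directions.

    Hypothetical placeholder: in the study, a Super Resolution CNN performs
    the upscaling instead of plain pixel repetition.
    """
    big = []
    for row in image:
        wide_row = [value for value in row for _ in range(factor)]
        big.extend(list(wide_row) for _ in range(factor))
    return big


def simulate_low_quality(image, factor):
    """Downscale by `factor`, then restore the original size."""
    return upscale_nearest(downscale(image, factor), factor)


# A tiny synthetic 4x4 "thermal" image with values 0..15.
original = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
degraded = simulate_low_quality(original, 2)
for row in degraded:
    print(row)
```

The degraded image has the same dimensions as the original but only a quarter of the distinct values, which is the "enlarged pixels" effect that Super Resolution is meant to avoid.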

Facial Feature Extraction. We represented facial features as the vector of embeddings extracted from cropped images using the FaceNet DNN architecture. We used a model trained on VGGFace2 (in other words, a model trained on visible light images) in order to validate whether such a model could be applied to thermal images.

Face Recognition. We tested two methods of comparing facial feature vectors. First, we used Support Vector Machines (SVM) with a linear kernel to identify the person depicted in a given input image. The second approach was based on the Euclidean distance between vector representations from the individual’s database profile and the input image.
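As a rough illustration of the second approach, the sketch below assigns an input embedding to the person whose profile is nearest in Euclidean distance. It rests on several assumptions not stated in the text: each profile is taken to be the mean of that person's reference embeddings, the 3-dimensional vectors are toy values (real FaceNet embeddings are much longer), and the names `build_profiles` and `identify` are hypothetical.

```python
import math


def mean_embedding(embeddings):
    """Average a list of equal-length vectors component by component."""
    count = len(embeddings)
    return [sum(values) / count for values in zip(*embeddings)]


def build_profiles(reference):
    """Map each person to one profile vector.

    Assumption: a profile is the mean of the person's reference embeddings;
    the source does not say how profiles are aggregated.
    """
    return {person: mean_embedding(vectors) for person, vectors in reference.items()}


def identify(embedding, profiles):
    """Return the person whose profile is closest in Euclidean distance."""
    return min(profiles, key=lambda person: math.dist(embedding, profiles[person]))


# Toy reference embeddings for two hypothetical volunteers.
reference = {
    "volunteer_a": [[0.0, 0.1, 0.9], [0.1, 0.0, 1.0]],
    "volunteer_b": [[0.9, 0.8, 0.1], [1.0, 0.9, 0.0]],
}
profiles = build_profiles(reference)
print(identify([0.05, 0.1, 0.8], profiles))  # prints "volunteer_a"
```

A nearest-profile rule like this needs no training step, whereas the SVM approach fits a classifier on the reference embeddings first.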

Findings

We found that a FaceNet model trained on visible light image data generalizes well to thermal images, demonstrating the feasibility of applying such DNNs to thermal image recognition tasks. The model was able to extract facial embeddings and distinguish the volunteers in our two datasets with accuracies of 99.5% for SC3000-DB and 82.14% for IRIS.

Table 1 – Accuracy [%] of person recognition on test set images (80% of all images); the reference embedding was generated with 20% of images.

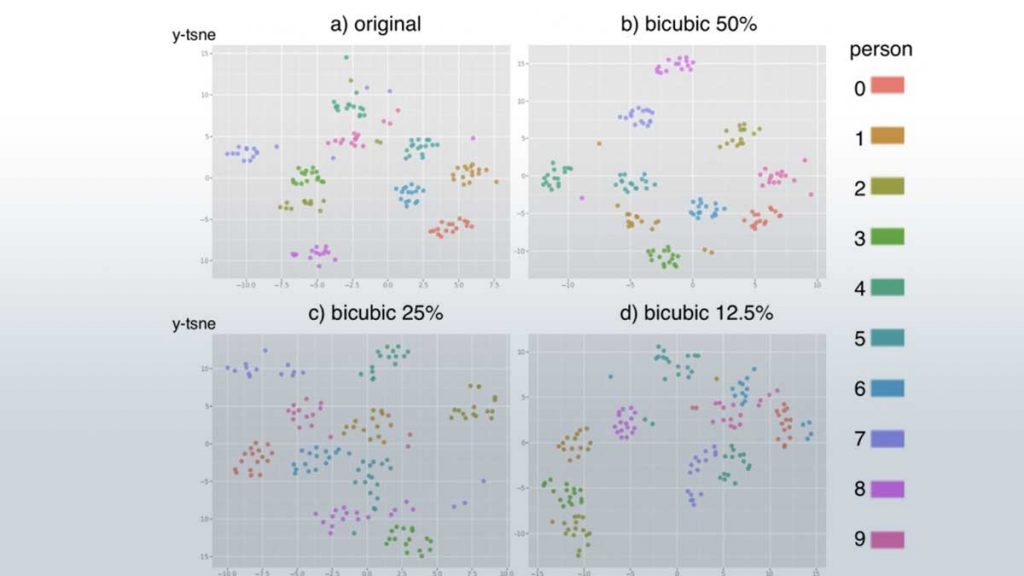
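The evaluation protocol in the table caption (20% of images for reference embeddings, 80% for testing) might be set up along the following lines. This is only a sketch under assumptions: the split is taken per person and in file order, which the source does not specify, and `split_reference_test` and `accuracy_percent` are hypothetical helper names.

```python
def split_reference_test(samples_by_person, reference_fraction=0.2):
    """Split each person's samples into a reference part and a test part.

    Assumption: the split is done per person, keeping at least one
    reference sample; the source only gives the overall 20%/80% ratio.
    """
    reference, test = {}, {}
    for person, samples in samples_by_person.items():
        cut = max(1, round(len(samples) * reference_fraction))
        reference[person] = samples[:cut]
        test[person] = samples[cut:]
    return reference, test


def accuracy_percent(predictions, labels):
    """Percentage of predictions that match the true labels."""
    correct = sum(p == label for p, label in zip(predictions, labels))
    return 100.0 * correct / len(labels)


# Ten placeholder image names per hypothetical volunteer.
samples = {
    "volunteer_a": [f"a_{i}.png" for i in range(10)],
    "volunteer_b": [f"b_{i}.png" for i in range(10)],
}
reference, test = split_reference_test(samples)
print(len(reference["volunteer_a"]), len(test["volunteer_a"]))  # prints "2 8"
print(accuracy_percent(["a", "b", "a", "a"], ["a", "b", "b", "a"]))  # prints "75.0"
```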

In Figure 1, we observe the influence of resolution degradation on the ability to distinguish various classes. While the original data can for the most part be neatly clustered, as resolution decreases, clusters increasingly overlap, making accurate classification more difficult.

Figure 1 – The influence of resolution degradation on ability to distinguish classes.

To cope with this, we applied SR to all image inputs scaled smaller than the original. Classification accuracy improved with SR for the IRIS dataset, which included motion, facial expressions, and other irregularities. Improvement by SR was minimal for the other dataset we tested, which included less dynamic images. Still, this seems to indicate the usefulness of SR in scenarios like remote vital signs monitoring and user authentication, which can rely on their subjects to remain motionless.

Conclusions and Next Steps

Our preliminary results show:

- DNNs for visible light images may be applicable to other image types, such as thermal images.

- Resolution degradation of thermal images reduces their classification accuracy when using a DNN trained for visible light images.

- Classification accuracy can in some cases be improved via the use of an SR model.

We hope to extend this research in the future to data collected in various other measuring scenarios, such as when subjects’ heads are turned horizontally or vertically. For more on this research, please review our paper, “Influence of Thermal Imagery Resolution on Accuracy of Deep Learning based Face Recognition.” For more research into this and other areas of AI and deep learning, please stay tuned to intel.ai, @IntelAIResearch, and @IntelAI.

Maciej Szankin

Software Engineer, AI Applications, Intel

Alicja Kwasniewska

Software Engineer, AI Applications, Intel