Dear Colleague,

Join us at the 2020 Vision Product of the Year Awards Ceremony, a free online event next Wednesday, June 3, 2020, from 9-10 am PT. We will announce the five winning companies (selected by our panel of independent judges) that are developing the next generation of computer vision products in each of five award categories: AI Processors, AI Software and Algorithms, Cameras and Sensors, Developer Tools, and Automotive Solutions. You’ll learn about the products and companies, and can then head to each company’s breakout room and engage directly with the winners in Q&A sessions. This event promises to be a fantastic way to keep abreast of key innovations in our rapidly moving field; register now!

We’ve got big news about the Embedded Vision Summit! Originally scheduled to take place in person last week in California, the 2020 Embedded Vision Summit is moving to a fully online experience, taking place from September 15 through September 25. The Summit remains the premier conference and exhibition for innovators adding computer vision and AI to products. Hear from and interact with over 100 expert speakers and industry leaders on the latest in practical computer vision and edge AI technology—including processors, tools, techniques, algorithms and applications—in both live and on-demand sessions. See cool demos of the latest building-block technologies from dozens of exhibitors. And hands-on trainings and workshops provide even more in-depth education opportunities. Attending the Summit is the perfect way to bring your next vision- or AI-based product to life. Are you ready to gain valuable insights and make important connections? Be sure to register today with promo code SUPEREBNL20-V to receive your Super Early Bird Discount!

Brian Dipert

Editor-In-Chief, Edge AI and Vision Alliance

Three Key Factors for Successful AI Projects

AI is transforming the products we build and the way we do business. AI using images and video is already at work in our smart home devices, our smartphones and many of our vehicles. But despite some clear successes, AI projects fail 85% of the time, according to Gartner. Fortunately, the industry is rapidly accumulating experience that we can learn from in order to find ways to reduce risk in AI projects. In this talk, Bruce Tannenbaum, Technical Marketing Manager for AI applications at MathWorks, presents three key factors for successful AI projects, especially those using visual data. The first factor is incorporating domain expertise into the design of the AI solution, rather than relying purely on brute-force application of generalized algorithms. The second success factor is obtaining the right quantities and types of data at each stage of the development process. The third factor is creating AI workflows that enable repeatable and deployable results. He illustrates the importance of these factors using case studies from diverse applications including automated driving, crop harvesting and tunnel excavation.

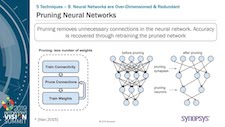

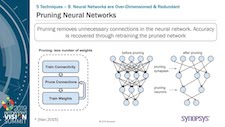

Five+ Techniques for Efficient Implementation of Neural Networks

Embedding real-time, large-scale deep learning vision applications at the edge is challenging due to their huge computational, memory and bandwidth requirements. System architects can mitigate these demands by modifying deep neural networks (DNNs) to make them more energy-efficient and less demanding of embedded processing hardware. In this presentation, Bert Moons, Hardware Design Architect at Synopsys, provides an introduction to today’s established techniques for efficient implementation of DNNs: advanced quantization, network decomposition, weight pruning and sharing, and sparsity-based compression. He also previews up-and-coming techniques such as trained quantization and correlation-based compression.
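To make two of these established techniques concrete, here is a minimal sketch of symmetric 8-bit weight quantization and magnitude-based weight pruning, written in plain Python over a flat list of weights. It is an illustrative assumption, not the method presented in the talk or any Synopsys tool; the function names `quantize_int8`, `dequantize` and `magnitude_prune` are hypothetical, and production toolchains apply these ideas per tensor or per channel, often with retraining afterward.

```python
# Illustrative sketch only (hypothetical helpers, not a vendor API):
# symmetric per-tensor int8 quantization and magnitude-based pruning.

def quantize_int8(weights):
    """Quantize floats to signed 8-bit codes using one shared scale.

    Returns (q, scale) so that each weight w is approximated by q * scale.
    """
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; avoid dividing by zero for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floating-point weights from the int8 codes."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -1.27, 0.01, 0.6]
    q, scale = quantize_int8(w)
    print("int8 codes:", q, "scale:", scale)
    print("dequantized:", dequantize(q, scale))
    print("pruned (50% sparsity):", magnitude_prune(w, 0.5))
```

Each int8 code needs a quarter of the storage of a 32-bit float, and the zeros produced by pruning are what sparsity-based compression schemes then exploit; the cost is a bounded approximation error (at most half a quantization step per weight here) that real flows typically recover through fine-tuning.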

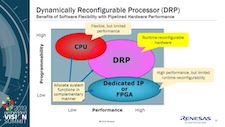

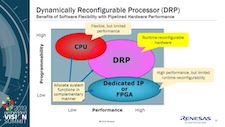

Dynamically Reconfigurable Processor Technology for Vision Processing

The Dynamically Reconfigurable Processing (DRP) block in the Arm Cortex-A9 based RZ/A2M MPU accelerates image processing algorithms with spatially pipelined, time-multiplexed, reconfigurable-hardware compute resources. This hybrid Arm/DRP architecture combines the economy, flexibility and ease-of-use of microprocessors with the high throughput and low latency of performance-optimized hardware. DRP technology achieves silicon area efficiency by dividing large data paths into sub-blocks that can be swapped into the DRP hardware on each clock cycle to accelerate multiple complex algorithms while avoiding the cost and power penalties associated with large FPGAs. Pre-built libraries and a C-language programming environment deliver these benefits without the need for hardware design expertise. Designs can be iteratively enhanced through pre-production and even after mass-market deployment. In this presentation, Yoshio Sato, Senior Product Marketing Manager in the Industrial Business Unit at Renesas, examines the DRP block’s architecture and operation, presents benchmarks demonstrating performance up to 20x greater than traditional CPUs and introduces resources for developing DRP-based embedded vision systems with the RZ/A2M MPU. Also see Renesas’ recent webinar on the topic.

The AI Engine: High Performance with Future-proof Architecture Adaptability

AI inference demands orders-of-magnitude more compute capacity than today’s SoCs offer. At the same time, neural network topologies are changing too quickly to be addressed by ASICs that take years to go from architecture to production. In this talk, Nick Ni, Director of Product Marketing at Xilinx, introduces the AI Engine, which complements the dynamically programmable FPGA fabric to enable ASIC-like performance via custom data flows and a flexible memory hierarchy. This combination provides an orders-of-magnitude boost in AI performance along with the hardware architecture flexibility needed to quickly adapt to rapidly evolving neural network topologies.
