This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

In robotics applications, 3D object poses provide crucial information to downstream algorithms such as navigation, motion planning, and manipulation. This helps robots make intelligent decisions based on surroundings. The pose of objects can be used by a robot to avoid obstacles and guide safe robotic motion or to interact with objects in the robot’s surroundings. Today, 3D pose estimation is used in many industries, including autonomous driving, factory and logistics, and assistive technology.

In this post, we present an overview of the 3D pose estimation framework released in NVIDIA Isaac SDK 2020.1 and how to use the 3D pose estimation feature for navigation and manipulation in intralogistics and smart manufacturing applications.

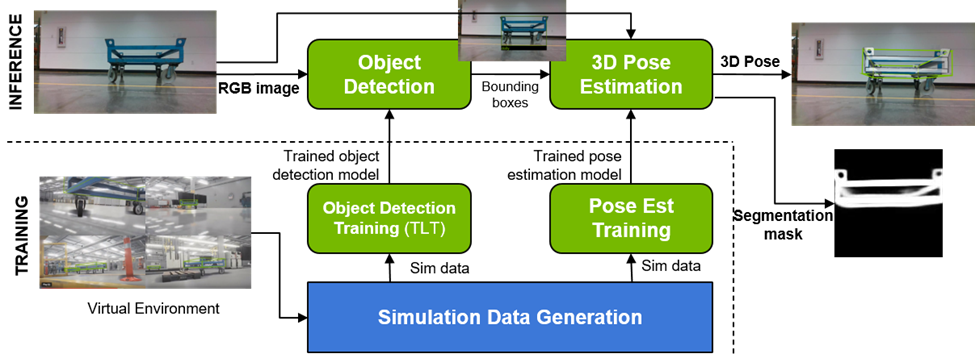

Figure 1 shows two scenarios where an object pose is important: a dolly to be picked up by an autonomous mobile robot, and a container to be picked up by a robotic arm. Figure 2 shows how pose prediction is trained in simulation and then applied in the real world.

Figure 1. Inference 3D bounding boxes (in green) along with the mesh (in grey) rendered with predicted poses on real data for industrial cart (left) and boxes (right).

Figure 2. Inference 3D bounding boxes (in green) rendered with predicted poses on both simulated (left) and real (right) input images for industrial cart.

Figure 3. 3D pose estimation training and inference pipeline in Isaac SDK.

Figure 3 shows the two main modules of the 3D pose estimation framework, which are shared by the training and inference pipelines in Isaac SDK:

- Object detection [using RGB full image as input]: The algorithm first detects objects from a known set of objects using a ResNet-based object detection inference module. You use NVIDIA DeepStream DetectNetv2, which is based on the ResNet50 architecture, for object detection.

- 3D pose estimation [using cropped RGB object image as input]: At inference time, you get the object bounding box from the object detection module and pass the cropped images of the detected objects, along with the bounding box parameters, as inputs into the deep neural network model for 3D pose estimation. The Isaac 3D pose estimation DNN outputs the pose and the decoded segmentation image. For more information, see the 3D Object Pose Estimation with Pose CNN Decoder section in the Isaac SDK documentation.
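The hand-off between the two modules can be sketched in a few lines of Python. This is only an illustration of the idea, not the Isaac SDK implementation: the function name `crop_detection`, the (row_min, col_min, row_max, col_max) box convention, and the normalization of the bounding box parameters by the image size are all assumptions made for this example.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of RGB pixels


def crop_detection(image: Image, bbox: Tuple[int, int, int, int]):
    """Crop a detected object and return the crop with its bounding box parameters.

    bbox is (row_min, col_min, row_max, col_max) in pixels with exclusive
    maxima. This convention is hypothetical and chosen only for the sketch.
    """
    rows, cols = len(image), len(image[0])
    # Clamp the box to the image so partially visible objects still crop.
    r0, c0 = max(0, bbox[0]), max(0, bbox[1])
    r1, c1 = min(rows, bbox[2]), min(cols, bbox[3])
    if r0 >= r1 or c0 >= c1:
        raise ValueError("bounding box does not overlap the image")
    crop = [row[c0:c1] for row in image[r0:r1]]
    # Box center and size, normalized by the image dimensions (an assumption).
    params: Dict[str, Tuple[float, float]] = {
        "center": ((r0 + r1) / 2 / rows, (c0 + c1) / 2 / cols),
        "size": ((r1 - r0) / rows, (c1 - c0) / cols),
    }
    return crop, params


if __name__ == "__main__":
    image = [[(r, c, 0) for c in range(6)] for r in range(4)]
    crop, params = crop_detection(image, (1, 2, 3, 5))
    print(len(crop), len(crop[0]), params)
```

The point of passing the bounding box parameters along with the crop is that the crop alone loses the information about where in the full image the object was seen, which the pose network needs to recover the object's position.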

Navigation in factory intralogistics environments

3D pose estimation is an integral part of the cart delivery operation that is common in most of the warehouse autonomous mobile robots (AMR). One such example is BMW’s Smart Transportation Robot (STR).

Figure 4 shows the BMW STR navigating autonomously around the warehouse and reaching a predetermined waypoint close to the industrial cart (dolly). The 3D pose estimation model takes the RGB camera feed from the robot as input and estimates the dolly’s pose in the robot’s camera coordinates. The dolly’s pose is then used in the LQR planner or RL-based path planner to steer the robot under the dolly to pick it up and deliver it to the second predetermined waypoint.

Figure 4. BMW STR carrying the industrial cart in the BMW factory plant.

In the next section, we describe how to generate data and train 3D pose estimation framework modules: object detection and 3D pose networks for cart delivery use cases.

Generating data and training 3D pose framework modules for cart delivery

One of the main goals of Isaac SDK is to enable training of the 3D pose estimation DNN in simulation, as it can provide an infinite stream of inexpensive, labelled data. IsaacSim provides a series of scenarios required to train and test multiple modules needed for the cart delivery application in the Factory01 scene in the Factory of the Future binary available in the IsaacSim Unity3D 2020.1 release archive.

Object detection training process

To run training for dolly detection, run any of the dolly detection training scenes from the Factory of the Future binary. Scenarios 7, 13, 14, and 15 provide training data for dolly detection. To start the scene with scenario 7 for generating the training data for detection, for example, run the following command from the IsaacSim release folder:

bob@desktop:~/isaac_sim_unity3d$ ./builds/factory_of_the_future.x86_64 --scene Factory01 --scenario 7

- ./builds/factory_of_the_future.x86_64 indicates the binary to run that contains the scene of interest.

- --scene indicates the scene name inside the binary to run, which is Factory01.

- --scenario indicates the scenario number in the scene to run, which is 7 for the object detection training setup.
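If you launch the simulator from a script rather than by hand, the command line above can be assembled programmatically. The following is a minimal sketch; the helper name `build_sim_command` and the `DOLLY_DETECTION_SCENARIOS` constant are hypothetical names introduced for this example, while the binary path, flags, and scenario numbers are the ones given in this post.

```python
import shlex
from typing import List

# Scenarios that provide dolly detection training data, as listed in this post.
DOLLY_DETECTION_SCENARIOS = (7, 13, 14, 15)


def build_sim_command(
    scene: str,
    scenario: int,
    binary: str = "./builds/factory_of_the_future.x86_64",
) -> List[str]:
    """Return the argument list that launches one scenario of a scene."""
    if scenario < 0:
        raise ValueError("scenario number must be non-negative")
    return [binary, "--scene", scene, "--scenario", str(scenario)]


if __name__ == "__main__":
    # Print one launch command per dolly detection scenario.
    for number in DOLLY_DETECTION_SCENARIOS:
        print(shlex.join(build_sim_command("Factory01", number)))
```

The argument list can be passed to `subprocess.run` with the IsaacSim release folder as the working directory.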

To save the simulated data offline, run the generate_kitti_dataset Isaac application alongside the simulation scene. From inside the Isaac SDK root folder, run the following command:

bob@desktop:~/isaac$ bazel run packages/ml/apps/generate_kitti_dataset

After generating the dataset, the NVIDIA Transfer Learning Toolkit is used to fine-tune a DetectNetv2 detection model. For more information about the GenerateKittiDataset app and training using the Transfer Learning Toolkit, see Object Detection with DetectNetv2 and Deploying Real-time Object Detection Models with the NVIDIA Isaac SDK and NVIDIA Transfer Learning Toolkit.
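For orientation, a KITTI object label file holds one line per object with 15 space-separated fields: class name, truncation, occlusion, alpha, the 2D box (left, top, right, bottom), 3D dimensions, 3D location, and rotation. For 2D detection training, the fields other than the class name and the 2D box are commonly set to zero. The sketch below writes such a line. It is an illustration of the file format only; we assume, without having verified it here, that the dataset application produces labels of this shape, and the class name `dolly` is a placeholder.

```python
def kitti_label_line(class_name: str, left: float, top: float,
                     right: float, bottom: float) -> str:
    """Format one KITTI label line for a 2D detection.

    Only the class name and the 2D box are filled in. The remaining
    fields are zeroed, which is a common choice for 2D-only training.
    """
    if right <= left or bottom <= top:
        raise ValueError("bounding box must have positive width and height")
    fields = [
        class_name,
        "0.00",  # truncated
        "0",     # occluded
        "0.00",  # alpha
        f"{left:.2f}", f"{top:.2f}", f"{right:.2f}", f"{bottom:.2f}",
    ]
    # 3D dimensions (3), 3D location (3), and rotation_y (1) are unused here.
    fields += ["0.00"] * 7
    return " ".join(fields)


if __name__ == "__main__":
    print(kitti_label_line("dolly", 100, 120, 300, 260))
```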

3D pose estimation training process

To start generating the stream of pose estimation model training data for the industrial cart (dolly), navigate to the folder containing the extracted IsaacSim release archive and run Scenario 8 of the Factory01 scene in the Factory of the Future binary by executing the following command:

bob@desktop:~/isaac_sim_unity3d$ ./builds/factory_of_the_future.x86_64 --scene Factory01 --scenario 8

The scenario in the Factory01 scene is set up to generate domain-randomized data along with the pose ground truth for the industrial cart. It varies the lighting conditions, saturation, occlusions, relative position of the dolly with respect to the camera, wheel rotations with respect to the dolly frame, and so on, all of which are required for training a robust model for the factory setting. This scene requires an NVIDIA RTX 2080 or better GPU to run at an acceptable (~30 Hz) framerate. The training scene is set up to render views from the same camera height above the ground as the camera on the robot. This yields more relevant training samples, which can speed up training.

After the binary starts running, run the following command to establish communication to the simulator and launch the training:

bob@desktop:~/isaac$ bazel run packages/object_pose_estimation/apps/pose_cnn_decoder/training:pose_estimation_cnn_training

The command runs the pose_estimation_cnn_training.py training script, which connects to the sample_accumulator node in the training application where the training data is collected. The path to this training application, the training model parameters and hyperparameters, the paths for saving the logs, and the starting point for training are all set in the following configuration file:

packages/object_pose_estimation/apps/pose_cnn_decoder/training/training.config.json

Reducing the learning rate in this config file to around 1e-4 after around the first 15000 iterations is recommended for better loss reduction during training for this use case. Running the training on a different GPU than the simulation might be required depending on the memory available.
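The recommendation amounts to a single-step learning rate schedule. The sketch below expresses it as a function, purely to make the rule explicit. The initial rate of 1e-3 is a placeholder assumption (this post does not state the default), and the function is not part of the Isaac SDK; in practice you edit the value in the config file and resume training.

```python
def learning_rate(
    iteration: int,
    initial_rate: float = 1e-3,   # placeholder, not the SDK default
    reduced_rate: float = 1e-4,   # value recommended in this post
    switch_iteration: int = 15000,
) -> float:
    """Return the learning rate for a single-step schedule."""
    if iteration < 0:
        raise ValueError("iteration must be non-negative")
    return initial_rate if iteration < switch_iteration else reduced_rate


if __name__ == "__main__":
    for it in (0, 14999, 15000, 30000):
        print(it, learning_rate(it))
```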

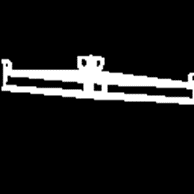

Figure 5 shows an example of the cropped and downsampled RGB image of the industrial cart object that is sent as input to the network, the corresponding clean ground truth segmentation image used to train the decoder, and the predicted decoder output from the model respectively, visualized in TensorBoard during training.

In this case, the decoder is trained to ignore the wheels as the wheel movement is independent of the cart. This predicted segmentation image demonstrates how the network has indeed learned to ignore the wheels and to successfully complete the missing pixels in the image even in the presence of partial occlusions. This is one of the important visual metrics of the 3D pose estimation architecture in Isaac SDK to analyze how well the model has been trained.

(a) Encoder color input

(b) Decoder ground truth

(c) Decoder output from the model

Figure 5. Cropped and re-sized RGB input image to encoder, corresponding segmentation mask ground truth image of the decoder to train on and predicted decoder output from the Pose CNN Decoder model. (from left to right)

Pose inference for industrial carts

We now discuss how to use the trained model for 3D pose estimation on real data as well as simulated data.

Inference on real data

Isaac SDK allows for 3D pose estimation inference using different sources of input RGB images, such as a group of images from a directory, a stream of images in Isaac SDK log format, an image stream from a simulation, or a RealSense camera. To test the sim-to-real transfer of the trained models, Isaac SDK also provides sample images and logs to run the inference on the industrial cart.

For pose estimation inference on a real RGB image, run the following command:

bob@desktop:~/isaac$ bazel run packages/object_pose_estimation/apps/pose_cnn_decoder:detection_pose_estimation_cnn_inference_app -- --mode image --rows 480 --cols 848 --optical_center_rows 240 --optical_center_cols 424 --focal_length 614.573

- --mode image indicates that the inference input is from images in a given input folder path.

- --rows and --cols represent the number of rows and columns of the input image.

- --optical_center_rows, --optical_center_cols, and --focal_length represent the optical center and focal length of the camera in pixels, which are required to reconstruct the pinhole model that is needed to compute the 3D pose.
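To see why these three intrinsics are enough, recall the pinhole model: a 3D point in the camera frame projects to a pixel by scaling with the focal length and shifting by the optical center. The sketch below uses the values from the command above. The axis convention (z pointing forward, x toward increasing columns, y toward increasing rows) and the names `Pinhole` and `project` are assumptions made for this example, not taken from the Isaac SDK.

```python
from typing import NamedTuple, Tuple


class Pinhole(NamedTuple):
    rows: int
    cols: int
    focal_length: float          # pixels
    optical_center_rows: float   # pixels
    optical_center_cols: float   # pixels


def project(camera: Pinhole, point: Tuple[float, float, float]) -> Tuple[float, float]:
    """Project a camera-frame point (x, y, z) in meters to (row, col) in pixels.

    Assumes z points forward, x toward increasing columns, and y toward
    increasing rows.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    row = camera.focal_length * y / z + camera.optical_center_rows
    col = camera.focal_length * x / z + camera.optical_center_cols
    return row, col


if __name__ == "__main__":
    camera = Pinhole(480, 848, 614.573, 240.0, 424.0)
    print(project(camera, (0.0, 0.0, 2.0)))  # a point on the optical axis
    print(project(camera, (1.0, 0.0, 2.0)))  # one meter to the side
```

A point on the optical axis lands exactly on the optical center, which is a quick sanity check when entering intrinsics for a new camera.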

For pose estimation inference on sample real Isaac SDK logs collected as input, run the following command:

bob@desktop:~/isaac$ bazel run packages/object_pose_estimation/apps/pose_cnn_decoder:detection_pose_estimation_cnn_inference_app -- --mode cask --rows 480 --cols 848

- --mode cask indicates that the inference input is from a channel in the Isaac log format. The default channel name is ‘color’.

- --rows and --cols represent the number of rows and columns of the input image.

- The pinhole model of the camera is stored in the Isaac logs and is not given through input arguments.

For the visualization of both detection and pose estimation along with the image stream, go to NVIDIA Isaac Sight (http://localhost:3000/) and enable all channels. The inference runtime of the pose model with decoder output on an NVIDIA Jetson Xavier is around 7.4 ms and ~135 fps with FP16 precision and 28 ms and ~33 fps with FP32 precision.

Inference in Factory of the Future simulation settings

To allow for testing the perception models independently and inside the navigation stack for cart delivery use case, IsaacSim Unity3D provides a Factory of the Future binary with the scene name Factory01 and Scenario number 1 that simulates a factory setting with the robot in the scene. To run just the perception module for this scene, launch the sim binary using the following command from the folder containing the extracted IsaacSim release archive:

bob@desktop:~/isaac_sim_unity3d$ ./builds/factory_of_the_future.x86_64 --scene Factory01 --scenario 1

This scenario in the scene spawns a group of industrial carts around the factory floor every time the simulation is launched. To help you run different modules involved in the cart delivery stack independently and test along with the full cart delivery stack application, a list of applications is available in the packages/cart_delivery folder inside the Isaac SDK root directory.

To test the perception module alone, which consists of pose estimation inference, run the following command for the perception app after the simulation is launched:

bob@desktop:~/isaac$ bazel run packages/cart_delivery/apps:perception -- --more packages/cart_delivery/apps/detection_pose_estimation.config.json,packages/navsim/robots/str4.json

Object detection and pose estimation parameters like models, bounding box filtering based on area, confidence thresholds, filtering 3D poses based on depth, and so on can all be changed in the input configuration file:

packages/cart_delivery/apps/detection_pose_estimation.config.json

For example, at inference time, to filter out the poses of carts that are more than three meters away from the camera in the x-direction, lower the x threshold by changing the threshold_translation parameter in the detections_filter component from [7.0, 1.5, 7.0] to [3.0, 1.5, 7.0]. This parameter allows you to limit the range of pose estimation in the case of multiple visible cart instances at inference time.

...
"detection_pose_estimation.detections_filter": {
  "Detections3Filter": {
    "detection_frame": "camera",
    "threshold_translation": [3.0, 1.5, 7.0],
    "threshold_rotation": [6.3, 6.3, 6.3]
  }
},
...
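The effect of the translation threshold can be illustrated with a small filter. This is a sketch of the idea only: we assume that a pose is kept when the absolute value of each translation component is within the corresponding threshold, which may differ from how the filter component is actually implemented. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Translation = Tuple[float, float, float]


def within_thresholds(translation: Translation,
                      threshold_translation: Sequence[float]) -> bool:
    """Return True if every component is within its per-axis threshold."""
    return all(abs(value) <= limit
               for value, limit in zip(translation, threshold_translation))


def filter_poses(translations: List[Translation],
                 threshold_translation: Sequence[float]) -> List[Translation]:
    """Keep only the poses whose translation passes the per-axis thresholds."""
    return [t for t in translations
            if within_thresholds(t, threshold_translation)]


if __name__ == "__main__":
    # Two carts in the camera frame: one 2.5 m and one 4.0 m away along x.
    carts = [(2.5, 0.2, 1.0), (4.0, 0.1, 1.0)]
    print(filter_poses(carts, [7.0, 1.5, 7.0]))  # both carts pass
    print(filter_poses(carts, [3.0, 1.5, 7.0]))  # only the nearer cart passes
```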

This application allows you to steer the robot inside the factory with the keyboard or mouse through the Virtual Gamepad window in Isaac Sight (http://localhost:3000/). You can see the live camera stream along with the estimated 3D pose of the industrial carts in the image frame in Isaac Sight.

Finally, to run the full stack cart delivery application with the perception module integrated into the planner, first launch the simulation with Scenario 1 in Factory01 scene using the same command as for the perception application from inside the Isaac Sim release archive. Then, run the following command from the Isaac SDK directory:

bob@desktop:~/isaac$ bazel run packages/cart_delivery/apps:cart_delivery

The application moves the STR to a random waypoint for cart pickup every time the application is launched. This allows for testing of the cart delivery loop for multiple scenarios. For the robot to successfully steer under the industrial cart for pickup, the room for error along the centerline is 5–10 cm, depending on the rotation of the wheels. Run the application multiple times to allow for the cart pickup from different relative robot positions with respect to the cart and thus test the reliability of the perception models in the simulation setup. For more information about the behavior tree for the app and individual components, see the Cart Delivery in the Factory of the Future section in the Isaac SDK 2020.1 documentation.

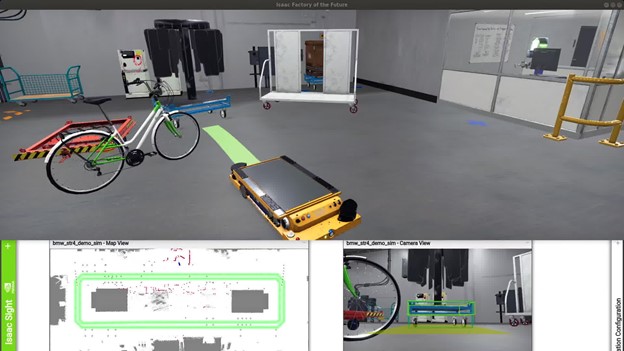

Figure 6. Industrial cart pose estimation linked to the navigation path planner in Isaac SDK.

The top image in Figure 6 shows a snapshot taken during the autonomous navigation of the BMW STR robot in the Isaac Sim Unity3D Factory of Future scene when it reaches the waypoint with the industrial cart in sight. The estimated dolly pose shown in the green 3D bounding box in the bottom right image is used to update the map used for the path planner. The planned path from the LQR planner is shown in the top image in the green bar emerging from the STR. The full loop is tested completely in simulation before deploying on the robot in the real world.

Another way to test the domain transfer of perception models is by testing the performance on data from different simulation environments. Omniverse IsaacSim is based on the NVIDIA Omniverse Simulation platform and provides access to NVIDIA PhysX and NVIDIA RTX ray-tracing technologies for high performance, photo-realistic, robotic simulations. Try out the cart delivery application containing the perception model in a small warehouse setup in Omniverse Kit by following the steps detailed in the Omniverse IsaacSim section in the Isaac SDK 2020.1 documentation. You can test the capability of detection and pose estimation model transfer to different domains than the training set.

Manipulation in factory intralogistics environments

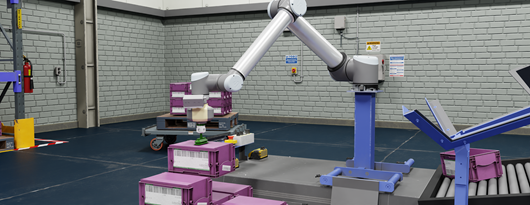

The manipulation of objects is another important application in factory settings. In Isaac SDK, you can explore the pick and place operation of the boxes where the boxes are picked from a conveyor belt in the factory and stacked on the pallet that is then transported to the loading zones (Figure 7). In this context, the goal is to estimate the 3D pose of the industrial box from a waypoint so that robots such as the UR10 arm can plan their path accordingly and perform the pick and place operation.

Figure 7. UR10 arm performing the pick and place operation of the pink industrial boxes.

Generating data and training 3D pose estimation models for boxes

Like the cart delivery use case, you perform training with simulation data.

Object detection training process

To run the simulation scene that generates industrial box detection training samples, run the following command:

bob@desktop:~/isaac_sim_unity3d$ ./builds/factory_of_the_future.x86_64 --scene Factory01 --scenario 9

This scenario moves the camera to get different view positions like the UR10 arm motion with industrial boxes spawning on the cart. Different distractor objects like boxes of different colors and sizes are spawned in addition to random objects, to enable the model to learn to differentiate and detect the industrial boxes correctly. This increases the robustness of the model to false positives. To save the simulated ground truth detection data offline, run the generate_kitti_dataset Isaac application alongside the simulation scene. From inside the Isaac SDK root folder, run the following command:

bob@desktop:~/isaac$ bazel run packages/ml/apps/generate_kitti_dataset

After generating the dataset, the NVIDIA Transfer Learning Toolkit is used to fine-tune a DetectNetv2 detection model. For more information about the GenerateKittiDataset app and training using the Transfer Learning Toolkit, see Object Detection with DetectNetv2 and Deploying Real-time Object Detection Models with the NVIDIA Isaac SDK and NVIDIA Transfer Learning Toolkit.

Pose estimation training process

To run the simulation scene that generates pose estimation training samples for the industrial box, run the following command:

bob@desktop:~/isaac_sim_unity3d$ ./builds/factory_of_the_future.x86_64 --scene Factory01 --scenario 10

Train the pose estimation for boxes with the same Isaac application as earlier, running simultaneously alongside the Factory of the Future scene:

bob@desktop:~/isaac$ bazel run packages/object_pose_estimation/apps/pose_cnn_decoder/training:pose_estimation_cnn_training

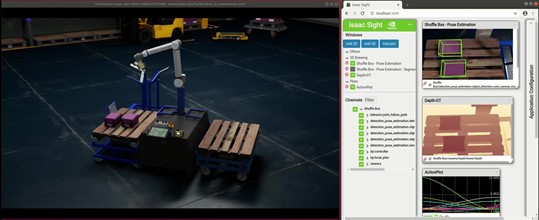

Inference application: Shuffle box

Pose estimation for the industrial box is showcased using the shuffle box application, which demonstrates the transfer of both detection and pose estimation models across simulations. The application shows a UR10 arm moving industrial boxes to and from stationary pallets in Omniverse IsaacSim. The robot moves boxes to and from fixed positions and runs perception from its wrist-mounted camera during the motion. However, the perception module is not yet integrated into the planner to determine the pickup location of the box. That integration is part of the Isaac SDK 2020.2 release.

To run this application, follow the IsaacSim Omniverse documentation to start the simulator and open the stage:

omni:/Isaac/Samples/Isaac_SDK/Scenario/sortbot_sim.usd

Start the simulation and the Robot Engine Bridge (REB) extension. Figure 8 shows the snapshot of the UR10 arm in a small warehouse scene in Omniverse Kit, which picks and transfers the industrial boxes from the left pallet to the right pallet.

Figure 8. The UR10 arm performing the pick and place operation in Omniverse Kit using the Isaac SDK shuffle box application.

Then, run the shuffle box Isaac SDK application with the following command:

bob@desktop:~/isaac$ bazel run apps/samples/manipulation:shuffle_box

Like the cart delivery application earlier, the shuffle box application uses a configuration file to define inference and filtering parameters:

apps/samples/manipulation/shuffle_box_detection_pose_estimation.config.json

Opening WebSight at localhost:3000 results in a view like Figure 8. It shows the camera view along with the predicted pose visualized in green 3D bounding boxes, 3D pose estimation feature, depth map, and trajectory plot in order from top to bottom.

Omniverse IsaacSim 2020.1 provides sample scenes for cart delivery in small warehouses and the manipulation of boxes. Isaac SDK 2020.1 provides sample applications for both use cases, which showcase the pose estimation of carts and boxes. For more information, see Omniverse IsaacSim.

Summary

In this post, you learned how to use the Isaac 3D pose estimation model for navigation and manipulation use cases along with how to generate the synthetic data and train the models for these use cases. To learn more about this feature and the DNN architecture, see 3D Object Pose Estimation with Pose CNN Decoder and download Isaac SDK 2020.1 to try it out.

For more information, see the following resources:

- Download Factory of the Future scenarios

- Download Isaac SDK

- Deploying Real-time Object Detection Models with the NVIDIA Isaac SDK and NVIDIA Transfer Learning Toolkit

Sravya Nimmagadda

AI/Robotics Research and Development Engineer, Isaac SDK, NVIDIA

Divya Bhaskara

Robotics Software Engineer, Isaac SDK, NVIDIA