Hoon Choi, Fellow at Lattice Semiconductor, presents the “Machine-Learning-Based Perception on a Tiny, Low-Power FPGA” tutorial at the September 2020 Embedded Vision Summit.

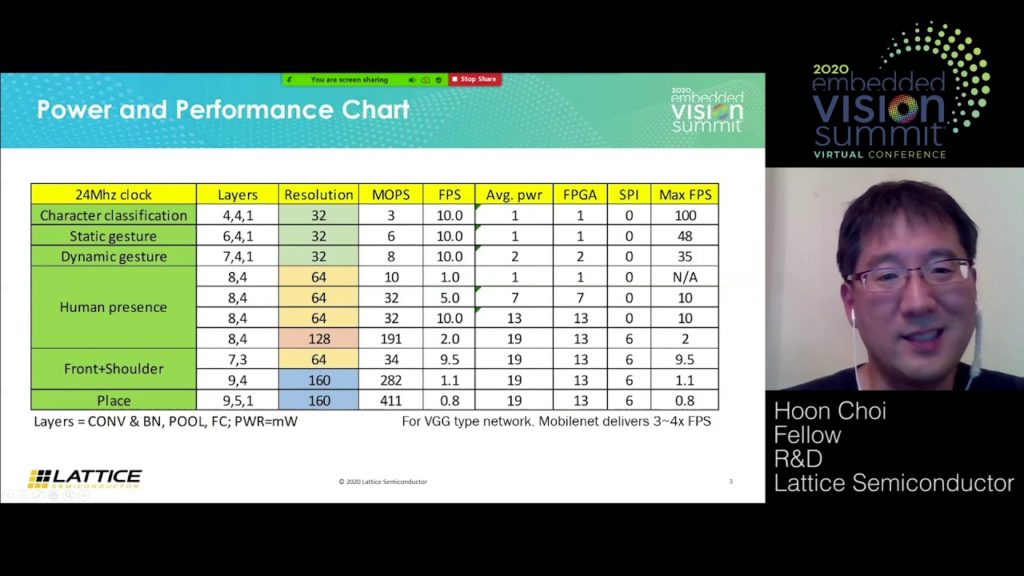

In this tutorial, Choi presents a set of machine-learning-based perception solutions that his company implemented on a tiny (5.4 mm² package), low-power FPGA. These solutions include hand gesture classification, human detection and counting, local face identification, location feature extraction, front-facing human detection and shoulder surfing detection, among others.

Choi describes Lattice’s compact processing engine structure, which fits into fewer than 5K FPGA look-up tables yet can support networks of various sizes. He also explains how Lattice selected networks and which optimizations the company used to make them suitable for low-power, low-cost edge applications. Finally, he describes how Lattice leverages the on-the-fly self-reconfiguration capability of FPGAs to run a sequence of processing engines and neural networks in a single FPGA.

A PDF of the slides is also available.