Pei Zhang, Associate Research Professor at Carnegie Mellon University, presents the “Structures as Sensors: Smaller-Data Learning in the Physical World” tutorial at the September 2020 Embedded Vision Summit.

Machine learning has become a useful tool for many data-rich problems. However, its use in cyber-physical systems has been limited for two reasons: it needs large amounts of well-labeled data that must be tailored to each deployment, and a large number of variables (e.g., weather and time) can affect data in the physical world.

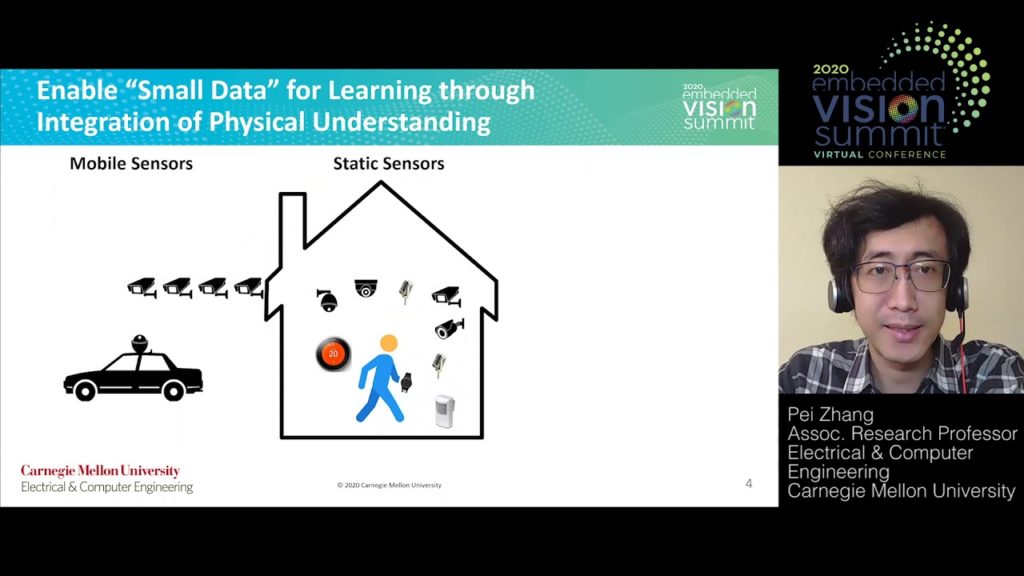

This talk introduces the problem through the concept of Structures as Sensors (SaS), in which the infrastructure (e.g., a building or a vehicle fleet) acts as the physical element of the sensor, and its response is interpreted to obtain information about the occupants and the environment. Zhang presents three physics-based approaches to reduce the data demand for robust learning in SaS:

- Generate data through the use of physical models.

- Improve sensed data through actuation of the sensing system.

- Combine and transfer data from multiple deployments using physical understanding.

See here for a PDF of the slides.