| LETTER FROM THE EDITOR |

Dear Colleague,

Check out the upcoming webinars from the Edge AI and Vision Alliance and its Member companies, listed in the Upcoming Industry Events section of this newsletter.

Brian Dipert

Editor-In-Chief, Edge AI and Vision Alliance

| APPLICATION AND MARKET TRENDS |

Key Trends and Challenges in Practical Visual AI and Computer Vision

With visual AI and computer vision technologies advancing faster than ever, it can be difficult to see the big picture. This talk from Jeff Bier, Founder of the Edge AI and Vision Alliance and President of BDTI, examines the most important areas of recent progress that are enabling developers of vision-based systems and applications, as well as the most significant challenges inhibiting more widespread and successful development of vision-based products. Bier explores key practical aspects of algorithms, the new wave of edge AI processors, development tools and processes, and emerging classes of sensors. In each of these domains, he highlights recent developments that illustrate where the industry is heading and identifies obstacles that still need to be addressed. Throughout the talk, he calls out examples of leading-edge commercial building-block technologies and end products that illustrate these trends and challenges.

Market Trends in Automotive Perception: From Insect-Like to Human-Like Intelligence

Today there are two paths towards autonomous vehicles. Mass-market automobiles continue to add more sensors and more compute power to enable increasingly sophisticated ADAS functionality. Separately, developers of robotic vehicles utilize high-end, industrial-grade sensors (lidar, cameras and radars) along with massive centralized computing. Either way, the push towards autonomy demands more and more computational power as increasingly demanding algorithms process increasing amounts of sensor data. In this presentation, Pierre Cambou, Principal Analyst at Yole Développement, shares his company’s analysis and forecast of the ADAS and autonomous vehicle perception market. When will cars with L2 to L5 automation become mainstream? What sorts of processing power will they require? What alternative innovation scenarios might disrupt current trends?

| BUSINESS CONSIDERATIONS |

Lessons From the Start-up Trenches

This session with Oliver Gunasekara, founder and CEO of NGCodec, explores lessons learned during the journey of NGCodec, a video codec developer, from its founding in 2012 to its successful acquisition by Xilinx in 2019. Gunasekara begins with a brief presentation summarizing NGCodec’s path and sharing some of the key lessons learned along the way. These include the reality that ideas are easy but execution is hard, which means that sharing your idea is important. Gunasekara also explains why he believes that perseverance and grit are critical leadership skills. He shares why he has come to believe that ultimately, it’s all about the team, and that, given the competitive market in Silicon Valley, leveraging a remote team can really pay off. Finally, he touches on structuring the company foundation and stock options for optimum tax efficiency, and then delves deeper into key lessons learned in an interview conducted by Shweta Srivastava.

Can You Patent Your AI-Based Invention?

Patenting inventions is one way to capitalize on the hard work and creativity that drive an innovative product, but recent cases have made things difficult for innovators whose inventions are expressed in software systems and processes. Software itself frequently cannot be patented. But this is not to say that AI-based vision and embedded vision inventions are unpatentable. This presentation from Thomas Lebens, Partner at Fitch, Even, Tabin & Flannery LLP, gives you an update on the latest developments in software patentability and helps you navigate this landscape to best protect your innovative efforts.

| UPCOMING INDUSTRY EVENTS |

Brain-inspired Processing Architecture Delivers High Performance, Energy-efficient, Cost-effective AI – GrAI Matter Labs Webinar: March 11, 2021, 9:00 am PT

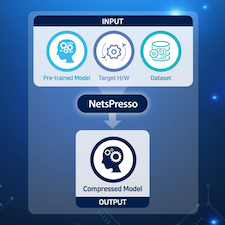

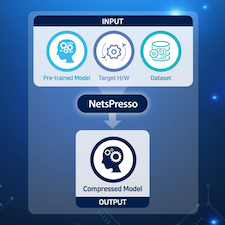

Selecting and Combining Deep Learning Model Optimization Techniques for Enhanced On-device AI – Nota Webinar: March 23, 2021, 9:00 am PT

Deep Learning for Embedded Computer Vision: An Introduction – Edge AI and Vision Alliance Webinar: March 24, 2021, 9:00 am PT

Enabling Small Form Factor, Anti-tamper, High-reliability, Fanless Artificial Intelligence and Machine Learning – Microchip Technology Webinar: March 25, 2021, 9:00 am PT

Optimizing a Camera ISP to Automatically Improve Computer Vision Accuracy – Algolux Webinar: March 30, 2021, 9:00 am PT

| FEATURED NEWS |

Lattice Expands mVision Solutions Stack Capabilities

STMicroelectronics Boosts Security Along with AI and IoT Application Development with STM32MP1 Ecosystem Extensions

Renesas Updates R-Car V3H with Improved Deep Learning Performance for Latest NCAP Requirements Including Driver and Occupant Monitoring Systems

Turn a Touch Interface Touchless with Intel RealSense TCS

Basler Announces Flexible Processing Board for Vision Applications

| VISION PRODUCT OF THE YEAR WINNER SHOWCASE |

NVIDIA Jetson Nano (Best AI Processor)

NVIDIA’s Jetson Nano is the 2020 Vision Product of the Year Award Winner in the AI Processors category. Jetson Nano delivers the power of modern AI in the smallest supercomputer for embedded and IoT. Jetson Nano is a small form factor, power-efficient, low-cost and production-ready System on Module (SOM) and Developer Kit that opens up AI to educators, makers and embedded developers who previously lacked access to it. Jetson Nano delivers up to 472 GFLOPS of accelerated computing, can run many modern neural networks in parallel, and delivers the performance to process data from multiple high-resolution sensors, including cameras, lidar, IMU, ToF and more, to sense, process and act in an AI system, while consuming as little as 5 W.

Please see here for more information on NVIDIA and its Jetson Nano.

The Edge AI and Vision Product of the Year Awards (an expansion of previous years’ Vision Product of the Year Awards) celebrate the innovation of the industry’s leading companies that are developing and enabling the next generation of edge AI and computer vision products. Winning a Product of the Year award recognizes your leadership in edge AI and computer vision as evaluated by independent industry experts. The Edge AI and Vision Alliance is now accepting applications for the 2021 Awards competition. The submission deadline is Friday, March 19; for more information and to enter, please see the program page.