This blog post was originally published at Opteran Technologies’ website. It is reprinted here with the permission of Opteran Technologies.

Engineering and nature have often had a very close relationship, but as we look to solve some of the most fundamental challenges in robotics and artificial intelligence, nature has an even more important role to play. If you would like to understand why, I would thoroughly recommend reading an article by Professor Barbara Webb of the University of Edinburgh in Science Magazine, in which she suggests that insect brains may hold the key to improving autonomous technologies.

First, though, what is the problem we’re trying to solve? Opteran Technologies is currently developing insect-inspired brains to enable robots to see, sense, act and make decisions. Why? We are convinced that it will lead to smarter robots, as Prof. Webb mentions at the end of her article. The problem with today’s autonomous machines is that they rely on deep learning and other machine learning methods that are inefficient and unaffordable for lower-cost applications. Training a drone or autonomous vehicle to navigate a route and avoid obstacles requires extensive datasets to ensure the solution is sufficiently general. This adds significantly to the cost of running deep learning-based applications and still does not guarantee they will be able to deal with every situation.

Compared to nature, human engineering finishes a pretty poor second when it comes to building simple structures capable of complex actions at a micro-scale. Prof. Webb describes the sophistication that such natural intelligence offers autonomous machines, and it is precisely what inspired us to found Opteran Technologies. The company grew out of our research projects into insect brains, which studied animals such as the honeybee. Honeybees have far fewer neurons (around 1 million) than bigger animals like mice (70 million) or humans (86 billion), yet they can still generate very complex behaviours such as long-distance navigation. We chose to start developing our technology based on the honeybee’s brain because, with just a million neurons, the honeybee is still an amazing visual navigator, able to cover distances of up to 10 kilometres and then, as Prof. Webb points out, return to its nest.

Prof. Webb’s article takes us on an in-depth tour of the main regions in the honeybee brain, particularly the central complex, which gives the bee its tremendous sense of place. This allows it to return home and to revisit a flower patch it has just returned from, possibly guided by a snapshot stored in its memory. While this requires further research, Prof. Webb’s explanation of how bees navigate reflects what the team at Opteran has been looking to achieve. We have reverse-engineered insect brains, starting with early visual processing and moving on to navigation.

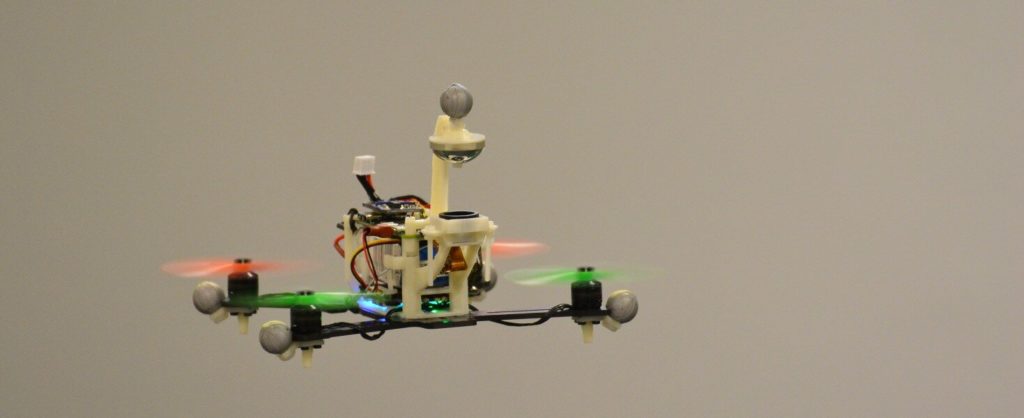

All this takes place on a single computer chip drawing less than 1 watt of power, and today our chips control sub-250g drones with fully onboard autonomy, using simple, cheap visual and inertial sensors. So, to Professor Webb’s point that we could see “smarter robots” in the future, we believe we are already well on the way to helping autonomous machines see, sense and navigate.

Longer term, we are mapping out the rapid learning and decision-making abilities in the rest of the insect brain, aiming for an affirmative answer to the question: “What about modelling a whole insect brain?” We believe such a goal is achievable, and the payoff will be a radically simpler, more efficient approach to robust autonomy than existing complex and expensive machine learning approaches.

James Marshall

CSO and Co-founder, Opteran Technologies