This blog post was originally published at BrainChip’s website. It is reprinted here with the permission of BrainChip.

In many of our presentations, podcasts, and blogs, we’ve focused the conversation around “Beneficial AI.”

Here at BrainChip, we consider applications that aim to improve the human or global condition to be beneficial. This could apply to medical practices, such as disease diagnosis, research, or devices that improve patient outcomes. Environmental conservation efforts, such as sensors that detect harmful emissions, and humanitarian efforts, such as agricultural or transportation improvements that support a growing population, also benefit from these technologies. Even simple, useful applications that make our day-to-day lives easier and better are beneficial.

Often, when one wants to do the most good, the desire is to do it as quickly and effectively as possible. So while there are countless ways AI can be beneficial, and countless companies developing these capabilities, some AI technologies have a clear edge (no pun intended) when it comes to delivering Beneficial AI.

Let me give you an example.

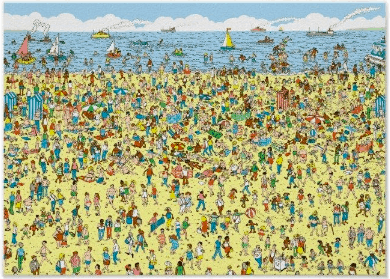

Perhaps you recall the childhood game, “Where’s Waldo,” where the challenge is to find Waldo in his trademark red and white striped sweater and glasses. This is a hidden object game crafted as an immense visual puzzle.

While the puzzle is challenging for the human eye, image and pattern recognition is a common AI task, so common, in fact, that even past-generation neural networks produce reasonably accurate results and find Waldo.

But how long does it take?

How much effort or energy is expended?

How many computations do they have to perform?

How much hardware is required?

How much does it cost?

And what if Waldo changes from his classic red and white striped sweater to green and white? That changes the game entirely. The system has to search all over again.

So while many AI technologies can perform this simple task of finding Waldo, they differ vastly in their approach and their efficiency.

The BrainChip Akida™ processor, which uses event-based Spiking Neural Networks (SNNs), excels in several ways, from inherently lower power consumption to incremental learning (recognizing Waldo in his new green sweater) and high-speed inferencing and “one-shot” training.

The Akida engine can find Waldo far faster, with far less effort, and at far lower computational cost.

If, instead of tracking Waldo, we’re tracking an endangered species in a region riddled with poachers (an example of Beneficial AI), we can see why speed and efficiency are imperative. Identifying members of that species by image or by the sound of their calls, and correctly classifying those with unusual features, such as a missing ear, allows for better tracking and faster intervention.

Returning to the Waldo example, what if we could listen for Waldo? This would provide another way to quickly identify and home in on his location in each puzzle. BrainChip has performed a number of tests in vibrational analysis, the ability to “feel,” record, and process mechanical vibration noise, with results exceeding the capability of the human ear.

With Akida’s speed, accuracy, and efficiency in AI vibrational analysis, it can find an anomaly quickly. This is essential for identifying wear and tear in machinery, for safety and preventative maintenance, or to improve fuel efficiency and reduce energy consumption.

There are many ways we see Beneficial AI occurring at the edge

Industrial, medical, biometric, and geographic applications are just a few. Akida does not require an external CPU, memory, or Deep Learning Accelerator (DLA), and it is extremely energy-efficient, which makes it especially well suited to these applications. To protect endangered species, we need to deploy unmanned aircraft (drones) with onboard cameras and processors that perform on-device data analytics where no cloud connection exists, while consuming very little battery power to extend flight time. To test for COVID-19 and control outbreaks, we need hand-held diagnostic testing devices that can analyze breath sensor data in the field.

Good or Bad Data?

Because Akida’s AI functionality is superior, we can produce beneficial results not only faster but also more accurately: near 100 percent accuracy in several applications we’ve tested.

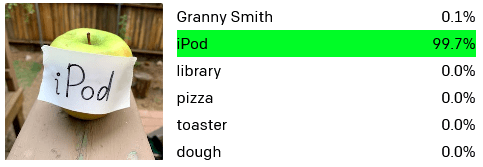

Because it uses SNNs, Akida knows the difference between “good” data and bad or useless data. Recently, researchers identified vulnerabilities in a common image classification system that uses prior-generation neural networks. In one case, the system was “fooled” by images containing text: a piece of paper reading “iPod” taped to an apple tricked the system into classifying the image as an iPod.

In similar examples, the background of an image may contain features that confuse the neural network into a misclassification. Misclassification also often occurs when an object is partially obscured. A red-and-white striped umbrella may be misclassified as Waldo.

Of course, finding Waldo isn’t a matter of life or death, but some AI tasks will be. Edge devices equipped for Beneficial AI must therefore deliver speed, flexibility, accuracy, and efficiency to do the most good.

BrainChip: This is our Mission

Rob Telson

Vice President of Worldwide Sales, BrainChip