Clear vision using glasses (Photo by timJ on Unsplash)

This blog post was originally published at Xailient’s website. It is reprinted here with the permission of Xailient.

Autonomous driving, facial recognition, traffic surveillance, person tracking, and object counting, all of these applications have one thing in common, Computer Vision (CV). Since the success of deep learning in CV tasks since AlexNet, a deep learning algorithm won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) CV Competition in 2012, more applications are taking advantage of this advancement in CV.

While the deep learning models are getting better and better at CV tasks such as object detection, these models are getting bigger and bigger. From 2012 to 2015, the size of the winning model in the ILSVRC CV increased 16 times. The bigger the model, the more the parameters it has, and the higher the numbers of computations it needs for inference, which in turn means higher energy consumption. It took AlphaGo, an AI, 1,920 CPUs and 280 GPUs to train in order to beat human champion in the game Go, which is approximately $3,000 in electricity cost. Deep learning models are no doubt improving and have outperformed humans in certain tasks, but pay the cost in terms of increase in size and higher energy consumption.

Researchers have access to GPU powered devices to run their experiments on and so most of the baseline models are trained and evaluated on GPU devices. It is great if we just want to keep improving these models, but poses a challenge when we want to use it for real-world applications to solve real-world problems. From smartphones to smart homes, applications are now demanding real-time computation and real-time response. The major challenge to meet this real-time demand in a computationally limited platform.

mAP vs GPU wallclock time colored by meta-architecture (Huang et al., 2017)

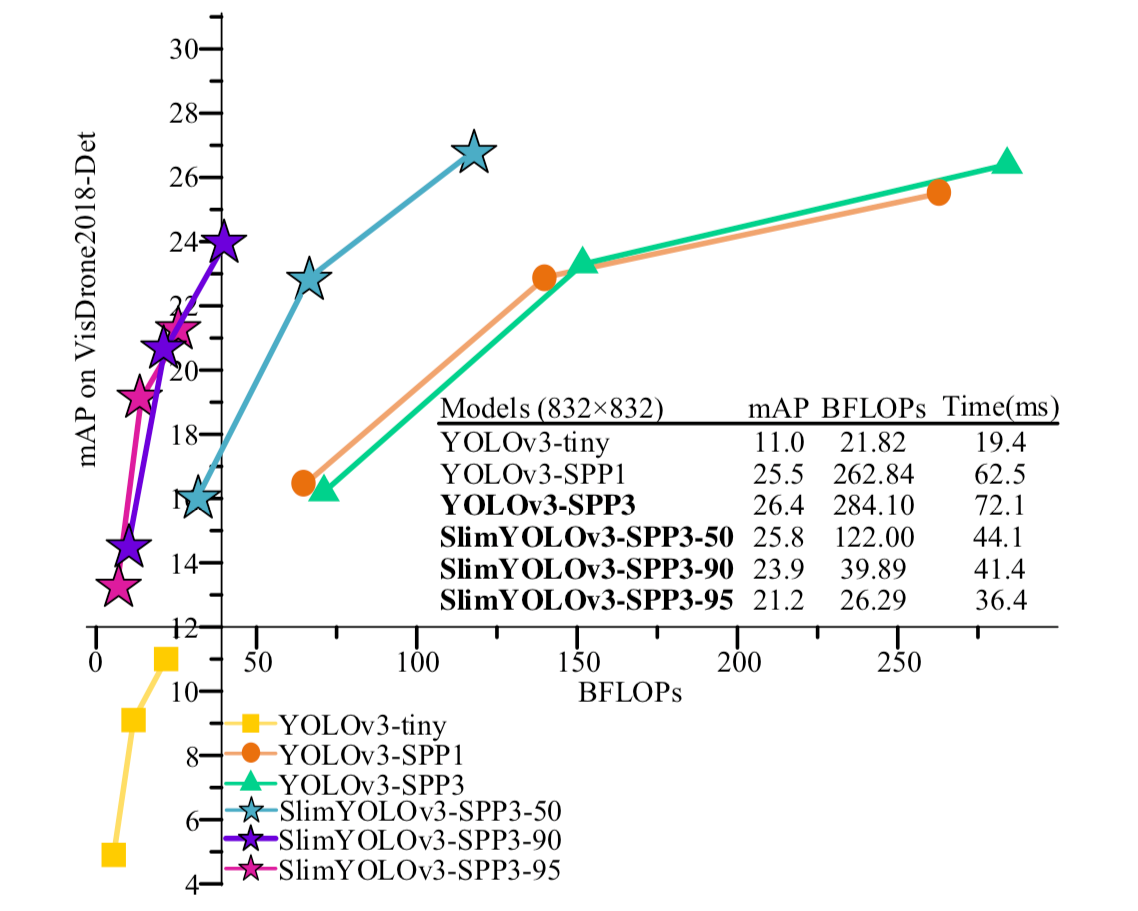

Previous models such as YOLO and R-CNN have proved their efficiency and accuracy in GPU based computers but are not useful for real-time application that uses non-GPU computers. Over the years, variations of these models have been developed to meet the real-time requirements, and while they are successful in shrinking down the model in size so that they fit and run on computationally limited devices utilizing very low memory, they compromise on accuracy. MobileNets, SqueezeNet, TinyYOLO, YOLO-LITE, and SlimYOLO are some examples of these models.

The real achievement is when we can use this on a $5 device, such as a Raspberry Pi Zero in real-time, without compromising on accuracy.

Pre-trained AI models available to download for free here

Previous models such as YOLO and R-CNN have proved their efficiency and accuracy in GPU based computers but are not useful for real-time application that uses non-GPU computers. Over the years, variations of these models have been developed to meet the real-time requirements, and while they are successful in shrinking down the model in size so that they fit and run on computationally limited devices utilising very low memory, they compromise on accuracy. MobileNets, SqueezeNet, TinyYOLO, YOLO-LITE and SlimYOLO are some examples of these models.

There is a tradeoff between system matrices when making Deep Neural Network (DNN) design decisions. A DNN model with higher accuracy, for example, is more likely to use more memory to store model parameters and have higher latency. On the contrary, a DNN model with fewer parameters is likely to use less computational resources and thus execute faster, but may not have the accuracy required to meet the applications’s requirements (Chen & Ran, 2019).

Drones or general Unmanned Aerial Vehicles (UAVs), with vehicle tracking capability, for example, needs to be energy efficient so that it operates for longer time on battery power, and needs to track a vehicle in real-time with high accuracy, otherwise, it will be of less value. Think about how annoying it is when you open the camera application of your smartphone and everyone is ready with their pose, and it takes forever for the camera to open, and again when it opens, it takes forever to click a single photo. If we expect the camera in our smartphones to be fast, it is reasonable to expect high performance from vehicle tracking drones.

Billion floating point operations (BFLOPs) vs accuracy (mAP) on VisDrone2018-Det benckmark dataset (Zhang, Zhong, & Li, 2019)

To meet the real-time demands, deep learning models need to have low latency for faster response, be small so that they can it into edge devices, utilise minimum energy so that they can run on battery for a longer period of time, and have the same accuracy as when they run on GPU powered devices.

Drones or general Unmanned Aerial Vehicles (UAVs), with vehicle tracking capability, for example, needs to be energy efficient so that it operates for longer time on battery power, and needs to track vehicle in real-time with high accuracy, otherwise it will be of less value. Think about how annoying it is when you open the camera application of your smartphone and everyone is ready with their pose, and it takes forever for the camera to open, and again when it opens, it takes forever to click a single photo. If we expect the camera in our smartphones to be fast, it is reasonable to expect high performance from vehicle tracking drones.

Xailient’s Detectum is the solution!

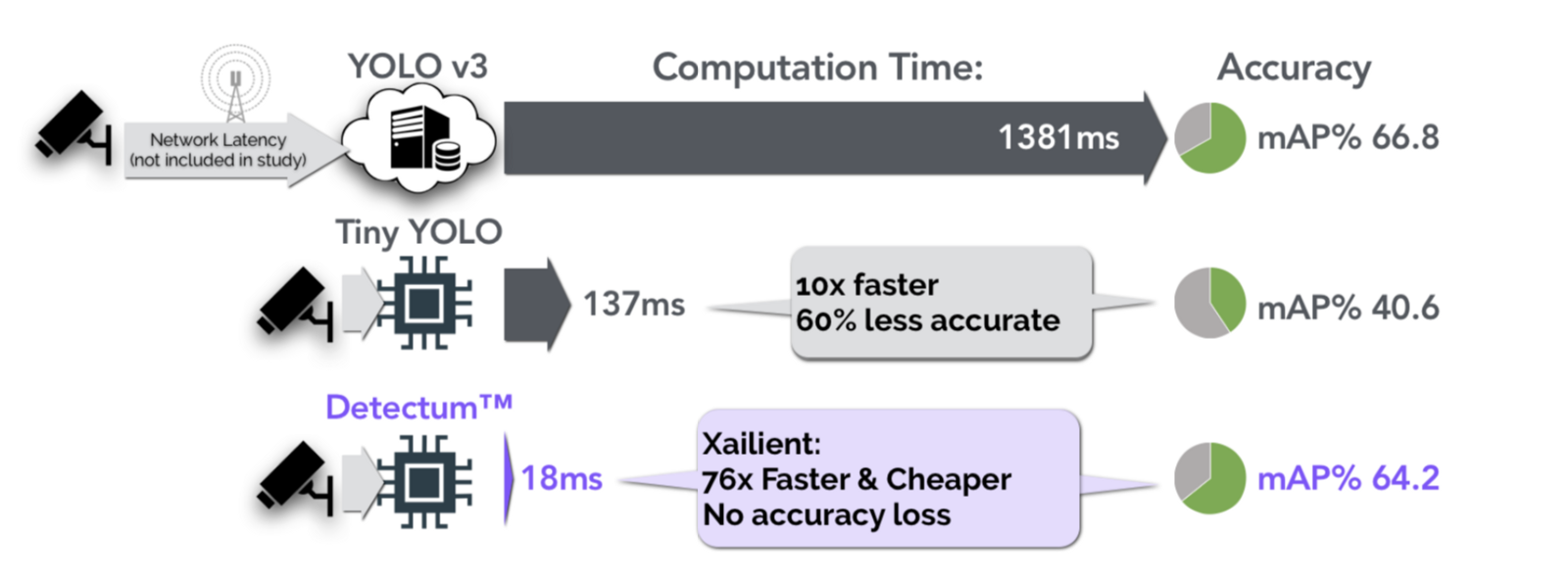

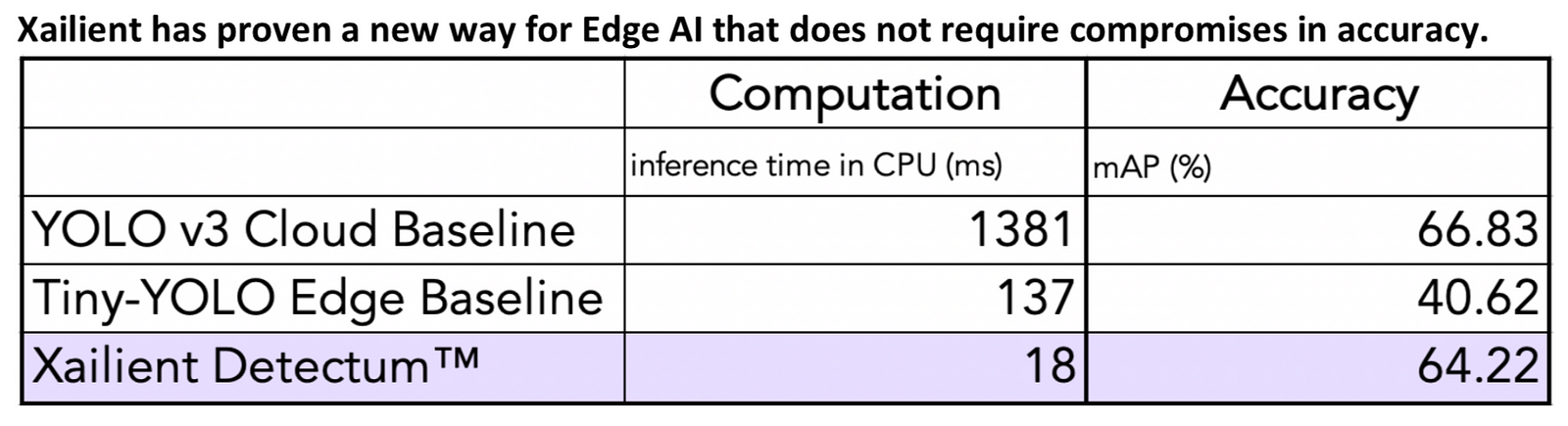

Xailient has proven the Detectum software performs CV 98.7% more efficiently without losing accuracy. Detectum object detection, which performs both localization and classification of objects in images and video, has been demonstrated to outperform the industry-leading YOLOv3.

Xailient achieved the same accuracy 76x faster than the Cloud Baseline, and was 8x faster than the Edge Baseline without the accuracy penalty.

Pre-trained AI models available to download for free here

The development of deep learning in CV is advancing at a rapid pace, and while they are getting better in accuracy, industry efforts are increasing in size, thus impacting computational time and cost. While research is being done to reduce the size of the deep learning models so that they can run on low power devices, there is a trade-off between speed, accuracy, size and energy consumption. Xailient’s Detectum is the answer to this challenge, as it as proven to run 76 times faster than YOLOv3 and 8 times faster than the TinyYOLO, achieving the same accuracy.

Pre-trained AI models available to download for free here

More stories from Xailient

What’s Salient? Xailient in the AFR!

Struggles of Running Object Detection on a Raspberry Pi

Xailient is commercializing breakthrough university research in Artificial Intelligence and Machine Learning. Our technology dramatically reduces the costs of data transmission, storage, and computation associated with extracting useful information from real-time video by processing the way humans think. www.xailient.com

References

Chen, J., & Ran, X. (2019). Deep Learning With Edge Computing: A Review. Proceedings Of The IEEE, 107(8), 1655–1674. doi: 10.1109/jproc.2019.2921977

Jiang, Z., Chen, T., & Li, M. (2018). Efficient Deep Learning Inference on Edge Devices. In Proceedings of ACM Conference on Systems and Machine Learning (SysML’18).

Zhang, P., Zhong, Y., & Li, X. (2019). SlimYOLOv3: Narrower, Faster and Better for Real-Time UAV Applications. In Proceedings of the IEEE International Conference on Computer Vision Workshops(pp. 0–0).

Pedoeem, J., & Huang, R. (2018). YOLO-LITE: a real-time object detection algorithm optimized for non-GPU computers. arXiv preprint arXiv:1811.05588.

Huang, J., Rathod, V., Sun, C., Zhu, M., Korattikara, A., Fathi, A., … & Murphy, K. (2017). Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE conference on computer vision and pattern recognition(pp. 7310–7311).

Pre-trained AI models available to download for free here

Sabina Pokhrel

Customer Success AI Engineer, Xailient