This blog post was originally published at Intel’s website. It is reprinted here with the permission of Intel.

The use of artificial intelligence in the Industrial Internet of Things (IIoT) domain is a complex and fast-moving phenomenon. In just the past few years, AI use cases and applications that were nearly impossible to scale or cost-prohibitive have moved into widescale use.

What is fascinating about the way humankind has been approaching the AI market is that it is being driven by exceptionally talented developers who are using open-source tools and open-source communities. What comes into play here is an evolutionary trait that makes humans such a unique species on the planet: our ability to teach and learn from each other. Duke professor and psychologist Michael Tomasello first described ratcheting as a metaphor for how, when one generation learns a new trait, tool or technique, it passes that knowledge down to all future generations. It is just like turning a ratchet: every time you move in the positive direction the ratchet locks in and you always keep that improvement. If you go backwards, you do not lose any of your forward progress.

Because the AI community is so open with its data, tools and methods, it is advancing at an incredible rate, and those innovations are ratcheting our collective knowledge and capability forward on a nearly daily basis. AI is being heavily influenced by open-source communities rather than by a small number of companies that hold unique IP and resist having others use it, so the field is making fantastic advances far more quickly than other industries and technologies typically evolve. The AI community is publishing data, methods, results and code in an open way, sharing resources that developers can adopt within days.

PyTorch and Open Source Communities

A great example – quite rare in human history – is the AI framework PyTorch. It didn’t even exist a few short years ago. Within days of its release to the open-source community, developers all over the world were converting to PyTorch and sharing stories (and funny memes and tweets on social media) of how it benefited their AI development efforts. It quickly became one of the leading frameworks used by AI developers across the planet. When in human history have we seen breakthroughs like that, a ratcheting of innovation measured in weeks or even just days? The printing press was a technology invented in China and refined in Europe over hundreds of years. The industrial revolution spanned 80 years. Major breakthroughs in the AI revolution we are experiencing today are measured in just weeks or days from invention to mass replication.

Innovations in AI are being distributed in an open, community-based way: a developer in India can gain from the innovations of a developer in Seattle – or Romania or Russia or China – all within a matter of hours. That is what is fueling the massive acceleration we are seeing in the market: the democratization of AI innovations.

A prime example is vision capabilities on the floor of factories around the world. The state of the art has moved beyond traditional comparative image analytics, where a known reference image is compared to another image to find pixel-level anomalies that might represent defects. In the past, the application would report defects to a human for analysis and a human would need to confirm or classify each anomaly individually.
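The traditional reference-image comparison described above can be sketched in a few lines of Python. This is only an illustration and not code from any Intel product: the function name `find_anomalies`, the representation of an 8-bit grayscale image as a list of rows, and the threshold of 30 are all assumptions made for the example.

```python
def find_anomalies(reference, sample, threshold=30):
    """Return (row, col) positions where the sample differs from the
    reference by more than `threshold` gray levels.

    Both images are lists of rows of 0-255 integers (an assumed,
    simplified stand-in for real camera frames).
    """
    same_shape = len(reference) == len(sample) and all(
        len(r) == len(s) for r, s in zip(reference, sample)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    anomalies = []
    for y, (ref_row, smp_row) in enumerate(zip(reference, sample)):
        for x, (ref_px, smp_px) in enumerate(zip(ref_row, smp_row)):
            if abs(ref_px - smp_px) > threshold:
                anomalies.append((y, x))
    return anomalies


# Known-good reference part and a sample with one dark spot in the center.
reference = [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
sample = [[100, 102, 100], [100, 20, 100], [100, 100, 99]]
print(find_anomalies(reference, sample))  # [(1, 1)]

# The same good part photographed under brighter light: every pixel shifts
# by 40, so a fixed threshold flags all nine pixels as "defects".
brighter = [[p + 40 for p in row] for row in reference]
print(len(find_anomalies(reference, brighter)))  # 9
```

Each flagged position would still go to a person for confirmation, and the second call hints at how fragile a fixed pixel threshold is when the lighting changes.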

While that may sound simple, challenges in deploying vision-based analytics on the factory floor can present themselves when lighting is inconsistent. The human eye can correct for different lighting conditions easily. However, images collected by a camera can naturally vary in intensity and contrast as background lighting varies. We’ve seen scaling challenges at factories trying to deploy AI for defect detection with the exact same hardware, software and algorithm on different machines on the factory floor. Sometimes it took months for factory managers and data scientists to find out why they were getting great results on one machine, with high accuracy and low false positive and false negative rates, while on the next machine over the AI application would crash.

In some cases the cause was simply that one machine had natural light from a skylight and the machine next to it didn’t. The machine under the skylight had a worse false-defect rate, which varied between sunny days and cloudy days. Other machines showed variations based on the density of light fixtures hung above them.

The processes and methods of sensor management were not well understood, and neither was how sensitive the AI process is to sensor management. The industry had to figure out how to create a gamma correction mechanism, an approach to sensing that is agnostic to photon density.
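To make the idea of gamma correction concrete, here is a minimal sketch that picks a gamma value so an image's mean brightness lands on a common target. It is a textbook-style heuristic, not the mechanism any particular factory or Intel product uses; the function names, the target of 128 and the list-of-rows grayscale format are assumptions for illustration.

```python
import math


def mean_brightness(image):
    """Average gray level of an image given as a list of rows (0-255)."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def gamma_normalize(image, target_mean=128.0):
    """Apply out = 255 * (in / 255) ** gamma, choosing gamma so that the
    image's current mean gray level maps exactly onto `target_mean`.
    """
    mean = mean_brightness(image)
    if not 0 < mean < 255:
        # All-black or all-white frame: no finite gamma helps, return a copy.
        return [row[:] for row in image]
    gamma = math.log(target_mean / 255.0) / math.log(mean / 255.0)
    return [[round(255.0 * (p / 255.0) ** gamma) for p in row] for row in image]


# The same scene captured on a cloudy day and on a sunny day (made-up values).
cloudy = [[40, 60], [80, 60]]
sunny = [[120, 160], [200, 160]]

print(mean_brightness(cloudy), mean_brightness(sunny))
print(mean_brightness(gamma_normalize(cloudy)),
      mean_brightness(gamma_normalize(sunny)))
```

After normalization the two captures have nearly the same average brightness, so a downstream comparison is less likely to mistake a lighting change for a defect. A single global gamma cannot fix uneven lighting across a frame, such as a shadow or a skylight patch.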

Instead of the traditional comparisons of one static image to another static image, we are now utilizing techniques that are somewhat similar to the evolutionary traits used by the photo receptors in our eyes and neurons in our brains to process image information. With the advent of deep learning techniques, algorithms are being developed with the ability to absorb lighting variations and neutralize gamma intensity differences throughout the day or from location to location.

Five years ago, it might have required a PhD in mathematics to be able to do the kind of image comparisons that a readily available deep learning algorithm can do today.

But there remain plenty of challenges in scaling out to various markets. How do we make these new deep learning techniques mainstream, in ways that are easily accessible and usable? How do managers integrate an AI capability onto a factory floor, or into adjacent markets such as a retail store or a smart cities deployment, when relatively few people are skilled in advanced data science?

The barrier to entry is how to take the output of a model – even if it is an open-source model that has been well tuned and is very robust – and deploy that on a factory floor in a way that all the machines in the factory can benefit from it. Also, we need to ensure that the output of that AI model can then communicate with the varied infrastructure on a factory floor.

The challenge is that the cycle of innovation must become a closed loop: deploying an artificial intelligence algorithm and then learning to take the information and perform an action that adds value. If the action of the AI algorithm is to text the factory manager and say, ‘I found a defect,’ that is not really helping that much. If the output of the artificial intelligence algorithm communicates with the machine and tells it to adjust this or that parameter so that defect no longer occurs, that is real and lasting value.
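The difference between notifying a person and closing the loop can be sketched as follows. Everything here is hypothetical: the `Machine` class, its temperature setpoint, the safety limits, the gain, and the assumption that lowering the temperature reduces defects are stand-ins for whatever parameter a real process would expose.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    """Hypothetical machine with one tunable setpoint and safety limits."""
    temperature: float
    min_temp: float = 150.0
    max_temp: float = 250.0

    def adjust(self, delta):
        # Clamp so the controller can never push the setpoint out of range.
        new_value = self.temperature + delta
        self.temperature = min(self.max_temp, max(self.min_temp, new_value))


def close_the_loop(machine, defect_rate, target_rate=0.01, gain=100.0):
    """Proportional correction: lower the setpoint in proportion to how far
    the observed defect rate exceeds the target. Returns the change applied.
    """
    error = defect_rate - target_rate
    if error <= 0:
        return 0.0  # Within target: leave the machine alone.
    before = machine.temperature
    machine.adjust(-gain * error)
    return machine.temperature - before


press = Machine(temperature=200.0)
# The vision model reports that 6% of recent parts were defective.
change = close_the_loop(press, defect_rate=0.06)
print(f"setpoint changed by {change:.1f} to {press.temperature:.1f}")
```

A production system would need far more than this, including validation of the model's output, rate limiting and operator override, but the shape is the same: the model's result feeds an action on the machine rather than a text message.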

Intel’s Edge Insights and the OpenVINO Toolkit

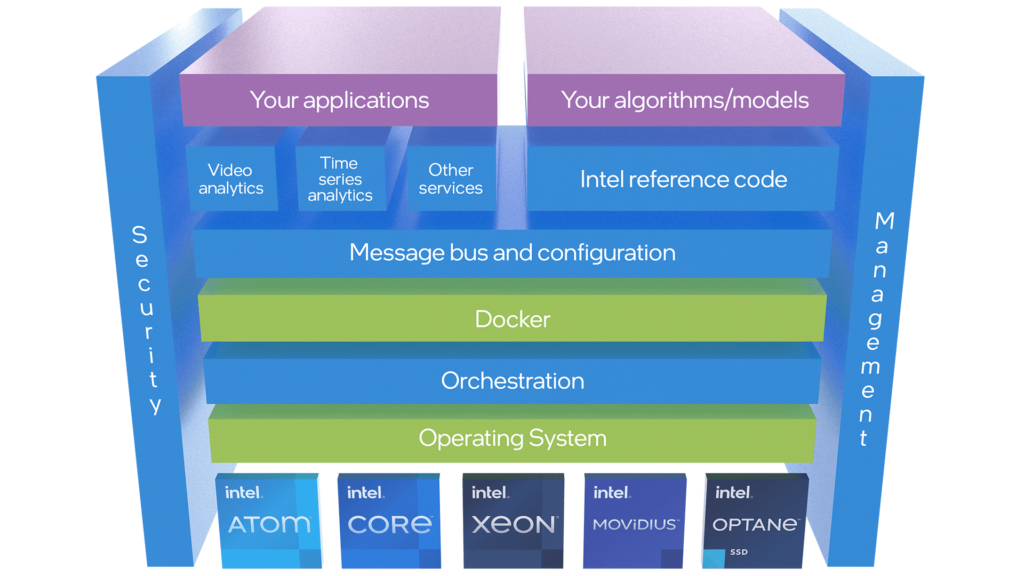

To help deal with these issues Intel has developed Edge Insights software and the OpenVINO toolkit for businesses wishing to employ AI in industrial, retail, smart cities, stationary and mobile robotics and other sectors.

We have developed ready-to-use, containerized analytics pipelines for the kinds of concurrent time-series and video workloads found in industrial applications, all in an easy-to-deploy, easy-to-modify microservices framework. The software supports the acceleration and distribution of analytics on Intel’s CPUs, GPUs, FPGAs (field programmable gate arrays), VPUs (vision processing units) and Optane memory. While image and video data is a rapidly growing portion of the data generated in the world’s factories, our observations and industry reports indicate that over 70% of the data coming off a factory floor is time-series data. Revenue trends and revenue forecasts by use case type in the 2019 Tractica report on “Artificial Intelligence for Smart Manufacturing Applications” show that both image/video and time-series data are growing at impressive rates that should accelerate in the years ahead. Thus, businesses need tools that make it easy to perform analytics on time-series, image/video and audio workloads alike.

Utilizing the fully integrated GPUs incorporated on the majority of Intel’s processors is a very efficient way to deploy image and video processing solutions with exceptional performance per watt. For the 70% or more of data that is time-series based, a CPU can be far more efficient. In fact, CPUs can be orders of magnitude more efficient at processing time-series data than graphics processors for some workloads. There are tremendous advantages to using integrated GPUs and CPUs in combination when deploying AI in situations where time-series and image/video data are being processed concurrently.

One Intel Core i7 device on an Industrial PC can provide the compute flexibility needed for many industrial applications, managing both the AI and control capabilities needed for machines as well as video interfaces all in ruggedized form factors that don’t require cooling fans, and at a fraction of the cost of a single discrete GPU and a fraction of the power budget. That level of software and hardware performance was unheard of five years ago, but we have those capabilities readily available today.

To learn more, please go to: Intel® Edge Software Hub

To watch a video tutorial: https://www.intel.com/content/www/us/en/internet-of-things/industrial-iot/edge-insights-industrial.html

Brian McCarson

Vice President and Senior Principal Engineer, Industrial Solutions Division Systems Engineering and Architecture Team, Internet of Things Group, Intel