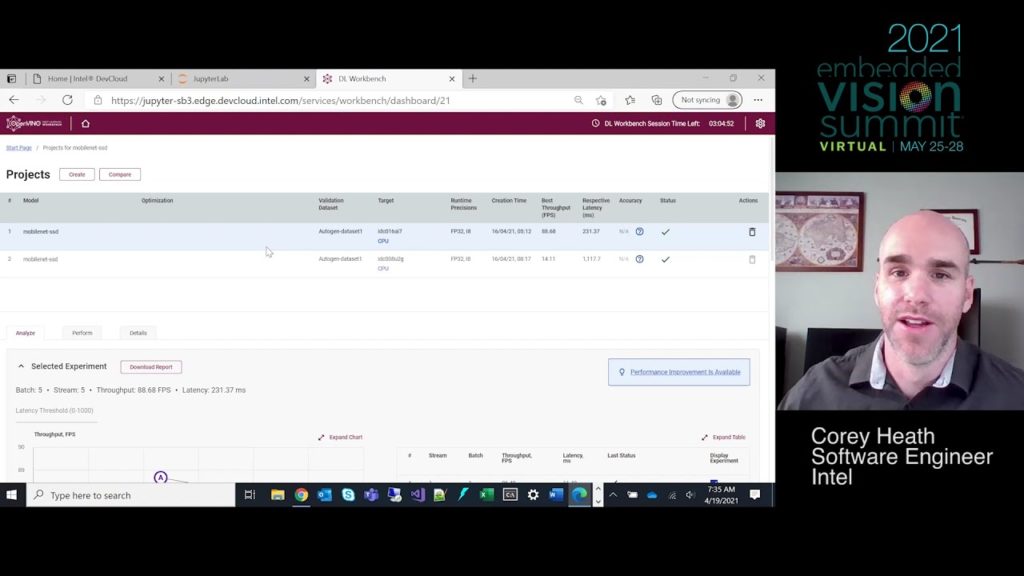

Corey Heath, Software Engineer at Intel, presents the “Quickly Measure and Optimize Inference Performance Using Intel DevCloud for the Edge” tutorial at the May 2021 Embedded Vision Summit.

When developing an edge AI solution, DNN inference performance is critical: if your network doesn’t meet your throughput and latency requirements, you’re in trouble. But accurately measuring inference performance on target hardware can be time-consuming; just getting your hands on the target hardware can take weeks or months. In this Over-the-Shoulder tutorial, Heath shows step by step how you can quickly and easily benchmark inference performance on a variety of platforms without having to purchase hardware or install software tools.

By using Intel DevCloud for the Edge and the Deep Learning Workbench, you get instant access to a wide range of Intel hardware platforms and software tools. Beyond simply measuring performance, Intel DevCloud for the Edge lets you quickly identify bottlenecks and optimize your code, all in a cloud-based environment you can reach from anywhere. Watch this video to learn how to quickly benchmark and optimize your trained DNN model.