| LETTER FROM THE EDITOR |

Dear Colleague,

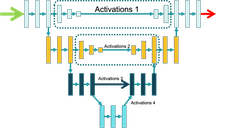

On Thursday, November 10 at 9 am PT, Perceive will deliver the free webinar “Putting Activations on a Diet – Or Why Watching Your Weights Is Not Enough” in partnership with the Edge AI and Vision Alliance.

To reduce the memory requirements of neural networks, researchers have proposed numerous heuristics for compressing weights. Lower precision, sparsity, weight sharing and various other schemes shrink the memory needed by the neural network’s weights or program. Unfortunately, during network execution, memory use is usually dominated by activations (the data flowing through the network) rather than weights. Although lower precision can reduce activation memory somewhat, more extreme steps are required to enable large networks to run efficiently with small memory footprints. Fortunately, the underlying information content of activations is often modest, so novel compression strategies can dramatically widen the range of networks executable on constrained hardware.

Steve Teig, Founder and CEO of Perceive, will introduce some new strategies for compressing activations, sharply reducing their memory footprint. A question-and-answer session will follow the presentation. For more information and to register, please see the event page.

Brian Dipert

| DEVELOPMENT TOOL ADVANCEMENTS |

How Do We Enable Edge ML Everywhere? Data, Reliability and Silicon Flexibility

The real and perceived inability of today’s ML algorithms to reach the ultra-high accuracy needed in industrial systems is another key barrier. New techniques for explainable ML, better testing, sensor fusion and model fusion will increasingly allow developers to achieve industrial-grade reliability. Finally, in order to accelerate ML adoption in embedded products, we must recognize that most developers can’t immediately upgrade their systems to use the latest chips, a problem that is compounded by today’s chip shortages. To enable ML everywhere, we have to find ways to deploy ML on today’s silicon, while ensuring a smooth transition to new devices with AI acceleration in the future.

Optimization Techniques with Intel’s OpenVINO to Enhance Performance on Your Existing Hardware

| EDGE AI PROCESSING TRENDS |

Powering the Connected Intelligent Edge and the Future of On-Device AI

Asghar identifies unique AI features that will be needed as physical and digital spaces converge in what is now called the “metaverse”. He highlights key AI technologies offered within Qualcomm products, and how the company connects them to enable the connected intelligent edge. Finally, he shares his vision of the future of on-device AI, including on-device learning, efficient models, state-of-the-art quantization, and how Qualcomm plans to make this vision a reality.

High-Efficiency Edge Vision Processing Based on Dynamically Reconfigurable TPU Technology

| UPCOMING INDUSTRY EVENTS |

Putting Activations on a Diet – Or Why Watching Your Weights Is Not Enough – Perceive Webinar: November 10, 2022, 9:00 am PT

| FEATURED NEWS |

e-con Systems Launches a 4K HDR GMSL2 Multi-camera Configuration for the NVIDIA Jetson AGX Orin SoC

An Upcoming Webinar from Alliance Member Companies Plumerai and Texas Instruments Covers Rapid, Accurate People Detection

Vision Components’ New MIPI Camera Modules Include High-quality Global Shutter Image Sensors

Network Optix’ Nx Witness VMS v5.0 is Now Available

Basler’s Latest v7 pylon Software Includes vTools Custom-fit Image Processing Modules

| EDGE AI AND VISION PRODUCT OF THE YEAR WINNER SHOWCASE |

OrCam Technologies OrCam Read (Best Consumer Edge AI End Product)

Please see here for more information on OrCam Technologies’ OrCam Read. The Edge AI and Vision Product of the Year Awards celebrate the innovation of the industry’s leading companies that are developing and enabling the next generation of edge AI and computer vision products. Winning a Product of the Year award recognizes a company’s leadership in edge AI and computer vision as evaluated by independent industry experts.