This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems.

LiDAR is one of the most popular technologies used for depth measurement, offering excellent range and accuracy. Take a closer look at how LiDAR cameras work and the key markets in which they have gained momentum.

Sensing technologies play a key role in the success of embedded vision systems. As a result, more advanced technologies keep emerging, particularly 3D depth sensing technologies like Light Detection and Ranging (LiDAR), stereo vision, and Time of Flight (ToF). These have gained importance in autonomous vehicles, factory automation, smart retail, and similar applications.

The oldest method of 3D depth sensing is the passive stereo camera, which calculates the disparity between pixels from two sensors working in sync. However, passive stereo cameras have the disadvantage that they cannot be used in the dark. Also, the depth quality depends on the texture of the objects in the scene. To overcome this, active stereo vision technology is used. It uses an IR pattern projector to illuminate the scene, which improves performance in low light and works well on scenes containing objects with little texture.
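As a rough illustration of how disparity maps to depth, the sketch below uses the standard pinhole stereo relation (depth = focal length × baseline / disparity). The function name and the example focal length and baseline are assumptions chosen for illustration; they do not describe any particular camera.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Estimate depth in meters from stereo disparity (pinhole model).

    disparity_px    : horizontal pixel shift of a feature between the two images
    focal_length_px : lens focal length expressed in pixels (assumed value)
    baseline_m      : distance between the two sensors in meters (assumed value)
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means no match or infinite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 6 cm baseline.
    for disparity in (42.0, 8.4, 4.2):
        depth = depth_from_disparity(disparity, 700.0, 0.06)
        print(f"disparity {disparity:5.1f} px -> depth {depth:5.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce only a few pixels of disparity, so a small matching error translates into a large depth error.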

However, stereo cameras cannot offer large depth ranges (on the order of 10 meters). Also, the data from stereo cameras has to be processed further to calculate depth. Further, stereo cameras often do not meet the required accuracy levels, especially when the texture of the target object is uneven. This is where LiDAR can help. Let’s learn what a LiDAR sensor is, what its components are, and where it is used.

What is LiDAR technology?

LiDAR calculates depth by emitting laser pulses and measuring the time each pulse takes to bounce back from an obstacle. These pulsed laser measurements are used to create 3D models (also known as point clouds) and maps of objects and environments. LiDAR, also called 3D laser scanning, works similarly to RADAR, but instead of radio waves it emits rapid laser pulses, up to 160,000 per second, towards the object. The distance to the object is calculated with the formula given below.

Distance of object = (Speed of light x Time of flight) / 2
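The formula above can be sketched in a few lines of Python. The function name is an illustrative assumption; the speed of light is the defined SI value.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact SI value, in vacuum


def distance_from_time_of_flight(round_trip_time_s: float) -> float:
    """Return the distance in meters for a measured round-trip pulse time.

    The pulse travels to the object and back, hence the division by two.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A pulse that returns after 100 nanoseconds.
    print(f"{distance_from_time_of_flight(100e-9):.2f} m")  # prints "14.99 m"
```

Note that one nanosecond of round-trip time corresponds to roughly 15 cm of distance, which is why precise timing electronics matter for LiDAR accuracy.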

What are the two types of LiDAR technologies?

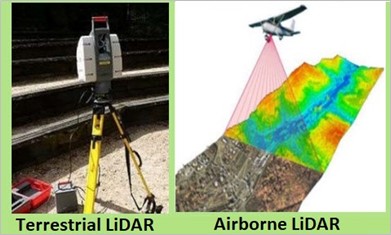

3D LiDAR systems can be classified, based on their functionalities, into Airborne LiDAR and Terrestrial LiDAR.

Figure 1 – Two types OF LiDAR (Source: Elprocus)

Airborne LiDAR

An airborne 3D LiDAR sensor is typically installed on a drone or helicopter. It sends pulses of light towards the ground surface and measures how long each pulse takes to return after hitting an object, which gives the exact distance to that object. Airborne LiDAR can be further categorized into Topographic LiDAR and Bathymetric LiDAR. Bathymetric LiDAR uses water-penetrating green light to measure seafloor and riverbed elevations.

Terrestrial LiDAR

The terrestrial LiDAR system is installed on moving vehicles or on tripods on the earth’s surface. This 3D LiDAR sensor is used to map the characteristics of buildings and to closely observe highways. It is also extremely useful for creating accurate 3D models of heritage sites. The terrestrial LiDAR system can be further divided into Mobile LiDAR and Static LiDAR.

How does a LiDAR camera work?

The working principle of LiDAR technology can be explained through the following common components:

- Laser source

- Scanner

- Detector

- GPS receiver

- Inertial Measurement Unit (IMU)

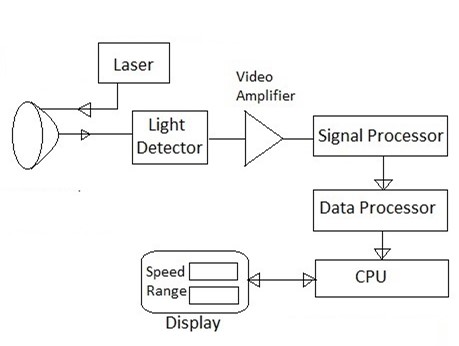

Given below is the architecture of a LiDAR sensor:

Figure 2 – Architecture of LiDAR sensor (Source: RF Wireless world)

Laser Source

The laser source emits laser pulses at different wavelengths. The most common laser source is neodymium-doped yttrium aluminium garnet (Nd:YAG). For example, a topographic LiDAR sensor uses 1064 nm diode-pumped Nd:YAG lasers or the eye-safe wavelength of 1550 nm. In contrast, bathymetric LiDAR systems use 532 nm frequency-doubled, diode-pumped Nd:YAG lasers, which penetrate water with less attenuation. Usually, very short laser pulses in the invisible NIR range are used to increase the range of the systems.

Scanner and Optics

The scanner uses deflecting mirrors to steer the laser beam and achieve a wide Field of View (FoV). A scanner for depth measurement is designed for long range, a wide FoV, and high-speed scanning.
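To illustrate how one deflection angle plus one measured range becomes a point in the point cloud, here is a minimal sketch. The function name and the axis convention (x forward, y left, z up, angles in degrees) are assumptions made for illustration; real sensors document their own conventions.

```python
import math


def beam_to_point(range_m: float,
                  azimuth_deg: float,
                  elevation_deg: float = 0.0) -> tuple[float, float, float]:
    """Convert a range and the scanner's beam angles into an (x, y, z) point.

    Assumed convention: x points forward, y to the left, z up;
    azimuth is measured in the horizontal plane from the x axis,
    elevation is measured upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))


if __name__ == "__main__":
    # One horizontal sweep in 90-degree steps, every return at 10 m.
    cloud = [beam_to_point(10.0, azimuth) for azimuth in range(0, 360, 90)]
    for x, y, z in cloud:
        print(f"({x:6.2f}, {y:6.2f}, {z:6.2f})")
```

Repeating this conversion for every pulse in a sweep yields the set of 3D points that makes up one scan.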

Detector

The detector picks up the light reflected from obstacles. The primary photodetectors are solid-state devices, such as silicon avalanche photodiodes, and photomultipliers.

GPS receiver

In an airborne LiDAR system, the GPS receiver tracks the altitude and location of the aircraft. These values are important for obtaining accurate terrain elevation values.

Inertial Measurement Unit (IMU)

The IMU tracks the speed and orientation of the airplane (or any other autonomous vehicle where a LiDAR is used). These measurements are used to accurately determine where each pulse actually lands on the ground.
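The following sketch shows, under simplifying assumptions, how the GPS position, the IMU orientation, the scan angle, and the measured range can be combined to locate a pulse on the ground. All names are hypothetical. It assumes a flat local east-north-up frame, a body frame with x forward, y left, and z up, a downward-looking scanner that sweeps across the flight track, and no lever-arm or boresight offsets, which real systems must calibrate.

```python
import math


def rotation_matrix(roll_deg: float, pitch_deg: float, yaw_deg: float):
    """Body-to-local rotation, composed as R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    cy, sy = math.cos(y), math.sin(y)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp,     cp * sr,                cp * cr],
    ]


def georeference(sensor_pos, roll_deg, pitch_deg, yaw_deg,
                 scan_angle_deg, range_m):
    """Return the local (x, y, z) coordinates of the point hit by one pulse.

    sensor_pos     : sensor position from GPS, already in the local frame (m)
    roll/pitch/yaw : vehicle orientation from the IMU (degrees)
    scan_angle_deg : cross-track mirror angle, 0 means straight down
    range_m        : distance measured by time of flight
    """
    theta = math.radians(scan_angle_deg)
    beam_body = (0.0, math.sin(theta), -math.cos(theta))  # unit vector in body frame
    rot = rotation_matrix(roll_deg, pitch_deg, yaw_deg)
    beam_local = [sum(rot[i][j] * beam_body[j] for j in range(3)) for i in range(3)]
    return tuple(sensor_pos[i] + range_m * beam_local[i] for i in range(3))


if __name__ == "__main__":
    level = georeference((0.0, 0.0, 1000.0), 0.0, 0.0, 0.0, 0.0, 1000.0)
    rolled = georeference((0.0, 0.0, 1000.0), 10.0, 0.0, 0.0, 0.0, 1000.0)
    print("level flight :", tuple(round(v, 2) for v in level))
    print("10 deg roll  :", tuple(round(v, 2) for v in rolled))
```

In this example, a 10-degree roll at a 1000 m range shifts the computed point sideways by roughly 174 m, which shows why orientation data is needed alongside position.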

Two major use cases of LiDAR technology

Now that we understand how LiDAR sensors work, let us look at two of the most popular use cases where they are used.

Autonomous vehicles and devices

Autonomous equipment such as drones, autonomous tractors, and robotic arms depends on 3D depth-sensing cameras for obstacle detection, localization, navigation, and picking or placing objects. Obstacle detection requires low latency to react fast and, in many cases, a wide FoV. Vehicles moving at high speed need a LiDAR sensor. Its rotating laser beam sweeps a full 360 degrees, giving the vehicle a precise and accurate view for avoiding obstacles and collisions. Since LiDAR generates millions of data points in real time, it can build a precise map of its ever-changing surroundings for the safe navigation of autonomous vehicles. Also, the distance accuracy of LiDAR allows the vehicle’s system to identify objects in a wide variety of weather and lighting conditions.

Autonomous Mobile Robots (AMR)

AMRs are used to pick, transport, and sort items within manufacturing spaces, warehouses, retail stores, and distribution facilities without being overseen directly by an operator. Be it a pick and place robot, patrol robot, or agricultural robot, almost all AMRs need to measure depth. And with a LiDAR system, there’s no need for extensive processing to detect objects or create maps – making it a great-fit solution for AMRs.

What e-con Systems is doing in the depth camera space

e-con Systems, with 19 years of experience in the embedded vision space, has been helping clients integrate cameras smoothly into their products. We have worked with multiple robotic and autonomous mobile robot companies to integrate our depth cameras into them.

Following are the stereo camera solutions offered by e-con Systems:

- STEEReoCAM™: 2MP stereo camera for NVIDIA Jetson Nano/AGX Xavier/TX2

- Tara – USB 3 stereo camera

- TaraXL – USB stereo camera for NVIDIA GPU

The latest update in this space is that we are working on a Time-of-Flight camera that we expect to take the market by storm. Please follow our blog and social media channels to stay updated on the latest developments on this front. Meanwhile, below are a couple of resources on Time-of-Flight technology that might pique your interest:

- What is a Time-of-Flight sensor? What are the key components of a Time-of-Flight camera?

- How Time-of-Flight (ToF) compares with other 3D depth mapping technologies

We hope you found this content useful and are one step closer to understanding whether LiDAR technology is the ideal solution for your application. If you have any further queries on the topic, please leave a comment.

If you are interested in integrating embedded cameras into your products, please write to us at camerasolutions@e-consystems.com. You can also visit our Camera Selector to get a complete view of e-con Systems’ camera portfolio.

Prabu Kumar

Chief Technology Officer and Head of Camera Products, e-con Systems