This blog post was originally published at CEVA’s website. It is reprinted here with the permission of CEVA.

Mobile computing has driven an explosion in product opportunities: smartphones, wearables, hearables, sports cameras, and more. Sensing will extend those opportunities further into applications that must be environment-aware. It is now commonplace to think about object recognition and collision warning through visual, radar, or Lidar sensing; short-range proximity detection through ultrasonic sensors; motion and pose detection through inertial measurement units (IMUs); and acoustic detection of hazardous sounds. The possible applications span transportation, robotics, home automation, smart cities, factories, and warehouse management, and the list keeps growing. We have all the raw material we need to build a futuristic, almost science-fiction world driven by intelligent sensing. But how?

From sensing to motion

Think about a robot assistant moving around an airport, helping a passenger check in, find their gate, or get details on a flight. This robot must move freely about the airport without bumping into people walking or running in all directions, or into objects that may be stationary or may sometimes move. The assistant should know how to navigate intelligently through a constantly changing environment. It must also be able to understand and respond to natural-language voice commands over some range of possibilities. We’ll get to that below.

Optical and proximity sensors are a starting point for autonomous mobility. They feed into a complex algorithm called SLAM (simultaneous localization and mapping), which builds a point map of the space being traveled and constantly refines that map. This method is increasingly being augmented by dead reckoning in low-light conditions, where optical sensing is less effective. Dead reckoning tracks movement from a known position, drawing information from wheel motion, accelerometers, and other sensors. Meanwhile, the proximity sensors provide input to avoid crashing into an object or a person. Already we have multiple sensors feeding into algorithms to generate a map and a location for the assistant within that map.
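To make the dead-reckoning step concrete, here is a minimal sketch in Python. It assumes a two-wheeled (differential-drive) robot that reports how far each wheel has rolled since the last update. The function name `dead_reckon`, the `wheel_base` parameter, and the `(x, y, heading)` pose layout are illustrative assumptions, not part of any particular SLAM stack. A real system would also fuse accelerometer and gyroscope data.

```python
import math


def dead_reckon(pose, d_left, d_right, wheel_base):
    """Advance an (x, y, heading) pose using wheel travel distances.

    pose        -- (x, y, heading) in meters, meters, radians
    d_left      -- distance rolled by the left wheel since the last update (m)
    d_right     -- distance rolled by the right wheel since the last update (m)
    wheel_base  -- distance between the two wheels (m); hypothetical parameter
    """
    x, y, heading = pose
    d_center = (d_left + d_right) / 2.0          # travel of the robot's midpoint
    d_heading = (d_right - d_left) / wheel_base  # change in heading (radians)

    # Move along the average heading over this step (midpoint approximation).
    mid_heading = heading + d_heading / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)

    # Keep the heading in the range [0, 2*pi).
    heading = (heading + d_heading) % (2.0 * math.pi)
    return (x, y, heading)
```

Starting from `(0.0, 0.0, 0.0)`, rolling both wheels forward by one meter moves the pose to `(1.0, 0.0, 0.0)`. Because every update builds on the previous estimate, small wheel-slip errors accumulate, which is why dead reckoning complements a map-based method rather than replacing it.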

Camera-based SLAM requires heavy-duty linear equation solving with high accuracy, for which vector DSP platforms are well suited. Ultrasonic-based proximity sensing must start with noise reduction to localize the nearest object among echoes and other clutter, followed by range (and direction) computation. This kind of signal processing is ideally suited to a scalar DSP.
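The ultrasonic chain described above (clean up the signal, find the nearest echo, compute range) can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the received samples start at the moment the ping is sent, noise reduction is a plain moving average of the signal magnitude, and the nearest object is the first point where that smoothed envelope crosses a threshold. The names `smoothed_envelope` and `echo_range_m` are hypothetical, and production code on a DSP would use matched filtering and fixed-point arithmetic instead.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def smoothed_envelope(samples, window):
    """Trailing moving average of |sample|, a crude noise-reduction step.

    The first window - 1 outputs are averaged over fewer real samples,
    so they slightly underestimate the envelope.
    """
    envelope = []
    running = 0.0
    for i, sample in enumerate(samples):
        running += abs(sample)
        if i >= window:
            running -= abs(samples[i - window])
        envelope.append(running / window)
    return envelope


def echo_range_m(samples, sample_rate_hz, window, threshold):
    """Range to the nearest echo in meters, or None if nothing crosses threshold."""
    for index, level in enumerate(smoothed_envelope(samples, window)):
        if level >= threshold:
            round_trip_s = index / sample_rate_hz
            # The pulse travels out and back, so halve the round-trip distance.
            return SPEED_OF_SOUND_M_S * round_trip_s / 2.0
    return None
```

The threshold trades sensitivity against false alarms: set it too low and clutter is reported as an obstacle, too high and weak echoes from soft objects are missed.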

From voice to actions

A passenger sees an assistant and calls it over, “Hey, Airbot!” First, the assistant must recognize the command and where it came from. Some sophisticated audio processing goes into this step, especially in a noisy environment like an airport terminal. Recognizing the trigger command is a basic bit of AI you’ll find in any smart speaker. The robot can also detect the direction the voice command came from through beamforming, a kind of audio “zooming”. This is more signal processing, figuring out direction from how a recognized command is detected at slightly different times at multiple microphones.
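Here is a rough sketch of how direction can be estimated from those arrival-time differences, using just two microphones. It is a time-difference-of-arrival estimate, the building block behind delay-based beamforming, rather than a full beamformer. It assumes a distant (far-field) source, a known microphone spacing, and a brute-force cross-correlation to find the delay. The function names and parameters are illustrative assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def best_lag(sig_a, sig_b, max_lag):
    """Lag in samples that best aligns sig_b with sig_a.

    A positive result means the sound reached microphone B later than A.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, a in enumerate(sig_a):
            j = i + lag
            if 0 <= j < len(sig_b):
                score += a * sig_b[j]  # cross-correlation at this lag
        if score > best_score:
            best, best_score = lag, score
    return best


def arrival_angle_deg(sig_a, sig_b, sample_rate_hz, mic_spacing_m):
    """Angle of the source off the array's broadside, in degrees.

    Positive angles point toward microphone A's side (A hears it first).
    """
    # Delays larger than spacing / speed of sound are physically impossible.
    max_lag = math.ceil(mic_spacing_m / SPEED_OF_SOUND_M_S * sample_rate_hz)
    delay_s = best_lag(sig_a, sig_b, max_lag) / sample_rate_hz
    # Far-field geometry: delay = spacing * sin(angle) / speed_of_sound.
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding past +/-1
    return math.degrees(math.asin(ratio))
```

With only two microphones the estimate is ambiguous between front and back, and its resolution is limited by the sample rate. Practical arrays use more microphones and interpolate between samples.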

Our airbot glides over to the passenger, avoiding other obstacles along the way, stops a couple of feet away, and asks, “How can I help you?” On its screen, it presents a range of questions it knows how to answer, and the passenger says, “I’d like to check in”. Our robot must first do some more signal processing to reduce noise in that audio signal, partly by beamforming, partly by echo cancellation. Then it must recognize the command.

Natural language processing (NLP) could be handled in the cloud, but airport networks are heavily loaded, so NLP must be handled locally if the robot is to respond quickly enough for a satisfactory user experience. The robot then asks the passenger to look directly at its screen, takes a picture, and asks the passenger to insert a photo ID such as a passport identity page. It can compare these images for added security, which requires a strong programmable neural net engine.
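One common way to compare two face images, sketched here purely as an assumption, is to have a neural network turn each image into a fixed-length feature vector (an embedding) and then measure how closely the two vectors point in the same direction. The network itself is outside the scope of this sketch. The vectors, the function names, and the `0.8` threshold below are all hypothetical placeholders.

```python
import math


def cosine_similarity(vec_a, vec_b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(vec_a, vec_b))
    norm_a = math.sqrt(sum(a * a for a in vec_a))
    norm_b = math.sqrt(sum(b * b for b in vec_b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector carries no direction to compare
    return dot / (norm_a * norm_b)


def faces_match(live_embedding, id_embedding, threshold=0.8):
    """True if the live photo and the ID photo embeddings are similar enough.

    The threshold is an illustrative value; a real system would tune it
    against measured false-accept and false-reject rates.
    """
    return cosine_similarity(live_embedding, id_embedding) >= threshold
```

The heavy lifting is in producing the embeddings, which is where a programmable neural net engine earns its place. The comparison itself is cheap.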

The remaining steps in check-in? That’s back to traditional embedded processing.

Pulling it all together

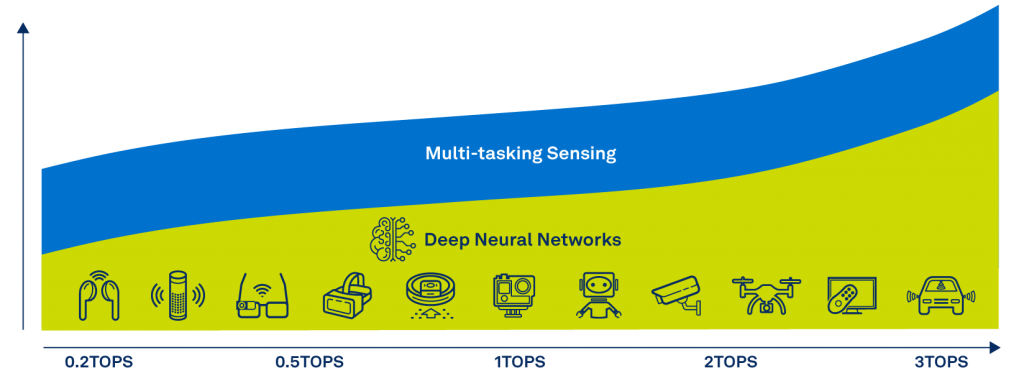

Intelligent sensing devices must use a platform that can handle multiple sensors simultaneously. It should provide strong scalar DSP support for front-end signal processing and vectorized support for image-based computations and SLAM. It also needs neural net support for speech and vision AI, again on a vectorized DSP but with special extensions for neural nets. All of this should come with rich software compilers and libraries that provide the fundamentals you’d expect in SDKs for each of these domains.

One option for powering such sensing platforms is CEVA’s SensPro2. Building on the company’s already strong background in vision processing, audio, SLAM, and AI, SensPro2 is a highly scalable, enhanced second-generation high-performance sensor hub DSP for multitasking sensing and AI across multiple sensors, including camera, radar, Lidar, Time-of-Flight, microphones, and inertial measurement units.

Moshe Sheier

Vice President of Marketing, CEVA