This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Qualcomm AI Research deploys a popular 1B+ parameter foundation model on an edge device through full-stack AI optimization

Foundation models are taking the artificial intelligence (AI) industry by storm. A foundation model is a large neural network trained on a vast quantity of data at scale, resulting in a model that can be adapted to a wide range of downstream tasks with high performance. Stable Diffusion, a very popular foundation model, is a text-to-image generative AI model capable of creating photorealistic images given any text input within tens of seconds — pretty incredible. At over 1 billion parameters, Stable Diffusion had been primarily confined to running in the cloud, until now. Read on to learn how Qualcomm AI Research performed full-stack AI optimizations using the Qualcomm AI Stack to deploy Stable Diffusion on an Android smartphone for the very first time.

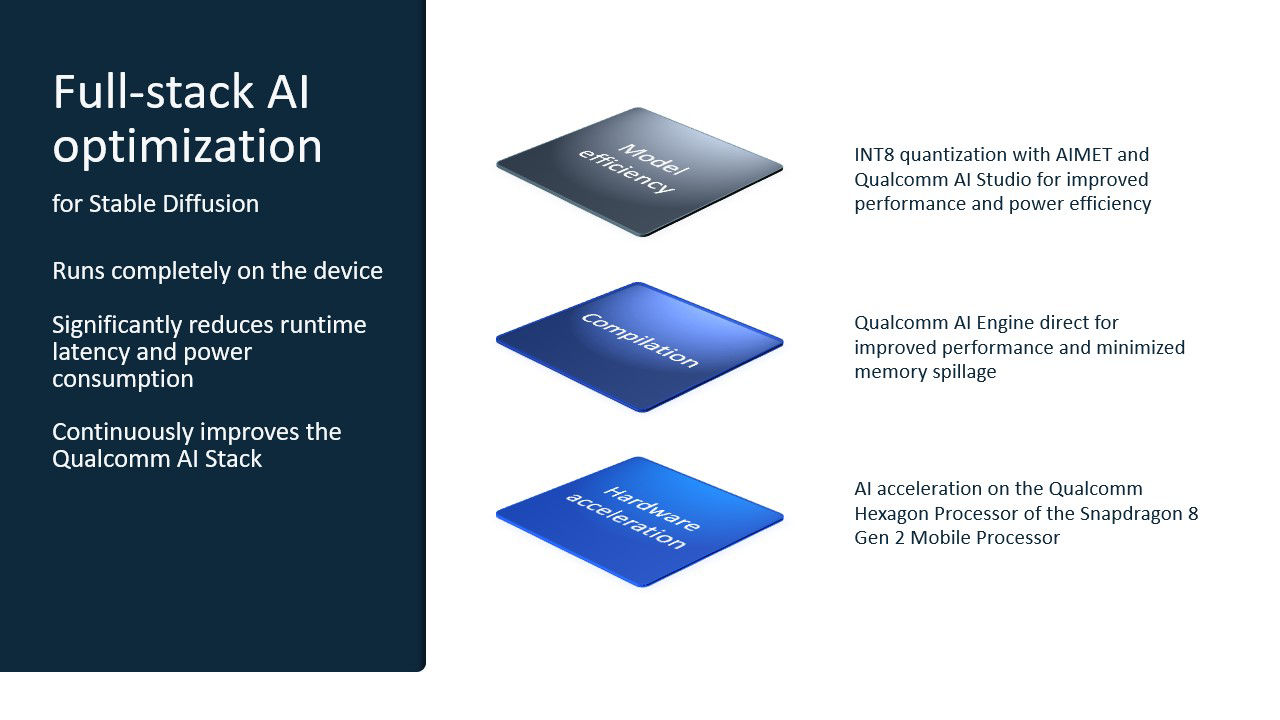

Full-stack AI optimization to efficiently run Stable Diffusion completely on the device.

Full-stack AI optimization with Qualcomm AI Stack

In my “AI firsts” blog post, I described how Qualcomm AI Research has taken on the task of not only generating novel AI research, but also being first to demonstrate proofs of concept on commercial devices, paving the way for technology to scale in the real world. Our full-stack AI research means optimizing across the application, the neural network model, the algorithms, the software, and the hardware, as well as working across disciplines within the company. For Stable Diffusion, we started with the open-source FP32 version 1.5 model from Hugging Face and made optimizations through quantization, compilation, and hardware acceleration to run it on a phone powered by the Snapdragon 8 Gen 2 Mobile Platform.

To shrink the model from FP32 to INT8, we used the AI Model Efficiency Toolkit’s (AIMET) post-training quantization, a tool developed from techniques created by Qualcomm AI Research and now incorporated into the newly announced Qualcomm AI Studio. Quantization not only increases performance but also saves power by allowing the model to efficiently run on our dedicated AI hardware and to consume less memory bandwidth. Our state-of-the-art AIMET quantization techniques, such as Adaptive Rounding (AdaRound), were able to maintain model accuracy at this lower precision without the need for re-training. The techniques were applied across all the component models in Stable Diffusion, namely the transformer-based text encoder, the VAE decoder, and the UNet. This was critical for the model to fit on the device.
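To make the FP32-to-INT8 step concrete, here is a minimal Python sketch of symmetric, per-tensor post-training quantization with plain round-to-nearest. It is an illustration under stated assumptions, not AIMET's API: the function names are hypothetical, and AdaRound goes further by learning, from a small calibration set, whether each weight should round up or down rather than always rounding to the nearest value. The memory helper assumes roughly 1 billion parameters to show why 8-bit weights matter for fitting on a phone.

```python
# Minimal sketch of symmetric, per-tensor INT8 post-training quantization
# using plain round-to-nearest. NOT AIMET's API and NOT AdaRound; it only
# illustrates the FP32 -> INT8 mapping that such tools refine.
# All function names here are hypothetical.


def quantize_int8(weights):
    """Map a list of FP32 values to INT8 codes plus one FP32 scale."""
    max_abs = max(abs(w) for w in weights)
    # Symmetric range [-127, 127]; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate FP32 values from INT8 codes."""
    return [c * scale for c in codes]


def weight_memory_gb(num_params, bytes_per_param):
    """Weight storage in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9, -0.5]
    codes, scale = quantize_int8(w)
    approx = dequantize_int8(codes, scale)
    print(codes)  # [42, -127, 0, 90, -50]
    # Round-to-nearest error is at most half a quantization step per value
    # (small tolerance added for floating-point rounding).
    print(max(abs(a - b) for a, b in zip(w, approx)) <= scale / 2 + 1e-12)
    # Hypothetical ~1B parameters: 4 bytes each in FP32 vs 1 byte in INT8.
    print(weight_memory_gb(10**9, 4), weight_memory_gb(10**9, 1))
```

At 4 bytes per FP32 weight versus 1 byte per INT8 weight, about 1 billion parameters drop from roughly 4 GB to roughly 1 GB of weight storage, before counting activations.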

For compilation, we used the Qualcomm AI Engine direct framework to map the neural network into a program that runs efficiently on our target hardware. The Qualcomm AI Engine direct framework sequences the operations to improve performance and minimize memory spillage based on the hardware architecture and memory hierarchy of the Qualcomm Hexagon Processor. Some of these enhancements resulted from AI optimization researchers working with compiler engineering teams to improve memory management in AI inference. The overall optimizations made in the Qualcomm AI Engine have significantly reduced runtime latency and power consumption, and this much-needed trend continues with Stable Diffusion.
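The post does not describe how the Qualcomm AI Engine direct framework schedules operations, but the general reason ordering matters can be sketched. The Python below uses a hypothetical toy graph with made-up tensor sizes and computes peak live memory for a given execution order, under the simplifying assumption that a tensor stays resident until its last consumer has run.

```python
# Hypothetical toy graph: op name -> (input op names, output size in MB).
# Two branches each produce a large intermediate that is then reduced.
GRAPH = {
    "in": ([], 1),
    "a_big": (["in"], 8),
    "a_small": (["a_big"], 1),
    "b_big": (["in"], 8),
    "b_small": (["b_big"], 1),
    "out": (["a_small", "b_small"], 1),
}


def peak_memory(graph, schedule):
    """Return peak live-tensor memory when ops run in `schedule` order."""
    # Count how many consumers each tensor still has to serve.
    remaining = {name: 0 for name in graph}
    for inputs, _ in graph.values():
        for src in inputs:
            remaining[src] += 1

    live, peak, done = {}, 0, set()
    for op in schedule:
        inputs, size = graph[op]
        assert all(src in done for src in inputs), f"{op} scheduled before its inputs"
        live[op] = size  # inputs and the new output are resident together
        peak = max(peak, sum(live.values()))
        done.add(op)
        for src in inputs:
            remaining[src] -= 1
            if remaining[src] == 0:
                del live[src]  # last consumer has run; buffer can be reused
    return peak


if __name__ == "__main__":
    depth_first = ["in", "a_big", "a_small", "b_big", "b_small", "out"]
    breadth_first = ["in", "a_big", "b_big", "a_small", "b_small", "out"]
    print(peak_memory(GRAPH, depth_first))    # 10
    print(peak_memory(GRAPH, breadth_first))  # 17
```

Finishing each branch before starting the next peaks at 10 MB in this toy graph, while running both large producers first peaks at 17 MB. A production compiler also weighs parallelism, tiling, and the memory hierarchy, which this sketch ignores.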

Our industry-leading edge AI performance on the Qualcomm AI Engine with the Hexagon Processor is unleashed through tight hardware and software co-design. The latest Snapdragon 8 Gen 2, with micro tile inferencing, helps enable large models like Stable Diffusion to run efficiently, and we expect more improvements with the next-generation Snapdragon. The enhancements made to dramatically speed up inference for transformer models such as MobileBERT also play a key role here, since multi-head attention is used throughout the component models in Stable Diffusion.
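Multi-head attention appears in Stable Diffusion's transformer-based text encoder and in its UNet. The pure-Python sketch below shows only the core math: softmax(Q·Kᵀ / sqrt(d_k))·V computed independently per head, with the head outputs then concatenated. The learned query, key, value, and output projections are omitted for brevity, and the sketch says nothing about how the Hexagon Processor actually executes attention.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for a single head.

    q: list of query vectors; k, v: equal-length lists of key/value vectors.
    """
    d_k = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights = softmax(scores)
        # Weighted sum of value vectors, one output channel at a time.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def multi_head_attention(q, k, v, num_heads):
    """Split features into heads, attend per head, then concatenate.

    Learned Q/K/V and output projections are omitted in this sketch.
    """
    d_model = len(q[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def head_slice(x, h):
        return [row[h * d_head:(h + 1) * d_head] for row in x]

    per_head = [
        attention(head_slice(q, h), head_slice(k, h), head_slice(v, h))
        for h in range(num_heads)
    ]
    # Concatenate each query's head outputs back along the feature dimension.
    return [sum((per_head[h][i] for h in range(num_heads)), []) for i in range(len(q))]


if __name__ == "__main__":
    # With all-zero keys every score is 0, so attention weights are uniform
    # and each head returns the mean of its value slices.
    q = [[1.0, 0.0, 0.0, 1.0]]
    k = [[0.0] * 4, [0.0] * 4]
    v = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]
    print(multi_head_attention(q, k, v, num_heads=2))  # [[2.0, 3.0, 4.0, 5.0]]
```

Splitting the feature dimension into heads lets each head attend to different relationships at the same total cost as one full-width head, which is why the per-head loop is the natural unit to accelerate.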

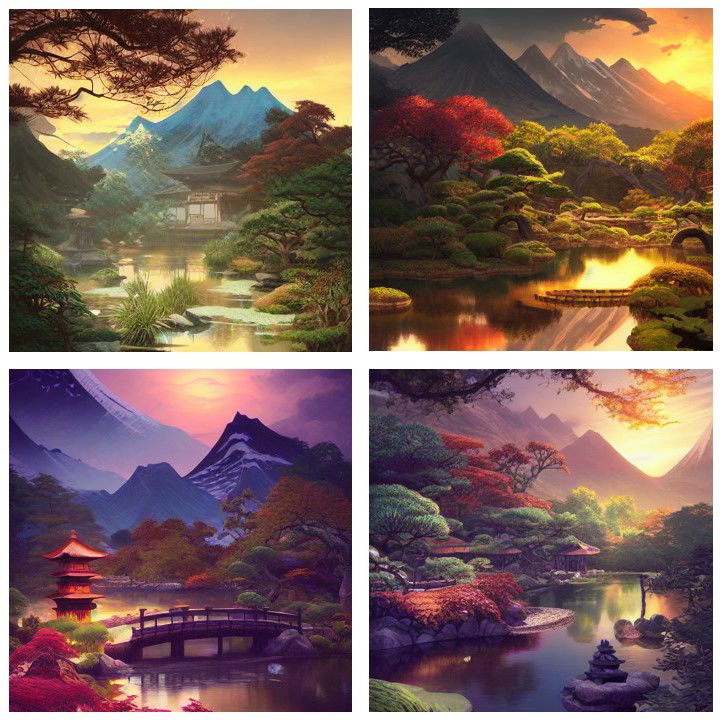

The result of this full-stack optimization is Stable Diffusion running on a smartphone in under 15 seconds for 20 inference steps, generating a 512×512 pixel image. This is the fastest inference on a smartphone and comparable to cloud latency. User text input is completely unconstrained.
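The headline numbers translate into a simple per-step budget. The sketch below divides the end-to-end time by the number of denoising steps; because the 15-second figure also covers text encoding and VAE decoding, the result is an upper bound on the average UNet step. It also assumes the standard Stable Diffusion v1.x latent space (8× spatial downsampling, 4 channels), so the UNet denoises a 4×64×64 latent rather than the full 512×512 RGB image. The helper names are hypothetical.

```python
def per_step_budget_s(total_s, steps):
    """Upper bound on the average time per denoising step, in seconds.

    The end-to-end time also includes text encoding and VAE decoding,
    so the actual per-step UNet time must be lower than this.
    """
    return total_s / steps


def latent_shape(height, width, downsample=8, channels=4):
    """Latent tensor shape, assuming a Stable Diffusion v1.x VAE (factor 8, 4 channels)."""
    assert height % downsample == 0 and width % downsample == 0
    return (channels, height // downsample, width // downsample)


if __name__ == "__main__":
    print(per_step_budget_s(15, 20))  # 0.75
    c, h, w = latent_shape(512, 512)
    print((c, h, w))                  # (4, 64, 64)
    print((512 * 512 * 3) // (c * h * w))  # 48
```

The 4×64×64 latent holds 16,384 values versus 786,432 in the 512×512×3 output image, 48 times fewer.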

Stable Diffusion images generated with the prompt: “Japanese garden at wildlife river and mountain range, highly detailed, digital illustration, artstation, concept art, matte, sharp focus, illustration, dramatic, sunset, hearthstone, art by Artgerm and Greg Rutkowski and Alphonse Mucha.”

The edge AI era is here

Our vision of the connected intelligent edge is happening before our eyes as large AI models that once ran only in the cloud move to edge devices at an ever faster pace. What was considered impossible only a few years ago is now possible. This is compelling because on-device processing with edge AI offers benefits in reliability, latency, privacy, efficient use of network bandwidth, and overall cost.

Although the Stable Diffusion model seems quite large, it encodes a huge amount of knowledge about language and visuals, enough to generate practically any imaginable picture. Furthermore, as a foundation model, Stable Diffusion can do much more than generate images from text prompts. There are a growing number of Stable Diffusion applications, such as image editing, in-painting, style transfer, and super-resolution, that can have a real impact. Being able to run the model completely on the device, without an internet connection, will open up endless possibilities.

Scaling edge AI

Running Stable Diffusion on a smartphone is just the start. All the full-stack research and optimization that went into making this possible will flow into the Qualcomm AI Stack. Our one technology roadmap allows us to scale and utilize a single AI stack that works across not only different end devices but also different models.

This means that the optimizations for Stable Diffusion to run efficiently on phones can also be used for other platforms like laptops, XR headsets, and virtually any other device powered by Qualcomm Technologies. Running all the AI processing in the cloud would be too costly, which is why efficient edge AI processing is so important. Edge AI processing ensures user privacy while running Stable Diffusion (and other generative AI models), since the input text and generated image never need to leave the device. This is a big deal for the adoption of both consumer and enterprise applications. The new AI stack optimizations also mean that the time-to-market for the next foundation model we want to run on the edge will decrease. This is how we scale across devices and foundation models to make edge AI truly ubiquitous.

At Qualcomm, we make breakthroughs in fundamental research and scale them across devices and industries to power the connected intelligent edge. Qualcomm AI Research works together with the rest of the company to integrate the latest AI developments and technology into our products — shortening the time between research in the lab and delivering advances in AI that enrich our lives.

Dr. Jilei Hou

Vice President, Engineering, Qualcomm Technologies, Inc.