AI hardware startups are a dime a dozen. But the founder of DeepX has a loftier goal — to build a company that matters to his home country, South Korea.

What’s at stake? Specsmanship (power efficiency, performance efficiency and support for a variety of algorithms) absolutely matters in assessing AI hardware. But what about the accuracy loss that occurs when system companies port AI models developed on GPUs to other types of hardware? This is DeepX’s niche.

Lokwon Kim, founder of AI chip startup DeepX, arrived at a conference in Santa Clara, Calif., with swagger and audacity. Fittingly so: DeepX was rolling out a family of AI accelerator chips that, Kim claimed, would deliver “AI everywhere, AI for everyone.”

Kim chose the Embedded Vision Summit for his coming-out party. DeepX grabbed the role of lead sponsor and secured a premium spot on the show floor — a marketing coup usually too expensive for startups.

To be clear, DeepX is an AI hardware company joining a crowded field. However, DeepX stands out for its deep roots in South Korea. By pitching his startup to the Korean government, Kim has turned DeepX’s AI research and development into a national project.

DeepX has raised $40 million from the government and $20 million from South Korea-based venture capital firms. A Series B round is planned for later this year, according to Kim.

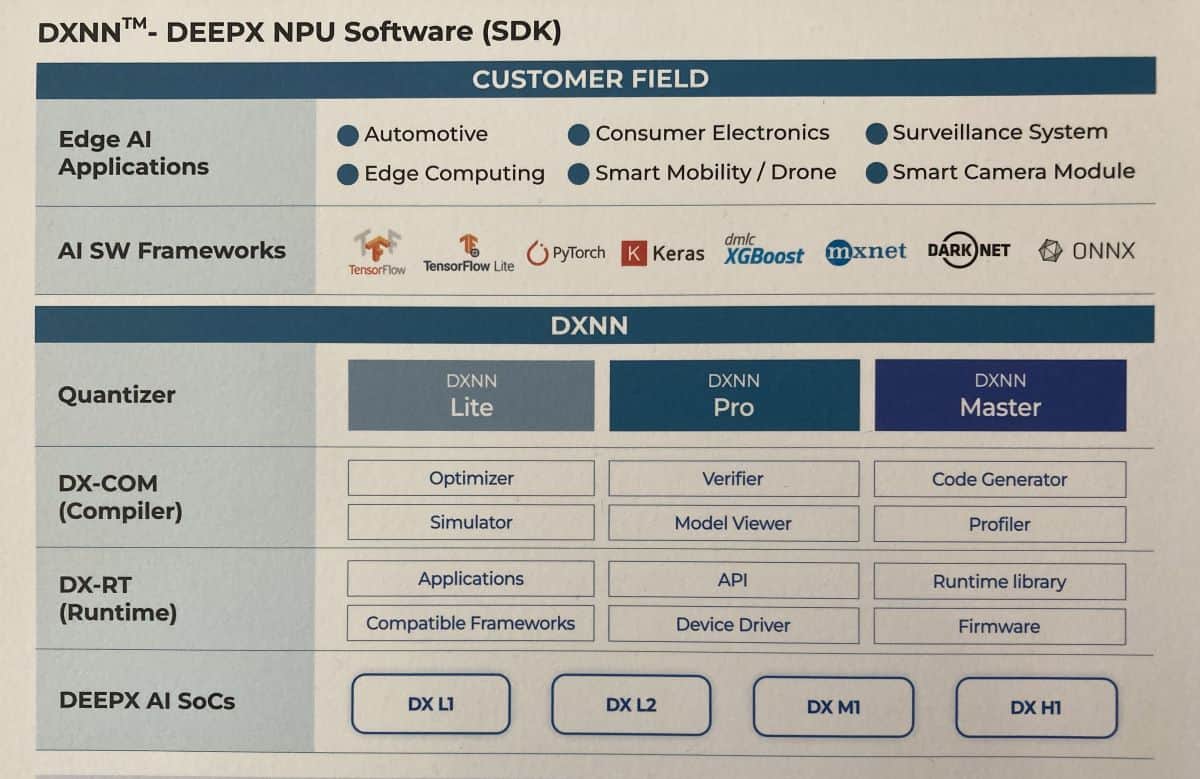

DeepX is launching a range of AI accelerators for embedded vision products designed for the edge. It has also developed DXNN, a software development kit that includes a compiler and runtime. DeepX considers DXNN its crown jewel.

DXNN can reportedly streamline deployment of deep-learning models onto DeepX’s AI SoCs. Its compiler provides tools for high-performance quantization, model optimization, and NPU inference compilation. Its runtime includes the NPU device driver, a runtime with APIs, and NPU firmware.

Most significantly, DXNN supports “automatic quantization of Deep Neural Network (DNN) models trained in floating-point format,” the startup claims.
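To make “quantization of models trained in floating-point format” concrete: quantization maps 32-bit floating-point weights onto a small integer grid (here, 8-bit) so that an NPU can compute with cheap integer arithmetic. The minimal sketch below uses a generic, textbook symmetric per-tensor int8 scheme. It is an assumption for illustration only, not DeepX’s DXNN quantizer, whose internals are not described here, and the function names are hypothetical.

```python
# Hypothetical sketch: generic symmetric, per-tensor int8 post-training quantization.
# This is a textbook scheme, NOT DeepX's DXNN quantizer.

def quantize_int8(weights):
    """Map float weights to int8 codes sharing a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # largest weight maps to +/-127
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]

def max_abs_error(original, restored):
    """Worst-case rounding error introduced by the float -> int8 -> float round trip."""
    return max(abs(a - b) for a, b in zip(original, restored))

if __name__ == "__main__":
    weights = [0.02, -0.51, 0.33, 1.27, -0.004]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print("int8 codes:", codes)
    print("scale:", scale)
    print("max round-trip error:", max_abs_error(weights, restored))
```

Note how the tiny weight -0.004 rounds to code 0 and is effectively lost: every value can be off by up to half a quantization step. Errors of this kind, accumulated across many layers, are one source of the accuracy loss discussed later in the article.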

DeepX didn’t conjure its AI hardware architecture out of thin air, though. Since founding the company in 2016, Kim said, “We have sat down and interviewed literally hundreds of global companies.”

Kim observed that “scalability, AI accuracy and power/performance efficiency” are three areas where developers of edge AI systems have struggled. DeepX’s target is to achieve GPU-level AI accuracy on its AI accelerators, while delivering high throughput and low power consumption.

‘The Morris Chang of Korea’

Before DeepX, Kim was a lead designer of Apple’s application processors, including the A10, A11 Bionic and A12 Bionic.

Kim’s inspiration for DeepX was his experience at IBM’s T.J. Watson Research Center, where he was a visiting scholar in 2010. He worked on developing an NPU (Neural Processing Unit) for deep learning, a project assigned to IBM by the legendary Defense Advanced Research Projects Agency (DARPA).

Kim, at that time, was a PhD student at UCLA. The idea of running state-of-the-art DNNs on an NPU has stuck with him ever since, Kim told the Ojo-Yoshida Report.

Even after joining Apple, Kim clung to the dream of his own AI startup. “I actually brought my idea to top executives at Apple.” They declined, which freed Kim to pack up and return to Korea.

During our interview, Kim asked, “Do you know Morris Chang?”

We agreed with Kim that the semiconductor industry owes much to Chang, who left the United States and launched in Taiwan a business then widely dismissed in industry circles: foundry services. That dubious beginning became Taiwan Semiconductor Manufacturing Co. (TSMC), the world’s No. 1 contract semiconductor supplier.

Expressing his admiration for Chang, Kim explained that TSMC has become so important to Taiwan — economically and politically — that it serves as a “silicon shield” against potential attack by China.

By leveraging the power of AI, Kim hopes for similar significance in his home country. He said, “I want to be Morris Chang of Korea, by creating an AI company that will be so important and crucial to the future of South Korea.”

This is a bold claim. But Kim’s thinking reflects the times. Geopolitics, for better or worse, has become intrinsic to the development of advanced semiconductors.

Strategy

DeepX has designed a range of four AI accelerators, all focused on vision applications. Its strategy is to offer a scalable solution for multiple edge vision AI segments.

With DX-L1, customers can build one-camera applications such as IP cameras, in-cabin monitoring, robotic cameras, and drones.

DX-L1, which integrates a quad-core RISC-V CPU, an ISP, MIPI interfaces and a video codec, offers 12 eTOPS of AI performance. By eTOPS, Kim means “a performance metric equivalent to GPU’s TOPS.”

Customers designing edge AI vision systems that require three to four cameras can use the DX-L2, rated at 38 eTOPS.

The DX-M1 chip, equipped with a dual ARM core and an ISP, is built to support 10 cameras, delivering 200 eTOPS.

DX-H1, offering 1,600 eTOPS, will ship on PCIe cards that can be deployed in an edge server to perform large-scale AI operations. By supporting 10,000 cameras, DX-H1 can serve a factory floor where numerous cameras monitor the manufacturing process. Like the M1, the H1 has dual ARM core and ISP blocks.

All four chips are fabricated by Samsung: the L1 and L2 on a 28nm process, the M1 on 14nm and the H1 on 5nm. Prices are $10 (L1), $20 (L2), $50 (M1) and $1,500 (H1).

Accuracy

Besides pursuing low power and high performance efficiency, DeepX has focused its resources on quantization to maintain GPU-level accuracy on its AI SoCs.

Based on its own experience, DeepX zeroed in on accuracy degradation, Kim said. He noted that porting AI models, originally trained on GPUs in floating-point format, to most types of hardware results in accuracy loss.

Customers who developed AI models on GPUs were dismayed to discover that their algorithms lost accuracy when running on other hardware.

So, DeepX’s team hunted for the point in each data path where accuracy degraded, Kim explained. The result is what DeepX calls “the world’s top performing quantizer” in its SDK, DXNN.

DXNN: DeepX’s software development kit (Source: DeepX)
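The article does not say how DeepX’s team hunted for the points in each data path where accuracy degraded. One common, generic way to run that kind of hunt is a layer-by-layer comparison between a floating-point reference and a simulated int8 (“fake-quantized”) path. The sketch below assumes a toy network of dense layers with ReLU and simple symmetric int8 scales; the network, the error metric and all function names are hypothetical and are not DeepX’s method.

```python
# Hypothetical sketch: find where int8 quantization hurts most by running a float
# reference path and a simulated-int8 path side by side, layer by layer.
# Generic debugging technique, NOT DeepX's actual method.

def fake_quant(values):
    """Quantize to int8 with one symmetric scale, then dequantize back to float."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(v / scale))) * scale for v in values]

def dense_relu(x, weights):
    """Fully connected layer (one weight row per output) followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

def layerwise_errors(x, layers):
    """For each layer, return the max abs gap between float and int8 outputs,
    normalized by the float output's peak magnitude."""
    ref, sim = list(x), fake_quant(x)
    errors = []
    for weights in layers:
        ref = dense_relu(ref, weights)                   # float reference path
        q_weights = [fake_quant(row) for row in weights]  # one scale per output row
        sim = fake_quant(dense_relu(sim, q_weights))      # re-quantize activations
        peak = max(abs(v) for v in ref) or 1.0            # avoid dividing by zero
        errors.append(max(abs(a - b) for a, b in zip(ref, sim)) / peak)
    return errors

if __name__ == "__main__":
    x = [0.5, -1.2, 3.3, 0.01]
    layers = [
        [[0.2, -0.1, 0.4, 0.3], [0.05, 0.6, -0.2, 0.1]],
        [[1.5, -0.7], [0.001, 0.9], [12.0, 0.002]],  # row with wide dynamic range
    ]
    errs = layerwise_errors(x, layers)
    print("per-layer relative error:", errs)
    print("largest degradation at layer", errs.index(max(errs)))
```

In a real toolchain the same idea is applied to full models and real calibration data; the layer with the largest gap is where a quantizer would try finer scales or higher precision.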

Market segments

Over the last several years, many AI hardware startups have fallen for the allure of automotive, with dreams that their AI chips will become the computer brain of the next-generation vehicle platform.

Kim, in contrast, has concluded that selling AI chips to car OEMs is a losing proposition for a startup, because of the time required for automotive chips to be verified, validated, and designed into vehicles. Meeting Automotive Safety Integrity Level (ASIL) requirements such as ASIL B and C adds further difficulty. Even after all that trouble, most AI chip startups don’t end up selling many chips.

Kim’s strategy, instead, is to work with automakers by licensing DeepX’s NPU as IP, including software. Kim explained that DeepX, virtually unknown to many car OEMs until recently, is now getting OEM requests to evaluate DeepX chips.

This could reflect two developments.

First, many European car OEMs have intimated to Kim that they remain undecided about their next-generation advanced vehicle platform.

Second, some leading carmakers have already committed resources to developing their own AI models using Nvidia’s GPUs. But now, accuracy degradation issues are cropping up as they port their models to other hardware. This is forcing them to look for other AI hardware solutions.

DeepX will offer IP licensing only for automakers, said Kim. For the rest of the applications, its business is selling chips.

The sweet spot for DeepX-designed AI chips, said Kim, is robotics, from delivery robots to the many robots on factory floors.

Bottom line

Nvidia has clearly won the AI market with its GPUs. The next chapter of the AI battle, however, lies in how efficiently and faithfully anyone with a GPU-trained AI model can port it to non-GPU hardware designed for the edge.

Junko Yoshida

Editor in Chief, The Ojo-Yoshida Report

This article was published by The Ojo-Yoshida Report. For more in-depth analysis, register today and get a free two-month all-access subscription.