Yu-Chuan Su, Research Scientist at Google, presents the “Learning for 360° Vision” tutorial at the May 2023 Embedded Vision Summit.

360° cameras are a core building block of virtual reality (VR) and augmented reality (AR) technology, and with the rapid growth of VR and AR they are becoming more available and more popular. People now create, share and watch 360° content in their everyday lives, and the amount of such content is increasing rapidly.

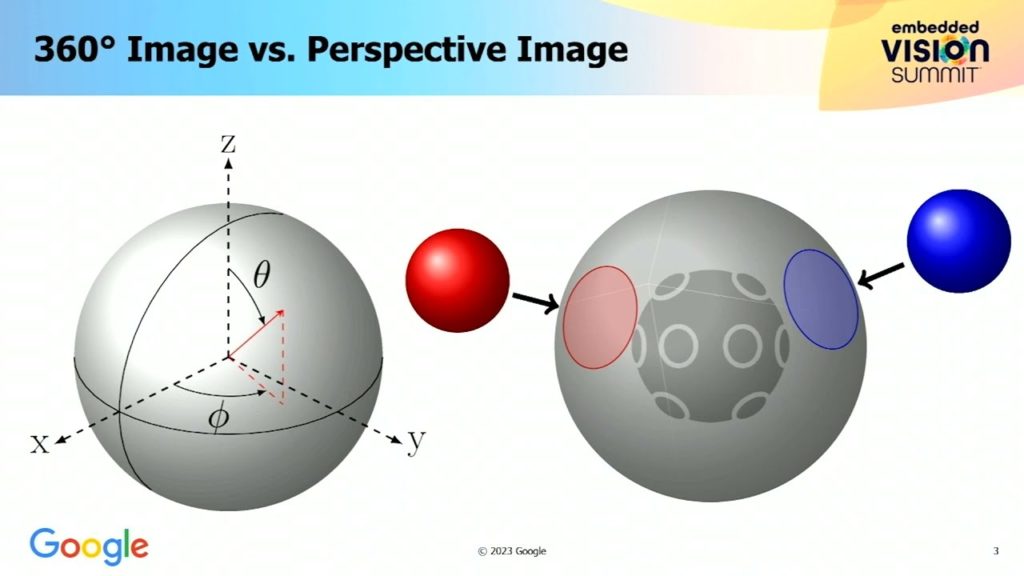

While 360° cameras offer tremendous new possibilities in various domains, they also introduce new technical challenges. For example, projecting 360° content onto a plane distorts it, and this distortion degrades the performance of visual recognition algorithms. In this talk, Su explains how his company enables visual recognition on 360° imagery using existing models. Google’s solution improves 360° video processing and builds a foundation for further exploration of this new medium.
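
To give a rough sense of the distortion mentioned above, here is a minimal sketch that assumes the 360° content is stored in an equirectangular projection. That is a common planar format, but the abstract does not name the projection used in the talk. In that format every image row spans the full 360° of longitude, so content at latitude φ is stretched horizontally by about 1 / cos(φ). The function name `horizontal_stretch` is hypothetical and chosen only for illustration.

```python
import math


def horizontal_stretch(latitude_deg: float) -> float:
    """Approximate horizontal stretch of an equirectangular image at a latitude.

    Assumption: equirectangular projection (not stated in the abstract).
    Valid for latitudes strictly between -90 and 90 degrees.
    """
    return 1.0 / math.cos(math.radians(latitude_deg))


if __name__ == "__main__":
    # Print how much wider an object appears as it moves from the equator
    # toward a pole.
    for lat in (0, 30, 60, 80):
        print(f"latitude {lat:>2} deg: stretched about {horizontal_stretch(lat):.2f}x")
```

Under this assumption, an object near the equator keeps its shape, while the same object at 60° latitude appears about twice as wide. A recognition model trained on ordinary perspective images would not have seen such stretching.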

See here for a PDF of the slides.