| LETTER FROM THE EDITOR |

Dear Colleague,

It’s almost here! Next week, May 21-23 to be exact, the Embedded Vision Summit returns to the Santa Clara Convention Center in Santa Clara, California. On Wednesday morning, Maurits Kaptein, Chief Data Scientist at Network Optix and Professor at the University of Eindhoven, will share his expertise in overcoming scaling hurdles in his general session presentation “Scaling Vision-based Edge AI Solutions: From Prototype to Global Deployment”. Incredible advances in algorithms and silicon increasingly enable us to incorporate powerful AI models into many types of edge applications. But getting from a model to a deployed product at scale remains challenging. Kaptein will detail how to address the networking, fleet management, visualization and monetization challenges that come with scaling a global vision solution.

The era of generative AI at the edge has arrived! Multimodal and transformer models are revolutionizing machine perception by integrating language understanding with perception and enabling the use of diverse sensors. In his general session presentation “What’s Next in On-device Generative AI” next Thursday morning, Jilei Hou, Vice President of Engineering and Head of AI Research at Qualcomm Technologies, will explain the need for, and the challenges of, deploying generative AI efficiently at the edge. His talk will also delve into Qualcomm’s latest research on techniques to enable efficient implementation of generative models at the edge.

As the premier conference and tradeshow for practical, deployable computer vision and edge AI, the Embedded Vision Summit focuses on empowering product creators to bring perceptual intelligence to products. This year’s Summit will feature nearly 100 expert speakers, more than 70 exhibitors and hundreds of demos across three days of presentations, exhibits and Qualcomm’s Deep Dive session. If you haven’t already registered, grab your pass today and be sure to use promo code SUMMIT24-NL for a 10% discount. Register now and tell a friend! We’ll see you there!

Brian Dipert

Editor-In-Chief, Edge AI and Vision Alliance |

| COMPUTER VISION IN EXTENDED REALITY AND ROBOTICS |

|

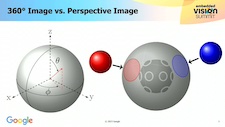

Learning for 360° Vision

360° cameras are a core building block of virtual reality (VR) and augmented reality (AR) technology, and with the rapid growth of VR and AR they are becoming more available and more popular. People now create, share and watch 360° content in their everyday lives, and the amount of 360° content is increasing rapidly. While 360° cameras offer tremendous new possibilities in various domains, they also introduce new technical challenges. For example, the distortion of 360° content in the planar projection degrades the performance of visual recognition algorithms. In this 2023 Embedded Vision Summit talk, Yu-Chuan Su, Research Scientist at Google, explains how his company enables visual recognition on 360° imagery using existing models. Google’s solution improves 360° video processing and builds a foundation for further exploration of this new medium.

Vision-language Representations for Robotics

In what format can an AI system best present what it “sees” in a visual scene to help robots accomplish tasks? This question has been a long-standing challenge for computer scientists and robotics engineers. In this 2023 Embedded Vision Summit presentation, Dinesh Jayaraman, Assistant Professor at the University of Pennsylvania, provides insights into cutting-edge techniques being used to help robots better understand their surroundings, learn new skills with minimal guidance and become more capable of performing complex tasks. Jayaraman discusses recent advances in unsupervised representation learning and explains how these approaches can be used to build visual representations that are appropriate for a controller that decides how the robot should act. In particular, he presents insights from his research group’s recent work on how to represent the constituent objects and entities in a visual scene, and how to combine vision and language in a way that permits effectively translating language-based task descriptions into images depicting the robot’s goals.

|

| AI RISKS AND OPPORTUNITIES |

|

Combating Bias in Production Computer Vision Systems

Bias is a critical challenge in predictive and generative AI that involves images of humans. People have a variety of body shapes, skin tones and other features that can be challenging to represent completely in training data. Without attention to bias risks, ML systems have the potential to treat people unfairly, and even to make humans more likely to do so. In this 2023 Embedded Vision Summit talk, Alex Thaman, Chief Architect at Red Cell Partners, examines the ways in which bias can arise in visual AI systems. He shares techniques for detecting bias and strategies for minimizing it in production AI systems.

Generative AI At the Edge

In this recent video interview, Kerry Shih of GenAI Nerds, Jeff Bier, Founder of the Edge AI and Vision Alliance, and Phil Lapsley, the Alliance’s Vice President of Business Development, explore the opportunities and trends for generative AI at the edge. Shih, Bier and Lapsley discuss topics such as:

- Where we are in the generative AI hype cycle

- What the adoption rate looks like

- Job threats, and

- Examples of the value that generative AI can really provide.

Note that Shih and Lapsley will also be co-hosting a session on generative AI at the edge at next week’s Embedded Vision Summit.

|

| UPCOMING INDUSTRY EVENTS |

|

Embedded Vision Summit: May 21-23, 2024, Santa Clara, California


|

| FEATURED NEWS |

|

Ambarella’s Latest 5 nm AI SoC Family Runs Vision-language Models and AI-based Image Processing at Low Power Consumption Levels

DEEPX Expands Its First-generation AI Chip Family into Intelligent Security and Video Analytics Markets

Digi International’s ConnectCore MP25 System-on-module is Tailored for Next-generation Computer Vision Applications

AMD’s Expanded AI Processor Portfolio Targets Professional Mobile and Desktop PCs

Hailo’s Hailo-10 AI Accelerator Brings Generative AI to Edge Devices


|

| EMBEDDED VISION SUMMIT SPONSOR SHOWCASE |

|

Attend the Embedded Vision Summit to meet these and other leading computer vision and edge AI technology suppliers!

Network Optix

Network Optix is revolutionizing the computer vision landscape with an open development platform that’s far more than just IP software. Nx Enterprise Video Operating System (EVOS) is a video-powered, data-driven operational management system for any and every type of organization. An infinite-scale, closed-loop, self-learning business operational intelligence and execution platform. An operating system for every vertical market. Just add video.

Qualcomm

Qualcomm is enabling a world where everyone and everything can be intelligently connected. Our one technology road map allows us to efficiently scale the technologies that launched the mobile revolution—including advanced connectivity, high-performance, low-power compute, on-device intelligence and more—to the next generation of connected smart devices across industries. Innovations from Qualcomm and our family of Snapdragon platforms will help enable cloud-edge convergence, transform industries, accelerate the digital economy and revolutionize how we experience the world, for the greater good.

|

| EMBEDDED VISION SUMMIT PARTNER SHOWCASE |

|

Hackster.io

Discover the world of embedded vision with Hackster.io, the world’s fastest-growing developer community. Dive into the latest AI news, trends, and tools, and explore thousands of open-source projects from 2.3M+ members around the globe.

MVPro Media

The MVPro Media team is a melting pot of talent working together to deliver the news you need to know about. Coming from diverse professional backgrounds and experiences connected to machine vision and industrial image processing, we bring our readers committed and unique content they won’t find elsewhere. Our mission at MVPro Media as a B2B publication is to find and report on the global events and companies that keep machine vision moving forward. UK-based, we cover global news but with a European flavor. We get access to the most amazing advancements in machine vision and image processing technologies before anyone else; we travel to incredible locations to report on stories, see the impact they have and listen to forward-thinking ideas.

Vision Spectra

Vision Spectra magazine covers the latest innovations that are transforming today’s manufacturing landscape: neural networking, 3D sensing, embedded vision and more. Keep up-to-date with a free subscription devoted solely to this changing field. Each quarterly issue will include rich content on a range of topics, with an in-depth look at how vision technologies are transforming industries from food and beverage to automotive and beyond, with integrators, designers, and end users in mind.

Wiley

The Spring 2024 issue of Wiley’s inspect America is now available! Read it for free and learn everything you need to know about embedded vision, including an interview with Embedded Vision Summit organizer Jeff Bier and some very exciting embedded vision products. You can also read about how smart cameras enable fast time-to-market with low hardware costs, and how a smart camera reduces energy costs by 90 percent in a rooftop ice-melting system.

|