This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Qualcomm’s accepted papers, demos and workshops at CVPR 2024 showcase the future of generative AI and perception

The Computer Vision and Pattern Recognition Conference (CVPR) 2024 begins on Monday, June 17, and Qualcomm Technologies is excited to participate with five research papers on the main track, 11 research papers on co-located workshops, 10 demos, as well as two co-organized workshops and other events for the research community. With an acceptance rate of 25%, CVPR celebrates the best research work in the field and shows us a glimpse of what we can expect from the future of generative artificial intelligence (AI) for images and videos, XR, automotive, computational photography, robotic vision and more.

At premier conferences such as CVPR, meticulously peer-reviewed papers establish the new state of the art (SOTA) and make significant contributions to the broader community. We would like to highlight a few of Qualcomm Technologies’ accepted papers that push the boundaries of computer vision research across two areas — generative AI and perception.

Generative AI research

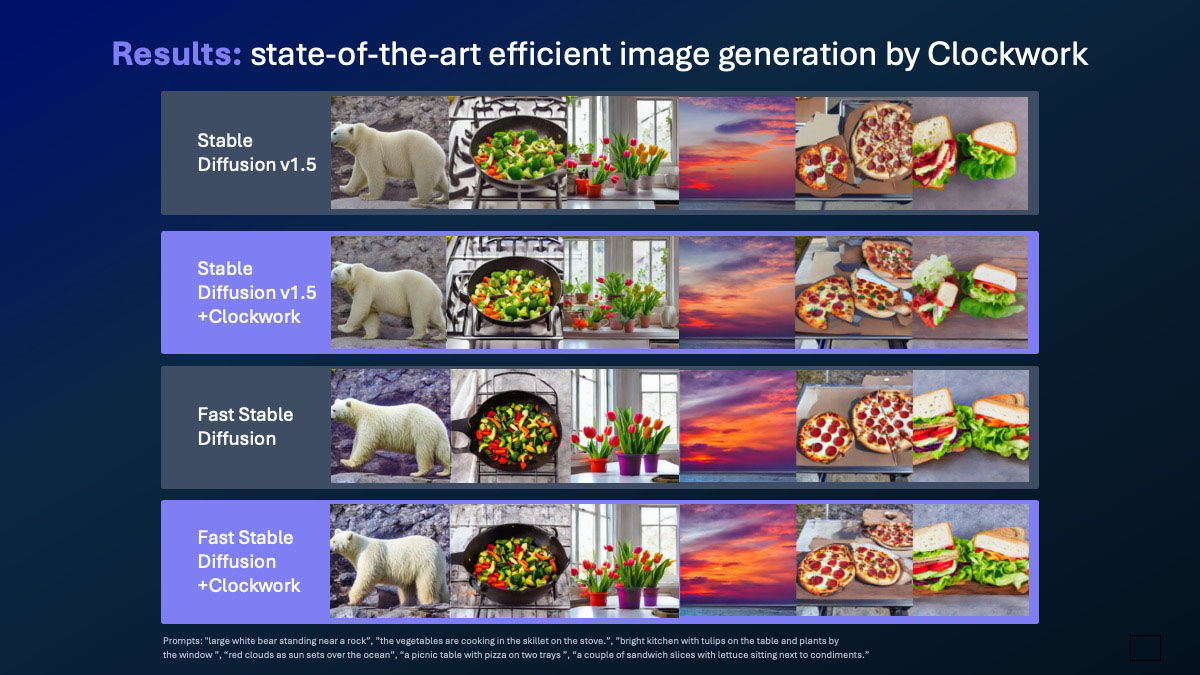

Text-to-image diffusion models allow users to create high-definition visual content instantly, but at a high computational cost. The paper “Clockwork UNets for Efficient Diffusion Models” introduces a method called Clockwork Diffusion to improve the efficiency of such models. They usually employ computationally expensive UNet-based denoising operations in every generation step. However, the paper identifies that not all operations are equally relevant for the final output quality.

It turns out that UNet layers operating on high-resolution feature maps are sensitive to small perturbations, while low-resolution feature maps have less impact on the output. Based on this observation, Clockwork Diffusion proposes a method that periodically reuses computation from preceding denoising steps to approximate low-resolution feature maps at subsequent steps.

This approach leads to improved perceptual scores of up to 32% reduction in FLOPs for Stable Diffusion v1.5. The method is suitable for low-power edge devices, making it easily scalable to real-world applications. Find out more about Clockwork from our blog post on efficient generative AI for images and videos.

Perception papers

Solving data scarcity in optical flow estimation

Our paper “OCAI: Improving Optical Flow Estimation by Occlusion and Consistency Aware Interpolation” addresses the challenge of data scarcity in optical flow estimation by proposing a novel method called Occlusion and Consistency-Aware Interpolation (OCAI), which:

- Supports robust frame interpolation by generating intermediate video frames alongside optical flows.

- Utilizes an occlusion-aware forward-warping approach to resolve ambiguities in pixel values and fill in missing values.

Additionally, this paper introduces a teacher-student style semi-supervised learning method that leverages the interpolated frames and flows to train a student model, significantly improving optical flow accuracy. The evaluations on established benchmarks demonstrate the perceptually superior interpolation quality and enhanced optical flow accuracy achieved by OCAI.

Improvements in optical flow accuracy can lead to better robot navigation, movement detection for the automotive industry and more.

Improving the accuracy of depth completion

Qualcomm AI Research has recently achieved state-of-the-art results in depth completion for computer vision with their research paper titled “DeCoTR: Enhancing Depth Completion with 2D and 3D Attentions.” The paper introduces a novel approach that combines 2D and 3D attentions to improve the accuracy of depth completion without the need for iterative spatial propagations. By applying attention to 2D features in the bottleneck and skip connections:

- The baseline convolutional depth completion model is enhanced, bringing it on par with complex transformer-based models.

- Additionally, the authors uplift the 2D features to form a 3D point cloud and introduce a 3D point transformer to process it, allowing the model to explicitly learn and exploit 3D geometric features.

The proposed approach, called DeCoTR, sets new SOTA performance on established depth completion benchmarks, highlighting its superior generalizability compared to existing approaches. This has exciting applications in areas such as autonomous driving, robotics and augmented reality, where accurate depth estimation is crucial.

Enhancing stereo video compression

The rise of new multimodal technologies like virtual reality and autonomous driving has increased the demand for efficient multi-view video compression methods.

However, existing stereo video compression approaches compress left and right views sequentially, leading to poor parallelization and runtime performance. “Low-Latency Neural Stereo Streaming” (LLSS) introduces a novel parallel stereo video coding method. LLSS addresses this issue by:

- introducing a bidirectional feature shifting module that exploits mutual information among views and

- encodes them effectively with a joint cross-view prior model for entropy coding.

This allows LLSS to process left and right views in parallel, minimizing latency and improving rate-distortion performance compared to existing neural and conventional codecs. The method outperforms the current SOTA on benchmark datasets with bitrate savings ranging from 15.8% to 50.6%.

Enabling natural looking facial expressions

With a multitude of faces and characters being generated with artificial intelligence, there is a need to create more natural looking facial expressions and facial expression transfers. This has implications in gaming and content creation. Facial action unit (AU) intensity plays a pivotal role in quantifying fine-grained expression behaviors, which is an effective condition for facial expression manipulation. In the paper “AUEditNet: Dual-Branch Facial Action Unit Intensity Manipulation with Implicit Disentanglement” the team achieved accurate AU intensity manipulation in high-resolution synthetic face images.

- Our proposed model AUEditNet achieves impressive intensity manipulation across 12 AUs, trained effectively with only 18 subjects.

- Utilizing a dual branch architecture, our approach achieves comprehensive disentanglement of facial attributes and identity without necessitating additional loss functions or implementing with large batch sizes.

- The AUEditNet method allows conditioning manipulation on intensity values or target images without retraining the network or requiring extra estimators.

This pipeline presents a promising solution for editing facial attributes despite the dataset’s limited subject count.

Technology demonstrations

We make our generative AI and computer vision research tangible by showcasing live demonstrations, proving their practical relevance beyond theoretical concepts. We invite you to visit us at booth #1931 to experience these technologies first-hand. Let me point out a few of the demos we’re excited to present.

Generative AI

On generative AI we demonstrate Stable Diffusion with LoRA adapters running on an Android smartphone. The LoRA adapters enable the creation of high-quality custom images for Stable Diffusion based on personal or artistic preferences. Users could select a LoRA adapter and set the adapter strength to produce the desired image.

We also exhibit multimodal LLM on an Android phone. Here we show Large Language and Vision Assistant (LLaVA), a more than 7 billion-parameter LMM that can accept multiple types of data inputs, including text and images, and generate multi-turn conversations about an image. LLaVA ran on a reference design powered by Snapdragon 8 Gen 3 mobile platform at a responsive token rate completely on device.

The on-device AI assistant uses an audio-driven 3D avatar (more information below).

Computer vision technology

Our booth also features a variety of other innovative computer vision demonstrations:

- Advancements in real-time and precise segmentation on edge devices: This technology can handle both known and unknown classes and supports interactive object segmentation in images or videos through user inputs such as touch, drawing a box, or even typing words.

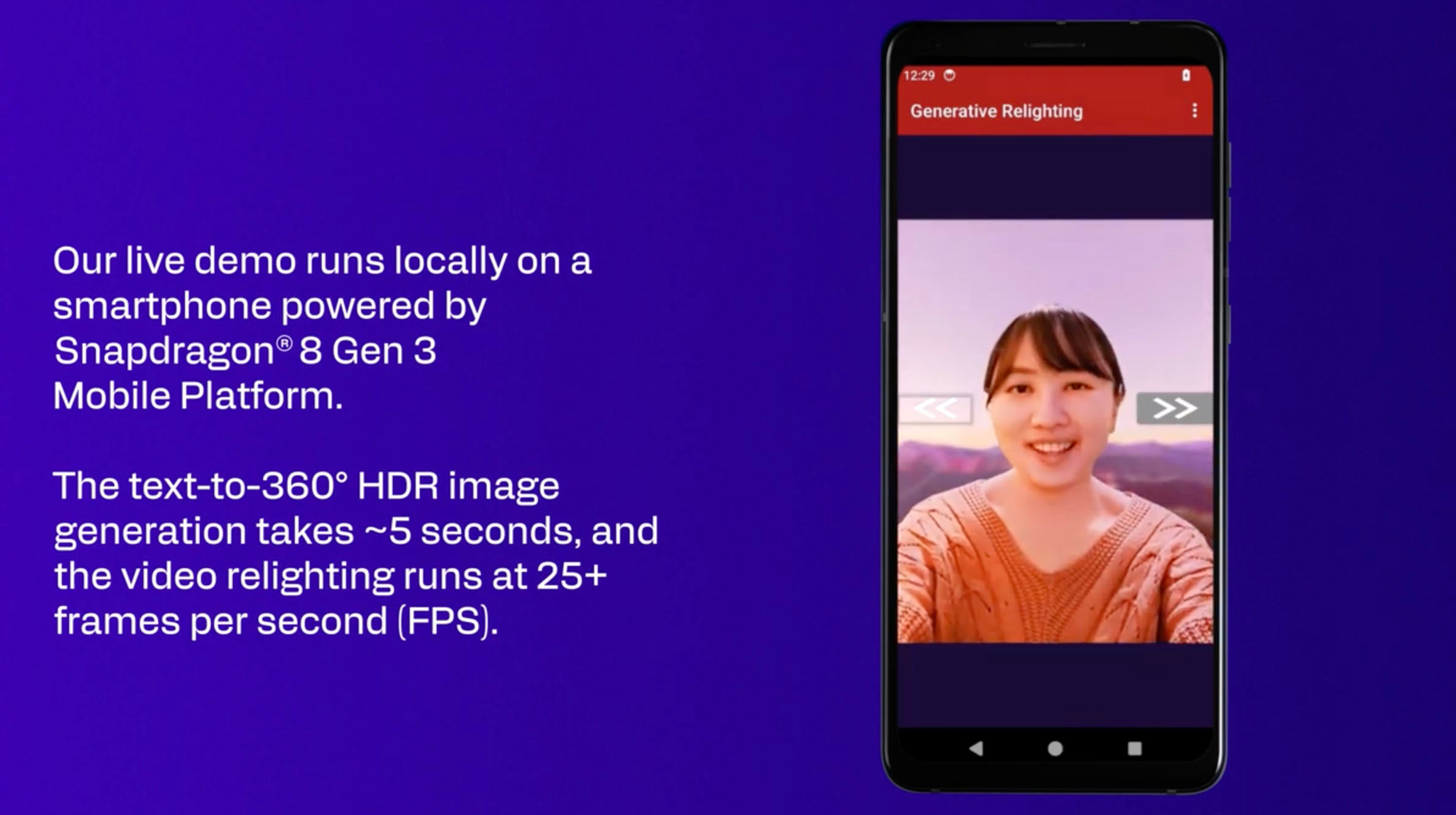

- Generative video portrait relighting technology: Works in tandem with generative AI for background replacement, offering enhanced realism for facial features in video chat applications.

- On-device AI assistant: Utilizes an audio-driven 3D avatar, complete with lip-syncing and expressive facial animations, to create a more engaging user experience.

Furthermore, we are presenting a cutting-edge facial performance capture and retargeting system, which can capture intricate facial expressions and translate them onto digital avatars, enhancing the realism and personalization of digital interactions.

Autonomous vehicle technology

For automotive applications, we are showing our Snapdragon Ride advanced driver-assistance system, showcasing perception, mapping, fusion and vehicle control using our modular stack solution. We are also featuring advancements in driver monitoring systems, as well as camera sensor data augmentation using generative AI to expand image datasets to support model training with difficult-to-capture scenarios.

Co-organized workshops

- Efficient Large Vision Models (eLVM): Large vision models (LVM) are becoming the foundation for many computer vision tasks. Qualcomm AI Research is co-organizing the Efficient Large Vision Models Workshop that focuses on the computational efficiency of LVMs, with the aim of broadening their accessibility to a wider community of researchers and practitioners. Exploring ideas for efficient adaptation of LVMs to downstream tasks and domains without the need for intensive model training and fine-tuning empowers the community to conduct research with a limited compute budget. Furthermore, accelerating the inference of LVMs enables adaptation of them for many real-time applications on low compute platforms including vehicles and phones.

- Omnidirectional Computer Vision (OmniCV): Omnidirectional cameras are already widespread in many applications such as automotive, surveillance, photography and augmented/virtual reality that benefit from a large field of view. Qualcomm AI Research is co-organizing the Omnidirectional Computer Vision Workshop to bridge the gap between the research and real-life use of omnidirectional vision technologies. This workshop links the formative research that supports these advances and the realization of commercial products that leverage this technology. It encourages development of new algorithms and applications for this imaging modality that will continue to drive future progress.

Join us in expanding the limits of generative AI and computer vision

This is only a taste of the standout highlights from our presence at CVPR 2024. If you’re attending the event, make sure to visit the Qualcomm booth #1931 to discover more about our research initiatives, see our demos in action and explore opportunities in AI careers with us.

Fatih Porikli

Senior Director of Technology, Qualcomm Technologies

Armina Stepan

Senior Marketing Comms Coordinator, Qualcomm Technologies Netherlands B.V.