This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA.

Large-scale, use-case-specific synthetic data has become increasingly important in real-world computer vision and AI workflows. That's because digital twins provide physics-based virtual replicas of factories, retail spaces, and other assets, enabling precise simulations of real-world environments.

NVIDIA Isaac Sim, built on NVIDIA Omniverse, is a fully extensible reference application for designing, simulating, testing, and training AI-enabled robots. Omni.Replicator.Agent (ORA) is an Isaac Sim extension for generating synthetic data to train computer vision models, such as TAO PeopleNet Transformer and TAO ReIdentificationNet Transformer.

This post is the second in a series on building multi-camera tracking vision AI applications. In the first post, we provided a high-level view of the end-to-end multi-camera tracking workflow covering simulation, training, and deployment of the model and the other components of the workflow.

We now dive deeper into the simulation and training steps, where we generate high-quality synthetic data to fine-tune the base model for a specific use case. In this post, we introduce TAO ReIdentificationNet (ReID), a network commonly used in both Multi-Target Multi-Camera (MTMC) tracking and Real-Time Location System (RTLS) applications to track and identify objects across different camera views. We also show how you can boost your ReID accuracy with synthetic data collected from ORA.

Specifically, we demonstrate how the data obtained from the ORA extension in Isaac Sim can be used to train and fine-tune your ReID model with the NVIDIA TAO API to fit your use case. This lets you augment your existing real datasets with synthetic data in a scalable way, improving dataset diversity and, in turn, model robustness.

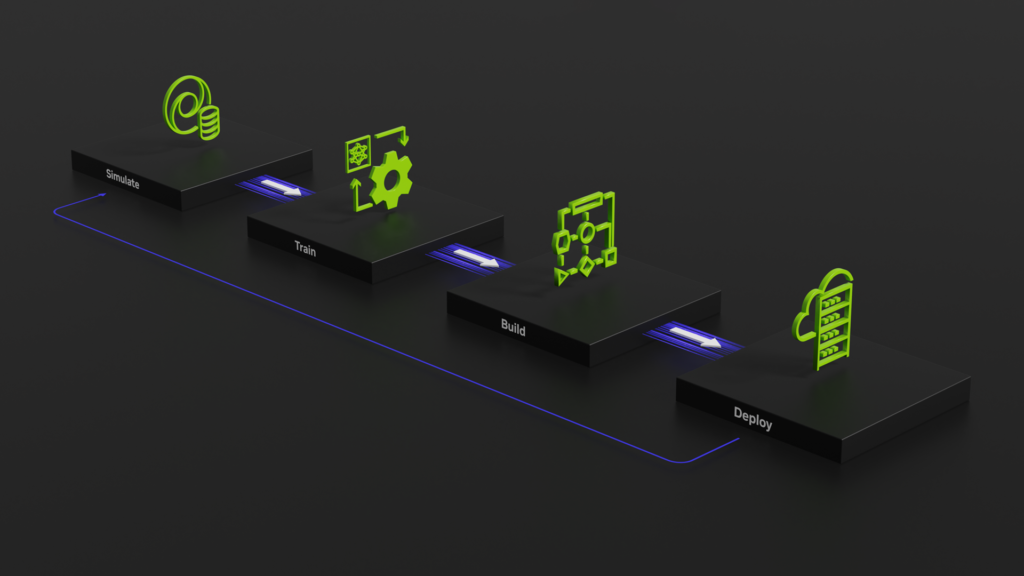

Figure 1. The simulate-train-build-deploy workflow


Overview of ReIdentificationNet

ReIdentificationNet is a network used in the MTMC and RTLS workflows to identify objects across different cameras by extracting embeddings from detected object crops. These embeddings capture essential information about appearance, texture, color, and shape, which helps identify similar objects across cameras. In other words, objects with similar appearances have similar embeddings across cameras.

The ability of the ReID model to distinguish unique objects plays a crucial role in multi-camera tracking. MTMC and RTLS applications rely on the embeddings from ReIdentificationNet to associate objects across cameras and perform tracking.

Figure 2. Multiple objects identified and tracked across multiple cameras with ReIdentificationNet (Source)

Figure 3. ReIdentificationNet used in MTMC and RTLS workflows


Model architecture

The inputs to the network are RGB image crops for objects of size 256 x 128. The model outputs an embedding vector of size 256 for each image crop.
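As a minimal sketch of how such embeddings can be compared, the following pure-Python snippet computes the cosine distance between 256-dimensional vectors. The function name and the random stand-in embeddings are illustrative assumptions; in practice, the vectors would be the outputs of the ReID model for each crop.

```python
import math
import random

EMBEDDING_SIZE = 256  # embedding length stated above


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return 1.0 - dot / (norm_a * norm_b)


# Stand-in embeddings (assumption): random vectors instead of model outputs.
rng = random.Random(0)
person_cam1 = [rng.gauss(0.0, 1.0) for _ in range(EMBEDDING_SIZE)]
# Same person seen from another camera: a slightly perturbed copy.
person_cam2 = [x + rng.gauss(0.0, 0.1) for x in person_cam1]
# A different person: an unrelated vector.
other_person = [rng.gauss(0.0, 1.0) for _ in range(EMBEDDING_SIZE)]

same_id = cosine_distance(person_cam1, person_cam2)
diff_id = cosine_distance(person_cam1, other_person)
print(f"same identity: {same_id:.3f}, different identity: {diff_id:.3f}")
```

A small distance suggests the two crops show the same object, while a distance near 1 suggests unrelated appearances.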

Currently, ReIdentificationNet supports ResNet-50 and Swin transformer backbones. The Swin variant of ReIdentificationNet is a human-centric foundational model that is pretrained on roughly 3M image crops and then fine-tuned on various supervised person re-identification datasets.

Figure 4. ReIdentificationNet training pipeline


Pretraining

For pretraining, we adopted a self-supervised learning technique called SOLIDER: Semantic Controllable Self-Supervised Learning Framework for Human-Centric Visual Tasks.

SOLIDER is built on top of DINO (self-DIstillation with NO labels) and uses prior knowledge of human-image crops to generate pseudo-semantic labels, which are used to train human representations with semantic information. SOLIDER also uses a semantic controller to adjust the semantic content of the human representation to suit the downstream task, in this case person re-identification.

For more information, see Beyond Appearance: a Semantic Controllable Self-Supervised Learning Framework for Human-Centric Visual Tasks.

The dataset for pretraining includes a combination of NVIDIA proprietary datasets along with Open Images V5.

Fine-tuning

For fine-tuning, the pretrained model is trained on various supervised person re-identification datasets. These supervised datasets include a collection of synthetic and real NVIDIA proprietary datasets.

| Dataset type | # of images | # of identities |
|---|---|---|
| Synthetic | 14,392 | 156 |
| Real | 67,563 | 4,470 |

Table 1. Overview of the training dataset

Table 2 shows the accuracy for the downstream re-identification task on the ResNet-50 and Swin-Tiny backbones.

| Model type | Model parameters | Precision | Embedding size | mAP (mean average precision) | Top-1 accuracy |
|---|---|---|---|---|---|
| ResNet-50 | 24.1 million | FP16 | 256 | 93.0% | 94.7% |
| Swin-Tiny | 28.1 million | FP16 | 256 | 94.9% | 96.9% |

Table 2. ReIdentification Transformer model size and accuracy on internal datasets

Why is fine-tuning needed?

Underperforming ReID networks can cause ID switches, where the system incorrectly associates IDs due to the high visual similarity between different individuals or changes in appearance over time.

This issue arises because the network might not generalize well to the specific conditions of a given scene, such as lighting, background, and clothing variations. For example, in Figure 5, poor embedding quality caused ID switches: ID 4 changed to ID 9 and ID 17, and ID 6 changed to ID 14.

To mitigate ID switches and improve accuracy, it is crucial to fine-tune the ReID network with data from the specific scene using ORA. This ensures that the model learns the unique characteristics and nuances of the environment, leading to more reliable identification and tracking.

Figure 5. ID switch occurring in the real-time location system workflow before the TAO ReIdentification fine-tuning process


Simulate: Using Isaac Sim and Omniverse Replicator Agent extension

The following video describes how to install, set up, and run a simulation with Isaac Sim and the ORA extension to generate synthetic data and boost ReID model accuracy.

Use Isaac Sim for Synthetic Data Generation for Multi-Camera Tracking

Best practices for configuring the simulation

To produce high-quality synthetic data, a few key aspects need to be considered:

- Character count

- Character uniqueness

- Camera placement

- Character behavior

Character count

ReIdentificationNet benefits greatly from a larger number of unique identities. It is essential to pick the right number of agents when setting up your digital twin with ORA.

Under Character Settings in the ORA interface, you can set up the number of characters. Use at least 10-12 unique characters given an environment with a similar or larger size than the Isaac Sim default warehouse digital asset.

Character uniqueness

The characters you choose in Isaac Sim should have distinct appearances. This typically includes characters wearing different clothing, shoes, accessories, headgear, and so on. It’s also important to choose the appropriate assets for your digital twin. For instance, select characters who wear safety vests for a warehouse environment.

Camera placement

Cameras should be positioned so that the union of their view frustums covers the entire floor area in which characters are expected to be detected and tracked. To ensure this, mount cameras high, such as on the ceiling, and tilt them toward the floor.

Cameras also must be positioned in such a way that the union of the view frustums maintains continuous observation of all tracked subjects. Each character should be captured from 3–4 different camera perspectives at all times for optimal data quantity and quality.

Camera placement can be verified by selecting each camera from the World section in the Stage pane, adjusting the properties, and checking its field of view.

Character behavior

Character behavior can be customized in Isaac Sim ORA. Behavior commands can be entered manually or generated randomly for each character, providing flexibility and variety in their movement.

For ReID data collection, we recommend generating behaviors randomly as long as the objects are captured by the desired camera angles.

Figure 6. Different camera angles capture the object movement through the Isaac Sim ORA extension


Train: Fine-tuning ReIdentificationNet

After the data is obtained from the ORA extension, prepare and sample it for training the TAO ReIdentificationNet model. Use the TAO fine-tuning notebook present in the Metropolis Microservices or TAO Toolkit to conduct the fine-tuning process.

Data sampling guide

The following criteria are important while sampling training data for ReID.

- Number of identities: ReIdentificationNet benefits greatly from more unique identities. All identities present in the Isaac Sim scene are automatically a part of the training sample. We recommend fine-tuning on 10-12 objects and incrementally increasing the count.

- Number of cameras: The number of cameras also greatly impacts the performance of the ReID model. More cameras lead to more coverage of the scene and the objects. We recommend placing 3–4 cameras for a small to medium environment.

- Number of training images: We recommend starting with a smaller number of samples, around 50 per ID, for a total of 500 samples. You can add more samples after the accuracy is observed to saturate.

- Bounding box aspect ratio: Because ReID is a human-centric model, each bounding box crop must be taller than it is wide. In other words, the ratio of bounding box width to height should be less than 1.

- Data format: The data obtained from the ORA extension in KITTI format must be converted to the Market-1501 format for TAO ReIdentificationNet training. ReIdentificationNet requires images to be in three folders: `bounding_box_train`, `bounding_box_test`, and `query`. Each folder consists of images of unique characters with the file name in the following format: `000x_cxsx_0x_00.jpg`. For example, the image `0001_c1s1_01_00.jpg` is from the first sequence `s1` of camera `c1`. `01` is the first frame in the sequence `c1s1`. `0001` in `0001_c1s1_01_00.jpg` is the unique ID assigned to the object. For more information, see Market-1501 Dataset and ReIdentificationNet.
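The naming scheme described in the data format item can be sketched as a pair of helper functions. This is an illustrative assumption, not the conversion script shipped with TAO or Metropolis Microservices: the helper names are hypothetical, the padding widths are inferred from the single example file name, and the trailing `_00` is kept constant as in that example.

```python
import re

# <object id>_c<camera>s<sequence>_<frame>_00.jpg, as in 0001_c1s1_01_00.jpg
NAME_PATTERN = re.compile(r"^(\d{4})_c(\d+)s(\d+)_(\d+)_00\.jpg$")


def build_name(object_id, camera, sequence, frame):
    """Compose a file name from its four integer fields."""
    return f"{object_id:04d}_c{camera}s{sequence}_{frame:02d}_00.jpg"


def parse_name(file_name):
    """Split a file name back into its integer fields."""
    match = NAME_PATTERN.match(file_name)
    if match is None:
        raise ValueError(f"unexpected file name: {file_name}")
    object_id, camera, sequence, frame = (int(g) for g in match.groups())
    return {
        "object_id": object_id,
        "camera": camera,
        "sequence": sequence,
        "frame": frame,
    }


name = build_name(object_id=1, camera=1, sequence=1, frame=1)
print(name)
print(parse_name(name))
```

Parsing the names back is a quick way to confirm that every converted crop carries a valid identity and camera before training starts.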

Figure 7 shows the dataset samples.

Figure 7. Images from the sampled ReIdentificationNet dataset
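The sampling criteria listed earlier (portrait-shaped crops and roughly 50 samples per identity) can be sketched as follows. The crop records and their field names are hypothetical; real records would come from the KITTI annotations exported by ORA.

```python
import random
from collections import defaultdict


def sample_crops(crops, per_id=50, seed=0):
    """Keep portrait-shaped crops, then pick up to per_id crops per identity."""
    by_id = defaultdict(list)
    for crop in crops:
        # Aspect ratio rule: width / height must be below 1.
        if crop["height"] > 0 and crop["width"] / crop["height"] < 1.0:
            by_id[crop["object_id"]].append(crop)
    rng = random.Random(seed)
    sampled = []
    for object_id in sorted(by_id):
        candidates = by_id[object_id]
        k = min(per_id, len(candidates))
        sampled.extend(rng.sample(candidates, k))
    return sampled


# Hypothetical records: 10 identities, 4 cameras, 30 frames per camera.
# Every fifth frame is deliberately wider than tall so the filter has work to do.
crops = [
    {
        "object_id": oid,
        "camera": cam,
        "frame": frame,
        "width": 140 if frame % 5 == 0 else 60,
        "height": 128,
    }
    for oid in range(1, 11)
    for cam in range(1, 5)
    for frame in range(30)
]

sampled = sample_crops(crops, per_id=50)
print(f"{len(sampled)} crops sampled from {len(crops)} candidates")
```

Sampling uniformly at random across all cameras is one simple choice; stratifying by camera would be a reasonable alternative when some views produce far more crops than others.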


Training tricks

After you prepare the data, use the following training tricks from Bag of Tricks and A Strong Baseline for Deep Person Re-identification to enhance the accuracy of the ReID model during the fine-tuning process.

- ID loss + triplet loss + center loss: The re-identification task can be framed as a classification problem where each class is the ID of a given object. While this ID loss helps to train ReID, it is not sufficient on its own to reach high accuracy, so it is combined with triplet and center losses. Triplet loss pulls the embeddings of samples with the same identity closer together and pushes the embeddings of different identities apart. Center loss learns a center for each class and penalizes the distance between each feature and its class center to improve intra-class compactness.

- Random erasing augmentation: Occlusion is a common scenario when tracking an object across cameras. The goal of the training process is to mimic such scenarios in the training data to improve generalization. Random erasing augmentation removes random chunks of a particular image crop to replicate occlusions.

- Warmup learning rate: A constant learning rate is not sufficient for ReIdentificationNet training to converge, so the learning rate (LR) is linearly increased from 3.5 × 10⁻⁵ to 3.5 × 10⁻⁴ over the first 10 epochs. The LR is then reduced to 3.5 × 10⁻⁵ and 3.5 × 10⁻⁶ at the 40th and 70th epochs, respectively.

- Batch normalization neck (BNNeck): As the ID loss optimizes on cosine distance and triplet loss optimizes on Euclidean distance, their losses have inconsistent meanings. This results in oscillating loss values. To mitigate this, BNNeck is used after the feature layers to acquire a normalized feature.

- Label smoothing: The identities present in the training set might not appear in the testing set. To prevent overfitting, use label smoothing as a training trick for this classification task.
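Three of these tricks are small enough to sketch in plain Python. The snippet below is an illustration under stated assumptions rather than the TAO implementation: the warmup values follow the schedule described in the Bag of Tricks paper (3.5e-5 rising to 3.5e-4 over 10 epochs, then decaying after epochs 40 and 70), the margin of 0.3 and smoothing factor of 0.1 are that paper's defaults, and the function names are hypothetical.

```python
import math


def learning_rate(epoch, base_lr=3.5e-4, warmup_epochs=10):
    """Warmup followed by step decay, for 1-based epoch numbers."""
    if epoch <= warmup_epochs:
        # Linear ramp: base_lr / 10 at epoch 1, base_lr at the last warmup epoch.
        return base_lr * epoch / warmup_epochs
    if epoch <= 40:
        return base_lr
    if epoch <= 70:
        return base_lr * 0.1
    return base_lr * 0.01


def smoothed_targets(true_class, num_classes, epsilon=0.1):
    """Label smoothing: move epsilon of the probability mass to all classes."""
    off_value = epsilon / num_classes
    targets = [off_value] * num_classes
    targets[true_class] = 1.0 - epsilon + off_value
    return targets


def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge on Euclidean distances: positives should be closer than negatives."""
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(d_pos - d_neg + margin, 0.0)


for epoch in (1, 10, 40, 41, 71):
    print(f"epoch {epoch:>2}: lr = {learning_rate(epoch):.1e}")
print(smoothed_targets(true_class=0, num_classes=4))
print(triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0]))
```

Whether the decay takes effect at epoch 40 or 41 depends on the framework's epoch indexing, so treat the exact boundaries here as one reasonable reading.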

Training specifications

TAO enables you to customize fine-tuning experiments by adjusting settings like epochs, batch size, and learning rates in a specification file. For fine-tuning, the number of epochs is set to 10. Monitor for overfitting or underfitting by evaluating the model on test data. If overfitting occurs, additional samples can be generated and training resumed to ensure the model performs well on test data.

For more information about training specifications, see ReIdentificationNet Transformer.

Evaluation

Your ReID model can now be fine-tuned with the training tricks and sampled data. Evaluation scripts can help you verify the accuracy before and after fine-tuning. Use the rank-1 and mAP scores to evaluate the accuracy of ReIdentificationNet.

Rank-1 accuracy measures how often the top prediction made by the network is correct. For each query image, check whether the first retrieved person belongs to the same identity.

mAP is a more comprehensive metric that considers the entire list of predictions made by the ReID model. For each query, compute the average precision (AP), which is the mean of the precision values at each rank where a correct match is retrieved. The mAP score is the mean of these AP scores across all queries. This metric is more robust, as it accounts for the precision at each rank in the list of retrieved individuals.
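Both metrics can be computed from ranked retrieval lists, as in the following minimal sketch. It assumes that each query already has a list of gallery identities sorted by increasing embedding distance; the function names and toy rankings are illustrative. The standard Market-1501 protocol also filters out gallery images taken by the same camera as the query, which is omitted here for brevity.

```python
def average_precision(ranked_ids, query_id):
    """Mean of precision@k over the ranks k where a correct match appears."""
    hits = 0
    precision_sum = 0.0
    for rank, gallery_id in enumerate(ranked_ids, start=1):
        if gallery_id == query_id:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def evaluate(rankings):
    """Return (rank-1 accuracy, mAP) for a list of (query_id, ranked_ids) pairs."""
    if not rankings:
        raise ValueError("at least one query is required")
    rank1_hits = sum(1 for qid, ranked in rankings if ranked and ranked[0] == qid)
    ap_values = [average_precision(ranked, qid) for qid, ranked in rankings]
    return rank1_hits / len(rankings), sum(ap_values) / len(rankings)


# Toy example: two queries, each with four ranked gallery identities.
rankings = [
    (1, [1, 2, 1, 3]),  # correct matches at ranks 1 and 3
    (2, [3, 2, 2, 1]),  # correct matches at ranks 2 and 3
]
rank1, mean_ap = evaluate(rankings)
print(f"rank-1: {rank1:.3f}, mAP: {mean_ap:.3f}")
```

In the toy example, only the first query has a correct top result, so rank-1 is 0.5, while mAP also credits the second query for retrieving its matches at ranks 2 and 3.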

Table 3 shows the results before and after fine-tuning. We sampled these images from the 10-minute videos captured using ORA with 10 objects and four cameras in the scene.

| Model | Backbone | mAP | Rank-1 |
|---|---|---|---|
| Default ReID | ResNet-50 | 63.54% | 68.32% |
| Fine-tuned ReID (30 epochs on 2000 samples) | ResNet-50 | 78.4% | 87.53% |
| Default ReID | Swin-Tiny | 83.9% | 85.23% |
| Fine-tuned ReID (30 epochs on 2000 samples) | Swin-Tiny | 91.3% | 93.80% |

Table 3. Results after fine-tuning on 2K image samples

These results indicate that fine-tuning with the sampled data can help boost accuracy scores. Increasing the number of training epochs may improve the scores further. To check whether the model is overfitting, also evaluate it on existing public ReID datasets.

Compare the distribution of embedding distances before and after fine-tuning (Figure 8).

Figure 8. Embedding distribution before and after fine-tuning


In Figure 8, the x-axis represents the cosine distance between embeddings, while the y-axis shows the density. In both plots, the green distribution corresponds to ID matches (positive distribution), and the red distribution corresponds to ID mismatches (negative distribution).

- Before fine-tuning, ID matches (green) range from 0 to 1 and ID mismatches (red) range from 0.09 to 1.

- After fine-tuning, ID matches become concentrated at lower distances (0 to 0.61), and mismatches range from 0.3 to 1, indicating improved embedding quality.

The reduced overlap between the two distributions also indicates better identity separability.

Expected training time

The expected training time for 10-12 characters should be minimal, but it increases as you scale up the number of characters, cameras, and training samples.

As a reference point, training on ~2K image samples for 30 epochs takes around 30 minutes on a single NVIDIA A100 GPU.

Deploy: Export fine-tuned model to ONNX and improve MTMC and RTLS accuracy

The fine-tuned ReID model can now be exported to ONNX format for deployment in MTMC or RTLS applications. For more information, see Optimize Processes for Large Spaces with the Multi-Camera Tracking Workflow and the Re-Identification topic in the NVIDIA Metropolis Microservices guide.

After the model is deployed, visualize the tracking results with this new ReID model (Figure 9).

Figure 9. Before and after effects of fine-tuning results of the ReID model on the warehouse scene using RTLS


Conclusion

The workflow highlighted in this post enables you to fine-tune ReID models on synthetic data. The ORA extension provides a flexible way to record character movement, and the TAO API offers a developer-friendly way to train, infer, evaluate, and export your fine-tuned ReID model, helping boost model accuracy without any manual labeling effort.

To customize and further build on top of the workflow with NVIDIA tools covering the entire vision AI lifecycle from simulation to fine-tuning and deployment, see the Metropolis Multi-Camera AI quickstart guide. For technical questions, visit the NVIDIA TAO forum or Isaac Sim forum.

For more information, see the following resources:

- Optimize Processes for Large Spaces with the Multi-Camera Tracking Workflow

- Real-Time Vision AI From Digital Twins to Cloud-Native Deployment with NVIDIA Metropolis Microservices and NVIDIA Isaac Sim

- AI-Powered Multi-Camera Tracking


Sameer Pusegaonkar

System Software Engineer, NVIDIA

Alexander Young

Solution Architect, NVIDIA

Dr. Zheng (Thomas) Tang

Senior Deep Learning Engineer, NVIDIA