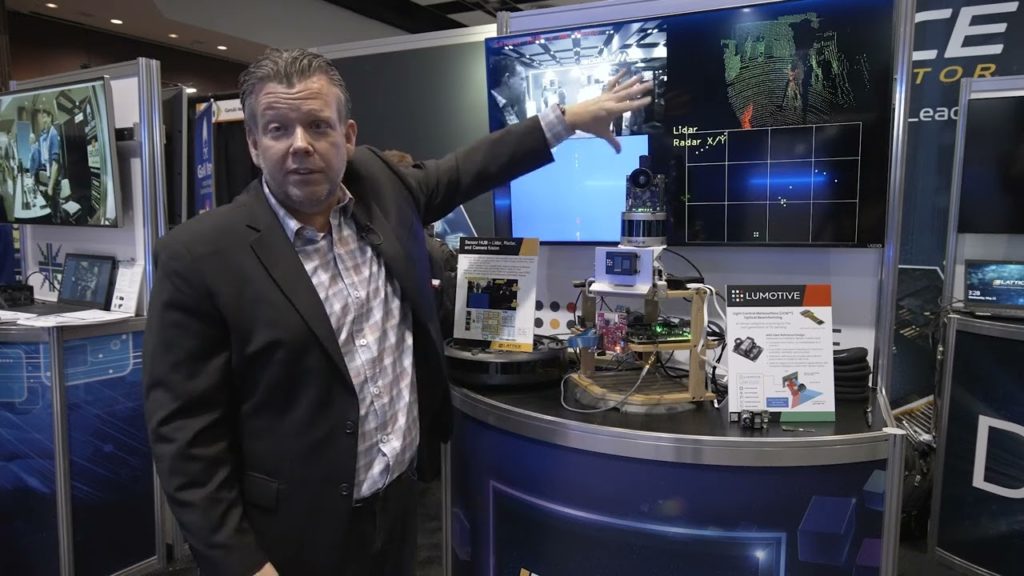

Kevin Camera, Vice President of Product at Lumotive, demonstrates the company’s latest edge AI and vision technologies and products in Lattice Semiconductor’s booth at the 2024 Embedded Vision Summit. Specifically, Camera demonstrates a sensor hub, running on Lattice’s ECP5 VIP processor board, that concurrently fuses and processes data from multiple sources: Velodyne’s VLP-16 LiDAR, Lumotive’s M30 solid-state LiDAR, Texas Instruments’ AWR2243 frequency-modulated continuous-wave (FMCW) radar, and a camera based on a Sony IMX image sensor.

A human-detection AI model runs on the camera image and reports the location of each person as a bounding box. The LiDAR data corresponding to each bounding box is then used to calculate that person’s distance, which is displayed on the bounding box along with a confidence level. The bounding box information is also translated into X/Y coordinates using the radar, and each detection is displayed as a circle in the radar plot. The demo supports ROS 2 over a USB3 link to a Raspberry Pi board, and runs OpenCV on a “soft” RISC-V core implemented in the FPGA fabric.
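The fusion step described above can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not Lumotive’s or Lattice’s implementation. It assumes the LiDAR points have already been projected into the camera image as hypothetical `(u, v, range_m)` tuples, takes the median range of the points inside a bounding box as the person’s distance, and then converts the box center and that distance into top-down X/Y coordinates using an ideal pinhole-camera model. The helper names `distance_in_bbox` and `bbox_to_xy` are hypothetical, and the pinhole bearing calculation is a swapped-in technique used in place of radar data, since the write-up does not describe how the demo maps detections using the radar.

```python
import math
from statistics import median
from typing import List, Optional, Tuple

# Hypothetical data layouts (assumptions, not the demo's actual formats):
# a bounding box in pixel coordinates, and LiDAR points already projected
# into the camera image as (u, v) pixel coordinates plus range in meters.
BBox = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Point = Tuple[float, float, float]        # (u, v, range_m)


def distance_in_bbox(points: List[Point], bbox: BBox) -> Optional[float]:
    """Return the median range of the projected LiDAR points that fall
    inside the bounding box, or None if no point lands in it."""
    x_min, y_min, x_max, y_max = bbox
    ranges = [r for (u, v, r) in points
              if x_min <= u <= x_max and y_min <= v <= y_max]
    return median(ranges) if ranges else None


def bbox_to_xy(bbox: BBox, distance_m: float,
               image_width: int, hfov_deg: float) -> Tuple[float, float]:
    """Map a box center and distance to top-down (x, y) in meters, assuming
    an ideal pinhole camera: x is lateral (positive to the right), y is forward."""
    center_u = (bbox[0] + bbox[2]) / 2
    # Focal length in pixels, derived from the horizontal field of view.
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Horizontal bearing of the box center relative to the optical axis.
    bearing = math.atan2(center_u - image_width / 2, focal_px)
    return distance_m * math.sin(bearing), distance_m * math.cos(bearing)


if __name__ == "__main__":
    points = [(110, 60, 4.0), (120, 70, 4.2), (130, 80, 4.1), (400, 300, 12.0)]
    box = (100, 50, 150, 100)
    d = distance_in_bbox(points, box)
    print(d)  # 4.1
    print(bbox_to_xy(box, d, image_width=640, hfov_deg=90.0))
```

In the usage example, three of the four points fall inside the box, so the distance is the median of 4.0, 4.2 and 4.1 m. The median is chosen over the mean so that a few background points that happen to land inside the box do not drag the estimate far from the person’s actual distance.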

The M30 is a LiDAR reference design for the Light Control Metasurface (LCM) chip developed by Lumotive, which describes it as the market’s first solid-state beam-steering LiDAR module. Built on standard semiconductor chip technology, this compact module offers software-defined, dynamic region-of-interest (ROI) control across a wide field of view, letting customers use a single sensor for multiple functions, including perception and safety.