This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella.

Autonomous driving (AD) and advanced driver assistance system (ADAS) providers are deploying more and more AI neural networks (NNs) to offer a human-like driving experience. Several of the leading AD innovators have either deployed, or have a roadmap to deploy, fully deep-learning-based AD stacks across their large installed bases of autonomous vehicles (AVs). One key recent breakthrough in achieving accurate environmental perception around the vehicle is the use of transformer NNs to perform “Bird’s Eye View” (BEV) perception. This advanced BEV perception technology is crucial for the continued evolution of AD and ADAS systems because it enables better decision-making than legacy networks for all driving functions, from L0 to L5.

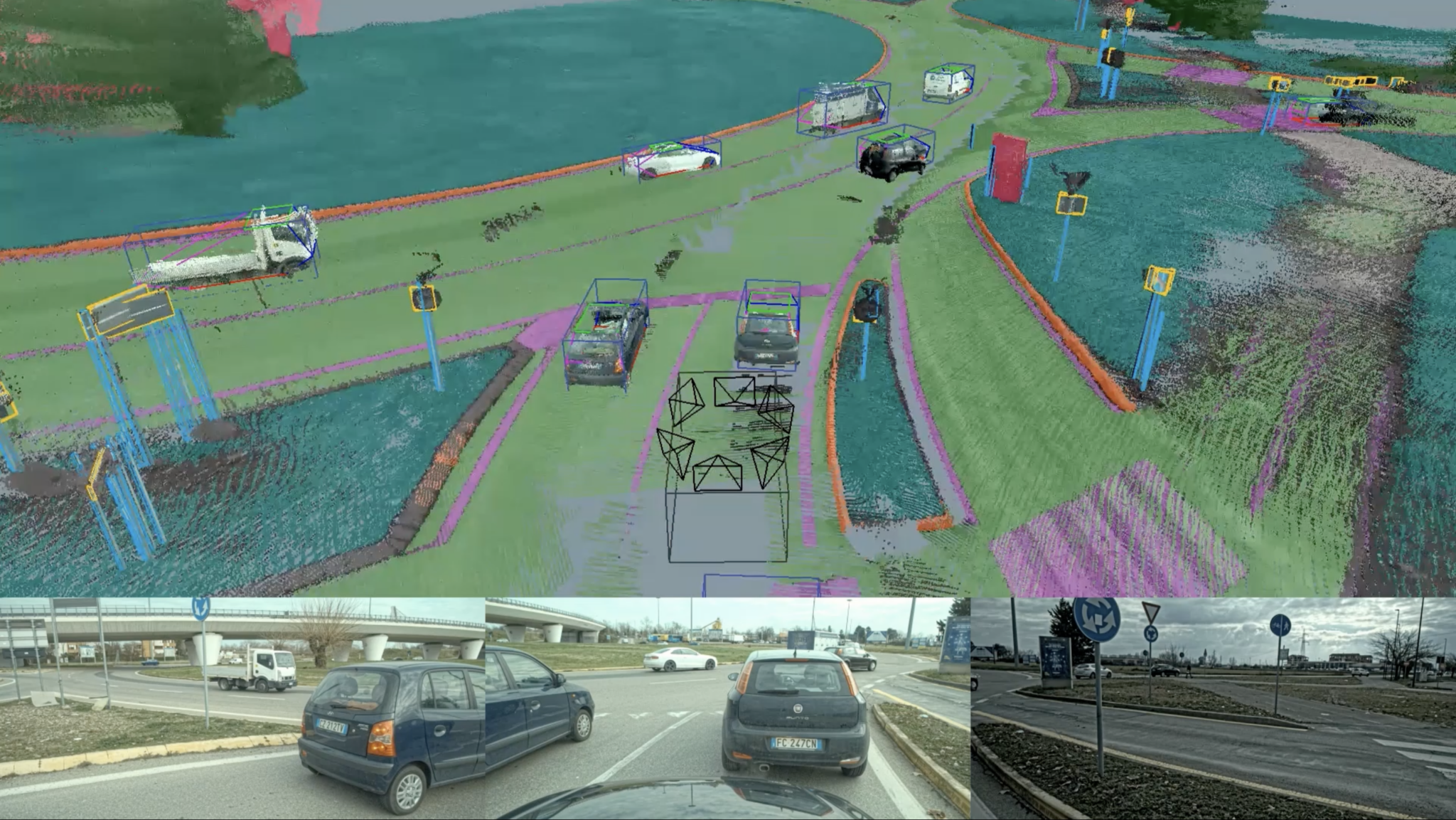

Bird’s Eye View perception of the three-dimensional space around the vehicle. The autonomous vehicle is located in the bottom-center of the BEV image (top). Three input camera views of the AV’s front and side—used in conjunction with other sensors to create the BEV perception—are shown across the bottom.

The concept of transformer NNs was introduced in the 2017 paper “Attention Is All You Need” [1]. Using an attention mechanism, a transformer NN relates different parts of the input sequence to one another in order to compute a better representation of that sequence, one that leverages a global contextual understanding of the scene. Transformer networks are best suited for tasks that “transform” an input sequence into an output sequence. Since the perception part of the AD stack is essentially a transformation from the image/sensor space to three-dimensional space, transformer NNs can more readily deliver superior perception results.
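To make the attention mechanism concrete, here is a minimal pure-Python sketch of single-head scaled dot-product attention, the core operation defined in the paper cited above. The function names and the toy `tokens` input are assumptions made for illustration, and the learned query/key/value projections of a real transformer are omitted. It is not Ambarella's implementation or any production BEV network.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: softmax(Q K^T / sqrt(d)) V.

    queries: list of n vectors of length d
    keys:    list of m vectors of length d
    values:  list of m vectors (any common length)
    Returns (outputs, weights), one row per query.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input (assumed): three 2-D tokens. In self-attention, queries, keys
# and values all derive from the same sequence; projections are omitted here.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every other token, which is how each output vector comes to reflect the whole sequence rather than only its own position.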

A good example of this is a transformer-based occupancy NN, which processes the information from the sensors positioned around the vehicle, in real time, to model the physical world in three-dimensional space around the vehicle. The resulting volumetric road occupancy, modeled in 3D vector space, is then used by the vehicle’s path planner to drive the vehicle autonomously or to perform ADAS functions.
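As a rough illustration of what a volumetric occupancy output might look like to a downstream consumer, the sketch below models a sparse voxel grid centered on the vehicle. Everything in it is an assumption made for illustration: the `OccupancyGrid` class, the axis convention, the ranges, and the 0.5 m voxel size. It shows only the data structure a path planner could query, not how an occupancy NN predicts the occupied cells.

```python
from dataclasses import dataclass, field


@dataclass
class OccupancyGrid:
    """Sparse ego-centered voxel grid (hypothetical convention:
    x forward, y left, z up, all in meters)."""

    x_range: tuple = (-40.0, 40.0)
    y_range: tuple = (-40.0, 40.0)
    z_range: tuple = (-1.0, 3.0)
    voxel_size: float = 0.5
    occupied: set = field(default_factory=set)

    def index_of(self, x, y, z):
        """Return the (i, j, k) voxel index, or None if outside the grid."""
        idx = []
        ranges = (self.x_range, self.y_range, self.z_range)
        for value, (lo, hi) in zip((x, y, z), ranges):
            if not lo <= value < hi:
                return None
            idx.append(int((value - lo) // self.voxel_size))
        return tuple(idx)

    def mark(self, x, y, z):
        """Record that the voxel containing this point is occupied."""
        idx = self.index_of(x, y, z)
        if idx is not None:
            self.occupied.add(idx)

    def is_free(self, x, y, z):
        """Planner-style query: inside the grid and not marked occupied."""
        idx = self.index_of(x, y, z)
        return idx is not None and idx not in self.occupied


grid = OccupancyGrid()
grid.mark(10.2, -1.3, 0.4)  # e.g. part of an obstacle ahead and to the right
blocked = not grid.is_free(10.2, -1.3, 0.4)
clear = grid.is_free(5.0, 0.0, 0.0)
```

Because every sensor contributes to one shared grid, a planner querying `is_free` gets a single answer per location instead of reconciling separate per-camera predictions.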

Alternative approaches can lead to inaccurate or conflicting perception outputs that confuse the vehicle’s path planner, essentially “choking” the AD system in many driving scenarios. For example, one alternative method for generating surround-view perception is to stitch together all of the environmental objects using semantics that are inferred separately for each of the sensor modules on the vehicle. Such a system uses multiple convolutional NNs to determine the road occupancy in 3D space. One drawback of this method is that lane markers and curbs can receive multiple, conflicting predictions in the stitched view in the image space. These conflicting perception data points make it hard for the path planner to drive the vehicle autonomously or to perform ADAS functions.

Because transformer NNs are relatively new, their compute is unfortunately not supported efficiently on most current automotive platforms. This forces automotive OEMs, Tier 1s and AD stack developers to deploy algorithms without the benefits of advanced BEV perception technology. The result is a sub-optimal autonomous driving experience, which could slow the overall adoption of AVs.

Introduced in 2022, Ambarella’s CV3-AD central AI domain controller family of systems-on-chip (SoCs) provides the automotive industry with the requisite compute performance to efficiently accelerate transformer NNs. In its on-chip, proprietary third-generation NN accelerator, Ambarella has included specific optimizations to accelerate transformer workloads. Additionally, the accelerator is fully supported by Ambarella’s proven software tools for NN porting, which run alongside a feature-rich software development kit (SDK). As a testament to the flexibility of our CV3-AD AI compute, Ambarella has demonstrated a 34-billion-parameter generative AI neural network running locally on the CV3-AD platform.

Please contact us for a demonstration of transformer NNs running on Ambarella’s CV3-AD development platform.

References

1. “Attention Is All You Need” – https://arxiv.org/abs/1706.03762

Lazaar Louis

Director of Automotive Product Marketing, Ambarella