This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks.

In Part 1 of this series, we introduced the top 4 issues you are likely to encounter in agriculture-related datasets for object detection: occlusion, label quality, data imbalance, and scale variation.

In Part 2 we discuss methods and techniques that can help us combat these issues.

Recap of top 4 issues in agriculture datasets for object detection

Let’s quickly recap what each issue is about:

- Occlusion: When objects of interest are partially or completely obscured by other objects or elements in the scene, it becomes challenging for object detection models to accurately detect and localize those objects.

- Label quality: If the annotations used for training contain inaccuracies, missing annotations, or mislabeled objects, they can lead to incorrect training signals, making it difficult for the model to learn the correct object representations and localization cues.

- Data imbalance: Limited object categories, backgrounds, viewpoints, or lighting conditions can result in a biased or incomplete understanding of object appearances. This lack of balance in the data may cause the model to struggle when faced with novel or unseen object instances.

- Scale variation: Objects that are too small or too large compared to the trained scales may go undetected or exhibit inaccurate localization due to the model’s limited scale sensitivity.

We also showed how to identify these issues quickly and systematically on the Tenyks platform:

Figure 1. Using the Tenyks platform to identify errors in a dataset

Now, let’s discuss general approaches to combating these issues in agriculture datasets for object detection.

Approaches to solve these problems

Figure 2. Map of potential solutions when Scope and Automation Level are prioritized

The solution we choose to address the problem can vary depending on factors such as:

- Practicality: A practical solution is one that can be realistically implemented and maintained within the constraints of the problem domain, considering dimensions such as time, budget, and available resources.

- Expertise: The expertise factor assesses whether the individuals or teams involved possess the necessary knowledge and skills to successfully address the model failures and implement an effective solution.

- Scope (model level vs dataset level): At the model level, the solution focuses on adjusting the model architecture, algorithm, or training techniques to enhance its performance. At the dataset level, the solution involves improving the quality, diversity, or size of the training dataset to provide better training data to the model.

- Automation level: Higher automation levels indicate solutions that require less human intervention or can be seamlessly integrated into existing automated systems, leading to efficient and scalable deployment.

Though not exhaustive, this list can help you settle on a coherent set of factors for your use case.

Occlusion

In agricultural images, occluded objects are frequent because plants in fields and orchards grow densely: fruit is often hidden by foliage or by other fruit.

Assuming we prioritize the automation level and scope (i.e. dataset vs model level) factors, we present three strategies to handle occlusion.

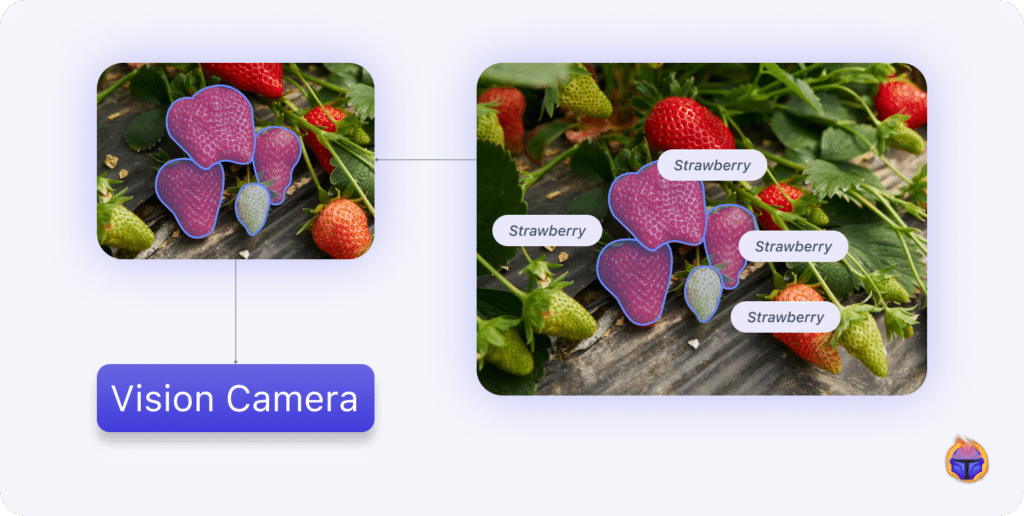

a) (Vanilla) Instance Segmentation. Rather than a regular object detection model such as YOLO, employ an instance segmentation model, which generates a segmentation mask for each object and can therefore delineate partially occluded apples.

Figure 3. Left: input example with high occlusion. Right: segmentation masks on the occluded apples.

- When to use it: Good alternative to common object detectors that fail at handling occluded objects in very dense settings (such as in our Apples Dataset).

- Strengths: a) precise segmentation masks can distinguish between occluded apples, b) the segmented instances can be used for more accurate counting of objects and measuring of object sizes.

- Weaknesses: a) requires mask annotations for training, which are more costly to acquire than bounding boxes, b) it is more computationally intensive than object detection since it involves per-pixel mask prediction.
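To make the counting and sizing strength concrete, here is a minimal NumPy sketch that takes per-instance binary masks (the kind of output an instance segmentation model such as Mask R-CNN produces) and returns an object count plus per-object areas and equivalent diameters. The function name `summarize_instances` and the `mm_per_pixel` calibration factor are hypothetical; real size estimates would need proper camera calibration.

```python
import numpy as np


def summarize_instances(masks: np.ndarray, mm_per_pixel: float = 1.0):
    """Count instances and estimate each one's size from binary masks.

    masks: array of shape (N, H, W), one boolean mask per detected apple.
    mm_per_pixel: hypothetical calibration factor (pixel -> millimetre).
    """
    masks = masks.astype(bool)
    # Pixel area of every instance mask.
    areas_px = masks.sum(axis=(1, 2))
    # Diameter of a circle with the same area: d = 2 * sqrt(A / pi).
    diameters = 2.0 * np.sqrt(areas_px / np.pi) * mm_per_pixel
    # Each non-empty mask is one object, even when apples touch or overlap.
    count = int((areas_px > 0).sum())
    return count, areas_px, diameters
```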

b) Amodal instance segmentation. This approach goes a step further than instance segmentation by considering occluded parts of objects: it aims to predict the complete shape of an object, including non-visible occluded regions.

By completing missing parts that are occluded by other objects or outside the image frame, this technique offers an alternative to (vanilla) instance segmentation.

- When to use: When dealing with images or scenes where objects are frequently occluded or partially visible.

- Strengths: Handles occlusions explicitly, making it more robust to occluded objects in comparison to traditional instance segmentation methods.

- Weaknesses: Often requires specialized datasets with annotations of complete object shapes, including occluded parts.
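Because an amodal model predicts both the visible mask and the full object mask, the ratio between the two gives a per-object occlusion estimate, which can be handy, for example, for reporting detection metrics by occlusion level. The sketch below assumes both masks are available as boolean arrays; `occlusion_ratio` is a hypothetical helper, not part of any particular library.

```python
import numpy as np


def occlusion_ratio(visible: np.ndarray, amodal: np.ndarray) -> np.ndarray:
    """Fraction of each object's full (amodal) extent that is hidden.

    visible, amodal: boolean arrays of shape (N, H, W). The amodal mask covers
    the whole object, including parts hidden behind leaves or other fruit; the
    visible mask is what a vanilla instance segmentation model would predict.
    """
    amodal = amodal.astype(bool)
    # Clip the visible mask to the amodal one (visible pixels are a subset).
    visible = visible.astype(bool) & amodal
    amodal_area = amodal.sum(axis=(1, 2)).astype(float)
    visible_area = visible.sum(axis=(1, 2)).astype(float)
    ratio = np.zeros_like(amodal_area)
    nonempty = amodal_area > 0  # avoid division by zero for empty masks
    ratio[nonempty] = 1.0 - visible_area[nonempty] / amodal_area[nonempty]
    return ratio
```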

c) Automatic occlusion removal. Unlike other methods that a) are model-level in scope or b) rely on manual or domain-specific occlusion removal, there are dataset-level techniques that aim to automatically remove occlusion.

Automatic occlusion removal using embeddings utilizes vector embeddings to analyze the relations between foreground and background objects. Once this analysis detects an occlusion, the method applies in-painting to remove the occluding object and fill in the region behind it.

- When to use it: This approach is particularly useful when manual inspection is too costly.

- Strengths: By using vector embeddings of foreground and background object classes, trained on modified image captions, the approach captures the image context and predicts relations between objects. This helps in accurate detection and removal of occlusions.

- Weaknesses: The approach is not entirely automatic: human support is required in one stage of the process to hand-pick occlusions based on the learned relations between object classes.
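The occlusion-detection step relies on the learned embeddings described above, which we do not reproduce here. Assuming that step has already produced a boolean mask of the occluding region, the sketch below illustrates only the in-painting step, using simple diffusion (harmonic) in-painting: each hole pixel is repeatedly replaced by the average of its four neighbors while known pixels stay fixed. This is a deliberately basic stand-in for the learned in-painting the method actually uses, and `diffusion_inpaint` is a hypothetical name.

```python
import numpy as np


def diffusion_inpaint(image: np.ndarray, mask: np.ndarray, iters: int = 500) -> np.ndarray:
    """Fill masked pixels by repeatedly averaging their 4-neighbors.

    image: array (H, W) or (H, W, C); mask: boolean (H, W), True where an
    occluding object was detected and should be removed.
    Simple stand-in for a learned in-painting network.
    """
    out = image.astype(float).copy()
    mask = mask.astype(bool)
    if out.ndim == 2:
        out = out[..., None]  # treat grayscale as a single channel
    # Initialize the hole with the mean color of the known pixels.
    out[mask] = out[~mask].mean(axis=0)
    for _ in range(iters):
        padded = np.pad(out, ((1, 1), (1, 1), (0, 0)), mode="edge")
        neighbors = (padded[:-2, 1:-1] + padded[2:, 1:-1]
                     + padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0
        out[mask] = neighbors[mask]  # only hole pixels are updated
    return out[..., 0] if image.ndim == 2 else out
```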

Table 1. Summary of methods to handle occlusion

Bleeding edge:

- A Tri-Layer Plugin to Improve Occluded Detection. Source

- Panoptic Segmentation with Embedding Modulation. Source

- Context-aware automatic occlusion removal. Source

Label quality

Issues such as imprecise bounding boxes or inconsistent annotations can have detrimental effects on the performance of the resulting model, causing problems such as reduced accuracy, limited generalization, and deteriorating performance over time.

Figure 4. Challenges and potential solutions around poor quality annotations

Missing annotations

a) Manually annotate. This is the most obvious and basic technique: it involves identifying and labeling the objects that were missed or incorrectly labeled during the initial annotation process.

- When to use it: a) the misannotations are not easily addressed through automated techniques and require human judgment, b) the resources and time available for the annotation task are sufficient to manually review and correct the annotations.

- Strengths: Ensures that the dataset contains correct and complete annotations, which can lead to improved accuracy.

- Weaknesses: a) it requires significant time and effort, especially for large datasets, b) different annotators may have varying interpretations and criteria for annotating objects, leading to inconsistencies or subjective biases in the annotations.
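Manual annotation is easier to budget when reviewers know where to look. One common way to surface candidate missing annotations, assuming you already have a model trained on the current labels, is to flag confident predictions that overlap no ground-truth box. The NumPy sketch below does exactly that; the function names and both thresholds are illustrative assumptions and would need tuning per dataset.

```python
import numpy as np


def box_iou(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between boxes a (N, 4) and b (M, 4) in [x1, y1, x2, y2]."""
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    union = area_a[:, None] + area_b[None, :] - inter
    return inter / np.maximum(union, 1e-9)


def flag_missing_annotations(pred_boxes, pred_scores, gt_boxes,
                             score_thr=0.8, iou_thr=0.3):
    """Indices of confident predictions that match no ground-truth box.

    These are candidates for human review, not automatic new labels.
    """
    pred_boxes = np.asarray(pred_boxes, float).reshape(-1, 4)
    gt_boxes = np.asarray(gt_boxes, float).reshape(-1, 4)
    scores = np.asarray(pred_scores, float)
    if len(gt_boxes) == 0:
        unmatched = np.ones(len(pred_boxes), bool)
    else:
        unmatched = box_iou(pred_boxes, gt_boxes).max(axis=1) < iou_thr
    return np.flatnonzero(unmatched & (scores >= score_thr))
```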

b) Active learning. This approach involves iteratively selecting a subset of samples from a large dataset for manual annotation, focusing on the most informative or uncertain samples.

- When to use: This technique tends to work when a) annotators or domain experts are available to provide manual annotations for the selected subset of samples, and b) there is a need to allocate annotation effort effectively.

- Strengths: a) reduces the overall annotation effort by focusing on annotating the most valuable samples, leading to time and cost savings, b) the model can be continuously refined by incorporating new annotations and selecting samples based on the evolving model’s needs.

- Weaknesses: a) relies on humans to provide accurate annotations, meaning the quality of annotations can vary based on annotator expertise and consistency, b) requires multiple iterations of model training and annotation selection, which may extend the overall development timeline.
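A simple selection rule for active learning is uncertainty sampling. The sketch below scores each image by the mean binary entropy of its detection confidences (highest when scores sit near 0.5) and picks the most uncertain images up to an annotation budget. This is only one of many possible acquisition functions, chosen here for brevity, and the input format (a dict from image id to confidence scores) is an assumption.

```python
import numpy as np


def image_uncertainty(scores) -> float:
    """Mean binary entropy of the detection confidences for one image."""
    p = np.asarray(scores, float)
    if p.size == 0:
        # No detections: this rule cannot tell "empty" from "all missed".
        return 0.0
    p = np.clip(p, 1e-6, 1 - 1e-6)  # avoid log(0)
    entropy = -(p * np.log(p) + (1 - p) * np.log(1 - p))
    return float(entropy.mean())


def select_for_annotation(per_image_scores: dict, budget: int) -> list:
    """Return the `budget` image ids the model is least certain about."""
    ranked = sorted(per_image_scores,
                    key=lambda k: image_uncertainty(per_image_scores[k]),
                    reverse=True)
    return ranked[:budget]
```

In each round, the selected images go to annotators, the model is retrained, and the scores are recomputed on the remaining pool.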

Inconsistent annotations

a) Data cleaning. Manually review and fix incorrect or inaccurate bounding boxes and class labels in the training data.

- When to use: a) when the dataset is small enough for manual review to be feasible, or b) when a high level of annotation quality is required.

- Strengths: a) it’s likely to provide high precision and recall, b) can be tailored to specific annotation guidelines.

- Weaknesses: there are a few downsides to consider: a) it’s time-consuming and labor-intensive, b) may not be feasible for very large datasets, c) quality depends on the skill of the annotators, d) may involve subjective decision-making, potentially introducing new biases.

b) Rule-based correction. Develop a set of rules based on the dataset’s characteristics to identify and correct annotation inconsistencies automatically.

- When to use it: When there are clear patterns in the annotation inconsistencies that can be captured by a set of rules.

- Strengths: a) can be quick to implement if the rules are simple, b) no need for training data or models, c) can be tailored to specific annotation guidelines.

- Weaknesses: a) may not generalize well to new data, b) requires domain knowledge and manual effort to develop rules, c) can be brittle and sensitive to rule changes.
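As an illustration, the sketch below encodes four rules that often apply to bounding-box datasets: fix swapped corners, clip boxes to the image, drop boxes too small to be real objects, and normalize class-name spellings. The rules, the `min_side` threshold, and the `CLASS_ALIASES` table are hypothetical examples; in practice they would come from your own annotation guidelines.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical alias table: map inconsistent label spellings to one class name.
CLASS_ALIASES = {"Apple": "apple", "apples": "apple", "red_apple": "apple"}


@dataclass
class Box:
    x1: float
    y1: float
    x2: float
    y2: float
    label: str


def correct_box(box: Box, width: int, height: int,
                min_side: float = 2.0) -> Optional[Box]:
    """Apply simple annotation rules; return a fixed Box, or None to drop it."""
    # Rule 1: fix swapped corners (x2 < x1 or y2 < y1).
    x1, x2 = sorted((box.x1, box.x2))
    y1, y2 = sorted((box.y1, box.y2))
    # Rule 2: clip the box to the image bounds.
    x1, x2 = max(0.0, x1), min(float(width), x2)
    y1, y2 = max(0.0, y1), min(float(height), y2)
    # Rule 3: drop degenerate or tiny boxes (also catches boxes fully outside).
    if x2 - x1 < min_side or y2 - y1 < min_side:
        return None
    # Rule 4: normalize class names.
    label = CLASS_ALIASES.get(box.label, box.label)
    return Box(x1, y1, x2, y2, label)
```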

c) Consensus annotation (or burst annotation). This approach consists of having multiple annotators label the same images independently and then aggregating their annotations.

- When to use: When you have access to more than one annotator and can budget for overlapping annotations.

- Strengths: a) inconsistent annotations from individuals are smoothed out in the aggregation process, b) model is exposed to a range of acceptable annotations for each image.

- Weaknesses: a) more expensive since instances are annotated multiple times, b) requires developing an annotation aggregation method.
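The aggregation method is the part teams usually have to build themselves. Here is a minimal sketch, assuming each annotator returns a list of `(box, label)` pairs with boxes as `[x1, y1, x2, y2]`. Boxes are greedily grouped by IoU with each group’s mean box (at most one box per annotator per group); groups drawn by fewer than `min_votes` annotators are discarded, and the rest are averaged and given a majority-vote label. The thresholds and the greedy grouping are illustrative choices, not a standard algorithm.

```python
from collections import Counter

import numpy as np


def iou(a, b) -> float:
    """IoU of two [x1, y1, x2, y2] boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return float(inter / union) if union > 0 else 0.0


def aggregate(annotations, iou_thr=0.5, min_votes=2):
    """Merge boxes from several annotators into consensus boxes.

    annotations: one list per annotator, each a list of (box, label) pairs.
    """
    clusters = []  # each cluster is a list of (annotator_id, box, label)
    for annotator_id, boxes in enumerate(annotations):
        for box, label in boxes:
            best, best_iou = None, iou_thr
            for cluster in clusters:
                if any(a == annotator_id for a, _, _ in cluster):
                    continue  # one box per annotator per cluster
                mean_box = np.mean([b for _, b, _ in cluster], axis=0)
                score = iou(box, mean_box)
                if score >= best_iou:
                    best, best_iou = cluster, score
            if best is None:
                clusters.append([(annotator_id, box, label)])
            else:
                best.append((annotator_id, box, label))
    results = []
    for cluster in clusters:
        if len(cluster) >= min_votes:
            box = np.mean([b for _, b, _ in cluster], axis=0).tolist()
            label = Counter(lab for _, _, lab in cluster).most_common(1)[0][0]
            results.append((box, label))
    return results
```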

Variability in size of annotated bounding boxes

The varying sizes of annotations in agriculture-related datasets present a challenge for models to accurately learn the sizes of objects. Models struggle to understand the typical shapes and dimensions of objects, resulting in reduced performance and limited applicability in unseen scenarios.

a) Size-agnostic architecture. Use a model architecture equipped to handle this variability rather than a traditional object detector.

Table 2. Comparison of how different Model Architectures handle Size-Variability in annotations.

Alternative approaches to this issue include rule-based correction, as explained in the previous section.
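In the spirit of rule-based correction, one simple check is to flag boxes whose area is unusual for their class, using a robust (modified) z-score on log-area: median and median absolute deviation, with the conventional 0.6745 scaling and 3.5 cutoff. This is an illustrative sketch rather than a recommended rule: genuine scale differences, such as apples near to and far from the camera, also produce large size spreads, so flagged boxes should be reviewed rather than deleted automatically.

```python
import numpy as np


def flag_size_outliers(boxes, labels, z_thr=3.5):
    """Flag boxes whose area is unusual for their class.

    boxes: (N, 4) array of [x1, y1, x2, y2]; labels: length-N class names.
    Uses a modified z-score (median / MAD) of log-area within each class.
    """
    boxes = np.asarray(boxes, float).reshape(-1, 4)
    labels = np.asarray(labels)
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    log_area = np.log(np.maximum(areas, 1e-6))  # log makes size ratios symmetric
    flags = np.zeros(len(boxes), bool)
    for cls in np.unique(labels):
        idx = labels == cls
        vals = log_area[idx]
        med = np.median(vals)
        mad = np.median(np.abs(vals - med))
        if mad == 0:
            continue  # most boxes share one size; this rule cannot score them
        robust_z = 0.6745 * (vals - med) / mad
        flags[idx] = np.abs(robust_z) > z_thr
    return flags
```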

Data imbalance

Data imbalance can hinder the model’s ability to accurately detect and differentiate between different objects or handle variations in object characteristics.

The most practical way to make a dataset more balanced is by augmenting the data:

a) Data augmentation. Augmentations in object detection refer to the process of applying various transformations or modifications to the dataset samples (e.g. image rotation, scaling, flipping, cropping, color jittering, and adding noise).

- When to use it: a) the dataset contains limited variations in terms of object scales, orientations, lighting conditions, backgrounds, b) the dataset is specific to a particular domain, such as agriculture, where the objects of interest (apples in orchards) may exhibit limited visual variability due to consistent growth patterns and environmental conditions.

- Strengths: a) augmentations introduce new variations in the dataset, which improves the model’s ability to generalize to unseen instances and increases its robustness, b) offer a way to expand the dataset without the need for collecting additional annotated samples.

- Weaknesses: a) the augmented samples are artificial and may not reflect the full variety of real-world objects (e.g. apples), limiting how much the diversity can actually be increased, b) may require some hyperparameter tuning.
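The key detail in object detection augmentation is that geometric transforms must also move the bounding boxes, while photometric ones (brightness, noise) must leave them alone. Libraries such as Albumentations handle this for many transforms; the NumPy-only sketch below shows the idea for a horizontal flip, brightness jitter, and Gaussian noise. The function name and parameter values are placeholders, not recommendations.

```python
import numpy as np


def augment(image, boxes, rng, flip_p=0.5, brightness=0.2, noise_std=0.02):
    """Random horizontal flip, brightness jitter and Gaussian noise.

    image: float array (H, W, C) in [0, 1]; boxes: (N, 4) [x1, y1, x2, y2]
    in pixels; rng: a numpy Generator.
    """
    image = image.copy()
    boxes = np.asarray(boxes, float).reshape(-1, 4).copy()
    width = image.shape[1]
    if rng.random() < flip_p:
        image = image[:, ::-1]
        # Mirror x-coordinates; the old x2 becomes the new x1.
        boxes[:, [0, 2]] = width - boxes[:, [2, 0]]
    # Photometric changes do not affect box coordinates.
    image = image * (1.0 + rng.uniform(-brightness, brightness))
    image = image + rng.normal(0.0, noise_std, size=image.shape)
    return np.clip(image, 0.0, 1.0), boxes
```

Usage: `aug_img, aug_boxes = augment(img, boxes, np.random.default_rng(0))`.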

How can you ensure that the augmented data is representative of real-world apple images? Table 3 describes some ways to do so:

Table 3. Techniques to ensure augmentations help reduce data imbalance

Bleeding edge:

- DB-GAN: Boosting Object Recognition Under Strong Lighting Conditions. Source

Scale variation

Scale variation refers to the fact that objects in images can appear at different sizes. This poses challenges for object detection models to detect objects robustly regardless of their size.

Selecting a model architecture suited to scale variation is the most common and effective way to deal with this issue. Architectures with advanced connectivity patterns, feature pyramids, optimized or eliminated anchor boxes, and cascade modules are well suited to achieving scale invariance in object detection.
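To illustrate how a feature pyramid spreads objects of different sizes across scales: the Feature Pyramid Networks paper assigns a region of width w and height h to pyramid level P_k with k = floor(k0 + log2(sqrt(w*h) / 224)), where k0 = 4 and 224 is the canonical ImageNet input size. Small apples therefore land on high-resolution levels and large ones on coarse levels. The sketch below implements that rule; clamping to levels 2 to 5 mirrors a common setup but is an assumption here.

```python
import math


def fpn_level(w: float, h: float, k0: int = 4, canonical: float = 224.0,
              k_min: int = 2, k_max: int = 5) -> int:
    """Pyramid level P_k used for a w x h region (FPN assignment rule).

    Level P_k has stride 2**k, so lower k means a finer feature map.
    """
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / canonical))
    # Clamp to the levels the (assumed) pyramid actually has.
    return max(k_min, min(k_max, k))
```

For example, a 32 x 32 px apple maps to P2 (stride 4), while a 448 x 448 px region maps to P5.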

Table 4. Architectures that can handle objects in images at different sizes

Bleeding edge:

- A robust approach for industrial small-object detection. Source

Summary

In this article, Part 2, we proposed solutions to four common issues in agricultural datasets for object detection models: occluded objects, inaccurate annotations, unrepresentative data, and varying image scales.

In Part 1, we outlined each of the four problems in detail. Occluded objects refer to those partially hidden from view, such as by foliage. Inaccurate or inconsistent annotations (i.e. poor label quality) introduce label noise into the dataset. Imbalanced data does not capture the diversity of conditions in the real world. And varying image scales and resolutions make it difficult to apply a single model.

For each issue, we suggested one or more solutions appropriate for different use cases. For occlusions, automated occlusion removal or instance segmentation are some options. Ensuring label quality calls for double-checking and correcting labels using both human review and automated approaches. Reducing data imbalance may require augmenting the dataset. And for varying scales, solutions include adopting models robust to scale changes.

There are no simple or universal remedies for these agricultural object detection challenges. But with an understanding of use case requirements and model objectives, teams can determine what combination of automated tools and human judgement will yield good enough solutions.

References

- Unknown-Aware Object Detection: Learning What You Don’t Know from Videos in the Wild. Source

- Why Object Detectors Fail: Investigating the Influence of the Dataset. Source

- Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Source

- You Only Look Once: Unified, Real-Time Object Detection. Source

- Fruit Detection Based on Automatic Occlusion Prediction and Improved YOLOv5s. Source

- EfficientDet: Scalable and Efficient Object Detection. Source

- Cascade R-CNN: Delving into High Quality Object Detection. Source

- Focal Loss for Dense Object Detection. Source

Authors: Jose Gabriel Islas Montero, Dmitry Kazhdan