This blog post was originally published at NVIDIA's website. It is reprinted here with the permission of NVIDIA.

In unveiling the specs of his new self-driving car computer at this week’s Tesla Autonomy Day investor event, Elon Musk made several things very clear to the world.

First, Tesla is raising the bar for all other carmakers.

Second, Tesla’s self-driving cars will be powered by a computer based on two of its new AI chips, each equipped with a CPU, GPU, and deep-learning accelerators. The computer delivers 144 trillion operations per second (TOPS), enabling it to collect data from a range of surround cameras, radars, and ultrasonic sensors and to run deep neural network algorithms.

Third, Tesla is working on a next-generation chip, which suggests that 144 TOPS isn’t enough.

At NVIDIA, we have long believed in the vision Tesla reiterated: self-driving cars require computers with extraordinary capabilities.

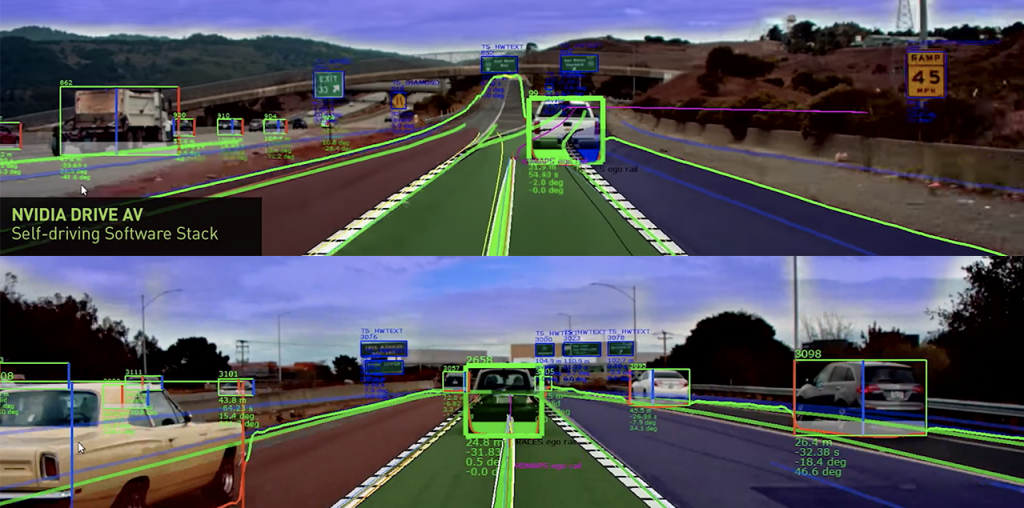

That is exactly why we designed and built the NVIDIA Xavier SoC several years ago. The Xavier processor features a programmable CPU, GPU, and deep-learning accelerators, delivering 30 TOPS. We built a computer called DRIVE AGX Pegasus based on a two-chip solution, pairing Xavier with a powerful GPU to deliver 160 TOPS, and then put two sets of them on the computer to deliver a total of 320 TOPS.

And as we announced a year ago, we’re not sitting still. Our next-generation processor, Orin, is coming.

That’s why NVIDIA is the standard Musk compares Tesla to—we’re the only other company framing this problem in terms of trillions of operations per second, or TOPS.

But while we agree with him on the big picture—that this is a challenge that can only be tackled with supercomputer-class systems—there are a few inaccuracies in Tesla’s Autonomy Day presentation that we need to correct.

It’s not useful to compare the performance of Tesla’s two-chip Full Self-Driving (FSD) computer against NVIDIA’s single-chip driver assistance system. Tesla’s two-chip FSD computer, at 144 TOPS, should instead be compared against the NVIDIA DRIVE AGX Pegasus computer, which runs at 320 TOPS for AI perception, localization, and path planning.
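For readers who want to check the arithmetic, here is a minimal Python sketch of the like-for-like comparison. It uses only the peak TOPS figures quoted in this post; the variable names are illustrative, and the ratios compare quoted peak numbers, not measured real-world performance.

```python
# Peak figures quoted in this post, in trillions of operations per second (TOPS).
XAVIER_TOPS = 30             # a single Xavier SoC
XAVIER_PLUS_GPU_TOPS = 160   # one Xavier paired with one GPU
TESLA_FSD_TOPS = 144         # Tesla's two-chip FSD computer

# DRIVE AGX Pegasus carries two Xavier-plus-GPU sets.
pegasus_tops = 2 * XAVIER_PLUS_GPU_TOPS

# System-to-system comparison: Pegasus against the two-chip FSD computer.
pegasus_vs_fsd = pegasus_tops / TESLA_FSD_TOPS

# System-to-chip comparison: the FSD computer against one Xavier.
fsd_vs_single_xavier = TESLA_FSD_TOPS / XAVIER_TOPS

print(f"Pegasus total: {pegasus_tops} TOPS")
print(f"Pegasus vs. Tesla FSD computer: {pegasus_vs_fsd:.2f}x")
print(f"Tesla FSD computer vs. single Xavier: {fsd_vs_single_xavier:.2f}x")
```

The last ratio is the mismatched comparison: it sets a two-chip system against a single chip, which is why the system-to-system figure is the more meaningful one.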

Additionally, while Xavier delivers 30 TOPS of processing, Tesla erroneously stated that it delivers 21 TOPS. Moreover, a system with a single Xavier processor is designed for assisted driving AutoPilot features, not full self-driving. Self-driving, as Tesla asserts, requires a good deal more compute.

Tesla, however, has the most important issue fully right: Self-driving cars—which are key to new levels of safety, efficiency, and convenience—are the future of the industry. And they require massive amounts of computing performance.

Indeed, Tesla sees this approach as so important to the industry’s future that it’s building its own future around it. This is the way forward. Every other automaker will need to deliver this level of performance.

There are only two places where you can get that AI computing horsepower: NVIDIA and Tesla.

And only one of these is an open platform that’s available for the industry to build on.

Rob Csongor

Vice President of Autonomous Machines, NVIDIA