Earlier this month, I pointed out several advancements in motion capture technology that had been announced at the in-progress SIGGRAPH conference. Today, with thanks to Slashdot for the heads-up, I'll pass along another SIGGRAPH-delivered announcement, this one in the surface visualization technology space. From MIT News on August 9:

Traditionally, generating micrometer-scale images has required a large, expensive piece of equipment such as a confocal microscope or a white-light interferometer, which might take minutes or even hours to produce a 3-D image. Often, such a device has to be mounted on a vibration isolation table, which might consist of a granite slab held steady by shock absorbers. But Adelson and Johnson [editor note: Edward Adelson, the John and Dorothy Wilson Professor of Vision Science and a member of the Computer Science and Artificial Intelligence Laboratory, and Micah Kimo Johnson, who formerly was a postdoc in Adelson’s lab] have built a prototype sensor, about the size of a soda can, which an operator can move over the surface of an object with one hand, and which produces 3-D images almost instantly.

Their product is called GelSight, and it's been under development for several years; a previous-generation prototype was discussed in a paper published in 2009. Here's how it works:

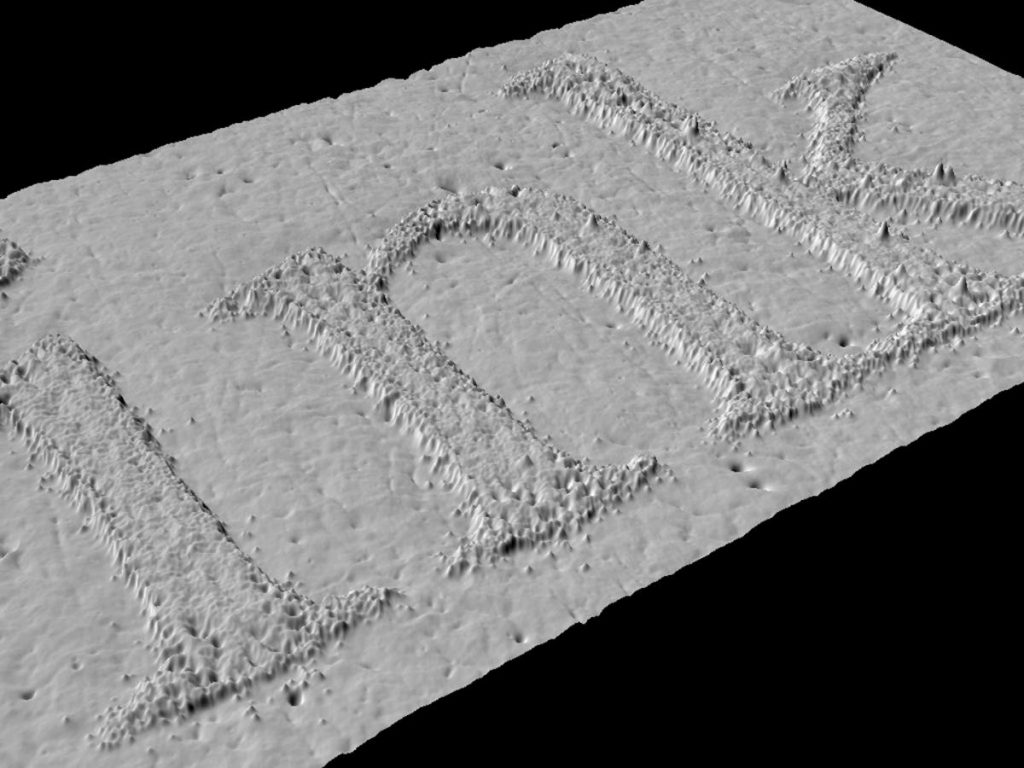

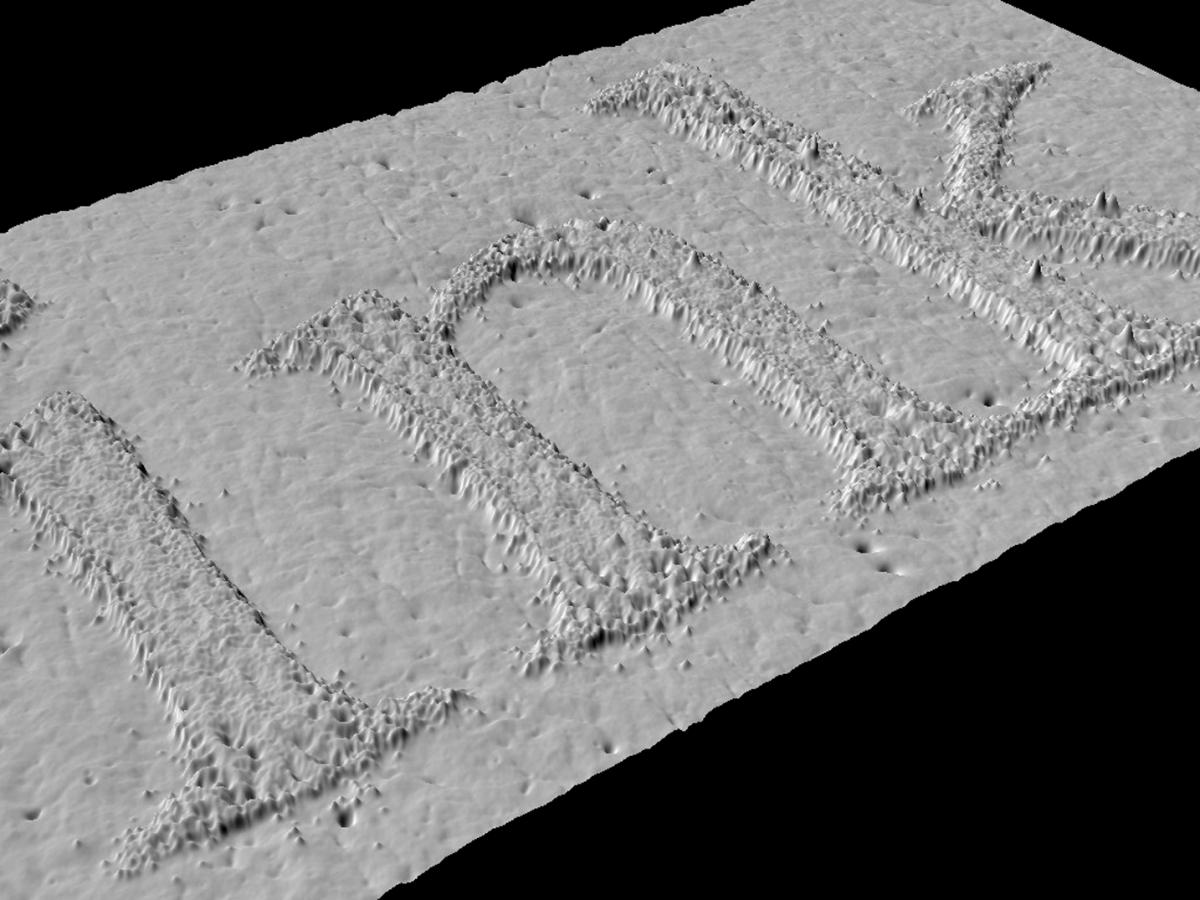

The heart of the system, dubbed GelSight, is a slab of transparent, synthetic rubber, one of whose sides is coated with a paint containing tiny flecks of metal. When pressed against the surface of an object, the paint-coated side of the slab deforms. Cameras mounted on the other side of the slab photograph the results, and computer-vision algorithms analyze the images.

How well does it work? Check out this quote:

A new, higher-resolution version of GelSight…can register physical features less than a micrometer in depth and about two micrometers across. Moreover, because GelSight makes multiple measurements of the rubber's deformation, with light coming in at several different angles, it can produce 3-D models of an object, which can be manipulated on a computer screen.

And not only does GelSight tangibly reduce the cost of surface visualization compared with prior methods, it also produces superior results in some situations.

Although GelSight’s design is simple, it addresses a fundamental difficulty in 3-D sensing. Johnson illustrates the problem with a magnified photograph of an emery board, whose surface, in close-up, looks a lot like marmalade — a seemingly gelatinous combination of reds and oranges. “The optical property of the material is making it very complicated to see the surface structure,” Johnson says. “The light is interacting with the material. It’s going through it, because the crystals are transparent, but it’s also reflecting off of it.” When a surface is pressed into the GelSight gel, however, the metallic paint conforms to its shape. All of a sudden, the optical properties of the surface become perfectly uniform. “Now, the surface structure is more readily visible, but it’s also measurable using some fairly standard computer-vision techniques,” Johnson explains.
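Johnson doesn't name the "fairly standard computer-vision techniques" in that quote, but the ingredients described (images of the same surface lit from several angles, plus a coating whose reflectance is uniform) match the textbook setup for photometric stereo. What follows is only a rough sketch of that general idea in Python, not GelSight's actual algorithm. It assumes an idealized matte (Lambertian) coating and three known light directions, and the function names and light positions are my own placeholders.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def solve3(m, rhs):
    """Solve the 3x3 linear system m * x = rhs using Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions must not lie in one plane")
    solution = []
    for col in range(3):
        # Replace one column of m with rhs, then take the determinant ratio.
        replaced = [[rhs[r] if c == col else m[r][c] for c in range(3)]
                    for r in range(3)]
        solution.append(det3(replaced) / d)
    return solution


def estimate_normal(lights, intensities):
    """Recover the surface normal and reflectance at one pixel.

    Assumed (idealized) model: intensity_k = albedo * dot(light_k, normal).
    Solving the three equations gives g = albedo * normal; the length of g
    is the albedo and its direction is the unit normal.
    """
    g = solve3(lights, intensities)
    albedo = math.sqrt(dot(g, g))
    if albedo == 0:
        raise ValueError("pixel is completely dark; normal is undefined")
    return [x / albedo for x in g], albedo


# Hypothetical light directions (unit vectors pointing toward each light).
LIGHTS = [
    normalize([1.0, 0.0, 1.0]),
    normalize([-0.5, 0.866, 1.0]),
    normalize([-0.5, -0.866, 1.0]),
]

# Simulate one pixel: a slightly tilted patch with uniform reflectance 0.8.
true_normal = normalize([0.2, -0.1, 1.0])
observed = [0.8 * dot(light, true_normal) for light in LIGHTS]

normal, albedo = estimate_normal(LIGHTS, observed)
print("estimated normal:", normal)
print("estimated albedo:", albedo)
```

Repeating this at every pixel gives a field of surface normals, which can then be integrated into a height map. The uniform coating matters because the simple model above breaks down on materials like the emery board, where light passes through and reflects unpredictably. A real metallic coating is not perfectly matte either, which may be part of why the researchers needed their own tailored algorithm.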

The development of GelSight was an iterative and somewhat convoluted process:

GelSight grew out of a project to create tactile sensors for robots, giving them a sense of touch. But Adelson and Johnson quickly realized that their system provided much higher resolution than tactile sensing required. Once they recognized how promising GelSight was, they decided to see how far they could push the resolution. The first order of business was to shrink the flecks of metal in the paint. “We need the pigments to be smaller than the features we want to measure,” Johnson explains. But the different reflective properties of the new pigments required the use of a different lighting scheme, and that in turn required a redesign of the computer-vision algorithm that measures surface features.

But the results speak for themselves, leading to perhaps the most delightful testimonial (bolded emphasis is mine) that I've ever seen in a press release or news writeup, this from a well-known computer graphics researcher:

“I think it’s just a dandy thing,” says Paul Debevec, an associate professor of graphics research at the University of Southern California. “It’s absolutely amazing what they get out of it.” Debevec’s lab has been investigating the use of polarized light to compensate for the irregular reflective properties of some surfaces, but, he says, “they’re getting detail at the level that’s, for little patches, well more than an order of magnitude better than I’ve ever seen measured for these kinds of surfaces.” As a graphics researcher, Debevec — whose PhD thesis work was the basis for the effects in the movie The Matrix — is particularly interested in what GelSight will reveal about the surface characteristics of human skin. “This kind of data is absolutely necessary to simulate that accurately,” Debevec says. “It’s pure gold.”