In last Wednesday's writeup, I mentioned that PrimeSense was reportedly discussing integration of the company's gesture-based user interface technology within various manufacturers' televisions. PrimeSense's approach, with Microsoft's Kinect as its most visible implementation, is clearly an embedded vision case study. And presumably the focus of PrimeSense's pitch to TV folks is on UI control using only a viewer's hand, thereby not requiring (and therefore at least in some sense competing against) a traditional remote control.

As I wrote the above paragraph, I thought by analogy of the contrast between Kinect's "you are the game controller" approach and the more traditional controller-centric strategies taken by Nintendo (with the Wii) and Sony (with the PlayStation Move). Sony's approach is also an embedded vision implementation, albeit a simpler one. Each player's controller has a differently colored circular 'orb', lit by an RGB LED triplet; the PlayStation Eye camera at the console measures the orb's apparent diameter and uses it to estimate the camera-to-controller depth.

The Nintendo Wii's approach is even more rudimentary. In this case, the image sensor is in the Wii Remote:

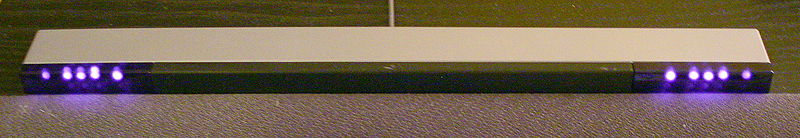

and it detects the emissions coming from infrared LEDs on both ends of the elementary Sensor Bar located at the console (the LEDs' illumination is, of course, not visible to the naked eye).

However, the Sensor Bar is predominantly used to determine player-to-screen distance. The physical spacing between the Bar's left-end and right-end LEDs is a known quantity, and by comparing it against the gap between the two LED clusters as sensed at the controller, the distance between controller and Bar can be estimated. Conversely, the controller's built-in accelerometer and silicon gyro (the latter initially in the MotionPlus accessory, and subsequently integrated into the Remote Plus design generation) are predominantly employed to ascertain 2-D horizontal and vertical motion direction, speed and acceleration.
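To make the spacing comparison concrete, here's a minimal sketch of the estimate under a simple pinhole-camera assumption. The function name, the 0.20 m LED spacing, and the 1300-pixel focal length are all illustrative values of my own, not Nintendo's actual figures, and the sketch assumes the controller is pointed roughly square-on at the Bar:

```python
def estimate_distance_m(led_spacing_m: float,
                        sensed_gap_px: float,
                        focal_length_px: float) -> float:
    """Estimate controller-to-Bar distance with a pinhole-camera model.

    led_spacing_m   -- known physical gap between the two LED clusters
    sensed_gap_px   -- gap between the two bright spots in the sensor image
    focal_length_px -- sensor focal length expressed in pixels (assumed)
    """
    if sensed_gap_px <= 0:
        raise ValueError("both LED clusters must be visible to the sensor")
    # Similar triangles: sensed_gap / focal_length = led_spacing / distance
    return focal_length_px * led_spacing_m / sensed_gap_px


# Hypothetical numbers: the further away the player stands,
# the smaller the sensed gap becomes.
near = estimate_distance_m(0.20, 260.0, 1300.0)
far = estimate_distance_m(0.20, 130.0, 1300.0)
print(f"near: {near:.2f} m, far: {far:.2f} m")
```

The same similar-triangles relationship would apply to the Move's orb, with the orb's known diameter standing in for the LED spacing.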

PrimeSense may be advocating discarding the traditional remote control, but remote control manufacturers motivated to preserve their existing businesses understandably have a different "take" on the situation. As such, they're augmenting their products with gesture capabilities (though not everyone seems to be enthralled with the debatable reduced-complexity outcome), assisted by core technology developers such as Hillcrest Labs and implemented by early adopters such as Roku. It'd be nice to think that these will end up being embedded vision business opportunities of one sort or another; if not Kinect-like, at least Move-like. But frankly, I'm not sure that even an entry-level Wii-ish approach is necessary.

After all, unless a manufacturer wants to develop a conventional remote control that also handles games, why is distance-from-screen information important? If distance isn't important, couldn't the television (or whatever's at the other end of the wireless link) assume that the remote control is being initially held in a neutral position and calibrate the center-of-screen cursor location to it? And from that point on, won't the embedded accelerometer and gyro suffice from a functional standpoint?
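As a rough illustration of what I have in mind, here's a sketch of a vision-free cursor: it starts at screen center when the remote is assumed to be in its neutral position, then moves by integrating gyro angular rates. The class name, the screen size, and the pixels-per-radian gain are hypothetical choices of mine, not any vendor's implementation:

```python
from dataclasses import dataclass


@dataclass
class GyroCursor:
    screen_w: int = 1920             # assumed display width in pixels
    screen_h: int = 1080             # assumed display height in pixels
    gain_px_per_rad: float = 2000.0  # assumed sensitivity
    x: float = 0.0
    y: float = 0.0

    def __post_init__(self) -> None:
        self.recenter()

    def recenter(self) -> None:
        """Treat the remote's current pose as neutral: cursor to center."""
        self.x = self.screen_w / 2
        self.y = self.screen_h / 2

    def update(self, yaw_rate: float, pitch_rate: float, dt: float):
        """Integrate angular rates (rad/s) over dt seconds into cursor motion."""
        self.x += yaw_rate * dt * self.gain_px_per_rad
        self.y -= pitch_rate * dt * self.gain_px_per_rad  # pitch up moves cursor up
        # Keep the cursor on screen.
        self.x = min(max(self.x, 0.0), self.screen_w - 1)
        self.y = min(max(self.y, 0.0), self.screen_h - 1)
        return self.x, self.y


cursor = GyroCursor()
print(cursor.update(yaw_rate=0.1, pitch_rate=0.0, dt=0.5))
```

One caveat with this kind of design: integrated gyro readings drift over time, so in practice something like `recenter()` would presumably need to be triggered periodically, for example by a button press.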

I welcome your perspectives on this subject!