By Jeff Bier

Founder, Embedded Vision Alliance

Co-Founder and President, BDTI

This article was originally published in abridged form by Electronic Product Design Magazine (Adobe Flash support is required to view). It is reprinted here with the permission of the original publisher.

The term “embedded vision” refers to the use of computer vision technology in embedded systems. Stated another way, “embedded vision” refers to embedded systems that extract meaning from visual inputs. Similar to the way that wireless communication has become pervasive over the past 10 years, embedded vision technology is poised to be widely deployed in the next 10 years.

It’s clear that embedded vision technology can bring huge value to a vast range of applications (Figure 1). Two examples are Mobileye’s driver assistance systems, which help prevent automobile accidents, and MG International’s swimming pool safety system, which helps prevent swimmers from drowning.

Figure 1. Embedded vision originated in computer vision applications such as assembly-line inspection, optical character recognition, robotics, surveillance, and military systems. In recent years, decreasing costs and increasing capabilities have broadened and accelerated its penetration into numerous other markets.

Just as high-speed wireless connectivity began as an exotic, costly technology, so far embedded vision technology typically has been found in complex, expensive systems, such as quality-control inspection systems for manufacturing. Advances in digital integrated circuits were critical in enabling high-speed wireless technology to evolve from exotic to mainstream. Similarly, advances in digital chips are now paving the way for the proliferation of embedded vision into high-volume applications.

With embedded vision, the industry is entering a “virtuous circle” of the sort that has characterized many other digital signal processing application domains. Although there are few chips dedicated to embedded vision applications today, these applications are adopting high-performance, cost-effective processing chips developed for other applications, including DSPs, CPUs, FPGAs and GPUs. As these chips continue to deliver more programmable performance per dollar and per watt, they will enable the creation of more high-volume embedded vision products. Those high-volume applications, in turn, will attract more attention from silicon providers, who will deliver even better performance, efficiency and programmability.

Processing Alternatives

Vision algorithms typically require high compute performance. And, of course, embedded systems of all kinds are usually required to fit into tight cost and power consumption envelopes. In other digital signal processing application domains, such as wireless communications and compression-centric consumer video equipment, chip designers achieve this challenging combination of high performance, low cost, and low power by using specialized coprocessors and accelerators to implement the most demanding processing tasks in the application. These coprocessors and accelerators are typically not programmable by the chip user, however.

This tradeoff is often acceptable in applications where algorithms are standardized. In vision applications, however, there are no standards constraining the choice of algorithms. On the contrary, there are often many approaches to choose from to solve a particular vision problem. Therefore, vision algorithms are very diverse, and change rapidly over time. As a result, the use of non-programmable accelerators and coprocessors is less attractive.

Achieving the combination of high performance, low cost, low power and programmability is challenging. Special-purpose hardware typically achieves high performance at low cost, but with little programmability. General-purpose CPUs provide programmability, but with lower performance, cost-effectiveness and energy-efficiency. Demanding embedded vision applications therefore often use a combination of processing elements. While any processor can in theory be used for embedded vision, the most promising types today are those discussed in the following sections.

High-Performance Embedded CPU

In many cases, embedded CPUs cannot provide enough performance (or cannot do so at acceptable price or power consumption levels) to implement demanding vision algorithms. Often, memory bandwidth is a key performance bottleneck, since vision algorithms typically use large amounts of data, and don’t tend to repeatedly access the same data. The memory systems of embedded CPUs are not designed for these kinds of data flows. However, like most types of processors, embedded CPUs become more powerful over time, and in some cases can provide adequate performance.
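To give a rough sense of scale for the memory bandwidth point, the sketch below estimates how much pixel data a vision pipeline moves per second. The frame size, frame rate, and number of full-frame passes are illustrative assumptions, not figures from any particular product, and the function name is hypothetical.

```python
def pixel_bandwidth_bytes_per_s(width, height, bytes_per_pixel, fps, passes=1):
    """Bytes per second moved if every frame is read `passes` times in full."""
    return width * height * bytes_per_pixel * fps * passes


# Assumed example: 1280x720 grayscale video (1 byte per pixel) at 30 frames/s,
# with an algorithm that makes 10 full passes over each frame.
rate = pixel_bandwidth_bytes_per_s(1280, 720, 1, 30, passes=10)
print(f"{rate / 1e6:.1f} MB/s")  # prints "276.5 MB/s"
```

Because each pass streams through the whole frame rather than revisiting a small working set, little of this traffic is likely to be served from cache, which is the access pattern the paragraph above describes.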

Compelling reasons exist to run vision algorithms on a CPU when possible. First, most embedded systems need a CPU for a variety of other functions. If the required vision functionality can be implemented using that same CPU, overall complexity is reduced relative to a multiprocessor solution. In addition, most vision algorithms are initially developed on PCs. Similarities between PC CPUs (and their associated tools) and embedded CPUs mean that it is typically easier to create embedded implementations of vision algorithms on embedded CPUs than on other kinds of embedded vision processors. Finally, embedded CPUs typically are the easiest to use, due to their relatively straightforward architectures and their sophisticated tools, operating systems and other application development infrastructure.

ASSP in Combination with a CPU

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of specialization, ASSPs typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually delivered with extensive application software.

The specialization that enables ASSPs to achieve strong efficiency, however, also leads to their key limitation: lack of flexibility. An ASSP designed for one application is typically not suitable for another application, even a related one. ASSPs use unique architectures, which can make programming them more difficult. And some ASSPs are not user-programmable. Another consideration is risk. ASSPs often are delivered by small suppliers, which may increase the risk that there will be difficulty in supplying the chip or in delivering successor products.

GPU with a CPU

Graphics processing units (GPUs), intended mainly for 2D and 3D graphics, are increasingly capable of being used for other functions (including vision applications). The GPUs used today are designed to be programmable to perform non-graphics functions. Such GPUs are termed “general-purpose GPUs” or “GPGPUs.” GPUs have massive parallel processing horsepower and are ubiquitous in personal computers. GPU software development tools are readily and freely available, and getting started with GPGPU programming is not terribly complex. For these reasons, GPUs are often the parallel processing engines of first resort for computer vision developers who need to accelerate algorithm execution.

GPUs are tightly integrated with general-purpose CPUs, sometimes on the same chip. However, one of the limitations of GPUs is the limited variety of CPUs with which they are currently integrated, and the limited number of operating systems that support that integration. Today, low-cost, low-power GPUs exist, designed for products like smartphones and tablets. However, these GPUs are often not GPGPUs, and therefore using them for applications other than 3D graphics is challenging.

DSP with Accelerator(s) and a CPU

Digital signal processors (DSPs) are microprocessors specialized for signal processing algorithms and applications. This specialization typically makes DSPs more efficient than general-purpose CPUs for the kinds of signal processing tasks that are at the heart of vision applications. In addition, DSPs are relatively mature and easy to use compared to other kinds of parallel processors.
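As a rough illustration of the kind of signal processing task meant here, the sketch below applies a 3x3 filter to an image using nested multiply-accumulate loops, the operation DSPs are typically built to execute efficiently. It is a plain-Python sketch for clarity only; the function name and the box-filter kernel are assumptions chosen for the example, not taken from any vendor library.

```python
def filter3x3(image, kernel):
    """Slide a 3x3 kernel over a 2D list of pixels (no border padding).

    The kernel is applied without flipping, i.e. as a correlation, which is
    the same as convolution for symmetric kernels such as the one below.
    """
    height, width = len(image), len(image[0])
    out = [[0] * (width - 2) for _ in range(height - 2)]
    for y in range(height - 2):
        for x in range(width - 2):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    # One multiply-accumulate per kernel tap.
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            out[y][x] = acc
    return out


# Assumed example input: a 4x4 image and an unnormalized box (sum) kernel.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
result = filter3x3(image, box)
print(result)
```

Each output pixel costs nine multiply-accumulates, so the work scales with the pixel count times the kernel size, which is why hardware that performs several such operations per cycle helps.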

Unfortunately, while DSPs deliver higher performance and efficiency than general-purpose CPUs, they often fail to deliver sufficient performance for demanding vision algorithms. For this reason, a typical chip for vision applications comprises a CPU, a DSP and coprocessors. This heterogeneous combination can yield excellent performance and efficiency, but can also be difficult to program. Indeed, DSP vendors typically do not enable users to program the coprocessors; rather, the coprocessors run software function libraries developed by the chip supplier.

Mobile Application Processor

A mobile application processor is a highly integrated system-on-chip, typically designed primarily for smartphones but used for other applications. Application processors typically comprise a high-performance CPU core and a constellation of specialized coprocessors, which may include a DSP, a GPU, a video processing unit, an image signal processor, etc.

These chips are specifically designed for battery-powered applications, and therefore place a premium on energy efficiency. In addition, because of the growing importance of smartphone and tablet applications, mobile application processors often have strong software development infrastructure, including low-cost development boards, operating system ports, etc. However, as with the DSPs discussed in the previous section, the specialized coprocessors found in application processors are usually not user-programmable, which limits their utility for vision applications.

FPGA with a CPU

Field programmable gate arrays (FPGAs) are flexible logic chips that can be reconfigured at the gate and block levels, enabling the user to craft computation structures that are tailored to the application at hand. Their flexibility also allows selection of I/O interfaces and on-chip peripherals matched to the application requirements. The ability to customize compute structures, coupled with the massive amount of resources available in modern FPGAs, yields high performance coupled with good cost- and energy-efficiency.

Using FPGAs, however, is essentially a hardware design function, rather than a software development activity. FPGA design is typically performed using hardware description languages (Verilog or VHDL) at the register transfer level (RTL), a very low level of abstraction. This makes FPGA design time-consuming and expensive. With that said, using FPGAs is getting easier.

IP block libraries of reusable FPGA design components are becoming increasingly capable, in some cases directly addressing vision algorithms, in others enabling supporting functionality. FPGA suppliers and their partners also increasingly offer reference designs that target specific applications, such as vision. Finally, high-level synthesis tools are increasingly effective. Users can implement relatively low-performance CPUs in the FPGA fabric, and in a few cases, FPGA manufacturers are integrating high-performance CPU cores.

The Benefits of an Industry Alliance

Embedded vision technology has the potential to enable a wide range of electronic products that are more intelligent and responsive than before, and thus more valuable to users. It can add helpful features to existing products. And it can provide significant new markets for hardware, software and semiconductor manufacturers. The Embedded Vision Alliance, a worldwide organization of technology developers and providers, is working to empower engineers to transform this potential into reality (Figure 2).

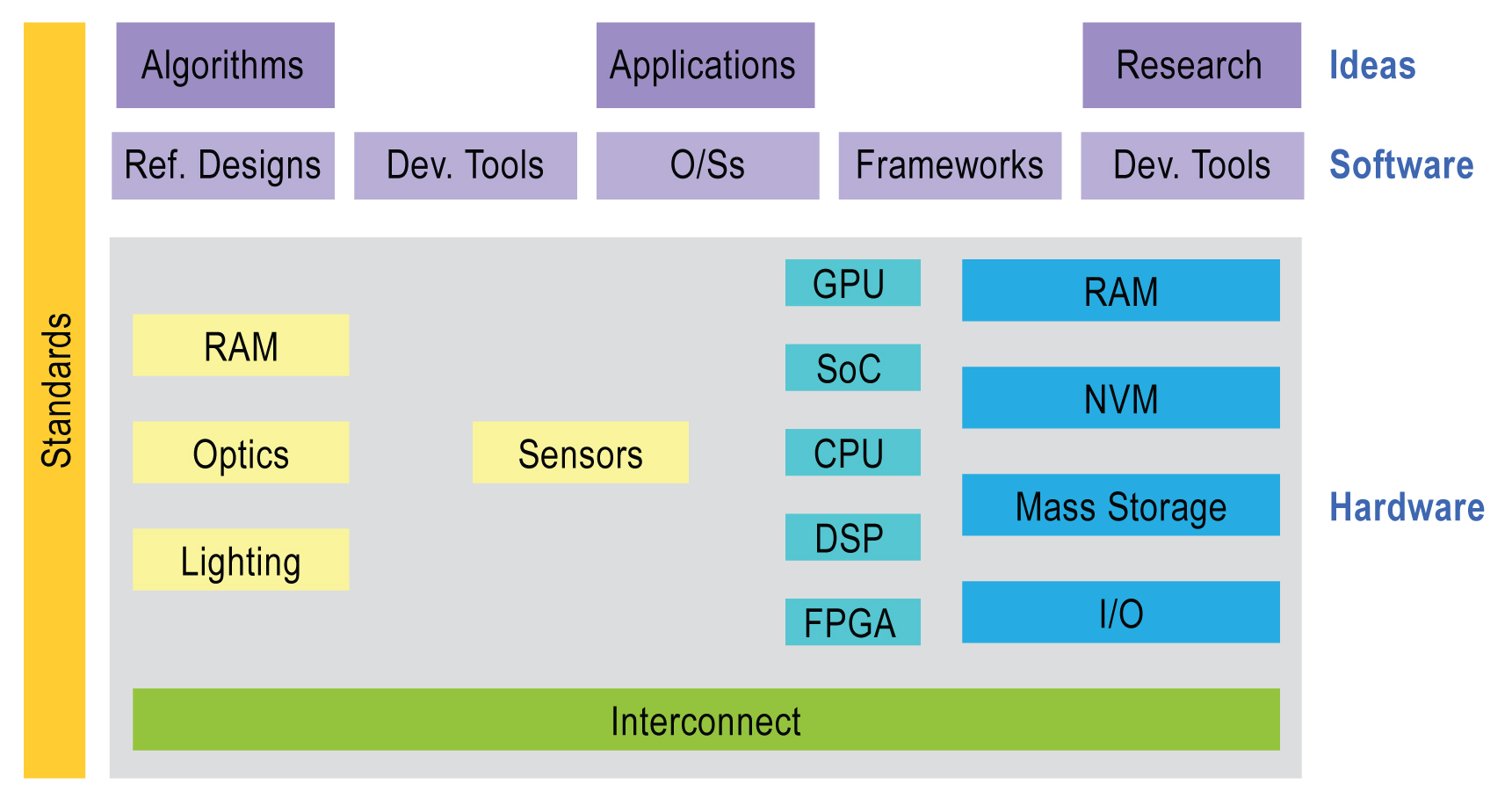

Figure 2. The embedded vision ecosystem spans hardware, semiconductor, and software component suppliers, subsystem developers, systems integrators, and end users, along with fundamental research.

More specifically, the mission of the Alliance is to provide engineers with practical education, information, and insights to help them incorporate embedded vision capabilities into products. To execute this mission, the Alliance has developed a website providing tutorial articles, videos, code downloads and a discussion forum staffed by a diversity of technology experts.

For more information, please visit www.Embedded-Vision.com. Contact the Embedded Vision Alliance at [email protected] and +1-510-451-1800.

For more information about BDTI, please send the company an email or visit the company's website.