By Brian Dipert and Jeff Bier

Embedded Vision Alliance

This article was originally published at EE Times' Embedded.com Design Line. It is reprinted here with the permission of EE Times.

This article explores the opportunity for including embedded vision features in products, introduces an industry alliance created to help engineers incorporate vision capabilities into their designs, and outlines the technical resources that this alliance provides.

Thanks to the emergence of increasingly capable processors, image sensors, memories, and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of embedded systems, enabling those systems to analyze their environments via video and still image inputs. By 'embedded system', we are referring to any microprocessor-based system that isn’t a general-purpose computer. Embedded vision, therefore, refers to the implementation of computer vision technology in embedded systems.

'Computer Vision' Defined

Wikipedia defines the term 'computer vision' as: "A field that includes methods for acquiring, processing, analyzing, and understanding images…from the real world in order to produce numerical or symbolic information…A theme in the development of this field has been to duplicate the abilities of human vision by electronically perceiving and understanding an image."

As the name implies, this image perception, understanding, and decision-making process has historically only been achievable using large, heavy, expensive, and power-draining computers, restricting its usage to a short list of applications such as factory automation and military systems (Figure 1). Beyond these few success stories, computer vision has mainly been a field of academic research over the past several decades.

Figure 1: Embedded vision got its start as computer vision in applications such as assembly-line inspection, optical character recognition, robotics, high-end surveillance, and military systems. In recent years, however, decreasing costs and increasing capabilities have broadened and accelerated its penetration into numerous other markets.

Similar to the way that wireless communication technology has become pervasive over the past 10 years, embedded vision technology is poised to become widely deployed over the next 10 years. High-speed wireless connectivity began as a costly niche technology; advances in digital integrated circuits were critical in enabling it to evolve from exotic to mainstream. When chips got fast enough, inexpensive enough, and energy efficient enough, high-speed wireless became a mass-market technology. Today one can buy a broadband wireless modem for well under $100.

Embedded Vision Goes Mainstream

Advances in digital chips are now paving the way for the proliferation of embedded vision into high-volume applications. With embedded vision, the semiconductor industry is entering a virtuous circle of the sort that has characterized many other digital signal processing application domains. Although there are few devices specifically designed for embedded vision applications today, these applications are increasingly adopting ICs (including image sensors and various processor types) developed for other applications. As these chips continue to deliver more performance per dollar and per watt, they will enable the creation of more high-volume embedded vision products. Those high-volume applications, in turn, will attract more attention from silicon providers, who will deliver even better performance, power efficiency, and cost-effectiveness.

For example, odds are high that the cellular handset in your pocket and the tablet computer in your satchel contain at least one rear-mounted image sensor for photography (perhaps two for 3D image capture capabilities) and/or a front-mounted camera for video chat. Vision-based safety systems have been resident in high-end cars for several years now and are migrating downward into higher-volume mainstream models, increasing the number of cameras per car to fill in blind spots, assist in parking and other maneuvers, and provide early warning of impending collisions and other hazards.

Digital still and video cameras have displaced their analog precursors, with latest-generation models going beyond simple image capture and processing functions to incorporate more advanced analysis-and-response features such as face-detection-driven focus and exposure compensation. Advanced cameras will even delay the shutter activation until they discern that the subject is smiling. Similarly, video surveillance systems use motion sensing, face detection, and other techniques not only to activate the video recording function but also to send alerts to their owners. As such, they not only 'see' but are also beginning to 'understand' the environments in which they operate.
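To make the motion-sensing idea concrete, here is a minimal sketch of frame differencing, one common way to decide whether a scene has changed enough to start recording. It is an illustration only, not a description of how any particular surveillance product works. The function names `motion_fraction` and `should_record` and both threshold values are assumptions, and frames are represented as plain lists of grayscale pixel values so that the example needs no external libraries.

```python
from typing import List

# A grayscale frame: rows of pixel brightness values in the range 0-255.
Frame = List[List[int]]


def motion_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Return the fraction of pixels whose brightness changed by more
    than pixel_threshold between two frames of identical size."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have identical dimensions")
    total = sum(len(row) for row in curr)
    if total == 0:
        return 0.0
    changed = sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for p, c in zip(row_prev, row_curr)
        if abs(p - c) > pixel_threshold
    )
    return changed / total


def should_record(prev: Frame, curr: Frame,
                  pixel_threshold: int = 25,
                  area_threshold: float = 0.02) -> bool:
    """Hypothetical trigger: record when at least area_threshold of the
    image has changed. Both thresholds are illustrative assumptions."""
    return motion_fraction(prev, curr, pixel_threshold) >= area_threshold


if __name__ == "__main__":
    # An 8x8 dark background, then the same scene with a bright 3x3 object.
    background = [[10] * 8 for _ in range(8)]
    with_object = [row[:] for row in background]
    for y in range(2, 5):
        for x in range(3, 6):
            with_object[y][x] = 200
    print(motion_fraction(background, with_object))
    print(should_record(background, with_object))
```

A real system would typically add noise filtering and a slowly updated background model, since simple differencing also reacts to lighting changes and sensor noise.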

Other consumer electronics systems are also becoming vision-augmented, with Microsoft's Kinect peripheral for the Xbox 360 game console leading the charge. Medical systems are increasingly supplementing human intelligence with computer vision-based analysis to assist in patient diagnosis and treatment. The ability to assess and react to a subject's emotional state is not only of interest to physicians; imagine the value of such a capability to a toy manufacturer, for example, or to a retailer. Countless other embedded vision implementation examples, both evolutionary and revolutionary in nature, also exist.

The Embedded Vision Alliance

As engineers strive to incorporate vision capabilities into embedded systems, it has become clear that there is a lack of readily available, practical guidelines. While computer vision research has generated numerous textbooks and research papers, there is little information available to guide non-specialists in designing practical embedded vision solutions, including the selection of chips, cameras, algorithms, tools, and programming languages.

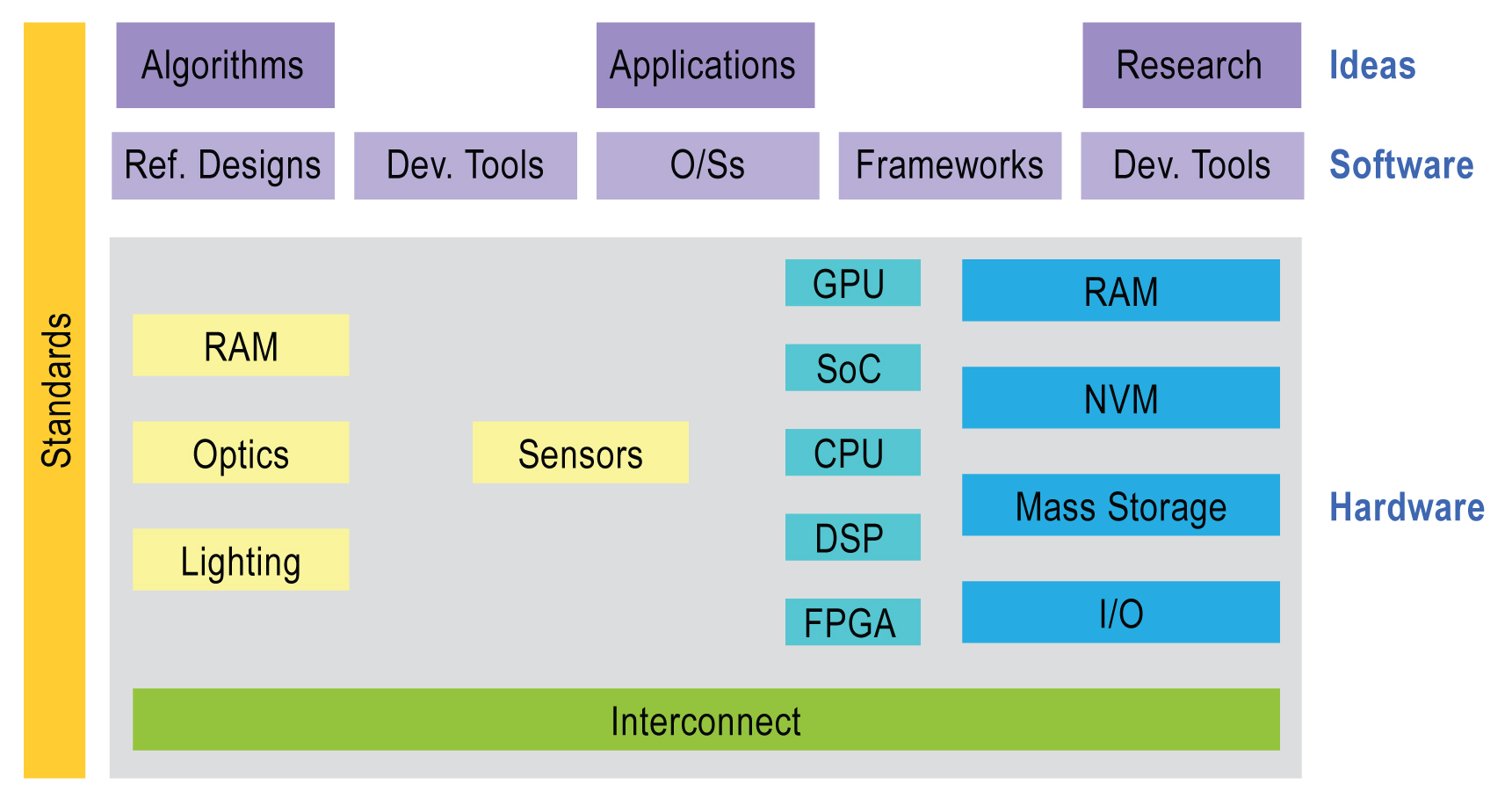

This gap between theory and implementation drove Berkeley Design Technology, Inc. (BDTI) to found and lead the Embedded Vision Alliance, beginning in May 2011. The Alliance currently comprises 28 member companies (and growing) and brings together the providers of technologies used in creating embedded computer vision applications (Figure 2).

Figure 2. The embedded vision ecosystem spans hardware, semiconductor, and software component suppliers, subsystem developers, systems integrators, and end users, along with the fundamental research that provides ongoing breakthroughs.

The Embedded Vision Alliance is a worldwide membership-based collaboration dedicated to inspiring and empowering product developers to incorporate embedded vision technology into their products. A key means of achieving this is to provide design engineers with the practical information they need to use embedded vision technology effectively. The Embedded Vision Alliance’s first step was the launch of its web site at www.Embedded-Vision.com. The site, whose content and readership are growing daily, serves as a source of practical information to help design engineers and application developers incorporate vision capabilities into their products.

Access to resources, provided by Embedded Vision Alliance staff and member companies, is free to all. Web site content includes articles, videos, a daily news portal, and a discussion forum staffed by a diverse group of technology experts. Registered web site users can also receive the Embedded Vision Alliance's e-mail newsletter, Embedded Vision Insights, which is published twice monthly.

The Embedded Vision Academy

More recently, the Embedded Vision Alliance launched a free online training facility for embedded vision product developers: the Embedded Vision Academy. The program provides detailed technical training and other resources to help engineers integrate visual intelligence into next-generation embedded and consumer devices. Course material in the Embedded Vision Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools such as OpenCV.

Academy courses incorporate training videos, interviews, demonstrations, downloadable code, and other developer resources, all oriented toward developing embedded vision products. More than forty video seminars and interviews, thirty tutorial articles, and two downloadable software tool suites are currently available at the Academy, as well as an archive of presentations from the September 2012 Embedded Vision Summit. The Embedded Vision Alliance is continuously expanding the curriculum of the Embedded Vision Academy, so engineers will be able to return to the site on an ongoing basis for new courses and resources. Access is free to all through a simple registration process.

Academy Case Studies

A popular video series currently published on the Embedded Vision Academy site comes from José Alvarez, Video Technology Engineering Director at Xilinx Corporation. José has delivered four concise but detailed tutorials on vision system fundamentals and implementing various embedded vision systems using FPGAs:

- Using FPGAs to Interface to and Process Data from Image Sensors

- Implementing an Image Signal Processing Pipeline using FPGAs

- Introduction to Video and Image Processing System Design Using FPGAs

- Image Color Conversion and Formatting Using FPGAs

The Embedded Vision Alliance has also published in the Embedded Vision Academy a series of 16 technical presentation videos from the inaugural Embedded Vision Summit, a technical conference held in September 2012 in Boston, Massachusetts. The next Embedded Vision Summit will take place in San Jose, California on April 25, 2013, as part of UBM Media's DESIGN West event, which includes the Embedded Systems Conference and an exhibition floor. The Embedded Vision Summit is a technical educational forum for engineers interested in incorporating visual intelligence into electronic systems and software, and includes how-to presentations, seminars, demonstrations, and opportunities to interact with Embedded Vision Alliance member companies. For more information on the Embedded Vision Summit, including online registration, please visit www.embeddedvisionsummit.com.

BDTI's OpenCV Executable Demo Package is one of the software tools housed in the Embedded Vision Academy. It was also showcased in a popular article series, co-developed by BDTI and the Embedded Vision Alliance and published on the Embedded.com site in May 2012: "Introduction to Embedded Vision and the OpenCV Library" and "Building Machines That See: Finding Edges in Images."

Introduction to Computer Vision Using OpenCV is an easy-to-use tool that allows anyone with a Windows computer and a web camera to experiment with some of the algorithms in OpenCV. Also provided are a detailed user guide and video tutorial.
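As an illustration of the kind of algorithm one might experiment with, the following is a minimal sketch of gradient-based edge finding using the Sobel operator. It is written in plain Python rather than with OpenCV, and it is not taken from the BDTI demo package or from the articles named above. The function names `sobel_magnitude` and `edge_map` and the default threshold are assumptions chosen for illustration.

```python
from typing import List

# A grayscale image: rows of pixel brightness values.
Image = List[List[int]]

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image: Image) -> Image:
    """Approximate gradient magnitude as |gx| + |gy| for each interior
    pixel. Border pixels are left at 0 because the 3x3 window does not
    fit there."""
    height = len(image)
    width = len(image[0]) if height else 0
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            out[y][x] = abs(gx) + abs(gy)
    return out


def edge_map(image: Image, threshold: int = 200) -> Image:
    """Mark pixels whose gradient magnitude meets the threshold with 1.
    The default threshold is an illustrative assumption."""
    return [[1 if m >= threshold else 0 for m in row]
            for row in sobel_magnitude(image)]


if __name__ == "__main__":
    # A 5x6 image: dark left half, brighter right half (a vertical edge).
    step = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
    for row in edge_map(step):
        print(row)
```

In this sketch the edge appears as a two-pixel-wide band straddling the brightness step. More elaborate detectors, such as Canny, add smoothing and thinning stages to produce cleaner single-pixel edges.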

BDTI's other notable Embedded Vision Academy software offering is the Quick-Start OpenCV Kit. This VMware image, based on Linux, includes OpenCV and all required tools preinstalled, configured, and built, thereby making it easy to quickly get OpenCV running and begin developing vision algorithms. BDTI has provided a companion user guide, Start Developing OpenCV Applications Immediately Using the BDTI Quick-Start OpenCV Kit, that explains in detail how to install and use the Quick-Start OpenCV Kit.

Conclusion

Embedded vision technology has the potential to enable a wide range of electronic products that are more intelligent and responsive than before, and thus more valuable to users. It can add helpful features to existing products, and it can provide significant new markets for hardware, software, semiconductor, and systems suppliers alike. The Embedded Vision Alliance provides engineers with tools to speed the transformation of this potential into reality. Specifically, the Embedded Vision Academy provides a diverse set of detailed technical resources for engineers developing embedded vision products.

Brian Dipert is Editor-In-Chief of the Embedded Vision Alliance. He is also a Senior Analyst at Berkeley Design Technology, Inc., which provides analysis, advice, and engineering for embedded processing technology and applications, and Editor-In-Chief of InsideDSP, the company's online newsletter dedicated to digital signal processing technology. Brian has a B.S. degree in Electrical Engineering from Purdue University in West Lafayette, IN. His professional career began at Magnavox Electronics Systems in Fort Wayne, IN; Brian subsequently spent eight years at Intel Corporation in Folsom, CA. He then spent 14 years at EDN Magazine.

Jeff Bier is Founder of the Embedded Vision Alliance. He is also Co-Founder and President of BDTI. Jeff oversees BDTI’s benchmarking and analysis of chips, tools, and other technology. He is a key contributor to BDTI’s consulting services, which focus on product development, marketing, and strategic advice for companies using and developing embedded digital signal processing technologies. Jeff received his B.S. and M.S. degrees from Princeton University and U.C. Berkeley. He has also held technical and management positions with Acuson Corporation, Hewlett-Packard Labs, Quinn & Feiner, and U.C. Berkeley.